Usually involves model comparison.

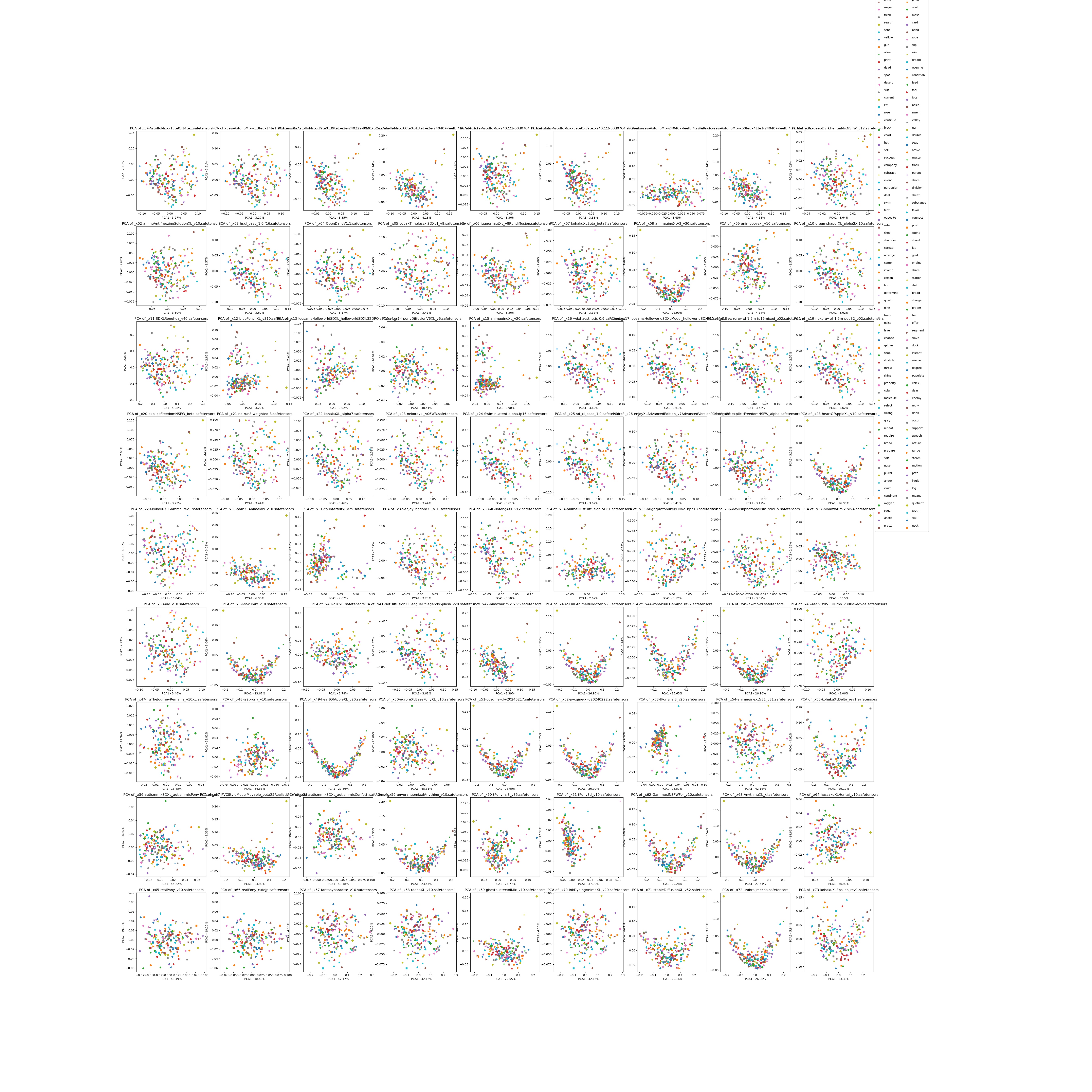

- Comparison of models by model architecture, with findings: v1/mega_cmp.ipynb

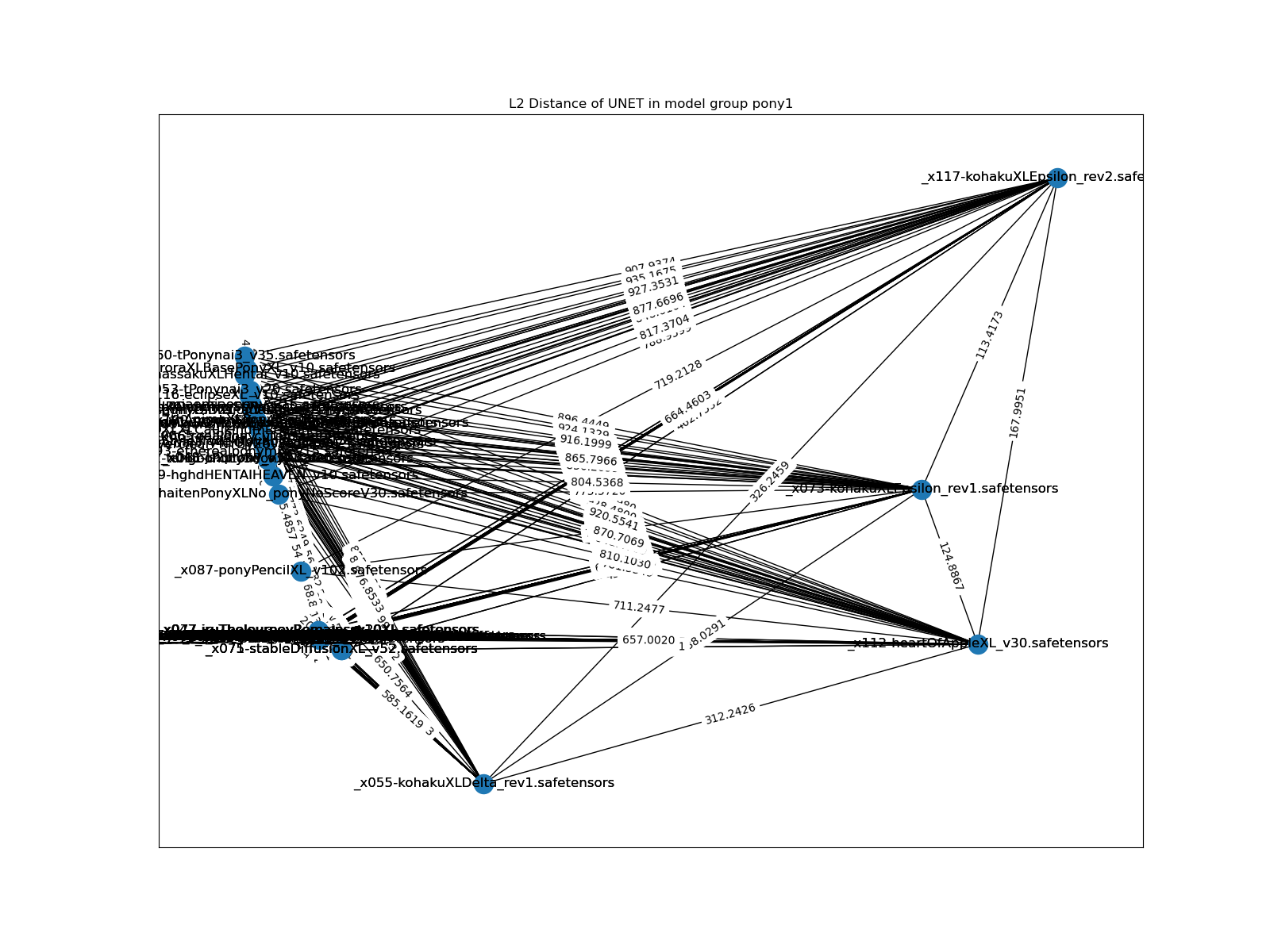

- Large-scale model comparison: builds a distance matrix and tries to plot a weighted graph based on the distances: v2a/mega_cmp_v2.ipynb

- V2a, but parallelized (for many CPU cores): v2a/mega_cmp_parallel.ipynb

- Archived reports / diagrams: v2a/results.7z

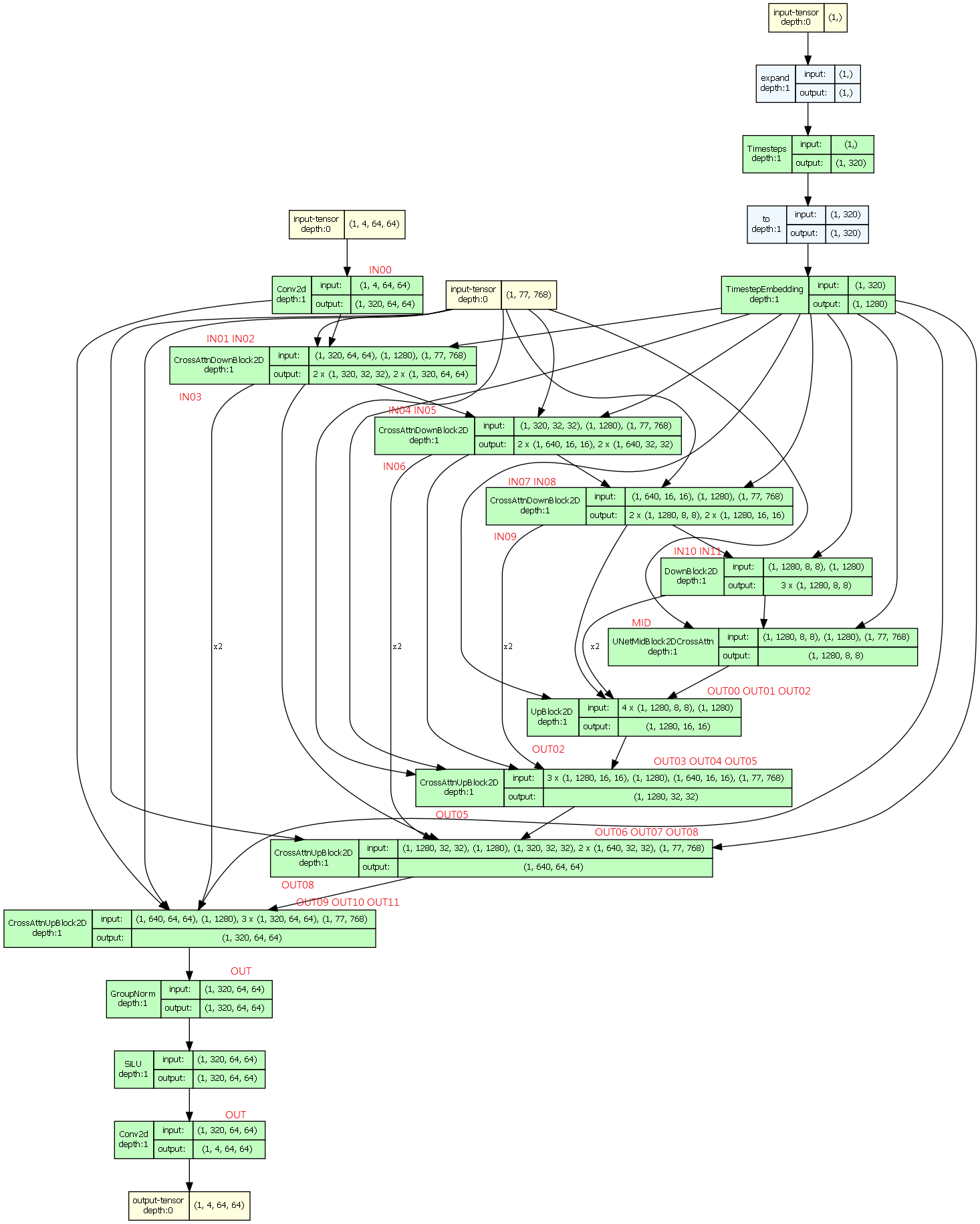

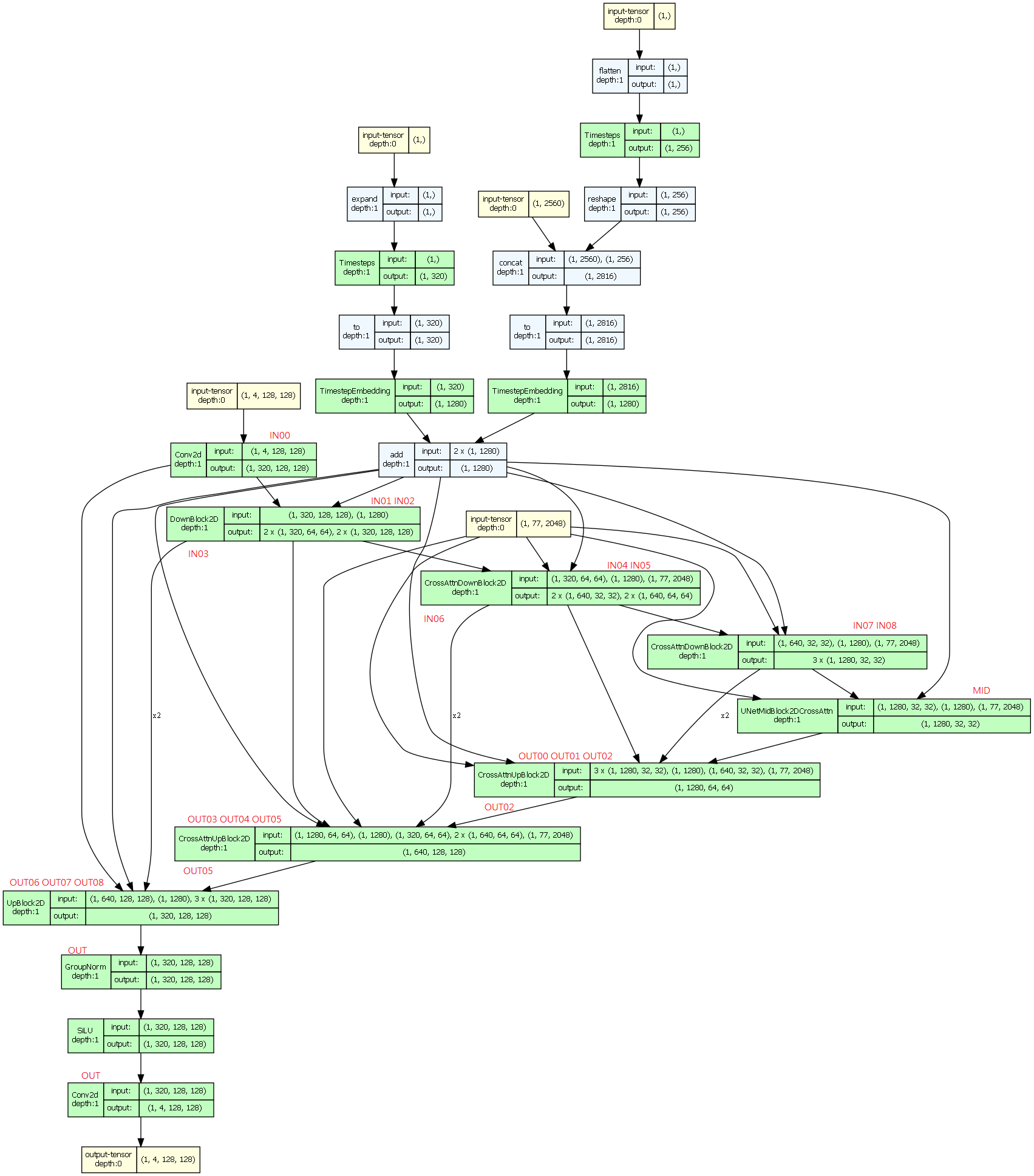

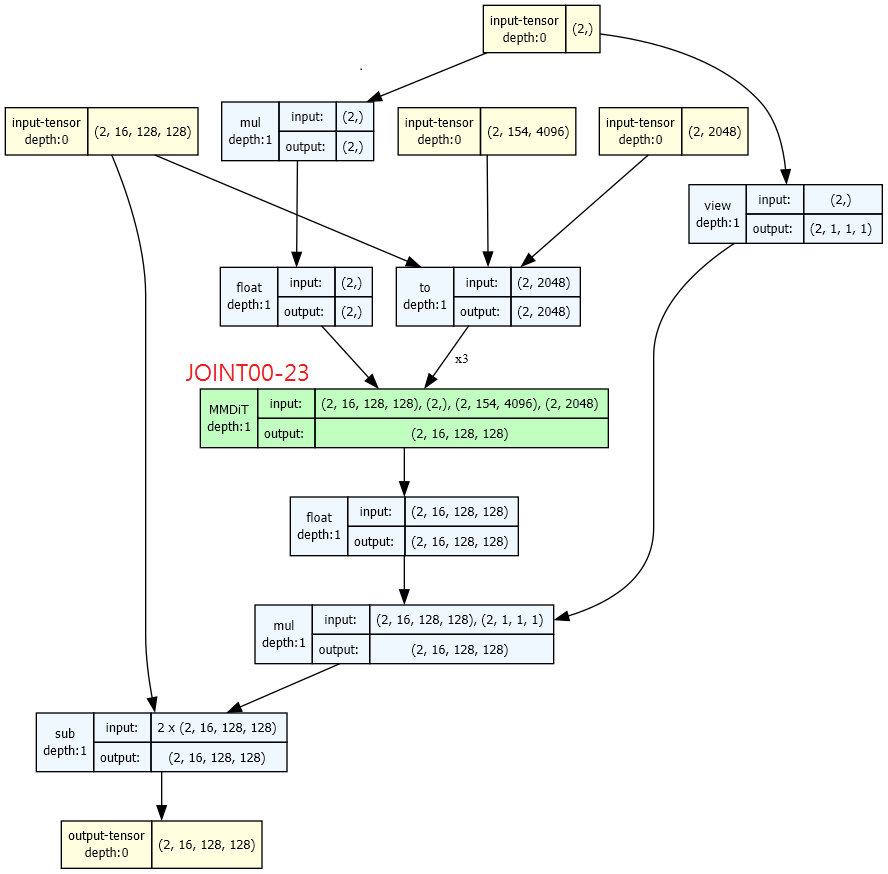

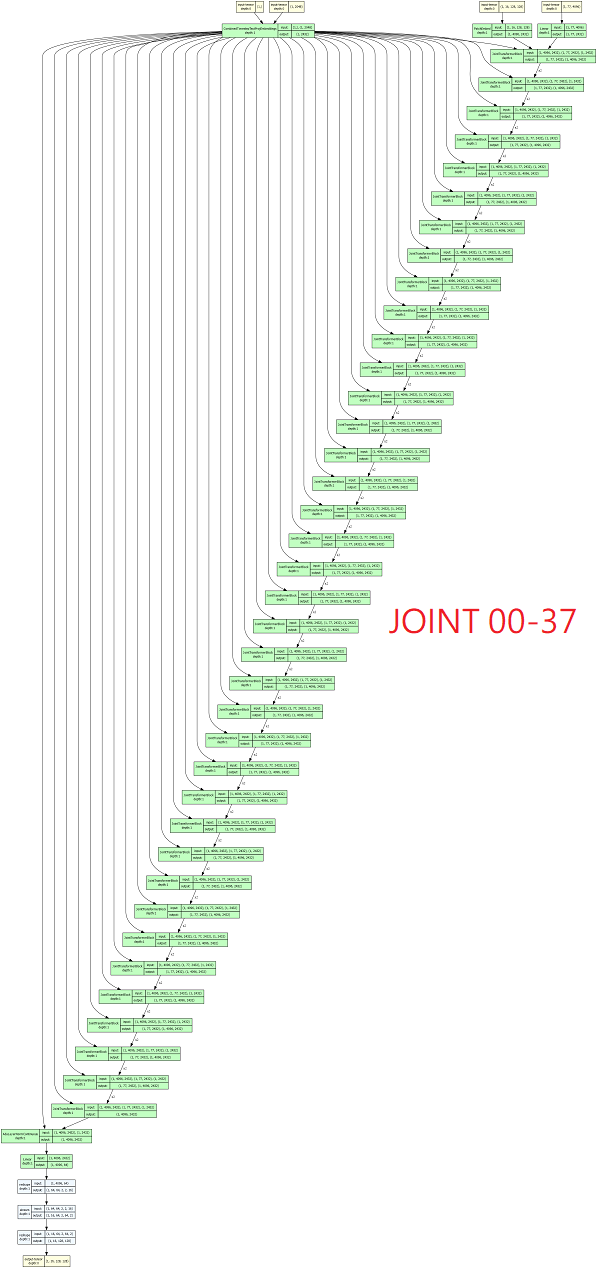

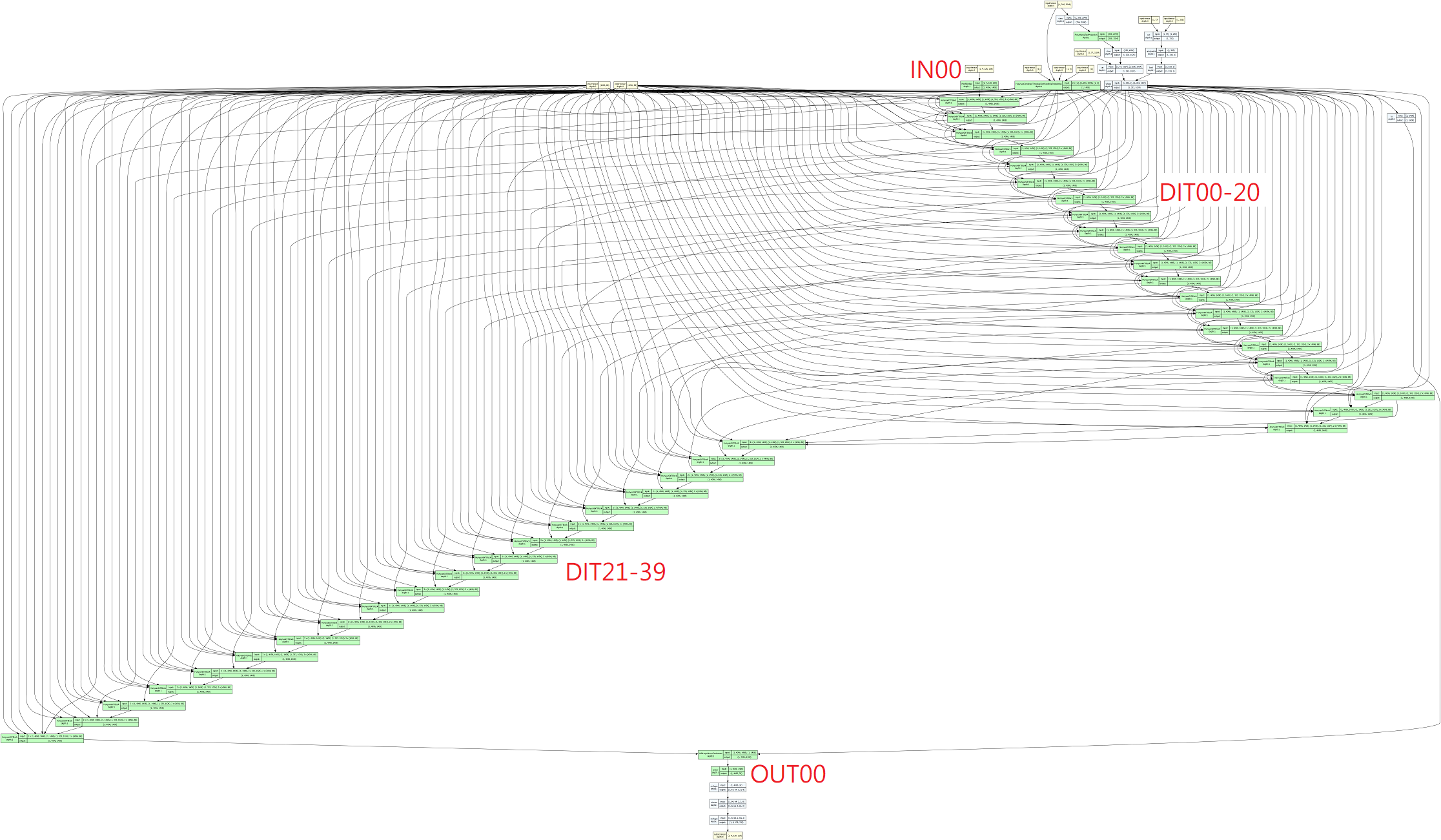

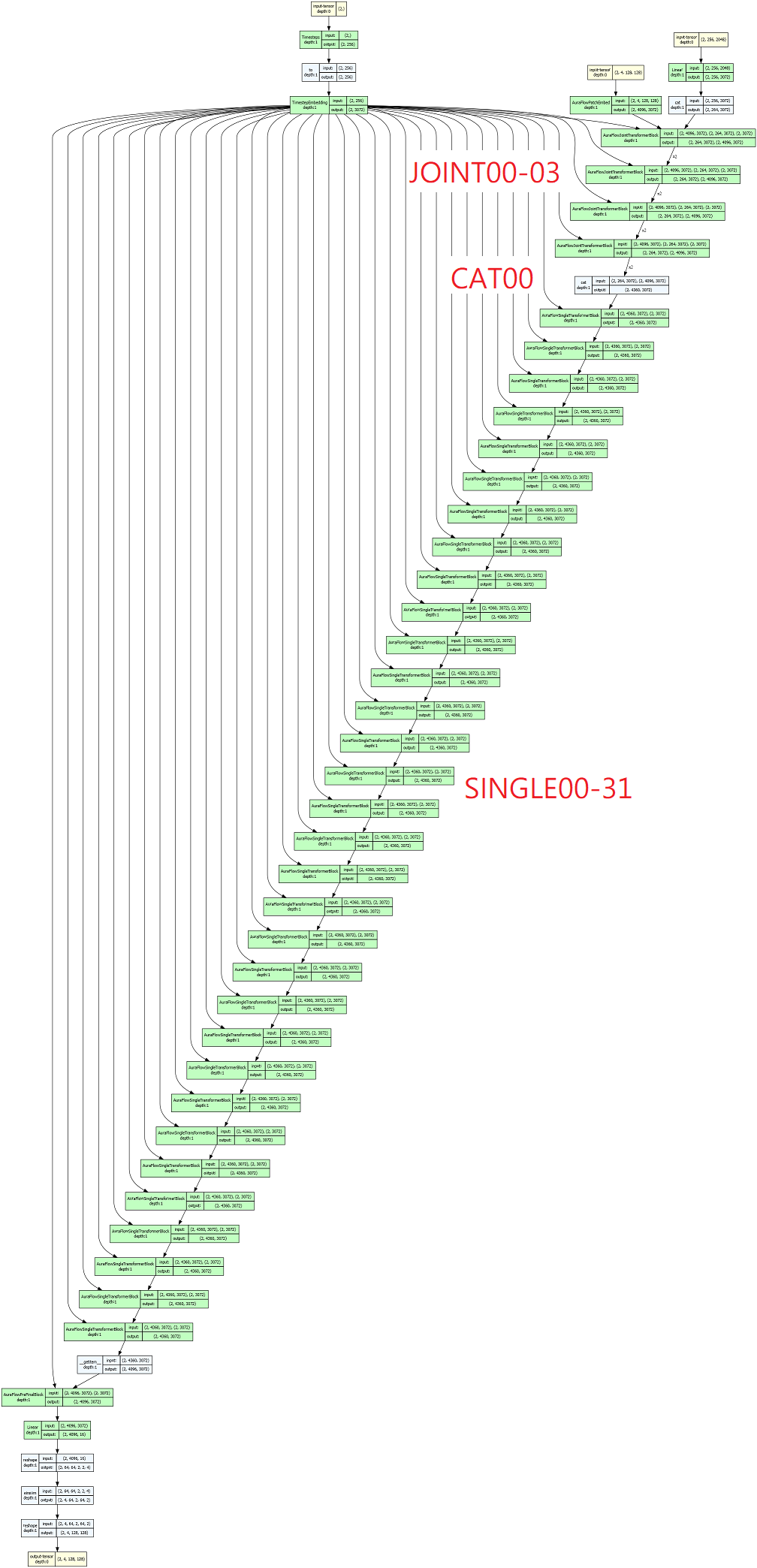

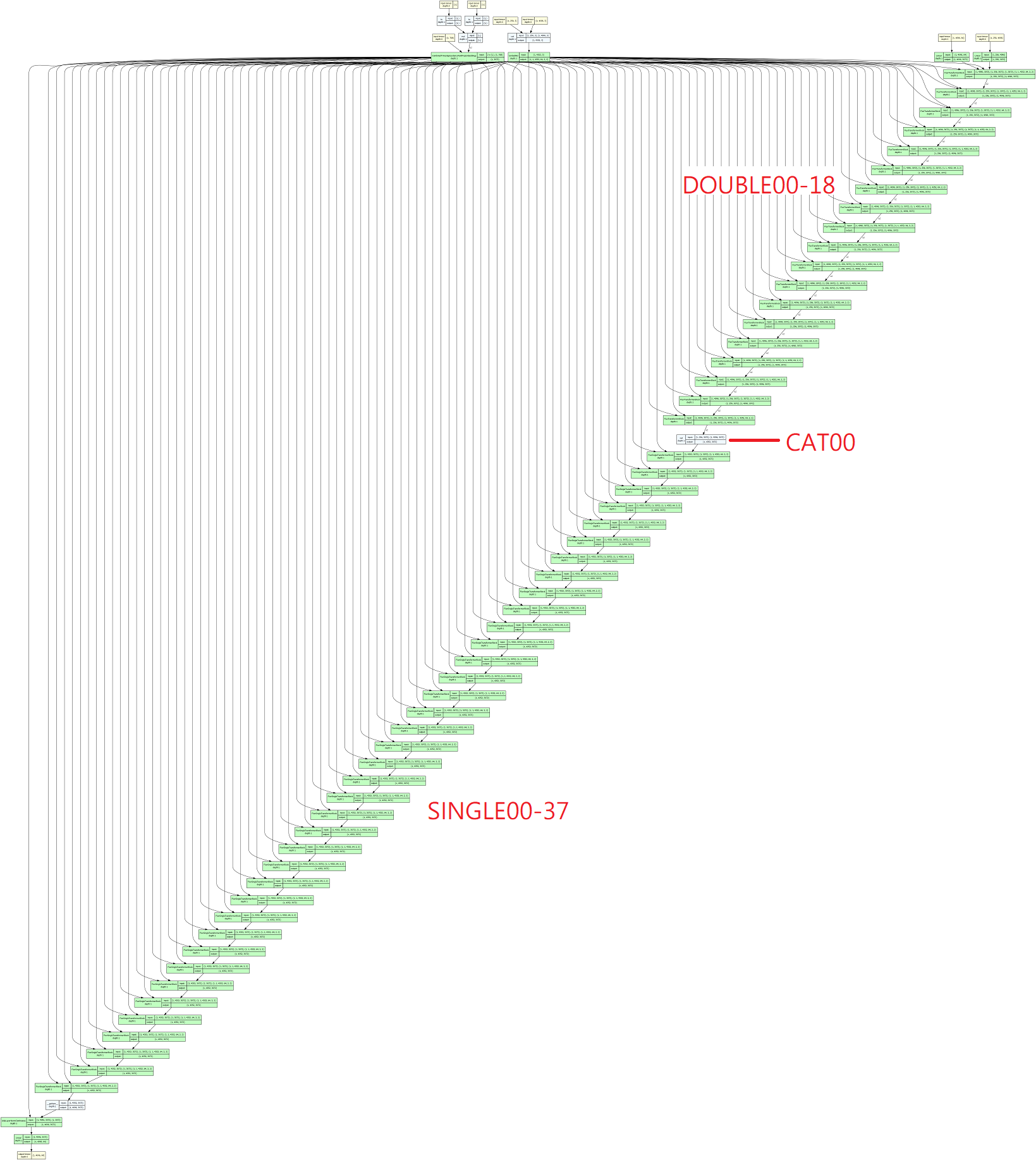
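As a rough illustration of the distance matrix and weighted graph idea behind the v2a notebooks, here is a minimal sketch. It assumes each model can be reduced to a flat list of weights and that Euclidean distance is the metric; the notebooks may use a different metric. The names `distance_matrix`, `weighted_edges`, and `toy_models` are hypothetical and exist only for this example.

```python
import math
from itertools import combinations


def distance_matrix(models):
    """Pairwise Euclidean distances between flattened weight vectors.

    `models` maps a model name to a flat list of floats (an assumption
    made for this sketch, not necessarily how the notebooks store weights).
    """
    names = sorted(models)
    matrix = {a: {b: 0.0 for b in names} for a in names}
    for a, b in combinations(names, 2):
        d = math.dist(models[a], models[b])
        matrix[a][b] = matrix[b][a] = d  # the matrix is symmetric
    return names, matrix


def weighted_edges(names, matrix):
    """Flatten the symmetric matrix into (node, node, weight) edges."""
    return [(a, b, matrix[a][b]) for a, b in combinations(names, 2)]


# Toy stand-ins for real checkpoints (hypothetical values).
toy_models = {
    "model_a": [0.0, 0.0],
    "model_b": [3.0, 4.0],
    "model_c": [6.0, 8.0],
}
names, matrix = distance_matrix(toy_models)
edges = weighted_edges(names, matrix)
```

The resulting edge list is in the `(u, v, weight)` form that graph libraries commonly accept, for example `networkx.Graph.add_weighted_edges_from`. Each pair is independent of the others, which is what makes the computation easy to spread over many CPU cores.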

- Tired of diagrams drawn by hand? How about ones generated solely by a program? view_unet.ipynb

SD1: stabilityai/stable-diffusion-v1-5

SD2: stabilityai/stable-diffusion-2-1

SDXL: stabilityai/stable-diffusion-xl-base-1.0

SD3: stabilityai/stable-diffusion-3-medium-diffusers

SD3.5: stabilityai/stable-diffusion-3.5-large

Hunyuan-DiT: Tencent-Hunyuan/HunyuanDiT-Diffusers

AuraFlow: fal/AuraFlow-v0.2

Flux: black-forest-labs/FLUX.1-dev (CPU + 80GB RAM only, took 31 minutes)

- Enjoy the comparison. The actual VRAM requirement is different, maybe Total Size (GB) x 0.5 x (image size / 1024) + TEs (text encoders).

- Also, there are no implied image sizes: the "height / width" in the model is already expressed in latent space. This would cause a lot of confusion, so I'll try to intercept the input passed from the diffusers pipeline to the actual model component, which should match the public documents from the model authors.

- "MBW layers" is a unit of "functional layers", following the concept of "MBW merge", which was the meta for merging SD1.5 models. It has obviously not worked since then. However, it is still useful for getting a feel for how the model works, from UNet to DiT.

- From the inconsistent result of `["sdxl", "sd1", "sd2"]`, which was overestimated by 2.37x (others are < 0.1%), I also implemented the `diffusers` and `torch` native approaches, based on this Stack Overflow post. Issues #262, #303, #312 were reported in `torchinfo`, which made me panic a bit. Hopefully they can explain any future inconsistent results.
  - Refer to `diffusers.num_parameters` and its code, `nn.Parameter`, and `torch.numel` for how parameters are counted. The count will very likely MISMATCH other sources (e.g. `torchvision` and `torchinfo` here, referred to as "model summary").
  - The "2.6b", "860M" and "865M" counts match the official claims.

- Meanwhile, an RTX 3090 is barely capable of running Flux in FP16 for `torchinfo`.
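The `torch` native counting mentioned above can be sketched as follows. This is a minimal illustration on a toy module, not the notebook's actual code; `count_parameters` and `toy` are hypothetical names. It relies only on `nn.Module.parameters()` and `Tensor.numel()`.

```python
from torch import nn


def count_parameters(model: nn.Module, only_trainable: bool = False) -> int:
    """Sum the element counts of every parameter tensor in the module.

    `model.parameters()` yields each nn.Parameter once, so a tensor that is
    shared between submodules is not counted twice.
    """
    return sum(
        p.numel()
        for p in model.parameters()
        if p.requires_grad or not only_trainable
    )


# Toy module standing in for a UNet / DiT (hypothetical example).
# Linear(4, 3): 4*3 weights + 3 biases; Linear(3, 2): 3*2 weights + 2 biases.
toy = nn.Sequential(nn.Linear(4, 3), nn.ReLU(), nn.Linear(3, 2))
total = count_parameters(toy)
```

For a loaded diffusers model, calling its `num_parameters()` method should give the comparable figure, which can then be checked against the "model summary" total.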

| Model | MBW Layers | Params (b, torchinfo) | Params (b, diffusers) | Forward/backward pass size (MB, FP16) | Estimated Total Size (GB, FP16) |
|---|---|---|---|---|---|
| SD1 | 25 | 2.0 | 0.860 | 1265 | 2.91 |
| SD2 | 25 | 2.1 | 0.865 | 2837 | 4.46 |
| SDXL | 19 | 5.3 | 2.6 | 8993 | 13.80 |
| SD3 | 24 | 2.0 | 2.0 | 11127 | 18.79 |
| SD3.5 | 38 | 8.0 | 8.0 | 17010 | 32.35 |
| Hunyuan-DiT | 40 | 1.5 | 1.5 | 17595 | 20.12 |
| AuraFlow | 36 | 6.8 | 6.8 | 39974 | 52.38 |
| Flux | 57 | 11.91 | 11.91 | 31557 | 54.06 |
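The rough VRAM guess quoted earlier, Total Size (GB) x 0.5 x (image size / 1024) + TEs, can be applied to the "Estimated Total Size" column like this. It is only a sketch of that rule of thumb, taken literally with a linear image-size ratio; `estimate_vram_gb` is a hypothetical helper, and the text encoder size must be supplied by the caller because it is not listed in the table.

```python
def estimate_vram_gb(total_size_gb, image_size=1024, text_encoders_gb=0.0):
    """Rule-of-thumb VRAM guess: total x 0.5 x (image size / 1024) + TEs.

    total_size_gb:    "Estimated Total Size (GB, FP16)" from the table.
    image_size:       image edge length in pixels.
    text_encoders_gb: size of the text encoders, supplied by the caller
                      (an input to this sketch, not a value from the table).
    """
    return total_size_gb * 0.5 * (image_size / 1024) + text_encoders_gb


# Table values only; text encoders are left at 0.0 here.
sdxl_1024 = estimate_vram_gb(13.80, image_size=1024)
sd1_512 = estimate_vram_gb(2.91, image_size=512)
```

These numbers are a guess in the same spirit as the formula itself and should be checked against real measurements.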

- Would vision LLMs be the next trend, such as Lumina-mGPT (30B), Llava-Visionary-70B (70B) and Qwen2-VL (72B)?

- As a small side request: view_clip.ipynb

- This was more about validating my assumptions than discovering anything new.