-

CivitAI model page. The style there is a bit different.

-

HuggingFace model page. The style is also different.

-

CivitAI article page. Summary of here.

-

BEST REVIEW EVER. Thanks anon. Never thought it reached 4chan.

-

Currently, it is an

Ensemble averaging of 10 neural networksUniformAutoMBW (Bayesian Merge) of1020+1 SD1.5 models. -

Although I'm going to repeat the "mix" for SD2 and SDXL (maybe SDXL Turbo?) models, I will seperate the exclusive findings there. If you find unexplained stuffs there, read this article instead.

- I have found that the potential of community supported SD1.X model still not fully explorered. Meanwhile, although SDXL is greatly improved, it is still lack of THE proper anime finetune (e.g. "full danbooru"). Therefore, would there be something to be explorered by making a model with my own discoveries (theory)?

-

ch01/merge: Even it is not well theorized, merging models is an relatively effective method to "ensemble" models without prior knowledge, includes dataset, and training procedures.

-

Finetuning SDXL has been a engineering challenge, without any publicized models trained with 1M+ images after a month. The largest scale of pure finetune or mixed approach are only 300k images, way less then the publicized 4M "full danbooru" dataset. For me not investing on expensive GPUs, e.g. 8x A40 for WD, or even a single RTX4090, finetuning, or even LoRA, sounds impossible for me. Also, given my hiatus for 2 months because of other hobbies (hint: PC hardware), I won't have time to explore the hyperparameters for model finetuning, or even image tagging, and gathering dataset. Therefore brutally merging models sounds feasible for me. Moreover, even I have two 3090s now, I can choose to spend all the efforts on making lots of images instead of training models.

-

ch02/model_history: Given the rich history since "NAI" and other datasets, SD1.X models after many iterations are still comparable to the new SDXL models with only a few iterations.

-

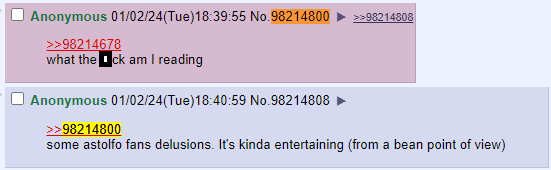

ch01/hires_fix, ch01/cfg_step, ch01/arb: The most noticeable (slight) difference is the lowerbound CFG and the upper bound of resolution. My recent artwork in both SD1.X based and SDXL based models are sharing similar parameters and image content (although the car shape in this comparasion is a lot wacky). With the claimed "1024 ARB" (and "trained with 2048x2048 images") for SD1.x models, comparing with "1024 ARB" SDXL models, generating images with 768x768 hires 2.0x or 1024x1024 hires 1.5x images still yields similar details. However "1024 ARB" SD1.x models are rare, and no one has merge them before.

-

It is hard to compare, especially I don't have nice metrics to compare. It will be benchmarked by ch01/my_procedure, to keep my justification consistant.

- Here is a list of merged, or "may be merged" of models:

| Index | Model Name | Model source | Merged yet? (Baseline / Extended / In review) |

|---|---|---|---|

01 |

VBP | "NAI SFW" | Baseline |

_02 |

CBP | "NAI NSFW" | Baseline |

_03 |

MzPikas TMND Enhanced | AutoMBW |

Baseline |

_04 |

DreamShaperV8 | "A>R" Merge, realistic nxxes | Baseline |

_05 |

CoffeeWithLiquor | NAI (Lots of LoRAs) | Baseline |

_06 |

BreakDomain | NAI | Baseline |

_07 |

AIWMix | "SD", | Baseline |

_08 |

Ether Blu Mix | Merges of famous A | Baseline |

_09 |

majicMIX realistic | Merges of MJ + "2.5" + NWSJ | Baseline |

_10 |

Silicon29 | AutoMBW of "2.5" | Baseline |

_11 |

BP | ACertainty | Extended |

_12 |

CGA9 | AutoMBW | Extended |

_13 |

LimeREmix | Human evaluated merge | Extended |

_14 |

CyberRealistic Classic | Pure Realistic Merge | Extended |

_15 |

ORCHIDHEART | Similar to CGA | Extended |

_16 |

BB95 Furry Mix | E621 | Extended |

_17 |

Indigo Furry mix | E621 | Extended |

_18 |

AOAOKO [PVC Style Model] | PVC | Extended |

_19 |

GuoFeng3 | Chinese fantasy | Extended |

_20 |

YiffyMix | E621 | Extended |

| xx | ALunarDream | NAI (Style Blending) | In review |

| xx | Dreamlike Anime | Non NAI based Anime | Rejected (legal issue) |

| xx | Marbel V182 | Human evaluated merge | In review |

| xx | AIDv2.10 | NAI (Style Blending) | In review |

- Note that all models are abruptly replaced with SD1.4's Text Encoder. Original desired contents (e.g. specific anime contents) will be disabled.

| Merge Batch | Description |

|---|---|

| Baseline | This model is capable to output images well with 1024x1024 native, with great varity contents. Identifying Astolfo is a plus (LAION has a few images of him). |

| Extended | This model is either capable to do any one of them above. It means that the model has experienced radical finetuning, and the output diversity is damaged. |

| In review | This model sounds that it has been finetuned in a menaingful way. It is mostly not effective with great tradeoff, and it need to be carefully merged under sequence (i.e. very last). Now they are put on hold because I have 20 models already. |

-

Given the success of "2.99D" models and "2.5D" models, I expect there will be suprise when I merge them together (however it is hard to preprocess the model, see below).

-

Note that some of them are also merges of other models, I expect that I can be benefied from the inherited contents also, for example, style embeddings and more keywords.

-

(Not proven) The inheritance is not straightforward, it may requires replacing the Text Encoder with the child's instead of the master SD1.x's. See below for how I discover the phenomenon.

-

ch01/merge: Although the MBW / LBW theory lacks of scientific proof, autombw / autombw v2 reconstructed the optimization task / model selection into a ML task, treating MBW / LBW as a framework with simplified parameter, instead of directly applying domain knowledge.

-

There are nice models based from manual MBW, such as majicMIX, GhostMix, and autombw models such as SD-Silicon and MzPikas TMND Enhanced, and an unreleased "CGA" model, they are showing ability to know more entities without sacrificing too much existing knowledges (See this artwork based from majicMIX). Seems that the variance can be a lot greater, resembling my guess long time ago. Therefore it would support the "suprise" I have expected.

-

ch02/animevae_pt: Other then VAE, NAI also used SD's orignal TE. This is special for SD1.X because the CLIP / ViT used was trained with uncropped LAION dataset, including the NSFW words. It is in theory knowing most vocabulary, even the token count is less then SDXL. It shuold be reminded that NSFW SDXL / SD2.X models are almost inexist, with a little exception. Rare artwork done by me.. It further supports "nice content may be better then nice structure".

-

ch03: However, most finetuned models used TTE (train text encoder) to create "triger word" effect, but variance has been greatly sacrificed. Note that it is highly doubtful because most models are TTE enabled, and it is hard to proof or even verify.

-

I experienced floating point error while merging. It is not merger error. This is natural for most programming languages. The merged model must be verified with toolkit to make sure the offset counter must be (XXXX/0000/0000).

-

I further verify with the scripts extracting metadata in model by batch to make sure I am merging the right model (however the "merge chain" is wiped when I reset the Text Encoder):

{

"__metadata__": {

"sd_merge_recipe": {

"type": "webui",

"primary_model_hash": null,

"secondary_model_hash": "aba1307666acb7f5190f8639e1bc28b2d4a1d23b92934ab9fc35abf703e8783d",

"tertiary_model_hash": null,

"interp_method": "Weighted sum",

"multiplier": 0.125,

"save_as_half": true,

"custom_name": "08-vcbpmt_d8cwlbd_aweb5-sd",

"config_source": 0,

"bake_in_vae": "None",

"discard_weights": "",

"is_inpainting": false,

"is_instruct_pix2pix": false

},

"format": "pt",

"sd_merge_models": {

"fe38511e88a8b7110a61658af6a0ff6a6b707852ff2893a38bc8fa0f92b3ace4": {

"name": "07-vcbp_mtd8cwl_bdaw-sd.safetensors",

"legacy_hash": "72982c20",

"sd_merge_recipe": null

},

"aba1307666acb7f5190f8639e1bc28b2d4a1d23b92934ab9fc35abf703e8783d": {

"name": "etherBluMix5-sd-v1-4.safetensors",

"legacy_hash": "25d4f007",

"sd_merge_recipe": null

}

}

}

}-

For full recipe, see recipe-10a.json.Replace with SD original CLIP / VAE to obtain "10", however the equivalence may not in file hash precision because of floating point error.

-

Personally I prefer using vae-ft-mse-840000-ema-pruned for VAE, but I'll keep it neutral while merging.

-

For the merge ratio, I round to 3 d.p. which is engough for the first 20 merges.

-

Docuement first. If you see any content not covered in this article, it is either an idea just appeared, or I really havn't considered. Most idea in this article is original and relies on my own experience.

-

Recover, and even replace TE. Models have been uploaded to HuggingFace. Thanks @gesen2gee (Discord) for mentioning stable-diffusion-webui-model-toolkit. After some simple test (make sure it can run, can being merged afterward), I'll post into HuggingFace for reference. Here is the scirpt segment to remove TE. Recovering them is unknown. Probably need to program it myself. Here is a script potentially useful.

-

Baseline merge: Uniform merge a.k.a

baggingaveraging. Models have been uploaded to HuggingFace. As easy as the "Checkpoint Merger" does. To make sure all of them are in uniform distribution, merge with weight$1/x$ for$x > 1$ . Therefore 50% for the 2nd model, 33% for the 3rd model, and so on. The process should be parameterless. -

Proposed merge: autombw a.k.a

boostingRL. Use my own fork of autombw v2.Technical details are under analysis. It has been known as memory intensive instead of GPU intensive. I have some ex-mining SSDs which may help the process. This will not happen soon. I didn't expect for merging 10+ models. Boosting in such scale requires a new software from ground up. I am still using pure GUI with minimum coding.It is not memory intensive. It is just time consuming. See my findings on autombw. Also it is more like RL instead of boosting. -

Get a capable GPU to output such kind of images. I'm suprised that it pulls 19GB of VRAM already.

- Current resolution limit is 1280x1280 (native T2I), hires 1.4x (2080Ti 11G), or 2.0x (Tesla M40).

parameters

(aesthetic:0), (quality:0), (solo:0), (boy:0), (ushanka:0.98), [[braid]], [astolfo], [[moscow, russia]]

Negative prompt: (worst:0), (low:0), (bad:0), (exceptional:0), (masterpiece:0), (comic:0), (extra:0), (lowres:0), (breasts:0.5)

Steps: 256, Sampler: Euler, CFG scale: 4.5, Seed: 132385090, Size: 1344x768, Model hash: 6ffdb39acd, Model: 10-vcbpmtd8_cwlbdaw_eb5ms29-sd, VAE hash: 551eac7037, VAE: vae-ft-mse-840000-ema-pruned.ckpt, Denoising strength: 0.7, Clip skip: 2, FreeU Stages: "[{\"backbone_factor\": 1.2, \"skip_factor\": 0.9}, {\"backbone_factor\": 1.4, \"skip_factor\": 0.2}]", FreeU Schedule: "0.0, 1.0, 0.0", Hires upscale: 2, Hires steps: 64, Hires upscaler: Latent, Dynamic thresholding enabled: True, Mimic scale: 1, Separate Feature Channels: False, Scaling Startpoint: MEAN, Variability Measure: AD, Interpolate Phi: 0.7, Threshold percentile: 100, Version: v1.6.0

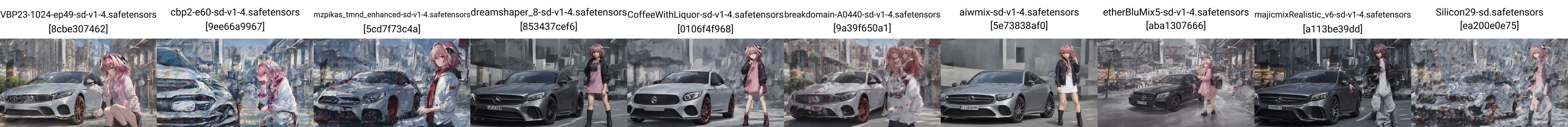

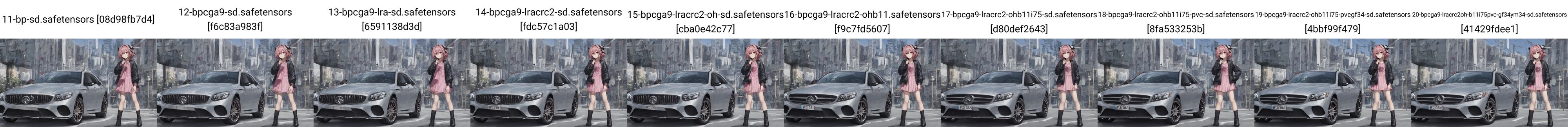

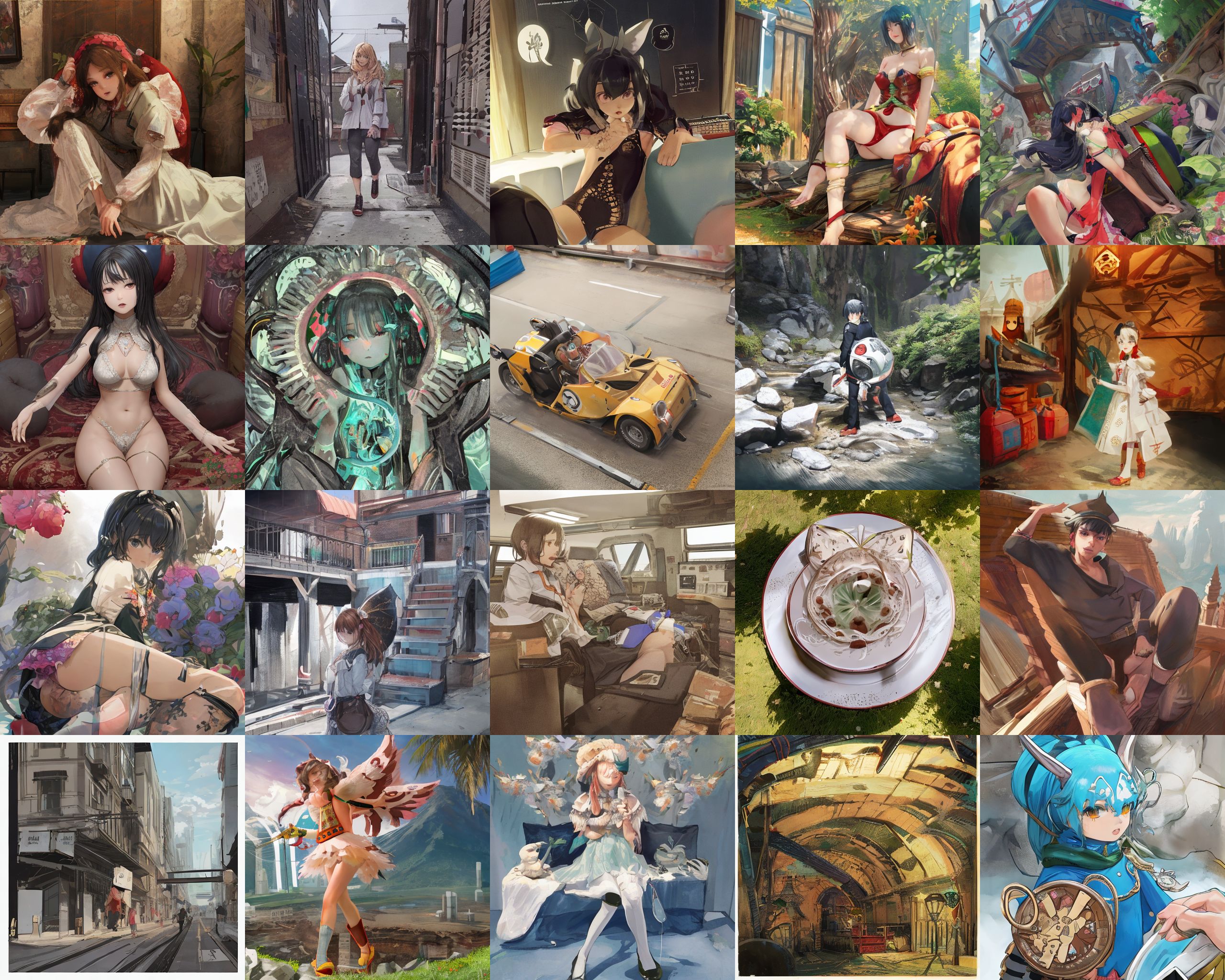

- Associative property has been observed. First, these are comparasion between 10 models without pre processing:

- And then mixing them together, you will find the intelligence agent successfully choose to "draw" the most confident one:

- This time all models are replaced with original SD's CLIP / Text Encoder:

- Finally mixing them together again, now it is capable to "draw" in 1344x768x2.0, which SD was supposed to be trained with 512px images:

(aesthetic:0), (quality:0), (car:0), [[mercedes]], (1girl:0), (boy:0), [astolfo] Negative prompt: (worst:0), (low:0), (bad:0), (exceptional:0), (masterpiece:0), (comic:0), (extra:0), (lowres:0), (breasts:0.5) Steps: 48, Sampler: Euler, CFG scale: 4.5, Seed: 3972813705, Size: 1024x576, Model hash: 8cbe307462, Model: VBP23-1024-ep49-sd-v1-4, VAE hash: 551eac7037, VAE: vae-ft-mse-840000-ema-pruned.ckpt, Denoising strength: 0.7, Clip skip: 2, FreeU Stages: "[{\"backbone_factor\": 1.2, \"skip_factor\": 0.9}, {\"backbone_factor\": 1.4, \"skip_factor\": 0.2}]", FreeU Schedule: "0.0, 1.0, 0.0", Hires upscale: 2.5, Hires upscaler: Latent, Dynamic thresholding enabled: True, Mimic scale: 1, Separate Feature Channels: False, Scaling Startpoint: MEAN, Variability Measure: AD, Interpolate Phi: 0.7, Threshold percentile: 100, Script: X/Y/Z plot, X Type: Checkpoint name, X Values: "VBP23-1024-ep49-sd-v1-4.safetensors [8cbe307462],02-vbp23-cbp2-sd.safetensors [6075160ea7],03-vcbp-mzpikas_tmnd-sd.safetensors [4f4da1e956],04-vcbp_mzpt_d8-sd.safetensors [4b36d29be3],05-vcbp_mtd8_cwl-sd.safetensors [84c1865c1e],06-vcbp_mtd8cwl_bd-sd.safetensors [dd1d0b7fc4],07-vcbp_mtd8cwl_bdaw-sd.safetensors [fe38511e88],08-vcbpmt_d8cwlbd_aweb5-sd.safetensors [b21ea2b267],09-vcbpmt_d8cwlbd_aweb5m-sd.safetensors [f32f9b8e99],10-vcbpmtd8_cwlbdaw_eb5ms29-sd.safetensors [6ffdb39acd]", Version: v1.6.0

- (Diagram coming soon) The "merge pipeline" is drawn below. With "uniform merge", merge order is arbitrary, you will get the same mixture eventually. You can verify if you "bag of SD" is performing as expected.

- Also, merging 10 "CLIP / TE reseted" models is same as merg 10 models and then "reset CLIP / TE". In practice, you may suffer floating point error, making the "hash" is different in toolkit:

- You can switch Text Encoder to what you're familiar with. The model still remember how it looks. However it tends to be effective for an entity instead of abstract art style (including nsfw).

parameters

(aesthetic:0), (quality:0), (solo:0), (1girl:0), (gawr_gura:0.98)

Negative prompt: (worst:0), (low:0), (bad:0), (exceptional:0), (masterpiece:0), (comic:0), (extra:0), (lowres:0)

Steps: 48, Sampler: Euler, CFG scale: 4.5, Seed: 978318572, Size: 768x768, Model hash: d94d7363a0, Model: 08-vcbpmt_d8cwlbd_aweb5-cwl, VAE hash: 551eac7037, VAE: vae-ft-mse-840000-ema-pruned.ckpt, Clip skip: 2, Version: v1.6.0

-

"Ensemble" is a well known method on ML field, and it is possible to have ensemble over neural networks. "Ensemble averaging" without soft voting is the most simple approach, it shows improvement on ML tasks by resolving bias-variance dilemma in the fundamental way. Hinted by "evolutionary computing", "polyak averaging" (average out models in different aspect, including time) makes the model easier to converge while transversing in high dimensional latent space, i.e. generating sementic fragements from noise. Althoguh it is a technique in RL field, it is applicable on open-end, real-world problem which communities keep envolving / iterlating the same SD model in their "artistic way" across this year (2022 Aug - 2023 Sept). Such artistic problem has been discused, and attempted to be formulated ("latent space") before SD or even LDA model, as soon as StyleGAN in 2019, I believe that such complex problem can be related in interdisciplinary manner, like computer vision / computer graphic in BIM / GIS / CFD, and even acoustic.)

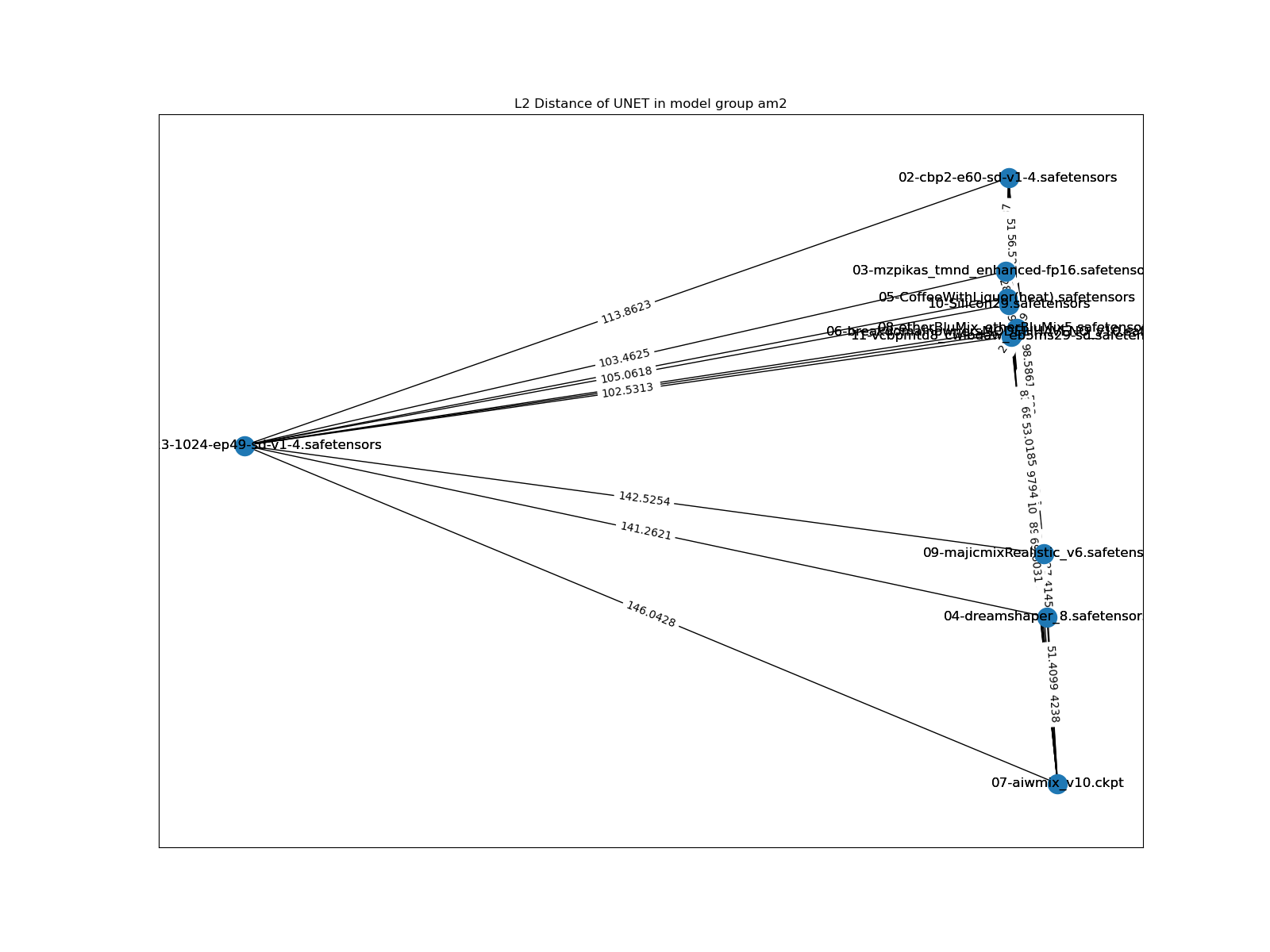

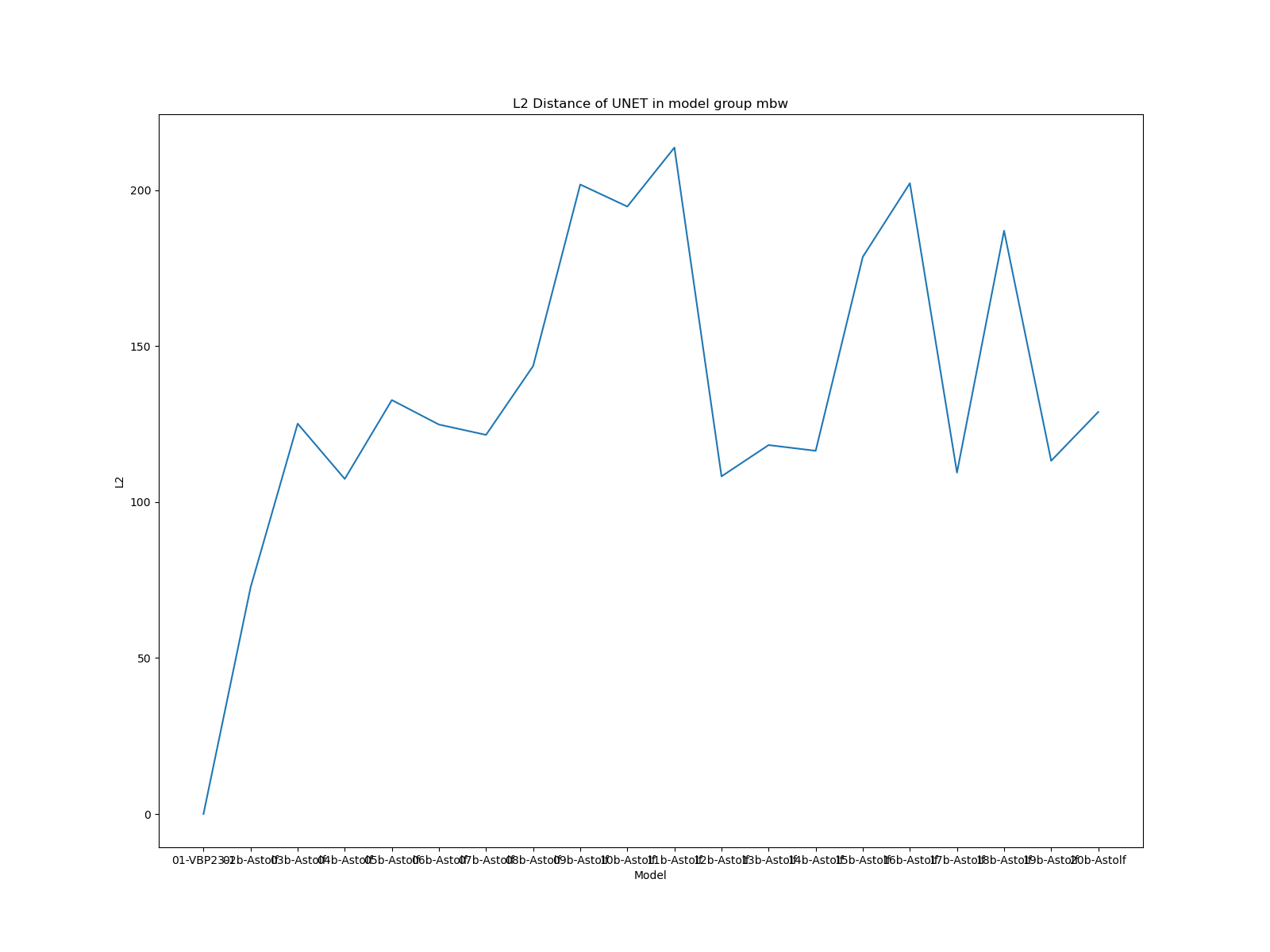

- Note that it is not validated or verified, even if it is possible to do so in CS + Art manner. This should depends on model selection, but the dimensionless MSE somehow show correlation to the image difference in the xy plots (Astolfo with Mercedes) above. If the correlation is legit, the equilibrium will let the intelligence agent try to draw most objects with lowest variance disregarding any art style it have learnt (almost no impact on bias).

- The proof of correlation should be an extended work in ch03 but I have no time to write the script. Image difference can be calculated with MSE with SIFT or encoded by Swin Transformer. So the proof is left as an exercise.

- Model doesn't break even I've merged some radical models.

- Recommended CFG has been reduced from 4.5 to 4.

parameters

(aesthetic:0), (quality:0), (1girl:0), (boy:0), [[shirt]], [[midriff]], [[braid]], [astolfo], [[[[sydney opera house]]]]

Negative prompt: (worst:0), (low:0), (bad:0), (exceptional:0), (masterpiece:0), (comic:0), (extra:0), (lowres:0), (breasts:0.5)

Steps: 256, Sampler: Euler, CFG scale: 4, Seed: 341693176, Size: 1344x768, Model hash: 41429fdee1, Model: 20-bpcga9-lracrc2oh-b11i75pvc-gf34ym34-sd, VAE hash: 551eac7037, VAE: vae-ft-mse-840000-ema-pruned.ckpt, Denoising strength: 0.7, Clip skip: 2, FreeU Stages: "[{\"backbone_factor\": 1.2, \"skip_factor\": 0.9}, {\"backbone_factor\": 1.4, \"skip_factor\": 0.2}]", FreeU Schedule: "0.0, 1.0, 0.0", Hires upscale: 2, Hires steps: 64, Hires upscaler: Latent, Dynamic thresholding enabled: True, Mimic scale: 1, Separate Feature Channels: False, Scaling Startpoint: MEAN, Variability Measure: AD, Interpolate Phi: 0.7, Threshold percentile: 100, Version: v1.6.0

- With DynamicCFG and FreeU enabled, CFG1 can produce reasonable images, however pure negative prompt is harder to be effective.

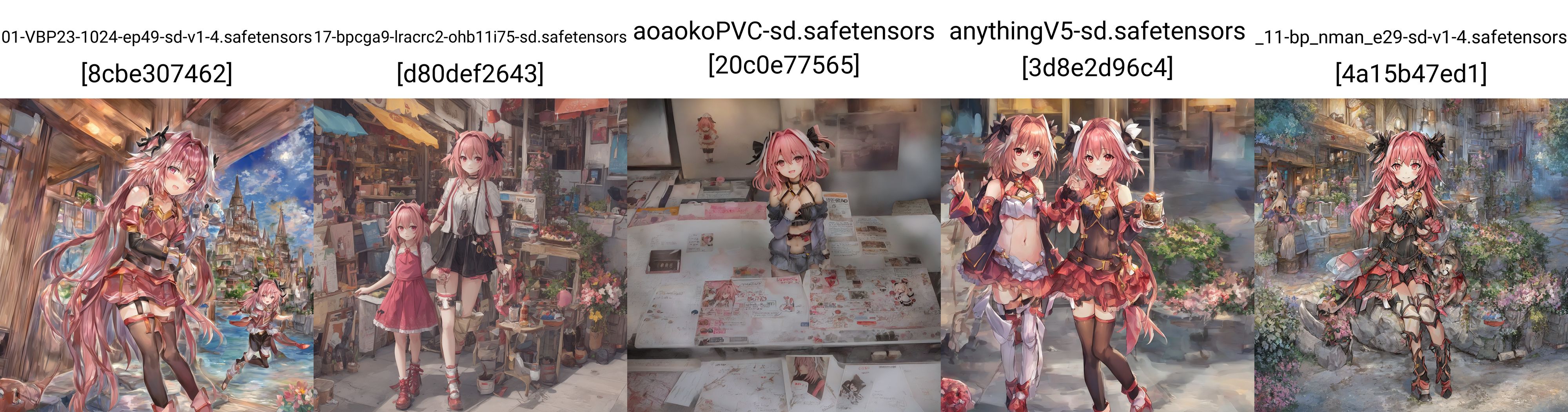

- For Embedding, as what we've seen in CivitAI, VBP's embeddings works, LoRA also works. Hint: Do you know "momoko", the unfourtunate person styled as AnythingV3 and mmk-e?

parameters

(aesthetic:0), (quality:0), (solo:0), (boy:0), (momoko=momopoco_ms100:0.98), (astolfo:0.98)

Negative prompt: (worst:0), (low:0), (bad:0), (exceptional:0), (masterpiece:0), (comic:0), (extra:0), (lowres:0), (breasts:0.5)

Steps: 48, Sampler: Euler, CFG scale: 4, Seed: 1920996841, Size: 1024x1024, Model hash: 8cbe307462, Model: 01-VBP23-1024-ep49-sd-v1-4, VAE hash: 551eac7037, VAE: vae-ft-mse-840000-ema-pruned.ckpt, Denoising strength: 0.7, Clip skip: 2, FreeU Stages: "[{\"backbone_factor\": 1.2, \"skip_factor\": 0.9}, {\"backbone_factor\": 1.4, \"skip_factor\": 0.2}]", FreeU Schedule: "0.0, 1.0, 0.0", Hires upscale: 1.5, Hires upscaler: Latent, Dynamic thresholding enabled: True, Mimic scale: 1, Separate Feature Channels: False, Scaling Startpoint: MEAN, Variability Measure: AD, Interpolate Phi: 0.7, Threshold percentile: 100, TI hashes: "momoko=momopoco_ms100: 8fe99091c82b, momoko=momopoco_ms100: 8fe99091c82b", Script: X/Y/Z plot, X Type: Checkpoint name, X Values: "01-VBP23-1024-ep49-sd-v1-4.safetensors [8cbe307462],17-bpcga9-lracrc2-ohb11i75-sd.safetensors [d80def2643],aoaokoPVC-sd.safetensors [20c0e77565],anythingV5-sd.safetensors [3d8e2d96c4],_11-bp_nman_e29-sd-v1-4.safetensors [4a15b47ed1]", Version: v1.6.0

- However we should make sure the EMB / LoRA should be trained on NAI or SD for best expectancy. Ideal match will not affect any other parameters, and it serves as another prompt keyword. See this LoRA's info for example (first session, artwork):

{

"ss_sd_model_name": "Animefull-final-pruned.ckpt",

"ss_clip_skip": "2",

"ss_num_train_images": "92",

"ss_tag_frequency": {

"train_data": {

"asagisuit": 46,

"1girl": 46,

"solo": 41

}

}

}- If the EMB / LoRA is trained on other "popular models" (e.g. AnyLora / AOM3 / Pastel), such as this LoRA (artwork), the compability should remains unchanged. Usually the "maximum resolution" will be dropped to be the original training resolution. For this merge, it drops from 1344x768 x2.0 to 1344x768 x1.0, which there is no hires scaling.

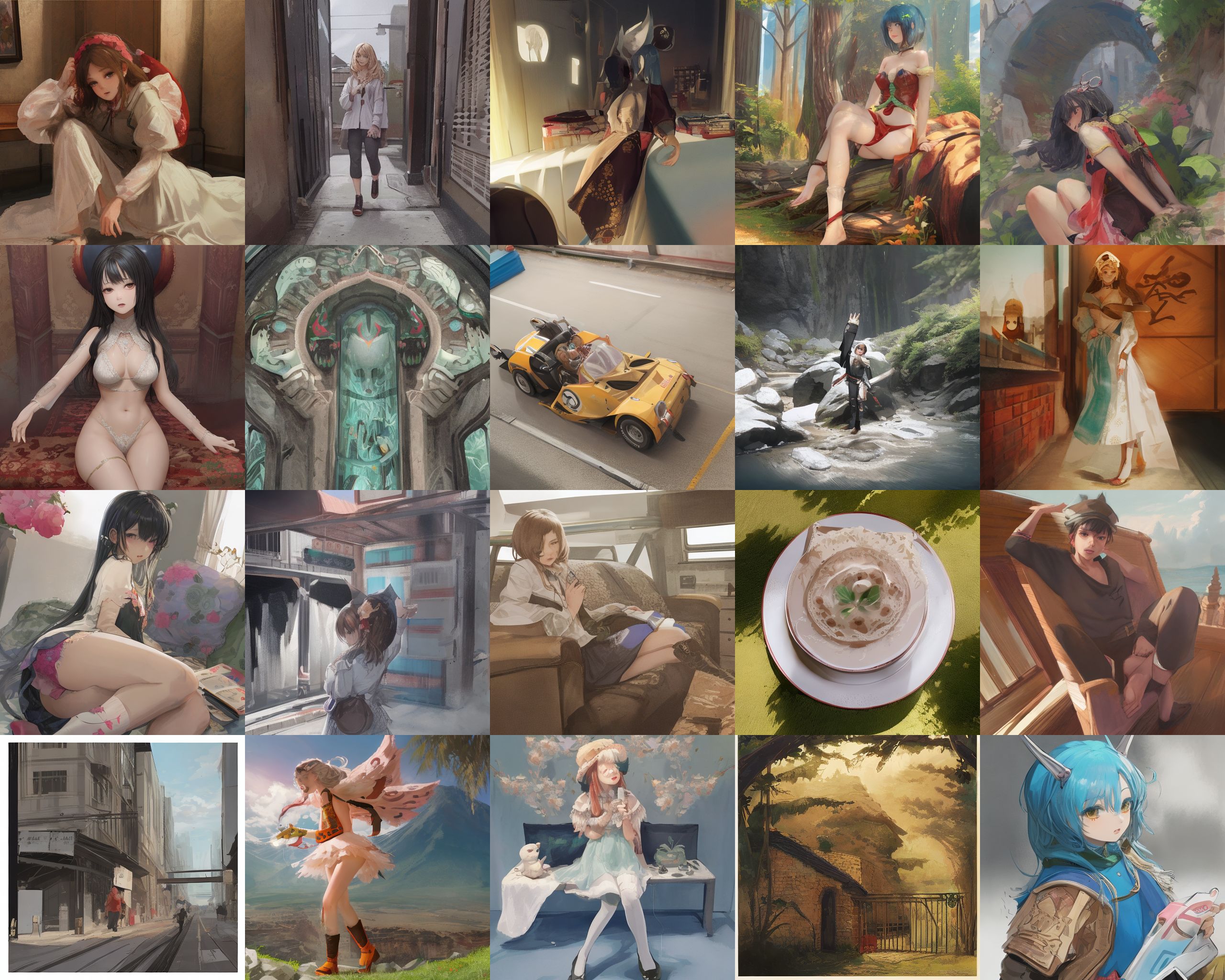

- And then here is the xy plots as in Baseline:

-

For full recipe, see recipe-20a.json.

-

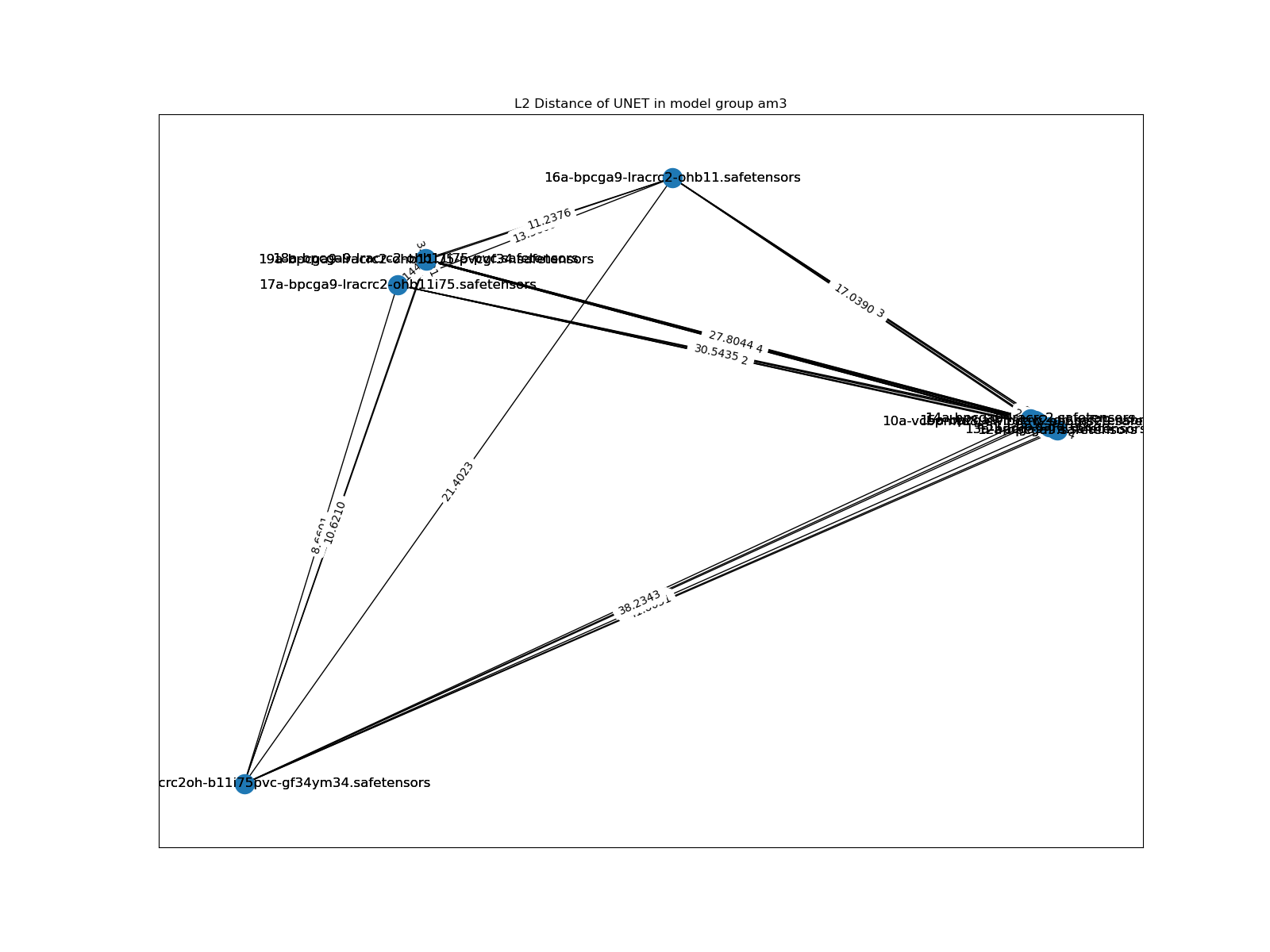

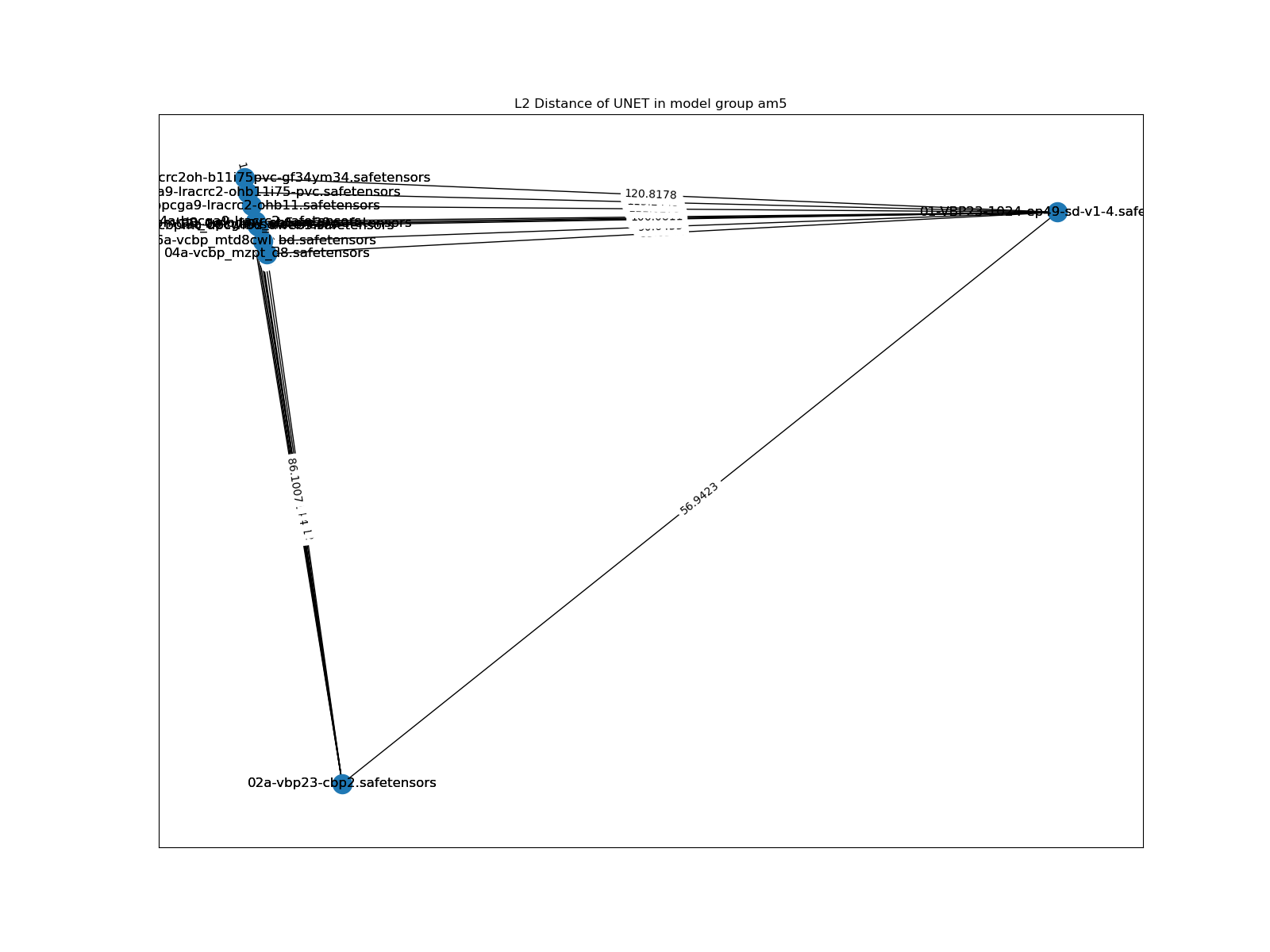

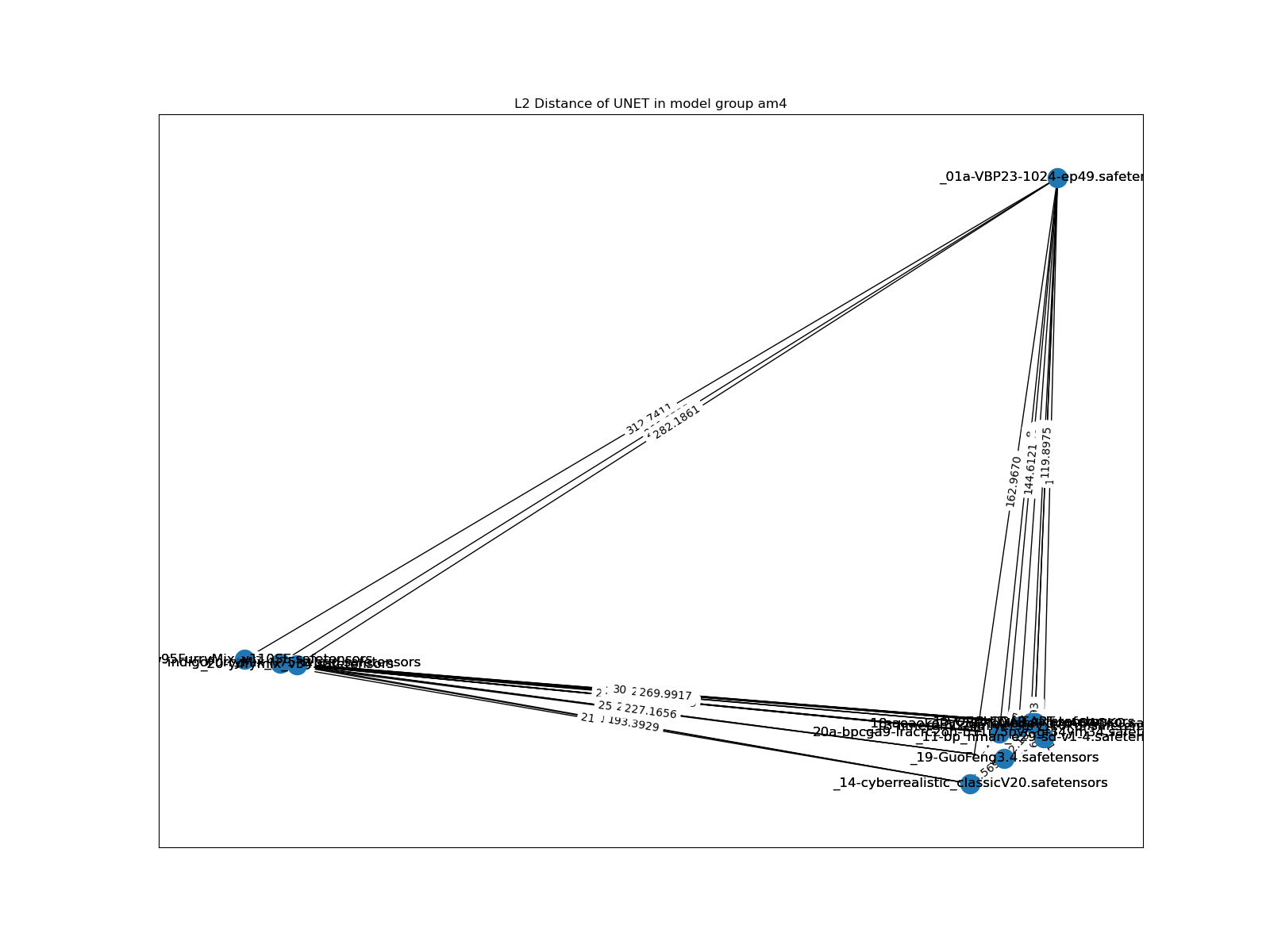

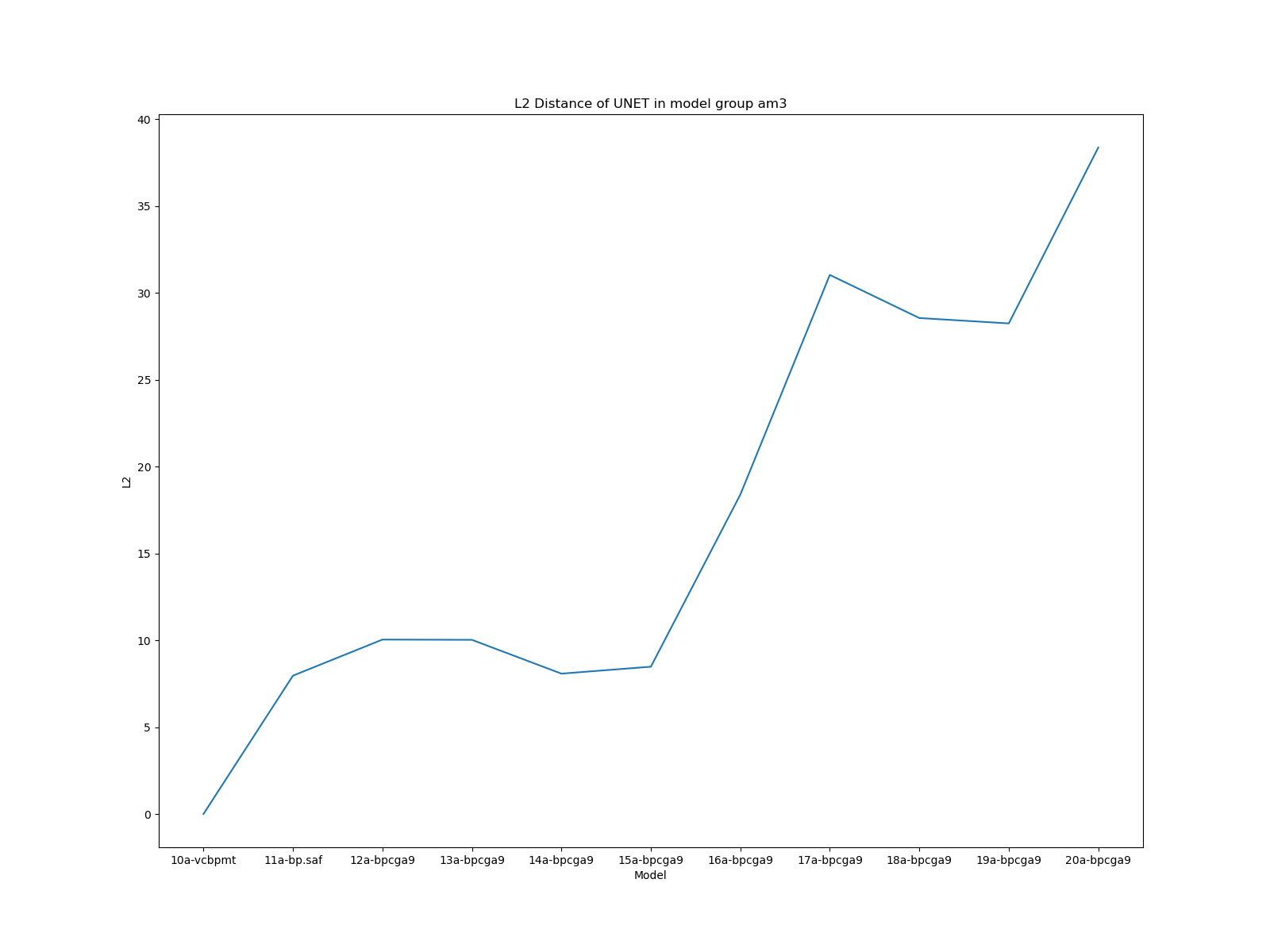

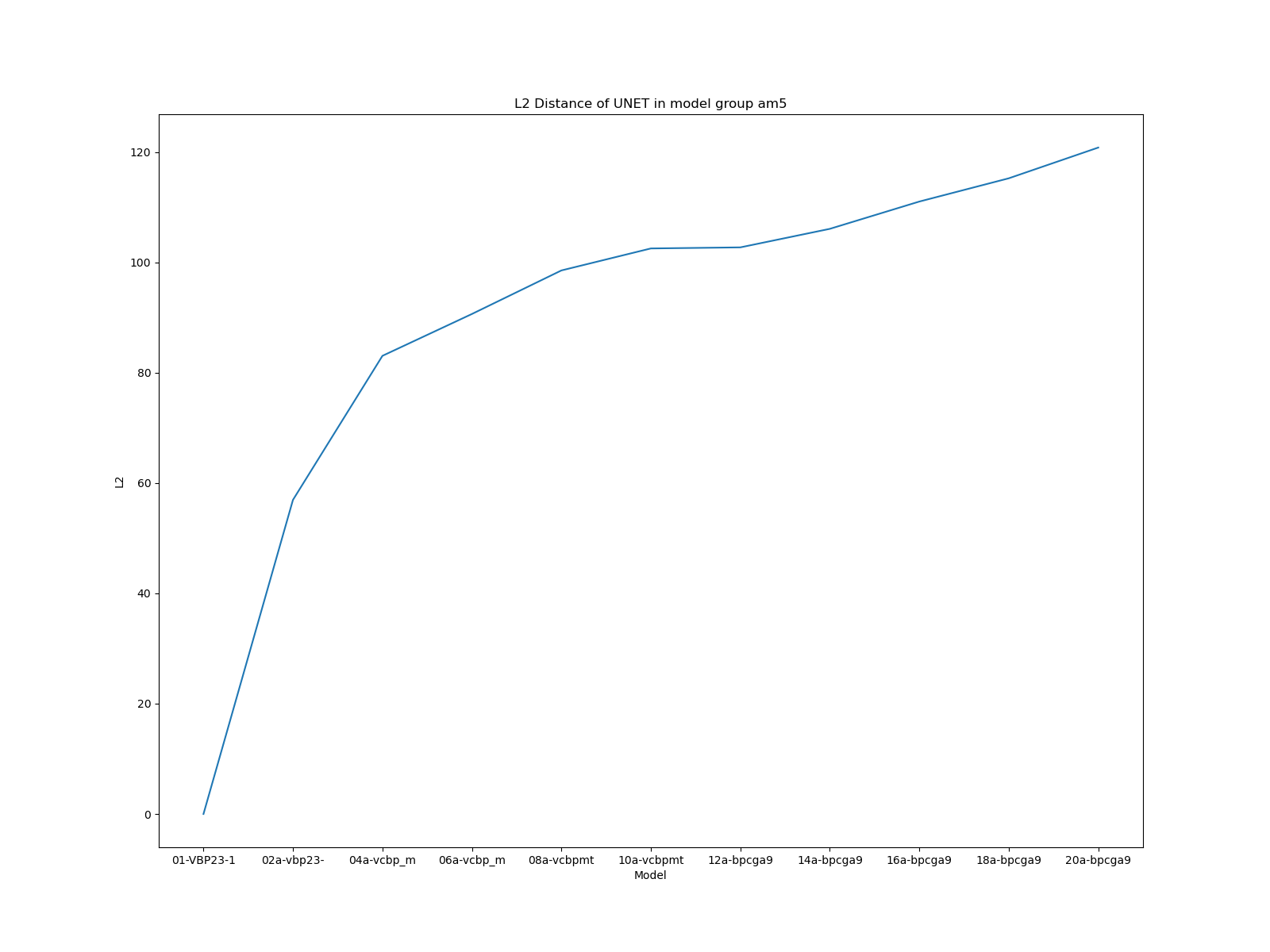

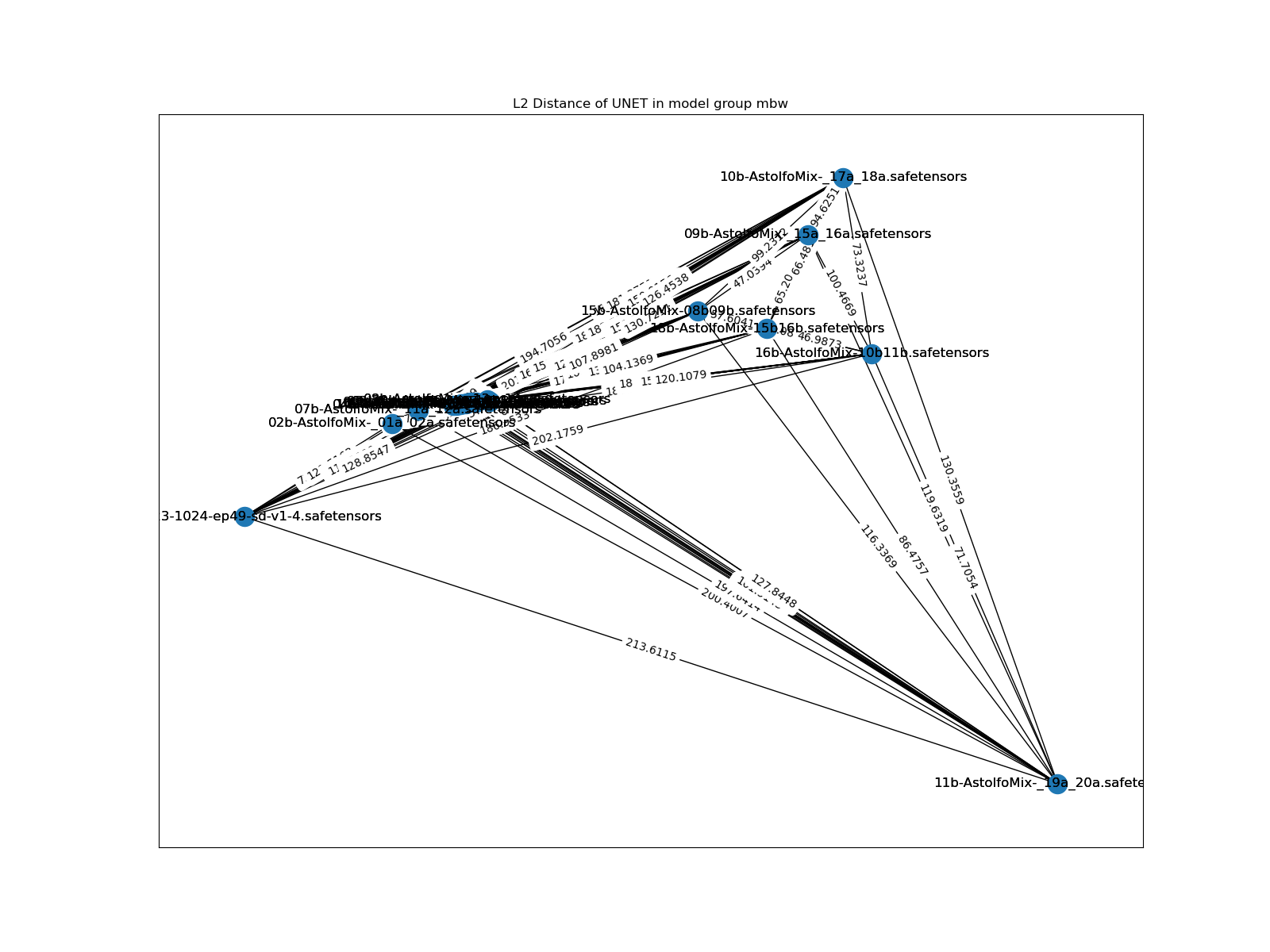

Now the L2 distance graph (both X-Y axis are arbitary picked, in order to visually seperate the points):

- For the "a posteriori style", it is "western anime (2.5D) close to photorealism (2.99D) but proportion is more realistic, more like impressionism oil painting with modern content". It can be related to the "relational coorinate of stylish models":

- Comparing to baseline, since the art style varies, it shows less convergent. It actually drifts outward.

- In artistic sense, we can see that the human face has been slightly changed, and the background is more clean and consistant. Could it be correlated to other statistical tools? Once again the proof is left as an exercise.

- Currently in rabbit hole. Forked auto-MBW-rt and sd-webui-runtime-block-merge. Yes I write codes again.

-

For Bayesian Optimization, the library used Hyperactive for implementation, Check out this guide (very bottom) for description. From this code, it points to this code, which make use of GaussianProcessRegressor. The

xiis the only hyperparameter, which makes "warm start"warm_start_smbosounds irrelevant. -

I think ImageReward is the SOTA of the general evaluation metric, which is advanced from the original AVA used by SD, or Cafe's Aesthetic score in anime community, and the hybird approach

-

For the payloads, my Astolfo is already a great testing dataset. All 20 models has almost none data related to him, or any actual location, or vehicles. The problem is I'm not sure how the BLIP inside ImageReward treat the prompts. By NLP sense, BPE should handle the syntax and punctuations well, especially the training progress in ImageReward involves tag-styled prompts. Also, "batch count" (

n_iter) is important, which I can make use of averaged score:

if tally_type == "Arithmetic Mean":

testscore = statistics.mean(imagescores)-

For choosing Arithmetic Mean, Bayesian

statisticsOptimization relies on MAP and MLE, which are purely arithmetic. -

For comparasion with other optimizers and related parameters, check out this discussion. Usually it is compared with LIPO, or randomized search. Hillclimb (Gradient descent) is also legit, but evalulation time and hyperparameter count is way too high. As stated in the original notebook, and this paper, if the problem is complicated

art is pure abstractand it is costly to try60x of payload, I should invest on the most statistic advanced algorithm. -

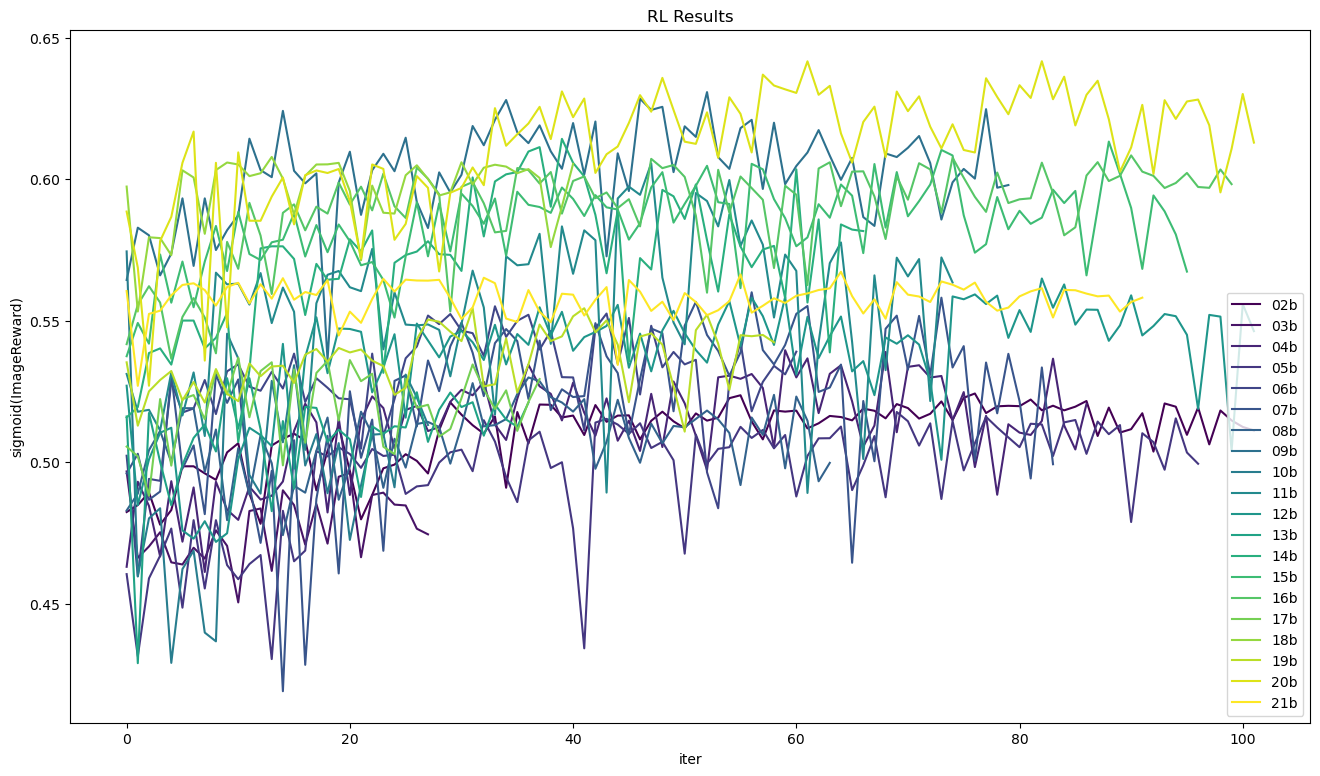

Now I am worried on the "training time" (optimization time on AuboMBW). "RLHF" (the optimization) went wild. With the "payload" of 12 recent artworks (directly from Baseline), it takes almost 30 minutes to complete. Since all 12 artworks has different prompts and seeds (even they are highly similar), setting "early stopping" as 25 best iterlations is too large, I will reduce after analyzing the first successful merge. The time limit (2880 minutes, 48 hours) will be followed, although I have a more "flexible range" up to 10000 minutes (close to a week).

-

For other parameters I have not mentioned, they are against consistancy and overcomplicated. Even I need to read actual codes to understand what they means, because they are original ideas from origianl author, which are not explained.

-

This time I'm doubt that if associative property still remains in

boostingRL. The mean (just naively take average of 26 parameters) of the merged weight is ranged from 0.33-0.45, with minimal as 0.1 (0.05 will throw runtime error), which yields to uneven merge. Instead of merging in sequence, this time I will use parallel merge, which tends to save the "good bias" in progress, while I still need to survive the tedious merges. Since it is not a perfect binary tree, the "better 4" model merges will be preserved for a round. -

Special case:

01=_01a. -

(After days)

14bhas the best score among12bto16b. -

Cannot parallel since

17bto20b -

20bis yet to be decided (AutoMBW with20a?)

| Model Index | Description |

|---|---|

10 |

The 10th uniform merge model with TE reset. |

10a |

The 10th uniform merge model without TE reset. |

_10 |

The 10th raw model without TE reset. |

_10a |

The 10th raw model with TE reset. |

10b |

The 10th autoMBW model with TE reset, parallel merge, started from _01a with _02a. |

10c |

The 10th autoMBW model with TE reset, sequential merge, started from 20 with _01a. |

| Model O | Model A | Model B |

|---|---|---|

02b |

_01a |

_02a |

03b |

_03a |

_04a |

04b |

_05a |

_06a |

05b |

_07a |

_08a |

06b |

_09a |

_10a |

07b |

_11a |

_12a |

08b |

_13a |

_14a |

09b |

_15a |

_16a |

10b |

_17a |

_18a |

11b |

_19a |

_20a |

12b |

02b |

03b |

13b |

04b |

05b |

14b |

06b |

07b |

15b |

08b |

09b |

16b |

10b |

11b |

17b |

12b |

13b |

18b |

15b |

16b |

19b |

14b |

17b |

20b |

18b |

19b |

-

With

20a,20b,20cpresents, I may make21by uniform merge20+20b, and21bby one more autombw with20+20b. See the chapter below forexplainantionexpression. -

For payloads and recipes, see folder autombw. Plot has been added. Since I've found the later models (

15b,16b) failed to early exit within time limit, I slightly increase to 4320 minutes (3 days)

-

The "feature" may be preserved across the merge iterlations, make sure you follow the merge pattern. Since

09bhas the highest score (excluding20b) while merging, it is expected that most contents come from there.Obviously Astolfo won't appear anthro because other models will contribute. -

RL does introduce bias, therefore the background is not rich, comparing to both

09bor20. -

The "count of merged iterlations" should exceed 6 to successfully output the legit image, which matches with the session above.

L2 graph coming soon.L2 graph is generated. Since it requires O(N^2) space therefore I may omit the lowest layer, or shows only a segment of the ["tree"]https://en.wikipedia.org/wiki/Tree_(data_structure) only. Convergence still appears, and it seems drifts towards where "20" stay. However it is debunked in the next session, where merge "20" and "20b" once more.+

-

Academically this process resembles RL or Boosting. It is not RLHF in strict sence, because the "human feedback" is not directly involved.

-

Philosophicaly, by belief, the MBW (Merge Block Weight) here serves a framework which a whole "SD" is simplified (affirmed) as "12 layers of UNet with a whole CLIP". Checkout my findings / opionions if interested.

-

Therefore "Auto" "MBW" is a kind of cheap way to "train" a SD model from an ensemble of SD models, without millions of images "6M" "10e" for "the anime model", disregarding the original LAION5B for SD, which is "5B".

parameters

(aesthetic:0), (quality:0), (solo:0.98), (boy:0), (ushanka:0.98), [[braid]], [astolfo], [[moscow, russia]]

Negative prompt: (worst:0), (low:0), (bad:0), (exceptional:0), (masterpiece:0), (comic:0), (extra:0), (lowres:0), (breasts:0.5)

Steps: 256, Sampler: Euler, CFG scale: 4, Seed: 873435189, Size: 1792x768, Model hash: 28adb7ba78, Model: 21b-AstolfoMix-2020b, VAE hash: 551eac7037, VAE: vae-ft-mse-840000-ema-pruned.ckpt, Denoising strength: 0.7, Clip skip: 2, FreeU Stages: "[{\"backbone_factor\": 1.2, \"skip_factor\": 0.9}, {\"backbone_factor\": 1.4, \"skip_factor\": 0.2}]", FreeU Schedule: "0.0, 1.0, 0.0", FreeU Version: 2, Hires upscale: 2, Hires steps: 64, Hires upscaler: Latent, Dynamic thresholding enabled: True, Mimic scale: 1, Separate Feature Channels: False, Scaling Startpoint: MEAN, Variability Measure: AD, Interpolate Phi: 0.5, Threshold percentile: 100, Version: v1.6.1

-

21and21bis a pair of model.21try to "understand more from it other self", and21benrich itself with the missing "add difference" with$b-c=a+1$ , and the "1" is expressed as "difference between 20 and 20b, in unit of art" (no meaning, just arbitary). -

Deleted some hyped paragraphs. Not hyped anymore. -

Since I have included model info in auto-MBW-rt, I need to "wipe model info" by replacing a dummy CIP with toolkit (but identical), otherwise you will get

TypeError: argument 'metadata': 'dict' object cannot be converted to 'PyString'. -

Although

20bhas the highest score on ImageReward, it tends to blur background. Merge with the original20gained back the nice background generation, with autombw again to "select the best balance" again.

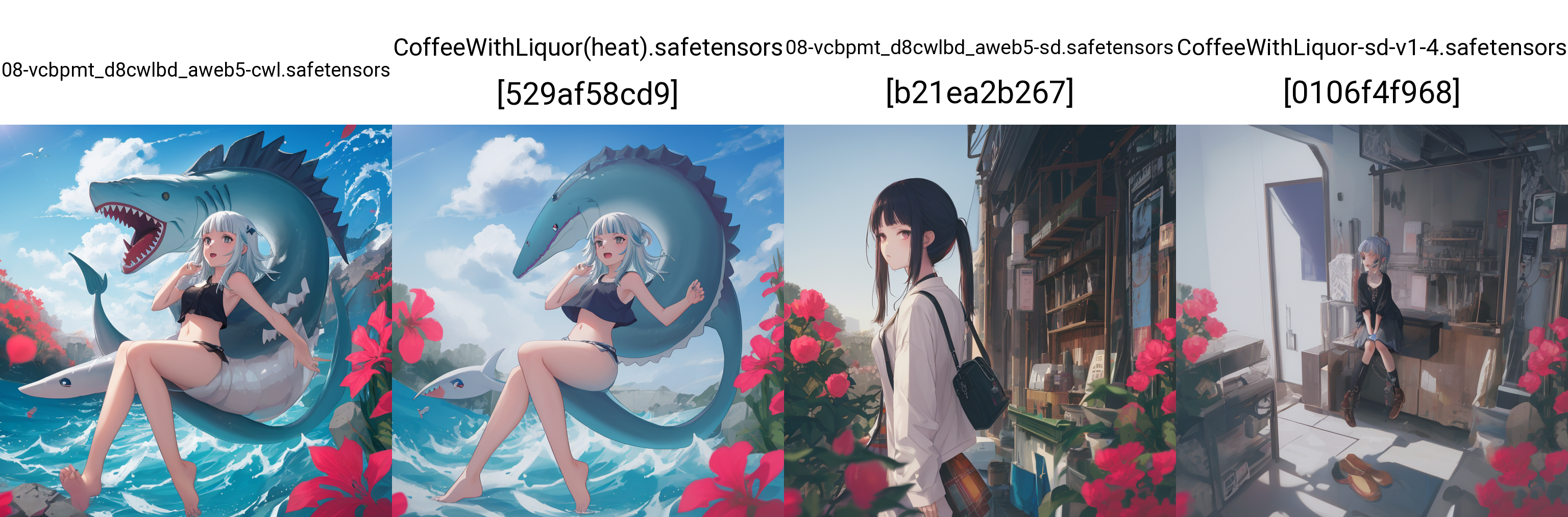

- And then here is a forgotten prior test, with model "20", "20b" and "21b" (top to bottom)

- With pure random content, we can see the bias and variance of the model. "20" tends to generate noisy fragement (even it is very robust already, images from general models are hard to identify), meanwhile "20b" generate identifiable but blurry images. "21b" has a good balance to keep everything in place, although it does not have a high score of ImageReward. Correlation matters here, like most psychological studies instead of computer science.

- This VG chart is not expected. The triangle is legit, with the L2 distance matches with low difference.

- Could this be explained in academic / technical sense? I don't know. I really don't know. This may be an original idea which makes its own tier and have nothing to compare. How on earth there is a SD model "trained" with the most general objective which cannot be described, and just try to "replace the inital original SD model", and start the new fintuning cycle? The idea of "use no images" is already weird enough. Maybe this is art.

-

Repeat in SD2.1: In progress.

-

Repeat in SDXL,

but I need to rewrite the extensionAutoMBW is rewritten. Will start after SD2. May switch to WhiteWipe/sd-webui-bayesian-merger because of active development. -

Keep testing the models with new technology (LCM, SDXL Turbo etc.). CUrrently I know my model has maximum capability on CS level to observe and discover new or ignored technologies, as what I do in ch01.