To play the audio examples in this notebook, please view it with the Jupyter Notebook Viewer.

This IPython Notebook demonstrates the GCC-NMF blind source separation algorithm, which combines:

- Non-negative matrix factorization (NMF): unsupervised dictionary learning algorithm

- Generalized cross-correlation (GCC): source localization method

Separation is performed directly on the stereo mixture signal using no additional data:

- An NMF dictionary is first learned from the left and right mixture spectrograms, concatenated in time.

- Dictionary atoms are then attributed to individual sources over time according to their time difference of arrival (TDOA) estimates, defining a binary coefficient mask for each source.

- Masked coefficients are then used to reconstruct each source independently.
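The attribution step in the list above can be sketched as a winner-take-all assignment. This is a minimal illustration, not the gccNMF implementation: it assumes a per-target localization score is already available for every dictionary atom at every time frame, and the function name `binary_masks_from_scores` is hypothetical.

```python
import numpy as np

def binary_masks_from_scores(target_scores):
    """Assign each (atom, frame) pair to the target with the highest score.

    target_scores: array of shape (num_targets, num_atoms, num_frames).
    Returns a boolean array of the same shape in which exactly one target
    is True for every (atom, frame) pair.
    """
    num_targets = target_scores.shape[0]
    winner = np.argmax(target_scores, axis=0)            # (num_atoms, num_frames)
    return np.arange(num_targets)[:, None, None] == winner[None]

# Toy scores for 3 targets, 4 atoms and 5 frames (random stand-in data).
rng = np.random.default_rng(0)
scores = rng.random((3, 4, 5))
masks = binary_masks_from_scores(scores)
```

Because every coefficient is given to exactly one source, the masks sum to one across sources, so the masked coefficients partition the original NMF coefficients.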

This demo separates the speech sources from the data/dev1_female3_liverec_130ms_1m_mix.wav mixture, taken from the SiSEC 2016 Underdetermined speech mixtures "dev1" dataset, and saves results to the data directory.

- Preliminary setup

- Input mixture signal

- Complex mixture spectrogram

- GCC-PHAT source localization

- NMF decomposition

- GCC-NMF coefficient mask generation

- Source spectrogram estimates reconstruction

- Source signal estimates reconstruction

from gccNMF.gccNMFFunctions import *

from gccNMF.gccNMFPlotting import *

from IPython import display

%matplotlib inline

# Preprocessing params

windowSize = 1024

fftSize = windowSize

hopSize = 128

windowFunction = hanning

# TDOA params

numTDOAs = 128

# NMF params

dictionarySize = 128

numIterations = 100

sparsityAlpha = 0

# Input params

mixtureFileNamePrefix = 'data/dev1_female3_liverec_130ms_1m'

microphoneSeparationInMetres = 1.0

numSources = 3

mixtureFileName = getMixtureFileName(mixtureFileNamePrefix)

stereoSamples, sampleRate = loadMixtureSignal(mixtureFileName)

numChannels, numSamples = stereoSamples.shape

durationInSeconds = numSamples / float(sampleRate)

describeMixtureSignal(stereoSamples, sampleRate)

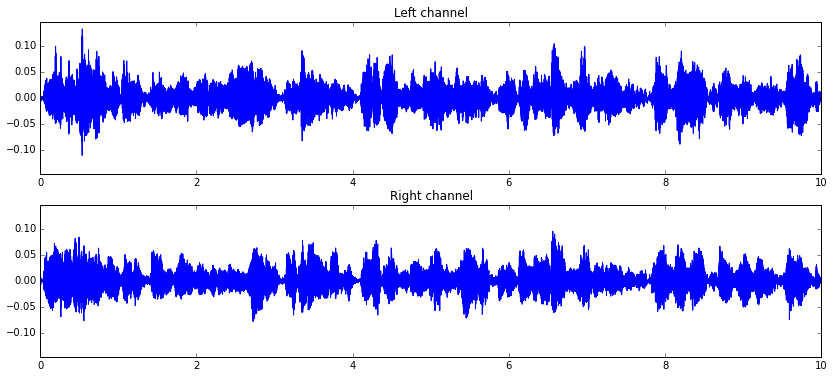

figure(figsize=(14, 6))

plotMixtureSignal(stereoSamples, sampleRate)

Input mixture signal:

sampleRate: 16000 samples/sec

numChannels: 2

numSamples: 160000

dtype: float32

duration: 10.00 seconds

complexMixtureSpectrogram = computeComplexMixtureSpectrogram( stereoSamples, windowSize,

hopSize, windowFunction )

numChannels, numFrequencies, numTime = complexMixtureSpectrogram.shape

frequenciesInHz = getFrequenciesInHz(sampleRate, numFrequencies)

frequenciesInkHz = frequenciesInHz / 1000.0

describeMixtureSpectrograms(windowSize, hopSize, windowFunction, complexMixtureSpectrogram)

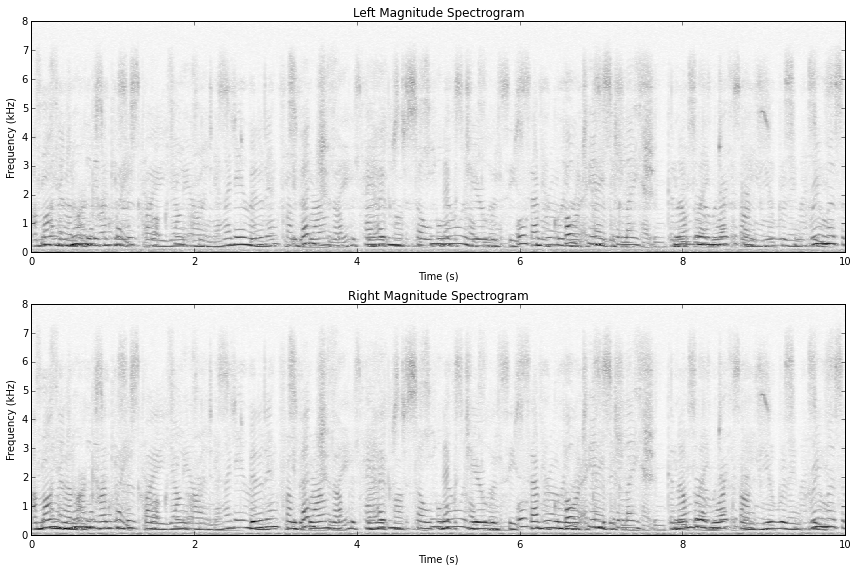

figure(figsize=(12, 8))

plotMixtureSpectrograms(complexMixtureSpectrogram, frequenciesInkHz, durationInSeconds)

STFT:

windowSize: 1024

hopSize: 128

windowFunction: <function hanning at 0x1075e8140>

complexMixtureSpectrogram.shape = (numChannels, numFreq, numWindows): (2, 513, 1243)

complexMixtureSpectrogram.dtype = complex64
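The reported spectrogram shape can be checked by hand. The sketch below assumes a real-input FFT of length `windowSize` and frames taken only where a full window fits inside the signal, with no padding. That assumption is consistent with the printed shape, but it is not confirmed against the gccNMF source.

```python
# Values taken from the printed output above.
num_samples = 160000
window_size = 1024
hop_size = 128

# A real FFT of length N yields N/2 + 1 non-redundant frequency bins.
num_frequencies = window_size // 2 + 1

# Number of full windows that fit when sliding by hop_size (no padding assumed).
num_windows = 1 + (num_samples - window_size) // hop_size

print(num_frequencies, num_windows)  # 513 1243
```

Concatenating the left and right spectrograms in time doubles the number of columns to 2 x 1243 = 2486, which matches the NMF input shape reported later in the notebook.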

spectralCoherenceV = ( complexMixtureSpectrogram[0] * complexMixtureSpectrogram[1].conj()

/ abs(complexMixtureSpectrogram[0]) / abs(complexMixtureSpectrogram[1]) )
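The expression above is the phase transform (PHAT) weighting: the cross-spectrum of the two channels is divided by its magnitude so that only the inter-channel phase remains. The following sketch shows one way such a coherence can be turned into an angular spectrogram by steering it towards a set of candidate TDOAs. It is illustrative only: the function name, the sign convention, and the 343 m/s speed of sound are assumptions, and `getAngularSpectrogram` in gccNMF may differ in all three.

```python
import numpy as np

def gcc_phat_angular_spectrogram(X0, X1, frequencies_hz, tdoas_s, eps=1e-12):
    """X0, X1: complex spectrograms of shape (num_freq, num_frames).
    Returns an array of shape (num_tdoas, num_frames)."""
    cross = X0 * np.conj(X1)
    coherence = cross / np.maximum(np.abs(cross), eps)   # PHAT: keep the phase only
    # One steering vector per candidate TDOA: shape (num_tdoas, num_freq).
    steering = np.exp(-2j * np.pi * np.outer(tdoas_s, frequencies_hz))
    # Sum the steered coherence over frequency for every TDOA and frame.
    return np.real(steering @ coherence)

sample_rate = 16000
frequencies_hz = np.fft.rfftfreq(1024, d=1.0 / sample_rate)   # 513 bins
max_tdoa = 1.0 / 343.0          # 1 m microphone spacing, assumed 343 m/s
tdoas_s = np.linspace(-max_tdoa, max_tdoa, 128)

# Synthetic test: channel 1 is channel 0 delayed by one of the candidate TDOAs.
rng = np.random.default_rng(0)
X0 = rng.standard_normal((513, 20)) + 1j * rng.standard_normal((513, 20))
true_index = 90
delay_phase = np.exp(-2j * np.pi * frequencies_hz * tdoas_s[true_index])
X1 = X0 * delay_phase[:, None]

angular = gcc_phat_angular_spectrogram(X0, X1, frequencies_hz, tdoas_s)
estimated_index = int(np.argmax(angular.mean(axis=1)))
```

In this synthetic case the time-averaged angular spectrum peaks at the TDOA used to generate the delay, which is the same idea the notebook relies on when it picks `numSources` peaks from `meanAngularSpectrum`.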

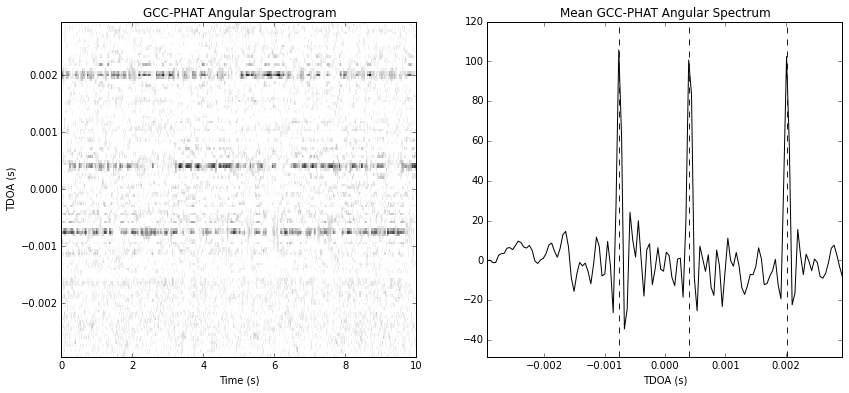

angularSpectrogram = getAngularSpectrogram( spectralCoherenceV, frequenciesInHz,

microphoneSeparationInMetres, numTDOAs )

meanAngularSpectrum = mean(angularSpectrogram, axis=-1)

targetTDOAIndexes = estimateTargetTDOAIndexesFromAngularSpectrum( meanAngularSpectrum,

microphoneSeparationInMetres,

numTDOAs, numSources)figure(figsize=(14, 6))

plotGCCPHATLocalization( spectralCoherenceV, angularSpectrogram, meanAngularSpectrum,

targetTDOAIndexes, microphoneSeparationInMetres, numTDOAs,

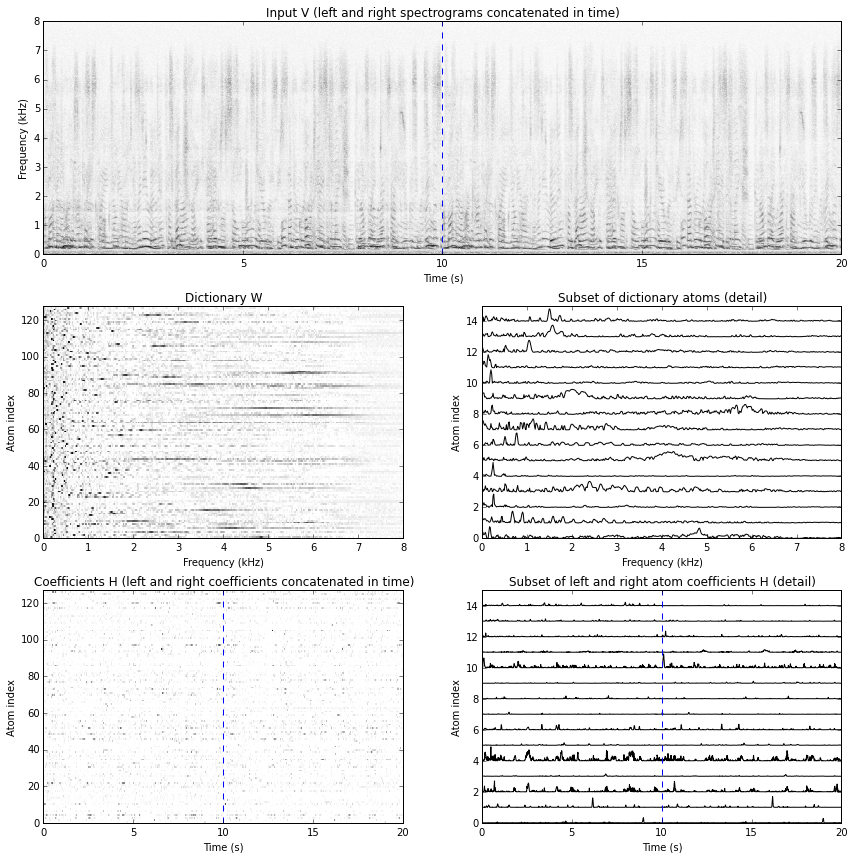

durationInSeconds )V = concatenate( abs(complexMixtureSpectrogram), axis=-1 )

W, H = performKLNMF(V, dictionarySize, numIterations, sparsityAlpha)

numChannels = stereoSamples.shape[0]

stereoH = array( hsplit(H, numChannels) )

describeNMFDecomposition(V, W, H)

figure(figsize=(12, 12))

plotNMFDecomposition(V, W, H, frequenciesInkHz, durationInSeconds, numAtomsToPlot=15)

Input V:

V.shape = (numFreq, numWindows): (513, 2486)

V.dtype = float32

Dictionary W:

W.shape = (numFreq, numAtoms): (513, 128)

W.dtype = float32

Coefficients H:

H.shape = (numAtoms, numWindows): (128, 2486)

H.dtype = float32
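The decomposition above approximates V by the product WH with non-negative factors. As a rough illustration of what a KL-divergence NMF can look like, here is a sketch of the standard multiplicative updates of Lee and Seung. It is not the body of `performKLNMF`: the function names are hypothetical, initialization is random, and the sparsity penalty is omitted (the notebook sets `sparsityAlpha = 0`).

```python
import numpy as np

def kl_nmf(V, num_atoms, num_iterations, seed=0, eps=1e-12):
    """Factor a non-negative matrix V (num_freq, num_frames) as W @ H."""
    rng = np.random.default_rng(seed)
    num_freq, num_frames = V.shape
    W = rng.random((num_freq, num_atoms)) + eps
    H = rng.random((num_atoms, num_frames)) + eps
    for _ in range(num_iterations):
        # Update the coefficients with the dictionary held fixed.
        ratio = V / (W @ H + eps)
        H *= (W.T @ ratio) / (W.sum(axis=0)[:, None] + eps)
        # Update the dictionary with the coefficients held fixed.
        ratio = V / (W @ H + eps)
        W *= (ratio @ H.T) / (H.sum(axis=1)[None, :] + eps)
    return W, H

def kl_divergence(V, V_hat, eps=1e-12):
    """Generalized Kullback-Leibler divergence between V and its model V_hat."""
    return np.sum(V * np.log((V + eps) / (V_hat + eps)) - V + V_hat)

# Small random non-negative matrix standing in for a magnitude spectrogram.
rng = np.random.default_rng(1)
V_toy = rng.random((20, 30)) + 0.1
W_1, H_1 = kl_nmf(V_toy, num_atoms=5, num_iterations=1)
W_50, H_50 = kl_nmf(V_toy, num_atoms=5, num_iterations=50)
error_after_1 = kl_divergence(V_toy, W_1 @ H_1)
error_after_50 = kl_divergence(V_toy, W_50 @ H_50)
```

The multiplicative form keeps W and H non-negative as long as they start non-negative, which is why no explicit projection step is needed.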

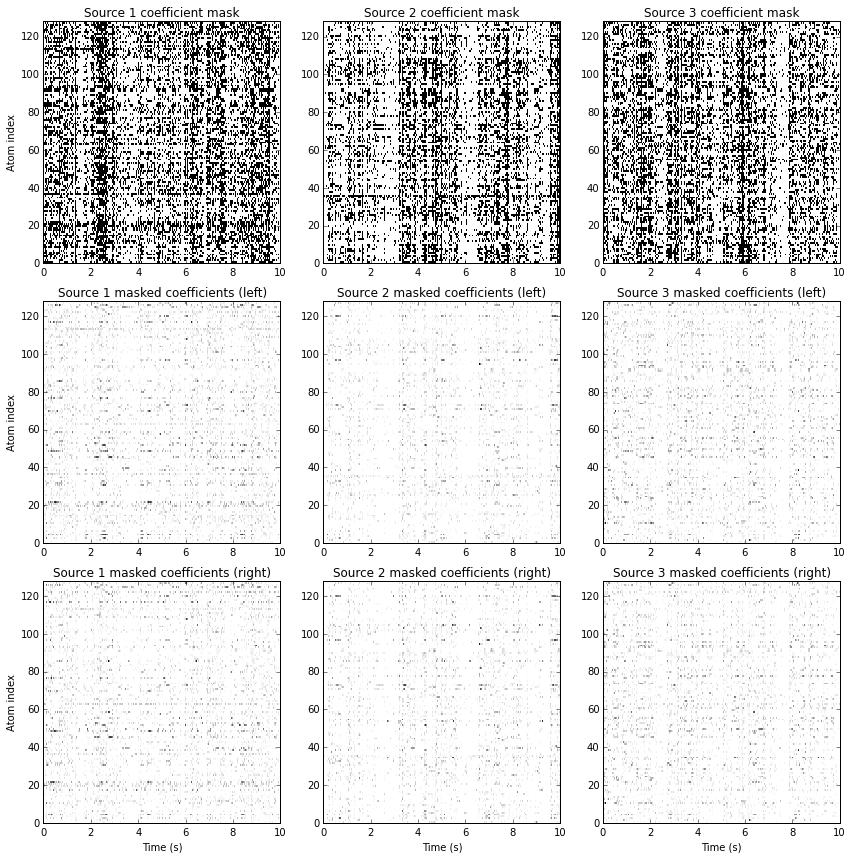

targetTDOAGCCNMFs = getTargetTDOAGCCNMFs( spectralCoherenceV, microphoneSeparationInMetres,

numTDOAs, frequenciesInHz, targetTDOAIndexes, W,

stereoH )

targetCoefficientMasks = getTargetCoefficientMasks(targetTDOAGCCNMFs, numSources)figure(figsize=(12, 12))

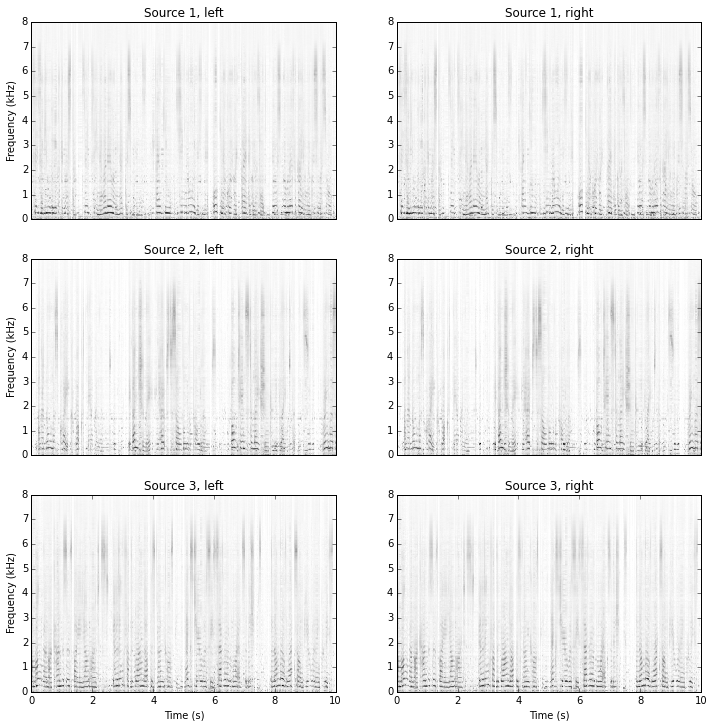

plotCoefficientMasks(targetCoefficientMasks, stereoH, durationInSeconds)Reconstruct source spectrogram estimates using masked NMF coefficients for each target, and each channel
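The reconstruction described here amounts to multiplying the dictionary by the coefficients that survive each source's mask. The sketch below shows this for a single channel and is only an assumption about the general idea. The function name is hypothetical, and `getTargetSpectrogramEstimates` also receives the complex mixture spectrogram, so the library may apply additional filtering that is not shown.

```python
import numpy as np

def masked_magnitude_estimates(W, H, masks):
    """W: (num_freq, num_atoms) dictionary.
    H: (num_atoms, num_frames) coefficients for one channel.
    masks: (num_sources, num_atoms, num_frames) binary masks.
    Returns magnitude estimates of shape (num_sources, num_freq, num_frames)."""
    return np.stack([W @ (mask * H) for mask in masks])

# Toy data: 6 frequency bins, 4 atoms, 10 frames, 3 sources.
rng = np.random.default_rng(0)
W_toy = rng.random((6, 4))
H_toy = rng.random((4, 10))
winner = rng.integers(0, 3, size=H_toy.shape)          # owning source per coefficient
masks_toy = np.stack([winner == s for s in range(3)])
estimates = masked_magnitude_estimates(W_toy, H_toy, masks_toy)
```

Since the binary masks partition the coefficients, the source estimates add up to the full NMF reconstruction `W_toy @ H_toy`. For a stereo mixture the same operation would be repeated with each channel's coefficients.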

targetSpectrogramEstimates = getTargetSpectrogramEstimates( targetCoefficientMasks,

complexMixtureSpectrogram, W,

stereoH )figure(figsize=(12, 12))

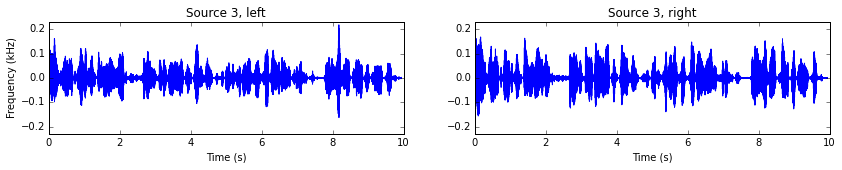

plotTargetSpectrogramEstimates(targetSpectrogramEstimates, durationInSeconds, frequenciesInkHz)Combine source estimate spectrograms with the input mixture spectrogram's phase, and perform the inverse STFT
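As an illustration of this step, the sketch below attaches the mixture phase to a magnitude estimate and resynthesizes a waveform with a weighted overlap-add inverse STFT. The `stft` and `istft` helpers are simplified stand-ins written for this example, not the gccNMF functions, and the magnitude estimate is just a scaled copy of the mixture magnitude so that the result is easy to verify.

```python
import numpy as np

def stft(x, window_size, hop_size, window):
    """Return a complex spectrogram of shape (num_freq, num_frames)."""
    num_frames = 1 + (len(x) - window_size) // hop_size
    frames = np.stack([x[i * hop_size:i * hop_size + window_size] * window
                       for i in range(num_frames)], axis=1)
    return np.fft.rfft(frames, axis=0)

def istft(spec, window_size, hop_size, window, eps=1e-12):
    """Weighted overlap-add resynthesis, normalized by the summed squared window."""
    num_frames = spec.shape[1]
    length = window_size + (num_frames - 1) * hop_size
    out = np.zeros(length)
    norm = np.zeros(length)
    frames = np.fft.irfft(spec, n=window_size, axis=0)
    for i in range(num_frames):
        start = i * hop_size
        out[start:start + window_size] += frames[:, i] * window
        norm[start:start + window_size] += window ** 2
    return out / np.maximum(norm, eps)

rng = np.random.default_rng(0)
mixture = rng.standard_normal(4096)
window = np.hanning(256)
mixture_spec = stft(mixture, 256, 64, window)

# Stand-in magnitude estimate: half of the mixture magnitude.
magnitude_estimate = 0.5 * np.abs(mixture_spec)
# Reuse the mixture phase, since the magnitude model carries no phase of its own.
complex_estimate = magnitude_estimate * np.exp(1j * np.angle(mixture_spec))
source_estimate = istft(complex_estimate, 256, 64, window)
```

Away from the signal edges, where the window overlap is incomplete, `source_estimate` equals half of the mixture, confirming that the analysis and synthesis steps are consistent with each other.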

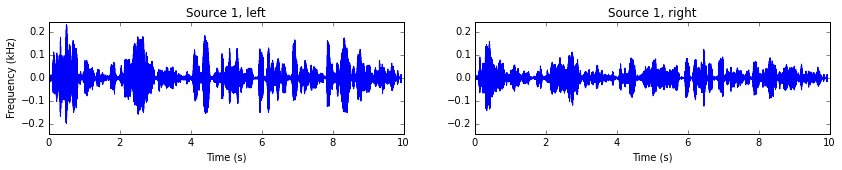

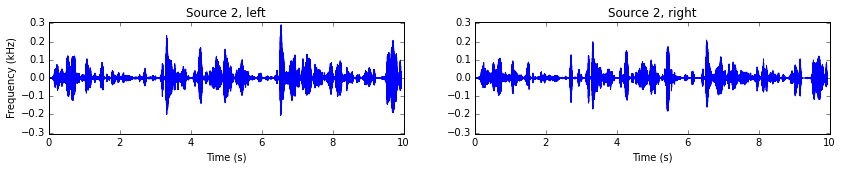

targetSignalEstimates = getTargetSignalEstimates( targetSpectrogramEstimates, windowSize,

hopSize, windowFunction )

saveTargetSignalEstimates(targetSignalEstimates, sampleRate, mixtureFileNamePrefix)

for sourceIndex in range(numSources):

    figure(figsize=(14, 2))

    fileName = getSourceEstimateFileName(mixtureFileNamePrefix, sourceIndex)

    plotTargetSignalEstimate( targetSignalEstimates[sourceIndex], sampleRate,

                              'Source %d' % (sourceIndex+1) )