A tool for ONNX models:

- Rapid shape inference.

- Model profiling.

- Constant Folding.

- Compute Graph and Shape Engine.

- Op fusion.

- Activation memory compression.

- Quantized models and sparse models are supported.

Supported Models:

- NLP: BERT, T5, GPT, LLaMA, MPT (TransformerModel)

- Diffusion: Stable Diffusion (TextEncoder, VAE, UNet)

- CV: BEVFormer, MobileNet, YOLO, ...

- Audio: sovits, LPCNet

how to use: data/Profile.md.

PyTorch usage: data/PytorchUsage.md.

TensorFlow usage: data/TensorflowUsage.md.

samples: benchmark/samples.py.
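To illustrate what shape inference does, the sketch below propagates static shapes through a tiny graph using hand-written rules for four ops. It is not onnx-tool's implementation or API: the dict-based node format and the `infer_shapes` helper are hypothetical, and the Conv rule assumes NCHW layout, a square kernel, symmetric padding and dilation 1.

```python
from typing import Dict, List, Tuple

Shape = Tuple[int, ...]


def conv2d_shape(x: Shape, out_channels: int, kernel: int, stride: int, pad: int) -> Shape:
    # NCHW input; square kernel, symmetric padding, dilation 1 (simplifying assumptions)
    n, _, h, w = x
    oh = (h + 2 * pad - kernel) // stride + 1
    ow = (w + 2 * pad - kernel) // stride + 1
    return (n, out_channels, oh, ow)


def matmul_shape(a: Shape, b: Shape) -> Shape:
    # 2-D matmul only: (M, K) x (K, N) -> (M, N)
    if a[-1] != b[0]:
        raise ValueError(f"inner dims differ: {a} x {b}")
    return (a[0], b[1])


def infer_shapes(nodes: List[dict], shapes: Dict[str, Shape]) -> Dict[str, Shape]:
    """Walk nodes in topological order and fill in each output shape."""
    shapes = dict(shapes)
    for node in nodes:
        x = shapes[node["inputs"][0]]
        if node["op"] == "Conv":
            out = conv2d_shape(x, **node["attrs"])
        elif node["op"] == "Relu":
            out = x  # elementwise: shape unchanged
        elif node["op"] == "Flatten":
            rest = 1
            for d in x[1:]:
                rest *= d
            out = (x[0], rest)  # like ONNX Flatten with axis=1
        elif node["op"] == "MatMul":
            out = matmul_shape(x, shapes[node["inputs"][1]])
        else:
            raise NotImplementedError(node["op"])
        shapes[node["output"]] = out
    return shapes


nodes = [
    {"op": "Conv", "inputs": ["x"], "output": "c1",
     "attrs": {"out_channels": 64, "kernel": 7, "stride": 2, "pad": 3}},
    {"op": "Relu", "inputs": ["c1"], "output": "r1"},
    {"op": "Flatten", "inputs": ["r1"], "output": "f1"},
    {"op": "MatMul", "inputs": ["f1", "w"], "output": "y"},
]
shapes = infer_shapes(nodes, {"x": (1, 3, 224, 224), "w": (64 * 112 * 112, 10)})
print(shapes["r1"], shapes["y"])  # (1, 64, 112, 112) (1, 10)
```

Because every op only needs its input shapes, a single pass in topological order is enough for graphs without control flow.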

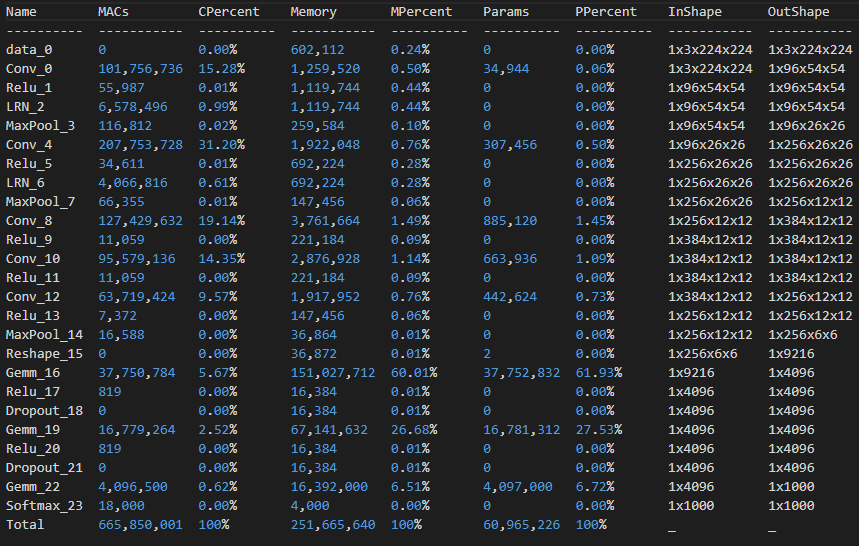

Floating-point multiply-accumulate count (1 MAC = 2 FLOPs), memory usage (in bytes), parameters (number of elements)
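The MAC counts above follow the standard convolution and matrix-multiply formulas. A minimal sketch, assuming dense ops without bias (onnx-tool's own counting rules, e.g. for bias, activations or sparse weights, may differ); the helper names are hypothetical:

```python
def conv2d_macs(in_ch, out_ch, kernel_h, kernel_w, out_h, out_w, groups=1):
    # Each output element is a dot product of length (in_ch / groups) * kh * kw
    return out_ch * out_h * out_w * (in_ch // groups) * kernel_h * kernel_w


def matmul_macs(m, k, n):
    # (M, K) x (K, N): M * N outputs, each a K-long dot product
    return m * k * n


def macs_to_flops(macs):
    # 1 MAC = 1 multiply + 1 add = 2 FLOPs
    return 2 * macs


# ResNet-style stem: 3 -> 64 channels, 7x7 kernel, 224x224 input -> 112x112 output
stem = conv2d_macs(3, 64, 7, 7, 112, 112)
print(stem, macs_to_flops(stem))  # 118013952 236027904

# MACs depend on input shape: a 768 -> 768 projection at two sequence lengths
for seq_len in (128, 384):
    print(seq_len, matmul_macs(seq_len, 768, 768))
```

The loop shows why dynamic-shape models need concrete input shapes before they can be profiled: the projection cost grows linearly with sequence length.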

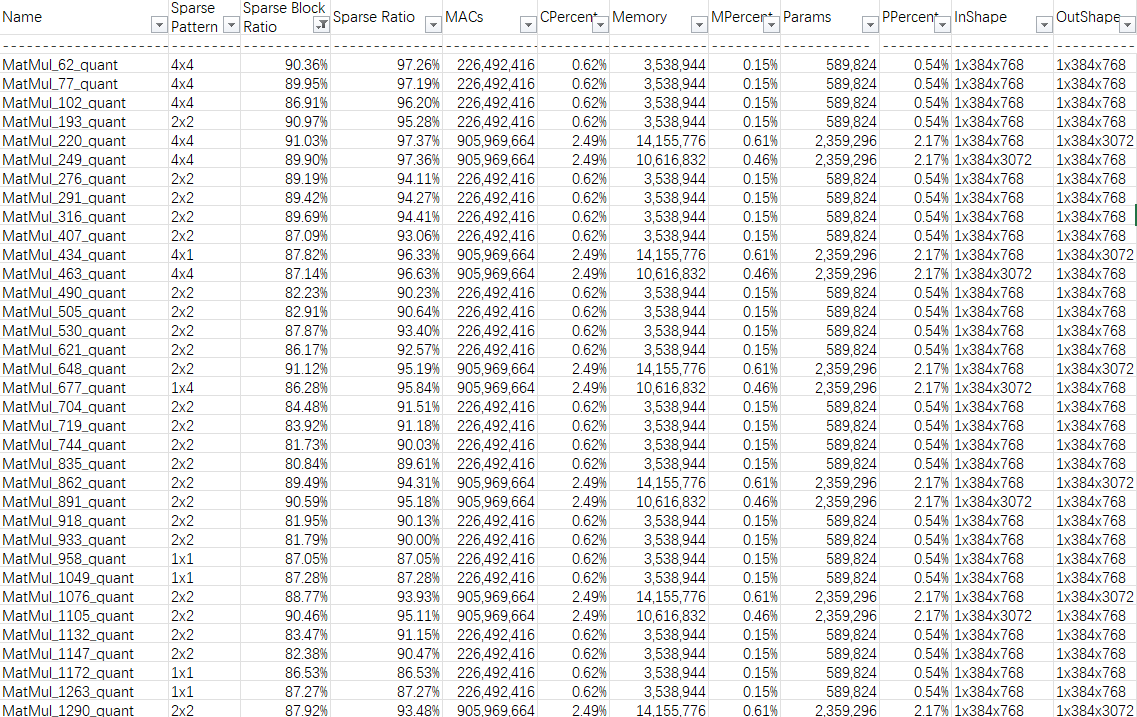

Sparse Pattern, Sparse Block Ratio, Sparse Element Ratio
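One reading of the two sparsity ratios: the element ratio is the fraction of weights that are zero, and the block ratio is the fraction of fixed-size blocks that are entirely zero. The sketch below assumes that reading and uses a 1x4 block pattern chosen only for illustration; how onnx-tool detects the sparse pattern is not shown here.

```python
from typing import List


def sparse_element_ratio(w: List[List[float]]) -> float:
    # Fraction of individual weights that are exactly zero
    total = sum(len(row) for row in w)
    zeros = sum(1 for row in w for v in row if v == 0)
    return zeros / total


def sparse_block_ratio(w: List[List[float]], bh: int, bw: int) -> float:
    """Fraction of bh x bw blocks that are all zero (dims must divide evenly)."""
    rows, cols = len(w), len(w[0])
    assert rows % bh == 0 and cols % bw == 0
    zero_blocks = total_blocks = 0
    for r in range(0, rows, bh):
        for c in range(0, cols, bw):
            block = [w[i][j] for i in range(r, r + bh) for j in range(c, c + bw)]
            total_blocks += 1
            zero_blocks += all(v == 0 for v in block)  # True counts as 1
    return zero_blocks / total_blocks


weights = [
    [0, 0, 0, 0, 1, 2, 0, 3],
    [0, 0, 0, 0, 0, 0, 0, 0],
]
print(sparse_element_ratio(weights))      # 0.8125
print(sparse_block_ratio(weights, 1, 4))  # 0.75
```

The block ratio is never higher than the element ratio here, since a block only counts once all of its elements are zero; that is what makes block-sparse kernels able to skip whole blocks.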


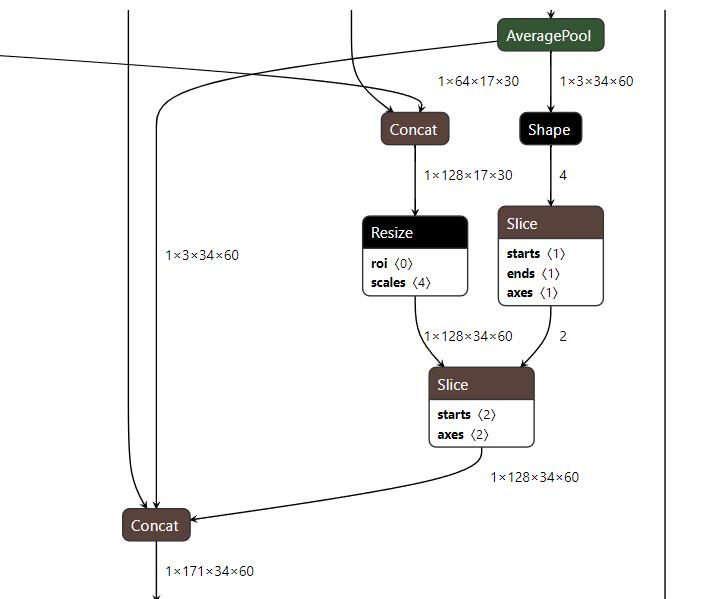

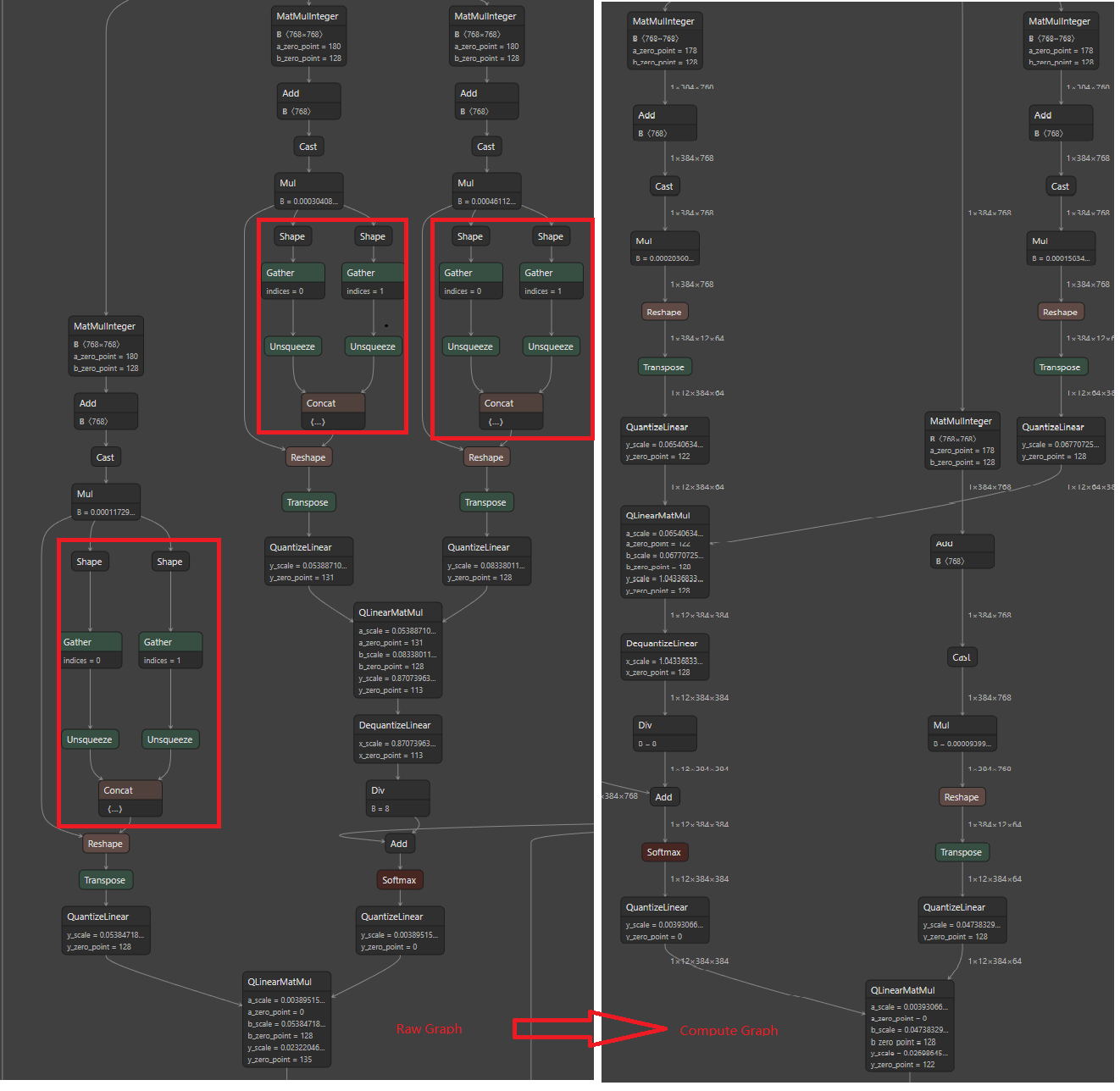

Remove the shape-calculation layers (created by ONNX export) to get a Compute Graph. Use the Shape Engine to update tensor shapes at runtime.

Samples: benchmark/shape_regress.py, benchmark/samples.py.

Integrate the Compute Graph and Shape Engine into a C++ inference engine: data/inference_engine.md
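The idea behind a Shape Engine can be sketched as follows: once the shape-calculation layers are removed, each tensor shape in the Compute Graph is kept as an expression over a few runtime variables, and updating shapes just re-evaluates those expressions. The variable names (`batch`, `seq`), the lambda-based representation and the `ToyShapeEngine` class are hypothetical, not the data structures described in data/inference_engine.md.

```python
from typing import Callable, Dict, Tuple

Shape = Tuple[int, ...]
ShapeExpr = Callable[[Dict[str, int]], Shape]


class ToyShapeEngine:
    """Hypothetical shape engine: tensor name -> shape expression over runtime variables."""

    def __init__(self, exprs: Dict[str, ShapeExpr]):
        self.exprs = exprs

    def update(self, **variables: int) -> Dict[str, Shape]:
        # Re-evaluate every tensor shape for the new input dimensions;
        # no shape-calculation ops (e.g. Shape/Gather/Concat) run at inference time.
        return {name: expr(variables) for name, expr in self.exprs.items()}


# A transformer-like block with hidden size 768 and 12 attention heads
engine = ToyShapeEngine({
    "input_ids": lambda v: (v["batch"], v["seq"]),
    "hidden": lambda v: (v["batch"], v["seq"], 768),
    "qk_scores": lambda v: (v["batch"], 12, v["seq"], v["seq"]),
})

print(engine.update(batch=1, seq=128)["qk_scores"])  # (1, 12, 128, 128)
print(engine.update(batch=4, seq=32)["hidden"])      # (4, 32, 768)
```

Keeping shapes as expressions lets an inference engine resize its buffers for a new input without re-running shape inference over the whole graph.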

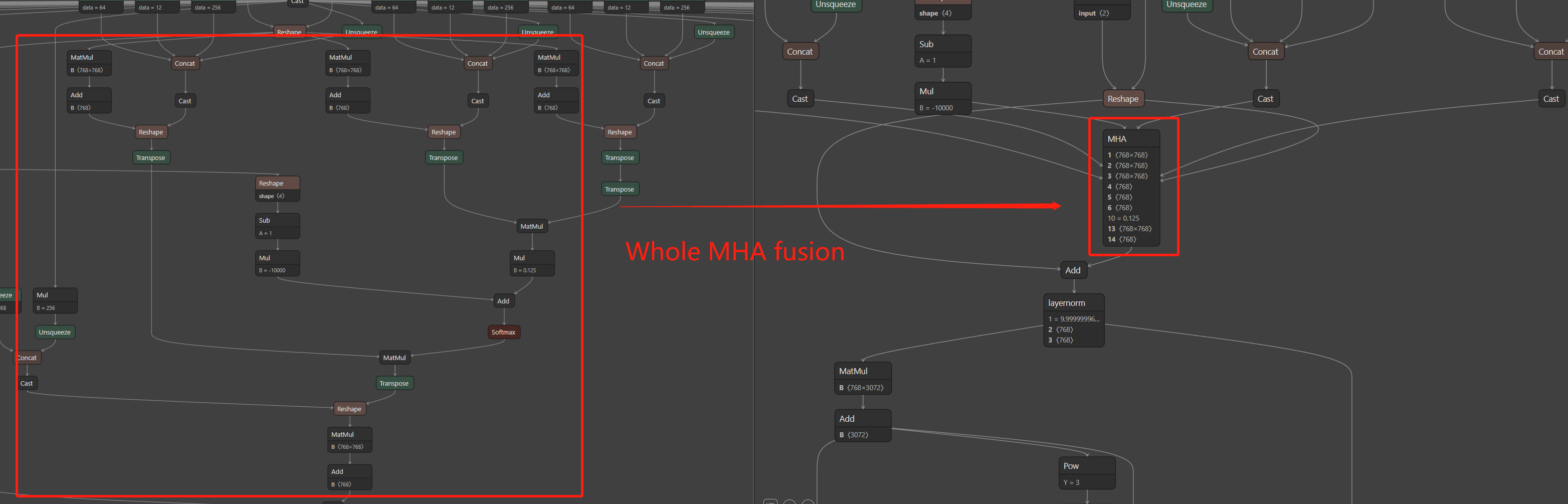

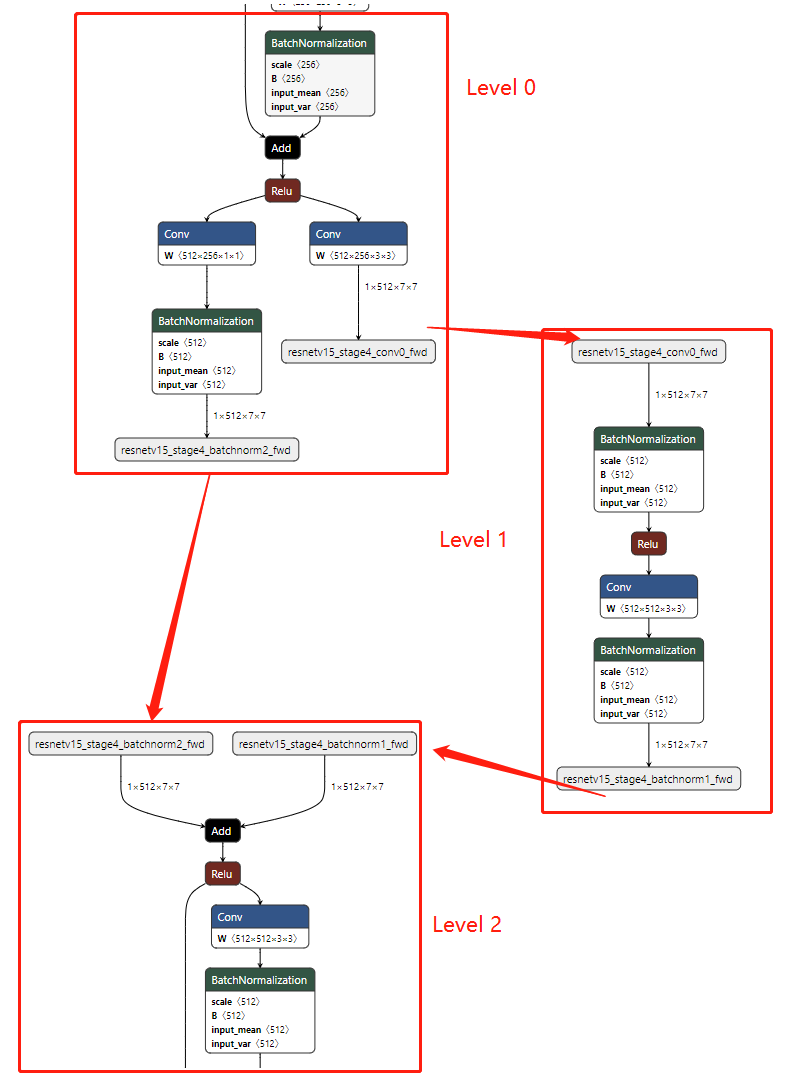

MHA and LayerNorm fusion for Transformers

ResNet18 fusion

how to use: data/Subgraph.md.

BERT samples: benchmark/samples.py.

Pattern fusion: benchmark/do_fusion.py.
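Pattern fusion finds a fixed chain of op types and replaces it with a single fused node. A minimal sketch over a hypothetical linear node list; it is not the API used by benchmark/do_fusion.py. Real graphs are DAGs, so a real pass must also check that the chain's intermediate tensors are not consumed elsewhere and carry over the later nodes' weights; this sketch only checks that each node feeds the next.

```python
from typing import Dict, List

Node = Dict[str, object]


def fuse_chain(nodes: List[Node], pattern: List[str], fused_op: str) -> List[Node]:
    """Replace each run of nodes whose op types match `pattern` with one fused node."""
    out: List[Node] = []
    i = 0
    while i < len(nodes):
        window = nodes[i:i + len(pattern)]
        types_match = [n["op"] for n in window] == pattern
        # Each node in the chain must consume the previous node's output
        connected = types_match and all(
            window[k]["output"] in window[k + 1]["inputs"] for k in range(len(window) - 1)
        )
        if connected:
            # Simplification: only the first node's inputs are kept
            out.append({"op": fused_op, "inputs": window[0]["inputs"], "output": window[-1]["output"]})
            i += len(pattern)
        else:
            out.append(nodes[i])
            i += 1
    return out


graph = [
    {"op": "Conv", "inputs": ["x", "w0"], "output": "t0"},
    {"op": "BatchNormalization", "inputs": ["t0"], "output": "t1"},
    {"op": "Relu", "inputs": ["t1"], "output": "t2"},
    {"op": "MaxPool", "inputs": ["t2"], "output": "t3"},
]
fused = fuse_chain(graph, ["Conv", "BatchNormalization", "Relu"], "ConvBnRelu")
print([n["op"] for n in fused])  # ['ConvBnRelu', 'MaxPool']
```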

Subgraph extraction helps implement model parallelism.

how to use: data/Subgraph.md.
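One way subgraph extraction supports model parallelism is cutting a topologically sorted graph into two stages and finding the tensors that must be sent from the first stage to the second. The node format, the cut index and the `split_graph` helper are hypothetical; data/Subgraph.md describes the tool's actual interface.

```python
from typing import Dict, List, Tuple


def split_graph(nodes: List[Dict], cut: int) -> Tuple[List[Dict], List[Dict], List[str]]:
    """Split into nodes[:cut] and nodes[cut:]; return both stages and the boundary tensors."""
    stage1, stage2 = nodes[:cut], nodes[cut:]
    produced_in_1 = {n["output"] for n in stage1}
    consumed_in_2 = {t for n in stage2 for t in n["inputs"]}
    # Tensors made by stage 1 that stage 2 still reads must cross the device boundary
    boundary = sorted(produced_in_1 & consumed_in_2)
    return stage1, stage2, boundary


nodes = [
    {"op": "MatMul", "inputs": ["x", "w0"], "output": "h0"},
    {"op": "Relu", "inputs": ["h0"], "output": "h1"},
    {"op": "MatMul", "inputs": ["h1", "w1"], "output": "h2"},
    {"op": "Add", "inputs": ["h2", "h0"], "output": "y"},  # residual reads h0
]
s1, s2, boundary = split_graph(nodes, 2)
print(boundary)  # ['h0', 'h1']
```

The residual connection is why two tensors cross the cut instead of one; choosing cut points with small boundaries reduces communication between devices.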

For large language models and high-resolution CV models, activation memory compression is key to saving memory.

The compression method reduces activation memory to about 5% of its native size on most models.

For example:

| Model | Native Memory Size (MB) | Compressed Memory Size (MB) | Compression Ratio (%) |
|---|---|---|---|
| StableDiffusion(VAE_encoder) | 14,245 | 540 | 3.7 |
| StableDiffusion(VAE_decoder) | 25,417 | 1,140 | 4.48 |
| StableDiffusion(Text_encoder) | 215 | 5 | 2.5 |
| StableDiffusion(UNet) | 36,135 | 2,232 | 6.2 |
| GPT2 | 40 | 2 | 6.9 |
| BERT | 2,170 | 27 | 1.25 |

code sample: benchmark/compression.py
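A common way to shrink activation memory is to let tensors whose lifetimes do not overlap share one buffer. The sketch below uses a greedy best-fit planner over a topologically ordered node list; this is an assumed technique for illustration, not necessarily the method in benchmark/compression.py, and it does not reproduce the numbers in the table. The ratio it prints is compressed size divided by native size, which is how the Compression Ratio column appears to be computed.

```python
from typing import Dict, List, Tuple


def lifetimes(nodes: List[Dict]) -> Dict[str, Tuple[int, int]]:
    """Map each activation to (step that produces it, last step that reads it)."""
    life: Dict[str, Tuple[int, int]] = {}
    for step, node in enumerate(nodes):
        life[node["output"]] = (step, step)
        for t in node["inputs"]:
            if t in life:  # graph inputs are not planned here
                start, _ = life[t]
                life[t] = (start, step)
    return life


def plan_memory(nodes: List[Dict], sizes: Dict[str, int]) -> int:
    """Greedy best-fit buffer reuse; returns the total size of all buffers."""
    life = lifetimes(nodes)
    buffers: List[List[int]] = []  # each entry: [size, busy_until_step]
    for name, (start, end) in sorted(life.items(), key=lambda kv: kv[1][0]):
        # A buffer is reusable only if its last reader ran before this producer (no in-place)
        free = [b for b in buffers if b[1] < start and b[0] >= sizes[name]]
        if free:
            buf = min(free, key=lambda b: b[0])  # smallest buffer that fits
            buf[1] = end
        else:
            buffers.append([sizes[name], end])
    return sum(b[0] for b in buffers)


chain = [{"inputs": [src], "output": dst} for src, dst in [("x", "a"), ("a", "b"), ("b", "c"), ("c", "d")]]
sizes = {t: 100 for t in "abcd"}
native = sum(sizes.values())
planned = plan_memory(chain, sizes)
print(native, planned, f"{100 * planned / native:.1f}%")  # 400 200 50.0%
```

In a plain chain, two buffers ping-ponging between producer and consumer are enough, so the saving grows with depth; larger reductions like those in the table need more than this simple reuse.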

- Export weight tensors to files

- Simplify tensor and node names by converting long names into short ones

- Remove unused tensors; models like vgg19-7.onnx list their static weight tensors as input tensors

- Set custom names and dimensions for input and output tensors, e.g. change a model from fixed input shapes to dynamic input shapes

how to use: data/Tensors.md.
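Two of the operations above, shortening names and dropping weight tensors that are also listed as graph inputs, can be sketched on a plain-Python stand-in for an ONNX graph. The dict layout, the made-up tensor names and both helpers are hypothetical; onnx-tool's actual calls are documented in data/Tensors.md.

```python
from typing import Dict, List


def drop_weight_inputs(graph: Dict) -> List[str]:
    """Remove graph inputs that are really static weights (present in initializers)."""
    removed = [n for n in graph["inputs"] if n in graph["initializers"]]
    graph["inputs"] = [n for n in graph["inputs"] if n not in graph["initializers"]]
    return removed


def simplify_names(graph: Dict) -> Dict[str, str]:
    """Rename every tensor to a short id (t0, t1, ...) in first-seen order; return old->new map."""
    mapping: Dict[str, str] = {}

    def short(name: str) -> str:
        if name not in mapping:
            mapping[name] = f"t{len(mapping)}"
        return mapping[name]

    graph["inputs"] = [short(n) for n in graph["inputs"]]
    for node in graph["nodes"]:
        node["inputs"] = [short(n) for n in node["inputs"]]
        node["output"] = short(node["output"])
    graph["initializers"] = {short(k): v for k, v in graph["initializers"].items()}
    graph["outputs"] = [short(n) for n in graph["outputs"]]
    return mapping


graph = {
    "inputs": ["model/input_image", "model/block1/conv1/weight"],
    "initializers": {"model/block1/conv1/weight": [0.1, 0.2]},
    "nodes": [{"op": "Conv", "inputs": ["model/input_image", "model/block1/conv1/weight"],
               "output": "model/block1/conv1/output"}],
    "outputs": ["model/block1/conv1/output"],
}
print(drop_weight_inputs(graph))  # ['model/block1/conv1/weight']
print(simplify_names(graph))
```

Dropping the weight inputs first matters: afterwards only real data inputs remain, so a runtime no longer expects callers to feed the weights.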

pip install onnx-tool

OR

pip install --upgrade git+https://github.com/ThanatosShinji/onnx-tool.git

python>=3.6

If pip install onnx-tool fails while installing onnx, first install a lower version of onnx, e.g. pip install onnx==1.8.1.

Then run pip install onnx-tool again.

- The Loop op is not supported

- Activation compression is not optimal

Results on ONNX Model Zoo and SOTA models

Some models have dynamic input shapes, and their MACs vary with the input shapes. The input shapes used in these results are written to data/public/config.py. These ONNX models, with the shapes of all tensors, can be downloaded from Baidu Drive (code: p91k) or Google Drive.
