Business Case: An efficient, statistically sound approach to uncovering latent market segments.

The ultimate goal is to find an easy way to classify a person using as few variables as possible. The data could be obtained through surveys, focus groups, web sources, and so on. What matters is that the data shows how people react to certain stimuli. We use that data to identify groups in the marketplace.

The intended end state: two major variables are enough to classify a person into a cluster, and the other 34 variables can then be used to describe that cluster.

Interpreting consumer product market research data can be a daunting task. Researchers may collect user input on dozens of variables, and it is then up to analysts to interpret and generalize those results into meaningful market segments and actionable insights. The goal of this project has been to create a repeatable, efficient, and technically sound approach to uncovering latent market segments from market research data. It also aims to identify the variables that are most influential in defining those segments, so that marketing and product development teams can reach their target audiences more efficiently.

The final deliverable of this project is a web application where end-users can upload their datasets for analysis and receive clustered results along with a ranked list of the most influential variables. The latest classification model achieved 90.57% accuracy, compared to just 61.94% accuracy through random classification.

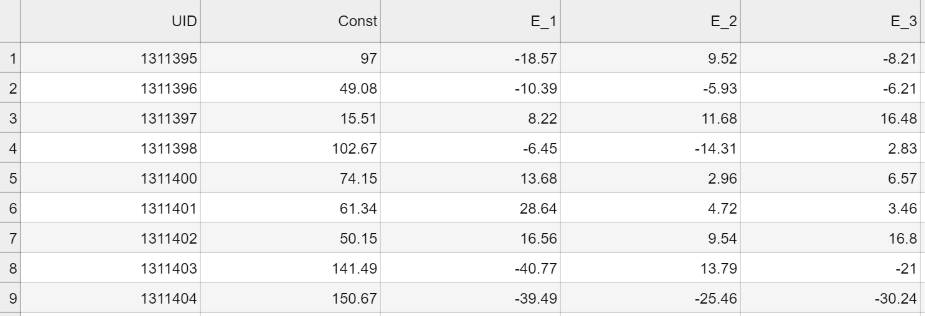

The data collected in these experiments follows a specific, consistent format: an anonymized participant ID, a baseline constant, and one column for each of the tested variables (the stimuli).

The analysis for this tool consists of three major components: Factor Analysis, Clustering, and Classification.

The analysis script begins with dimension reduction through factor analysis. The purpose of the factor analysis, as opposed to just clustering on the raw customer data, is to reduce noise in the initial dataset and hopefully obtain factors that are more generalizable to future, unseen data.

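The variables are all on a similar scale, so the script uses the covariance matrix, rather than the correlation matrix, to identify principal components. No standardization step is needed because no single variable dominates the variance purely through its units.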
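As a rough illustration of covariance-based component extraction, the sketch below uses scikit-learn's `PCA`, which centers the data but does not standardize it, so its components correspond to the eigenvectors of the covariance matrix. The synthetic data, the number of components, and all variable names are assumptions for illustration and are not taken from the project's script.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)

# Hypothetical stand-in for survey responses: 200 participants x 6 stimuli,
# all on a shared scale (not the project's real data).
X = rng.normal(size=(200, 6)) @ rng.normal(size=(6, 6))

# PCA centers the columns but does not rescale them, so the decomposition
# is driven by the covariance matrix rather than the correlation matrix.
pca = PCA(n_components=3)  # 3 components is an arbitrary choice here
scores = pca.fit_transform(X)

# Cross-check: the explained variances should match the largest
# eigenvalues of the sample covariance matrix.
cov = np.cov(X, rowvar=False)
eigvals = np.sort(np.linalg.eigvalsh(cov))[::-1]

print(pca.explained_variance_)
print(eigvals[:3])
```

If the variables were on very different scales, standardizing first (equivalent to using the correlation matrix) would usually be the safer choice.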

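The clustering portion of the latest script creates and compares clustering solutions for 2 to 7 clusters using k-Means, k-Medoids, and hierarchical algorithms. Solutions are compared using the silhouette score. For each point, the score takes the mean distance to the other points in its own cluster (a, the intra-cluster distance) and the mean distance to the points in the nearest neighboring cluster (b), and computes (b - a) / max(a, b). The overall score is the average over all points and ranges from -1 to 1, with higher values indicating tighter, better-separated clusters (sklearn silhouette score documentation).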

The clustering solution with the highest silhouette score is selected as optimal, and its cluster assignments serve as the labels for the training, validation, and test sets.
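The selection loop described above might look roughly like the following sketch. It covers only k-Means and hierarchical (agglomerative) clustering, because k-Medoids is not part of core scikit-learn and would need an additional package. The synthetic data, the function name `best_clustering`, and the hyperparameters are assumptions for illustration.

```python
from sklearn.cluster import AgglomerativeClustering, KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic stand-in for the factor scores (not the project's data).
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.6, random_state=0)


def best_clustering(X, k_values=range(2, 8)):
    """Return the algorithm/k combination with the highest silhouette score."""
    best = None
    for k in k_values:
        candidates = {
            "kmeans": KMeans(n_clusters=k, n_init=10, random_state=0),
            "hierarchical": AgglomerativeClustering(n_clusters=k),
        }
        for name, model in candidates.items():
            labels = model.fit_predict(X)
            score = silhouette_score(X, labels)
            if best is None or score > best["score"]:
                best = {"algorithm": name, "k": k, "score": score, "labels": labels}
    return best


best = best_clustering(X)
print(best["algorithm"], best["k"], round(best["score"], 3))
```

The returned `labels` array is what would then be attached to each participant as the target for the classification step.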

In a business setting, the optimal clustering solution would need to be validated as useful before being used to classify new customer data. For the sake of expediency, this project assumes that the clusters are representative of customer groups.

After splitting the clustered data into training, validation, and test sets, classifiers are trained using Random Forest, gradient boosted trees, support vector classification, and k-Nearest Neighbors. Accuracy is used to select the optimal classification model. We selected accuracy as our primary metric instead of precision and recall because there are no "false positive" or "false negative" outcomes, and misclassification of any one cluster does not cost any more or less than any other cluster. Another benefit of using accuracy is that it is easily understood by end-users.
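A minimal sketch of this model comparison is shown below. The 60/20/20 split, the default hyperparameters, and the synthetic dataset are assumptions, since the write-up does not specify them. Here the model is chosen on validation accuracy and the test set is scored once at the end.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Synthetic stand-in for the clustered data: y plays the role of cluster labels.
X, y = make_classification(
    n_samples=600, n_features=12, n_informative=6, n_classes=3, random_state=0
)

# Assumed 60/20/20 split into training, validation, and test sets.
X_train, X_rest, y_train, y_rest = train_test_split(
    X, y, test_size=0.4, stratify=y, random_state=0
)
X_val, X_test, y_val, y_test = train_test_split(
    X_rest, y_rest, test_size=0.5, stratify=y_rest, random_state=0
)

models = {
    "random_forest": RandomForestClassifier(random_state=0),
    "gradient_boosting": GradientBoostingClassifier(random_state=0),
    "svc": SVC(),
    "knn": KNeighborsClassifier(),
}

# Fit each candidate and record its accuracy on the validation set.
val_accuracy = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    val_accuracy[name] = accuracy_score(y_val, model.predict(X_val))

# Pick the best model on validation accuracy, then score it once on the test set.
best_name = max(val_accuracy, key=val_accuracy.get)
test_accuracy = accuracy_score(y_test, models[best_name].predict(X_test))
print(best_name, round(test_accuracy, 4))
```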

Individual variable importance is obtained by averaging the feature importance outputs of the Random Forest and gradient boosted trees algorithms. In the initial analysis, 7 out of the top 10 variables appeared in both lists.
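The averaging step could be sketched as follows, assuming the impurity-based `feature_importances_` attribute that scikit-learn exposes on both ensemble types. The dataset, the `stimulus_i` column names, and the top-5 overlap check are hypothetical and only illustrate the idea.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier

# Synthetic stand-in for the labeled survey data.
X, y = make_classification(
    n_samples=400, n_features=10, n_informative=4, n_redundant=0, random_state=0
)
feature_names = [f"stimulus_{i}" for i in range(X.shape[1])]  # hypothetical names

rf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)
gb = GradientBoostingClassifier(random_state=0).fit(X, y)

# Each importance vector sums to 1, so a simple mean keeps them comparable.
mean_importance = (rf.feature_importances_ + gb.feature_importances_) / 2

# Rank variables from most to least influential.
ranking = sorted(zip(feature_names, mean_importance), key=lambda p: p[1], reverse=True)

# Count how many variables appear in both models' top-5 lists.
top_rf = set(np.argsort(rf.feature_importances_)[::-1][:5])
top_gb = set(np.argsort(gb.feature_importances_)[::-1][:5])
overlap = len(top_rf & top_gb)

for name, importance in ranking[:5]:
    print(f"{name}: {importance:.3f}")
print("shared top-5 variables:", overlap)
```

A high overlap between the two lists, like the 7 out of 10 reported above, suggests the ranking does not depend heavily on the choice of algorithm.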