Install AdalFlow with pip:

```bash
pip install adalflow
```

Please refer to the full installation guide for more details, and see the package changelog for release history.

- Try the Building Quickstart in Colab to see how AdalFlow can build task pipelines, including chatbots, RAG, agents, and structured output.

- Try the Optimization Quickstart to see how AdalFlow can optimize task pipelines.

[Jan 2025] Auto-Differentiating Any LLM Workflow: A Farewell to Manual Prompting

- LLM Applications as auto-differentiation graphs

- More token-efficient than DSPy, with better performance
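To make "LLM applications as auto-differentiation graphs" concrete, here is a minimal sketch in the spirit of micrograd. Each node holds text and remembers its predecessors, and a backward pass visits nodes in reverse topological order so that every node has collected all feedback from downstream before passing its own feedback (a "textual gradient") to its predecessors. The `TextNode` class and the `feedback_fn` callback are hypothetical illustrations, not AdalFlow's API; in a real system an LLM backward engine would write the feedback instead of the toy function used here.

```python
from typing import Callable, List, Optional


class TextNode:
    """A graph node whose value is text (hypothetical sketch, not AdalFlow's API)."""

    def __init__(self, value: str, name: str, parents: Optional[List["TextNode"]] = None):
        self.value = value
        self.name = name
        self.parents = parents or []
        self.grads: List[str] = []  # textual feedback received from downstream nodes

    def backward(self, loss_feedback: str,
                 feedback_fn: Callable[["TextNode", "TextNode", str], str]) -> None:
        # Topological order with parents before children, as micrograd builds it.
        order: List[TextNode] = []
        seen = set()

        def visit(node: "TextNode") -> None:
            if id(node) in seen:
                return
            seen.add(id(node))
            for parent in node.parents:
                visit(parent)
            order.append(node)

        visit(self)
        self.grads.append(loss_feedback)
        # Reverse order: every child is processed before any of its parents.
        for node in reversed(order):
            combined = " | ".join(node.grads)
            for parent in node.parents:
                parent.grads.append(feedback_fn(node, parent, combined))


# Stand-in for an LLM "backward engine": it only records who passed feedback to whom.
def toy_feedback(child: TextNode, parent: TextNode, child_feedback: str) -> str:
    return f"{parent.name} -> {child.name}: {child_feedback}"


prompt = TextNode("Answer briefly.", "prompt")
question = TextNode("What is 2+3?", "question")
answer = TextNode("4", "answer", parents=[prompt, question])
answer.backward("answer is wrong, expected 5", toy_feedback)
print(prompt.grads)  # ['prompt -> answer: answer is wrong, expected 5']
```

An optimizer would then read the feedback accumulated on trainable nodes (here `prompt`) and propose a revised value.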

We work closely with the VITA Group at the University of Texas at Austin, under the leadership of Dr. Atlas Wang, and with Dr. Junyuan Hong, who provides valuable support in driving project initiatives.

For collaboration, contact Li Yin.

- Say goodbye to manual prompting: AdalFlow provides a unified auto-differentiative framework for both zero-shot optimization and few-shot prompt optimization. Our research,

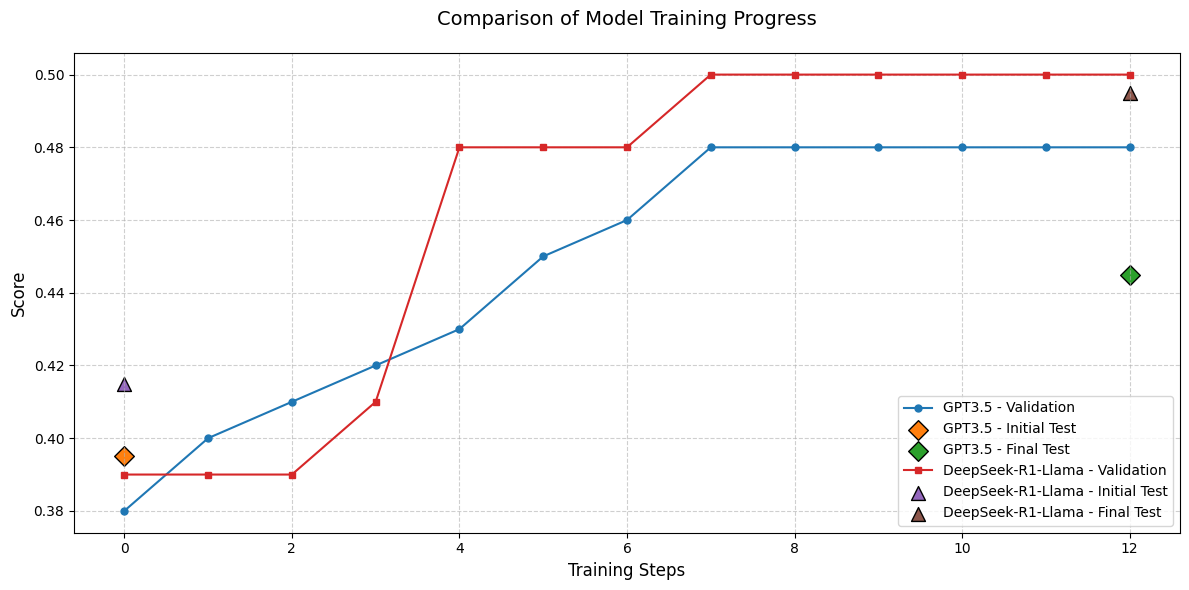

LLM-AutoDiffandLearn-to-Reason Few-shot In Context Learning, achieve the highest accuracy among all auto-prompt optimization libraries. - Switch your LLM app to any model via a config: AdalFlow provides

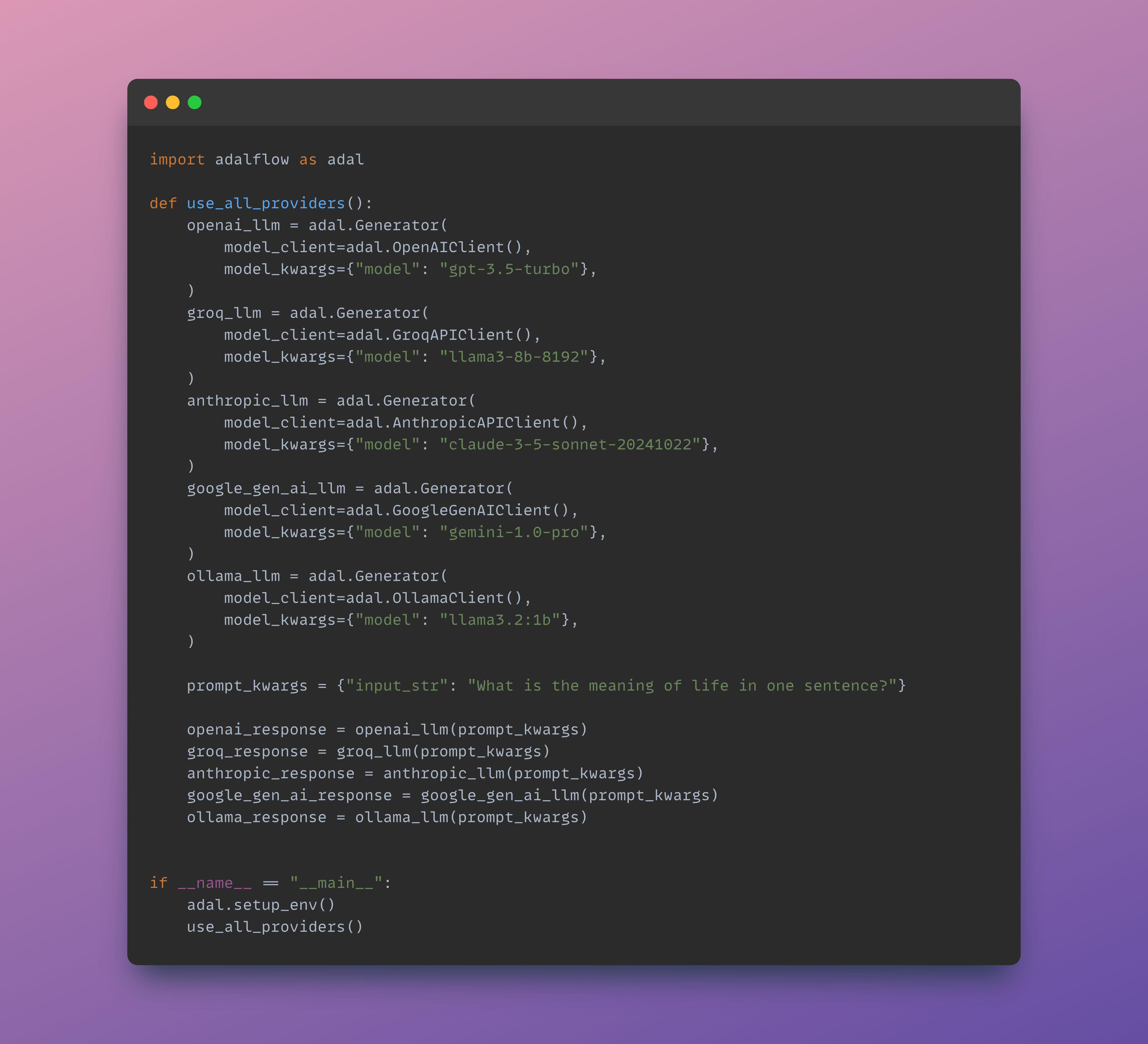

Model-agnosticbuilding blocks for LLM task pipelines, ranging from RAG, Agents to classical NLP tasks.

After training, DeepSeek R1 LLaMA70B (R1-distilled) even outperforms GPT-o1 used without training.

Further reading: Use Cases

LLMs are like water; AdalFlow helps you quickly shape them into any application, from GenAI applications such as chatbots, translation, summarization, code generation, RAG, and autonomous agents, to classical NLP tasks like text classification and named entity recognition.

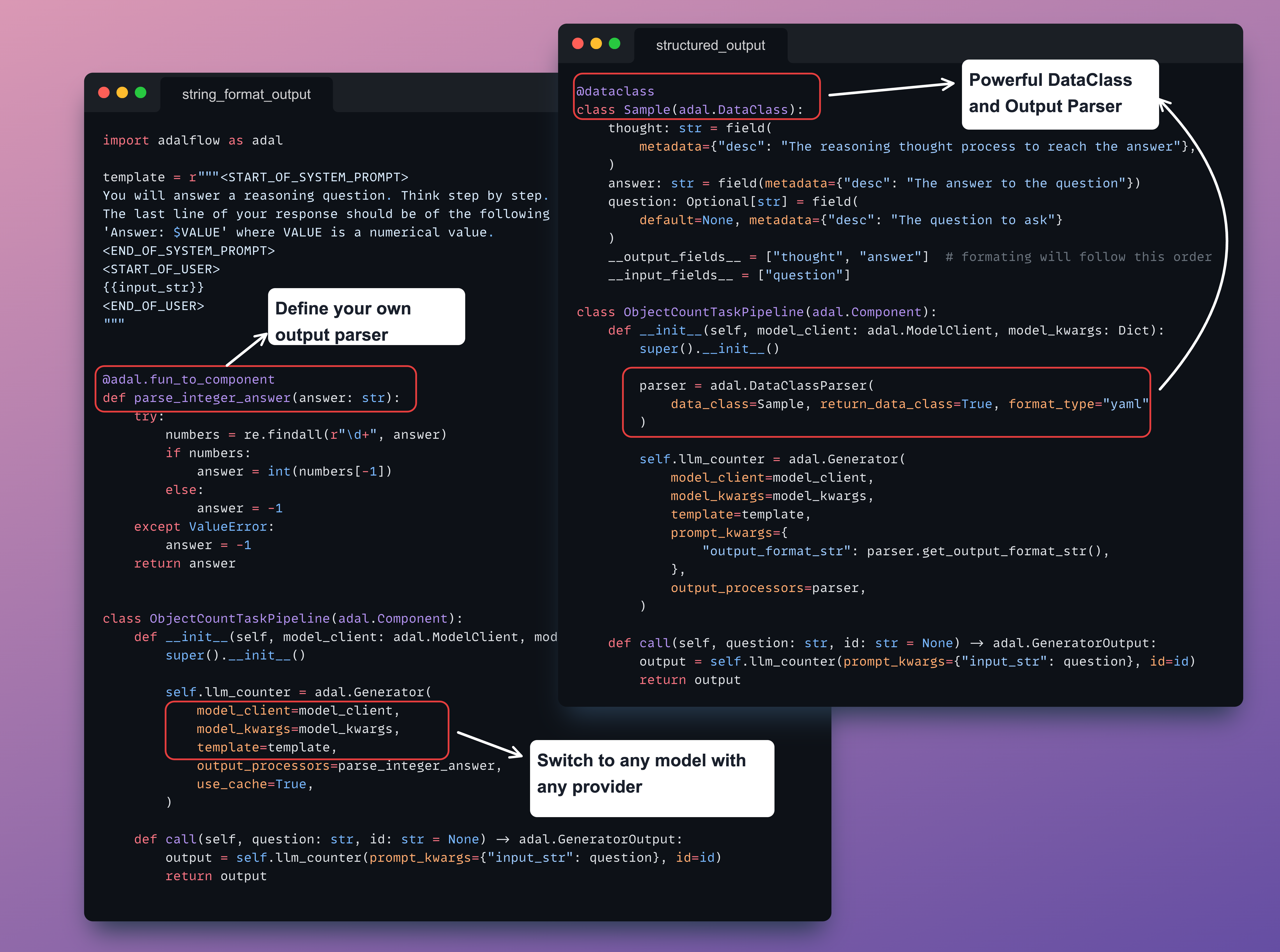

AdalFlow has two fundamental but powerful base classes: Component for the pipeline and DataClass for data interaction with LLMs.

The result is a library with minimal abstraction, providing developers with maximum customizability.

You have full control over the prompt template, the model you use, and the output parsing for your task pipeline.
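The split described above (a pipeline piece that owns its prompt template, model, and output parsing, plus a data class describing the structured output) can be sketched with the standard library alone. `QAOutput`, `QAPipeline`, and `fake_model` are hypothetical stand-ins written for this illustration; they are not AdalFlow's Component or DataClass, whose real signatures are in the API reference.

```python
import json
from dataclasses import dataclass, fields
from string import Template
from typing import Callable


@dataclass
class QAOutput:
    """Structured output we expect back from the model (hypothetical)."""
    answer: str
    confidence: float

    @classmethod
    def schema(cls) -> str:
        # Describe the expected JSON keys so the prompt can tell the model what to emit.
        return ", ".join(f"{f.name}: {f.type.__name__}" for f in fields(cls))


class QAPipeline:
    """A pipeline step that owns its template, model, and parser (hypothetical)."""

    TEMPLATE = Template(
        "Answer the question. Respond with JSON containing $schema.\n"
        "Question: $question"
    )

    def __init__(self, model: Callable[[str], str]):
        self.model = model  # any callable mapping a prompt string to a completion string

    def build_prompt(self, question: str) -> str:
        return self.TEMPLATE.substitute(schema=QAOutput.schema(), question=question)

    def parse(self, completion: str) -> QAOutput:
        data = json.loads(completion)
        return QAOutput(answer=str(data["answer"]), confidence=float(data["confidence"]))

    def __call__(self, question: str) -> QAOutput:
        return self.parse(self.model(self.build_prompt(question)))


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM client so the sketch runs offline.
    return '{"answer": "Paris", "confidence": 0.9}'


pipeline = QAPipeline(fake_model)
print(pipeline("What is the capital of France?"))  # QAOutput(answer='Paris', confidence=0.9)
```

Because the template, the model callable, and the parser are separate attributes, each can be swapped without touching the other two.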

Many providers and models are accessible via the same interface.
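Switching models via a config comes down to keeping the call site fixed and choosing the client from data. The sketch below shows that pattern with a small registry. The provider names, `EchoClient`, `UpperClient`, and the `provider`/`model` config keys are hypothetical placeholders, not AdalFlow's model clients or its config format.

```python
from typing import Callable, Dict, Protocol


class ModelClient(Protocol):
    def complete(self, prompt: str, model: str) -> str: ...


class EchoClient:
    """Placeholder provider A: echoes the prompt."""

    def complete(self, prompt: str, model: str) -> str:
        return f"[{model}] {prompt}"


class UpperClient:
    """Placeholder provider B: upper-cases the prompt."""

    def complete(self, prompt: str, model: str) -> str:
        return f"[{model}] {prompt.upper()}"


# Registry mapping a provider name from the config to a client factory.
CLIENTS: Dict[str, Callable[[], ModelClient]] = {
    "provider_a": EchoClient,
    "provider_b": UpperClient,
}


def generate(prompt: str, config: Dict[str, str]) -> str:
    # Same call for every provider; only the config dictionary changes.
    client = CLIENTS[config["provider"]]()
    return client.complete(prompt, model=config["model"])


print(generate("hello", {"provider": "provider_a", "model": "model-x"}))  # [model-x] hello
print(generate("hello", {"provider": "provider_b", "model": "model-y"}))  # [model-y] HELLO
```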

Further reading: How We Started, Design Philosophy and Class hierarchy.

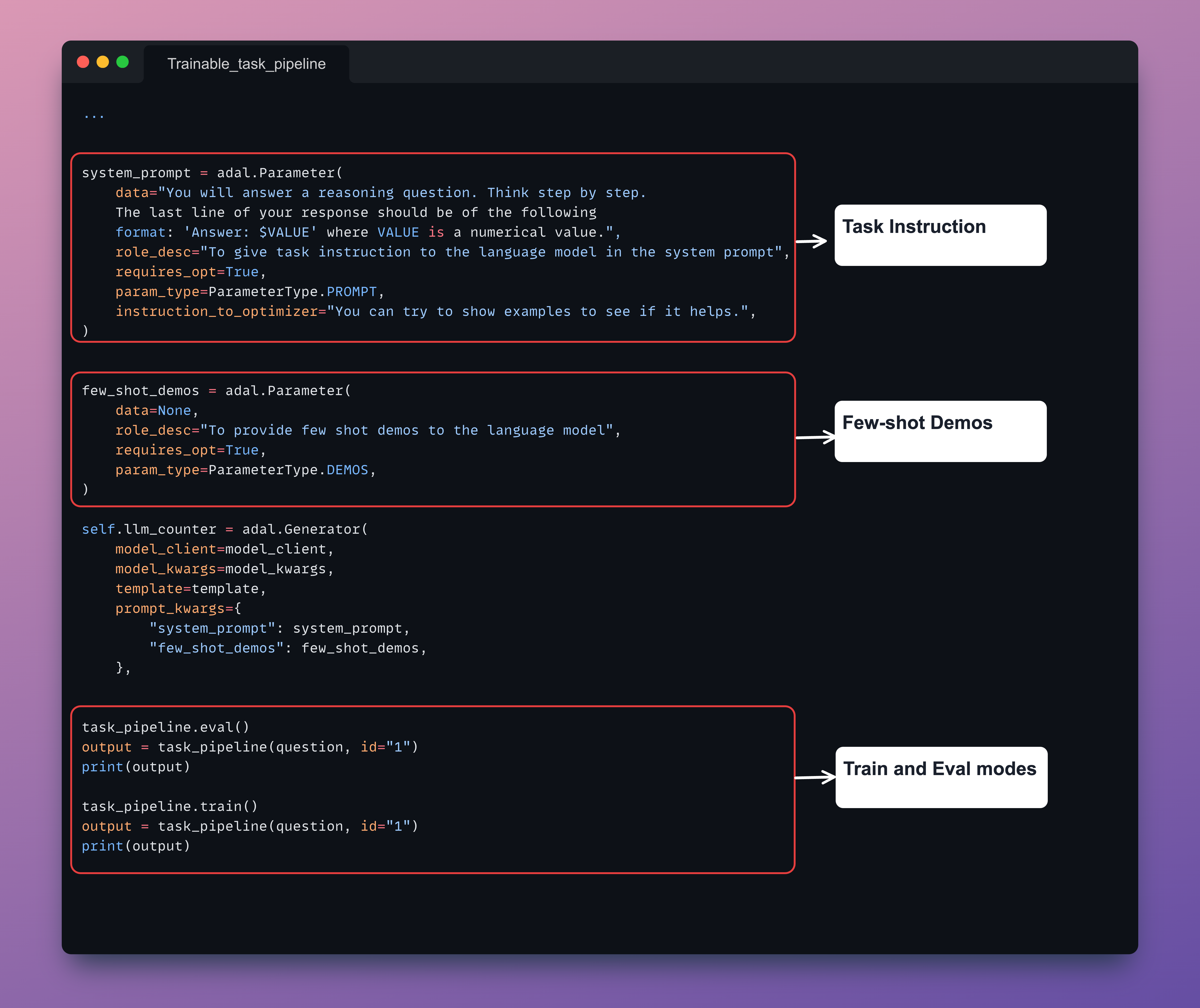

To optimize your pipeline, simply define a Parameter and pass it to AdalFlow's Generator.

You use PROMPT for prompt tuning via textual gradient descent and DEMO for few-shot demonstrations.
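One way to picture the two parameter types is as two slots in the prompt that a trainer may rewrite: the instruction text (PROMPT) and the list of worked examples (DEMO). The sketch below is hypothetical: `ParamType`, `Param`, `render`, and `bootstrap_demos` are our own names, not AdalFlow's Parameter API. It fills the DEMO slot with a simple bootstrap rule, keeping training examples on which a teacher model answered correctly, loosely in the spirit of the bootstrap few-shot optimizer the acknowledgments credit to DSPy.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Tuple


class ParamType(Enum):
    PROMPT = "prompt"  # instruction text, tuned by a text optimizer
    DEMO = "demo"      # few-shot examples, filled by a demo optimizer


@dataclass
class Param:
    data: object
    param_type: ParamType
    requires_opt: bool = True


def render(instruction: Param, demos: Param, question: str) -> str:
    # Assemble the final prompt from both trainable slots plus the input.
    shots = "\n".join(f"Q: {q}\nA: {a}" for q, a in demos.data)
    return f"{instruction.data}\n{shots}\nQ: {question}\nA:"


def bootstrap_demos(train: List[Tuple[str, str]],
                    teacher: Callable[[str], str], k: int) -> List[Tuple[str, str]]:
    # Keep up to k examples where the teacher's answer matches the label.
    kept = []
    for question, label in train:
        if teacher(question) == label:
            kept.append((question, label))
        if len(kept) == k:
            break
    return kept


# Toy teacher: a lookup table with one wrong answer ("2+2" -> "5").
teacher_answers = {"1+1": "2", "2+2": "5", "3+4": "7"}
train = [("1+1", "2"), ("2+2", "4"), ("3+4", "7")]

instruction = Param("Answer the arithmetic question.", ParamType.PROMPT)
demos = Param(bootstrap_demos(train, teacher_answers.__getitem__, k=2), ParamType.DEMO)
print(demos.data)  # [('1+1', '2'), ('3+4', '7')]
print(render(instruction, demos, "5+5"))
```

A text optimizer would rewrite `instruction.data` in the same way, using feedback instead of a correctness filter.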

We let you diagnose, visualize, debug, and train your pipeline.


AdalComponent acts as the 'interpreter' between the task pipeline and the trainer. It defines the training and validation steps, optimizers, evaluators, loss functions, the backward engine for textual gradients or for tracing demonstrations, and the teacher generator.
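The "interpreter" role follows the PyTorch Lightning pattern: the trainer only calls hooks on an adapter and never reaches into the pipeline itself. The sketch below is hypothetical (`PipelineAdapter` and `Trainer` are illustrative names, not AdalFlow's AdalComponent or Trainer) and covers only the evaluator and validation-step part; the loss, backward engine, and teacher generator are omitted, and a fixed list of candidate instructions stands in for proposals from a text optimizer.

```python
from typing import Callable, List, Sequence, Tuple

Example = Tuple[str, str]  # (input, expected output)


class PipelineAdapter:
    """Hypothetical 'interpreter' between a task pipeline and a trainer."""

    def __init__(self, pipeline: Callable[[str, str], str]):
        self.pipeline = pipeline  # (instruction, input) -> prediction

    def evaluate(self, prediction: str, target: str) -> float:
        # Evaluator: exact match scores 1.0, anything else 0.0.
        return 1.0 if prediction.strip() == target.strip() else 0.0

    def val_step(self, instruction: str, batch: Sequence[Example]) -> float:
        # Average evaluator score over a validation batch.
        scores = [self.evaluate(self.pipeline(instruction, x), y) for x, y in batch]
        return sum(scores) / len(scores)


class Trainer:
    """Hypothetical trainer: scores candidate instructions, keeps the best."""

    def __init__(self, adapter: PipelineAdapter):
        self.adapter = adapter

    def fit(self, start: str, candidates: List[str],
            val: Sequence[Example]) -> Tuple[str, float]:
        best, best_score = start, self.adapter.val_step(start, val)
        for candidate in candidates:  # stand-in for optimizer proposals
            score = self.adapter.val_step(candidate, val)
            if score > best_score:  # accept only validation improvements
                best, best_score = candidate, score
        return best, best_score


def toy_pipeline(instruction: str, text: str) -> str:
    # Toy "LLM": upper-cases its input only when the instruction asks for it.
    return text.upper() if "uppercase" in instruction.lower() else text


trainer = Trainer(PipelineAdapter(toy_pipeline))
val = [("a", "A"), ("b", "B")]
print(trainer.fit("Repeat the input.", ["Return the input in UPPERCASE."], val))
# ('Return the input in UPPERCASE.', 1.0)
```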

AdalFlow's full documentation is available at adalflow.sylph.ai:

- How We Started

- Introduction

- Full installation guide

- Design philosophy

- Class hierarchy

- Tutorials

- Supported Models

- Supported Retrievers

- API reference

AdalFlow is named in honor of Ada Lovelace, the pioneering female mathematician who first recognized that machines could go beyond mere calculations. As a team led by a female founder, we aim to inspire more women to pursue careers in AI.

AdalFlow is a community-driven project, and we welcome everyone to join us in building the future of LLM applications.

Join our Discord community to ask questions, share your projects, and get updates on AdalFlow.

To contribute, please read our Contributor Guide.

Many existing works greatly inspired the AdalFlow library! Here is a non-exhaustive list:

- 📚 PyTorch for the design philosophy and design patterns of Component, Parameter, and Sequential.

- 📚 Micrograd: a tiny autograd engine, for our auto-differentiative architecture.

- 📚 Text-Grad for the Textual Gradient Descent text optimizer.

- 📚 DSPy for inspiring the __{input/output}__fields in our DataClass and the bootstrap few-shot optimizer.

- 📚 OPRO for adding past text instructions along with their accuracy in the text optimizer.

- 📚 PyTorch Lightning for the AdalComponent and Trainer.