+

+ +

+## Contact

+

+Add WeChat to join the group: foolcage (passphrase: zvt)

+

+ +

+------

+WeChat official account:

+

+ +

+Zhihu column:

+https://zhuanlan.zhihu.com/automoney

+

+## Thanks

+

diff --git a/README-en.md b/README-en.md

deleted file mode 100644

index 92622de3..00000000

--- a/README-en.md

+++ /dev/null

@@ -1,155 +0,0 @@

-[](https://pypi.org/project/zvt/)

-[](https://pypi.org/project/zvt/)

-[](https://pypi.org/project/zvt/)

-[](https://travis-ci.org/zvtvz/zvt)

-[](https://codecov.io/github/zvtvz/zvt)

-[](https://pepy.tech/project/zvt)

-

-**Read this in other languages: [English](README-en.md).**

-

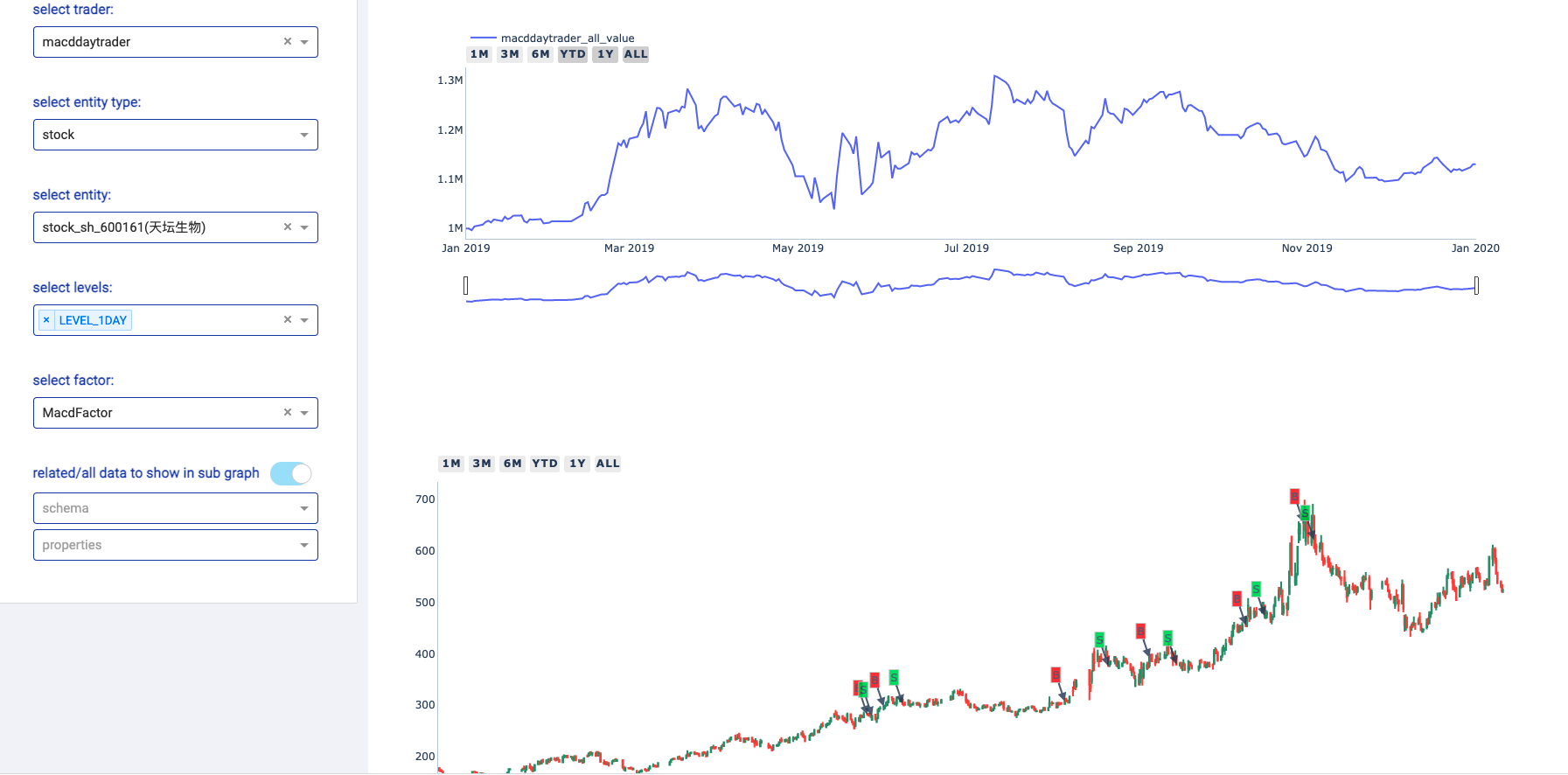

-ZVT is a quant trading platform written after rethinking trading, based on [fooltrader](https://github.com/foolcage/fooltrader).

-It includes a scalable data recorder, API, factor calculation, stock picking, backtesting, and a unified visualization layer,

-and focuses on **low-frequency**, **multi-level**, **multi-target** full-market analysis and trading.

-

-## 🔖 Usage examples

-

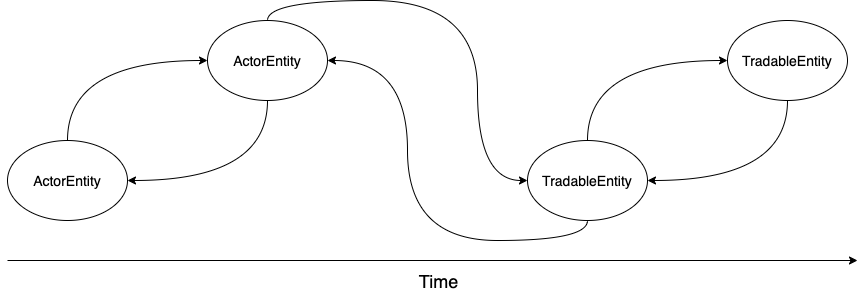

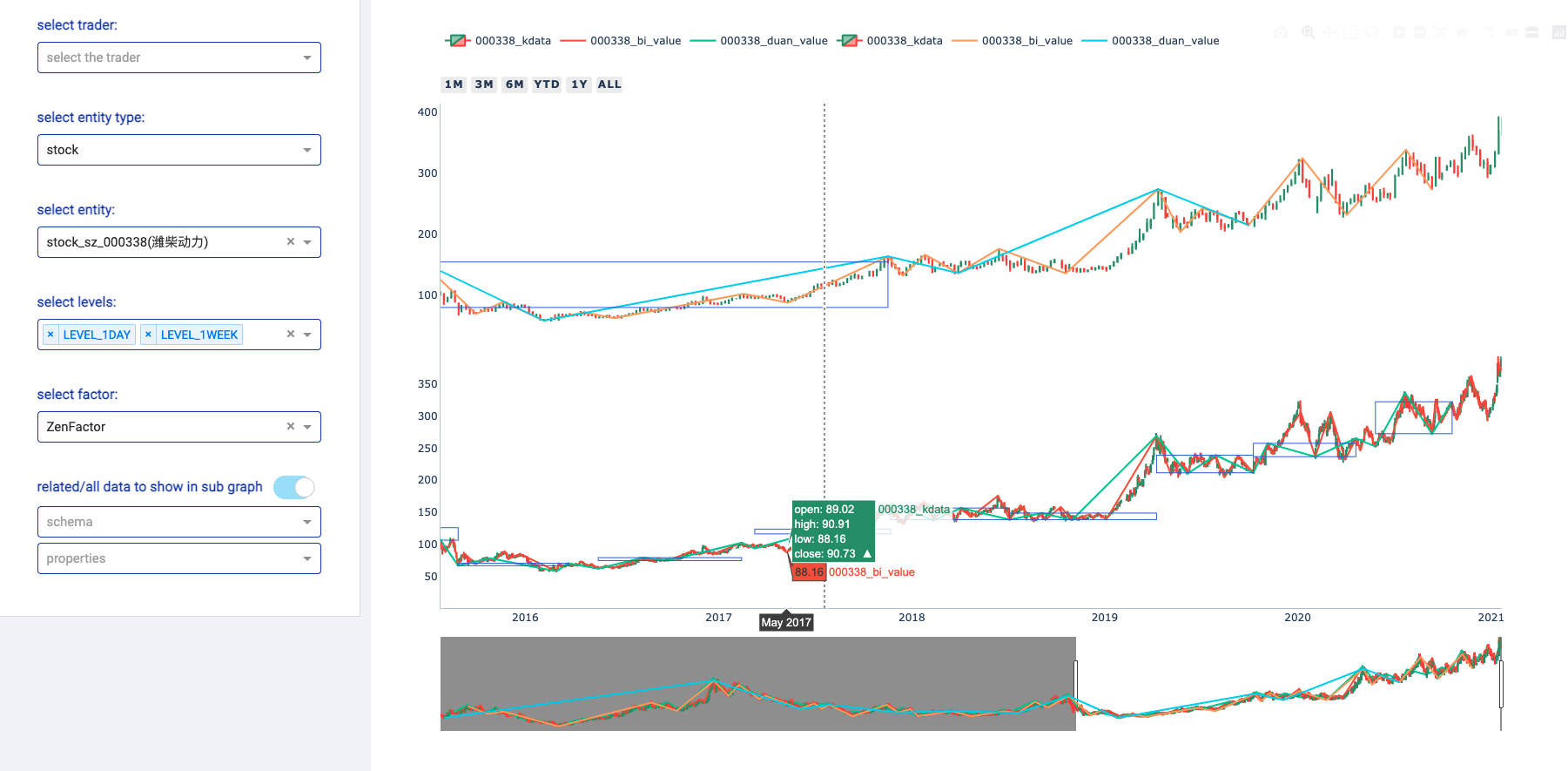

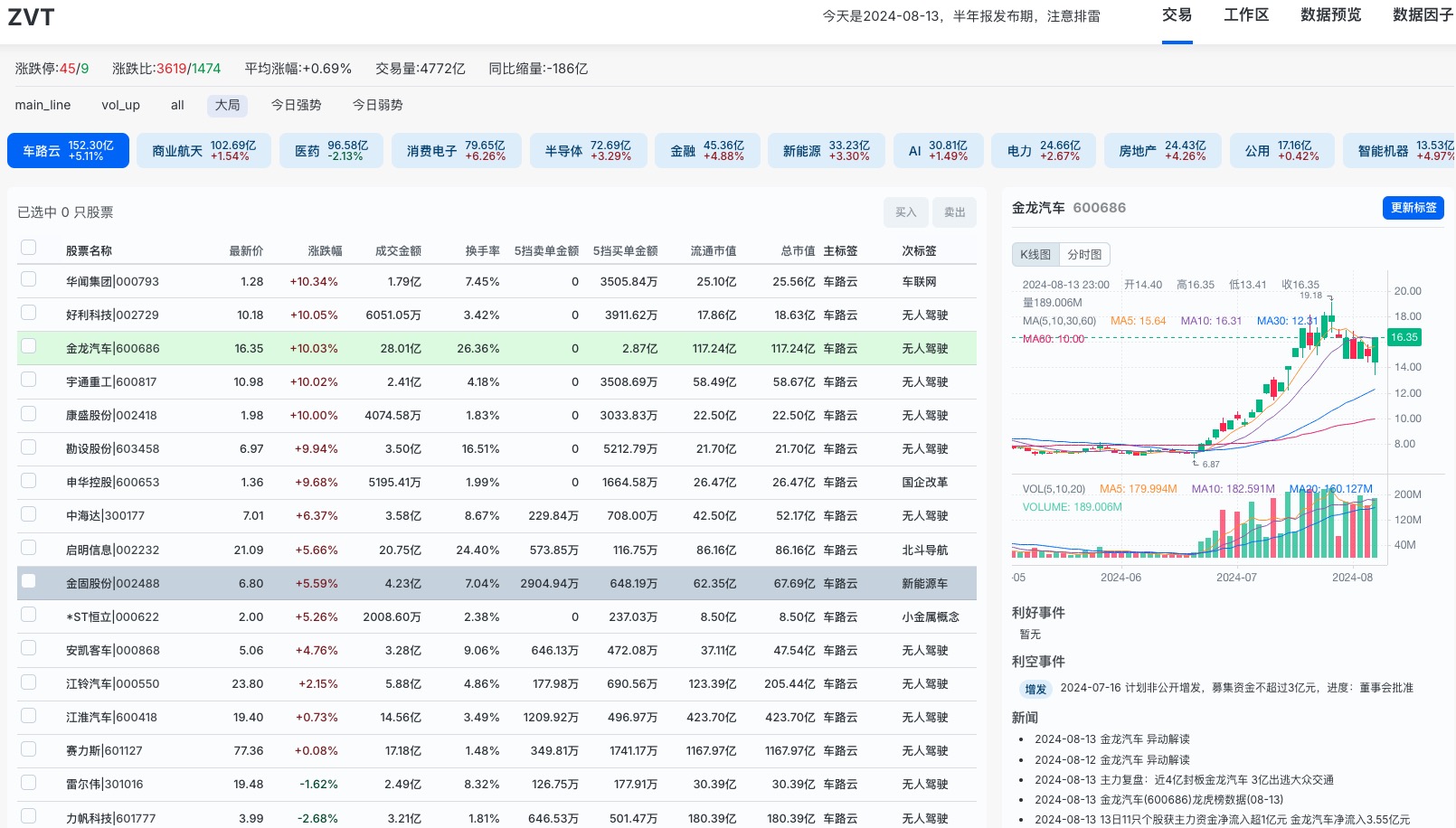

-### Sector fund flow analysis

-

-

- -

-

-## 💡 contribution

-

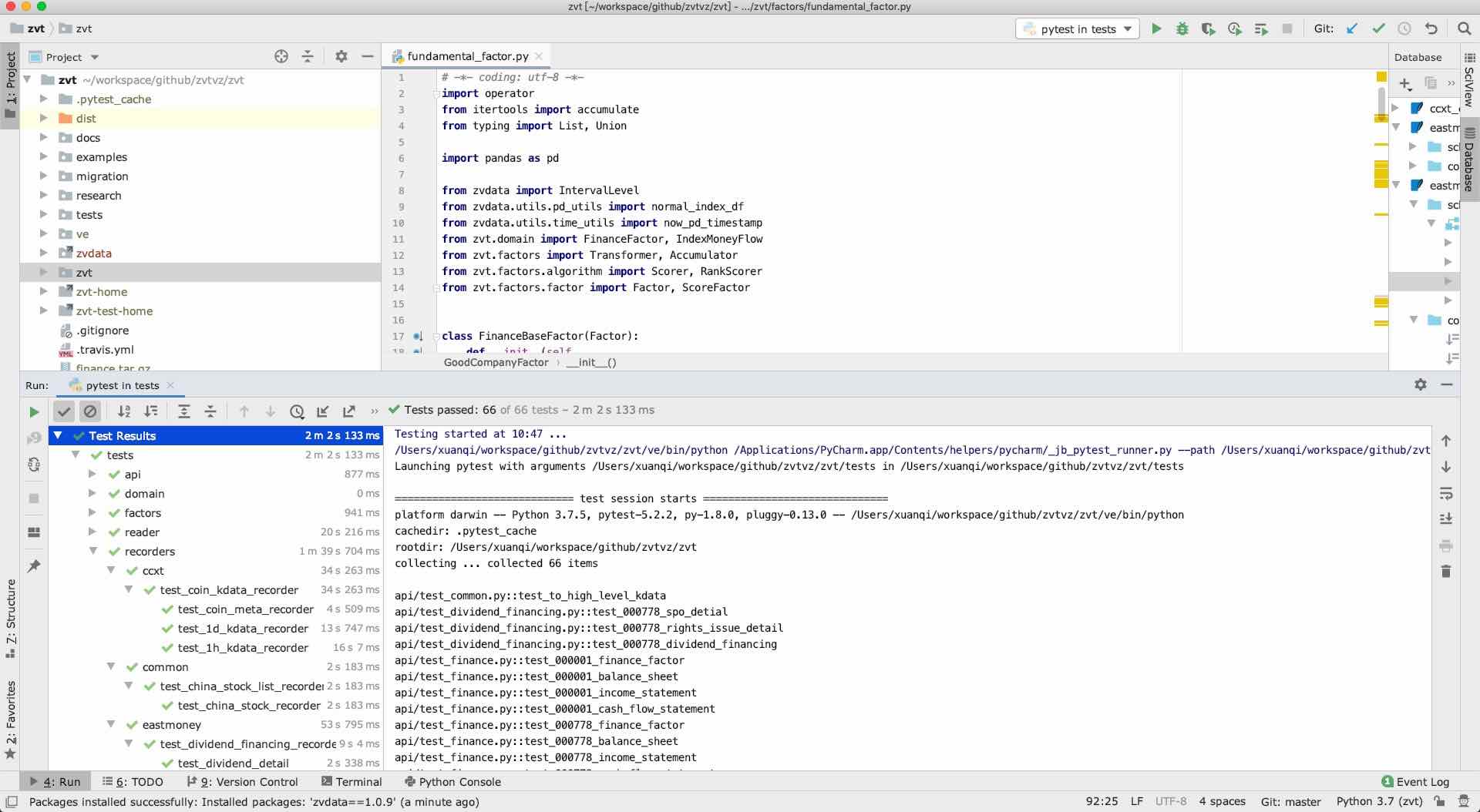

-We look forward to more developers participating in the development of zvt. I promise to review PRs as soon as possible and respond promptly. Before submitting a PR, please make sure that it:

-

-1. Passes all unit tests; if it adds something new, please add unit tests for it

-2. Complies with the development specifications

-3. Updates the corresponding documentation if needed

-

-Developers are also welcome to contribute more examples to complement the zvt documentation, located at [zvt/docs](https://github.com/zvtvz/zvt/docs)

-

-

-## Contact information

-QQ group:300911873

-check http://www.imqq.com/html/FAQ_en/html/Discussions_3.html

-

-

-WeChat official account (some tutorials are posted here):

-

-

-

-## Thanks

-

diff --git a/README.md b/README.md

index 93bdf1cc..f965d98e 100644

--- a/README.md

+++ b/README.md

@@ -2,365 +2,535 @@

[](https://pypi.org/project/zvt/)

[](https://pypi.org/project/zvt/)

[](https://pypi.org/project/zvt/)

-[](https://travis-ci.org/zvtvz/zvt)

+[](https://github.com/zvtvz/zvt/actions/workflows/build.yml)

+[](https://github.com/zvtvz/zvt/actions/workflows/package.yaml)

+[](https://zvt.readthedocs.io/en/latest/?badge=latest)

[](https://codecov.io/github/zvtvz/zvt)

[](https://pepy.tech/project/zvt)

-**Read this in other languages: [English](README-en.md).**

+**The origin of ZVT**

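-ZVT is a quant project written after rethinking [fooltrader](https://github.com/foolcage/fooltrader). It includes extensible trading targets, data recorders, an API, factor calculation, stock picking, backtesting, trading, and unified visualization, and is positioned as a **mid-to-low-frequency**, **multi-level**, **multi-factor**, **multi-target** framework for full-market analysis and trading.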

+[The Three Major Principles of Stock Trading](https://mp.weixin.qq.com/s/FoFR63wFSQIE_AyFubkZ6Q)

-Compared with other quant systems, it does not depend on any middleware and is **very lightweight, testable, inferable, and extensible**.

+**Declaration**

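-The original motivations for writing this system:

-* Build a unified, extensible data schema

-* Make it easy to adapt data from each provider to the system

-* Write an algorithm once and apply it to any market

-* Suit a low-energy human brain plus a personal computer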

+This project does not currently guarantee any backward compatibility, so please upgrade with caution.

+As the author's thoughts evolve, some things that were once considered important may become less so, and thus may not be maintained.

+Whether the addition of some new elements will be useful to you needs to be assessed by yourself.

-## Detailed documentation

-[https://zvtvz.github.io/zvt](https://zvtvz.github.io/zvt)

+**Read this in other languages: [中文](README-cn.md).**

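->Parts of the detailed documentation lag well behind the code. If you read the README carefully and understand the following statements alongside the code, you hardly need any other documentation.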

+**Read the docs:[https://zvt.readthedocs.io/en/latest/](https://zvt.readthedocs.io/en/latest/)**

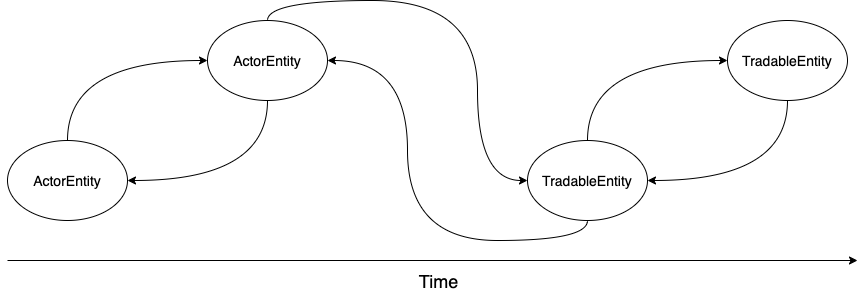

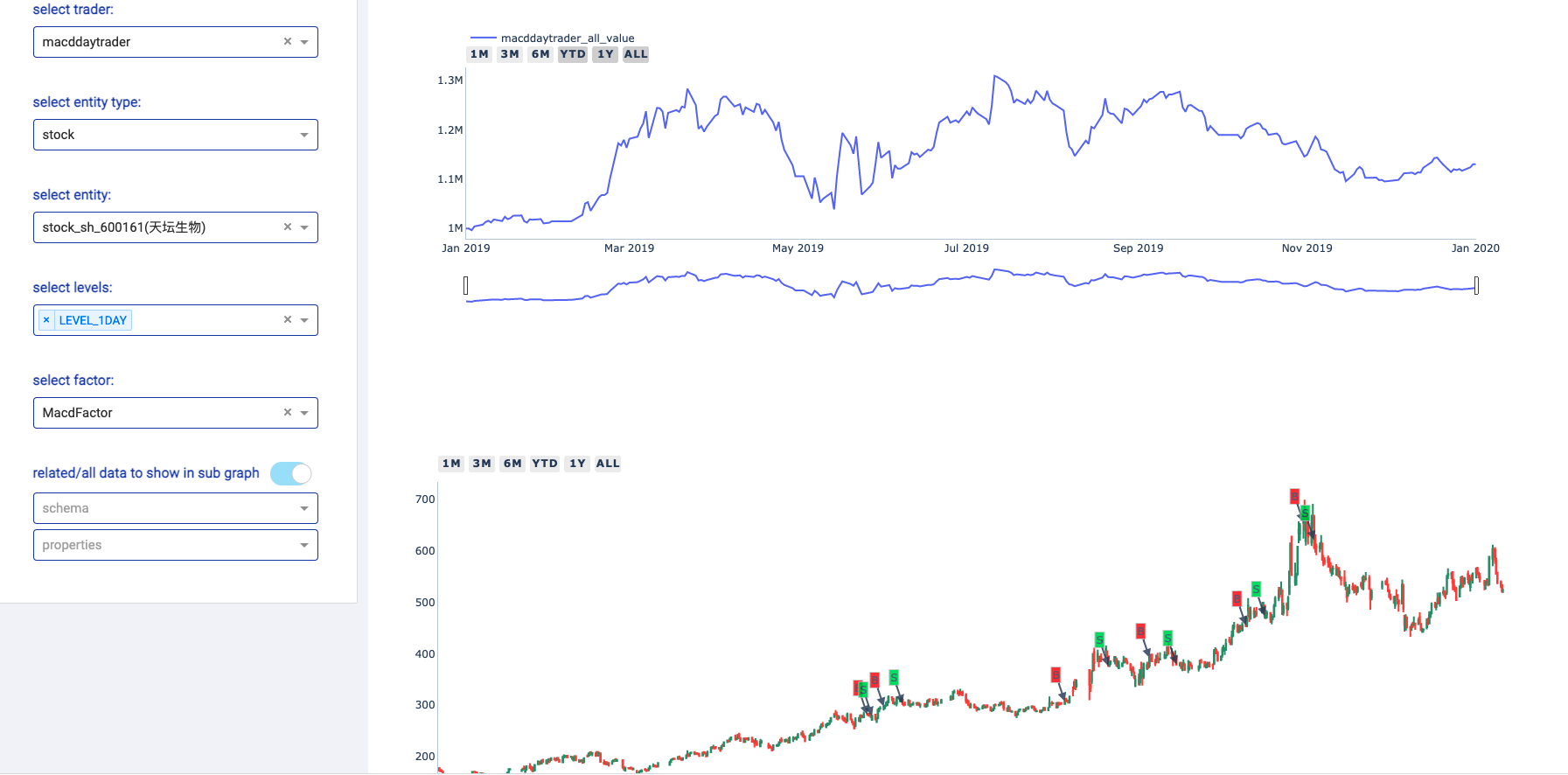

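-* ### An entity abstracts what is invariant about a *trading target*

-* ### Data is entities plus the events that happen to them; data is the API, and data is the strategy

-* ### Data is pluggable, and where signals are sent is pluggable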

+### Install

+```

+python3 -m pip install -U zvt

+```

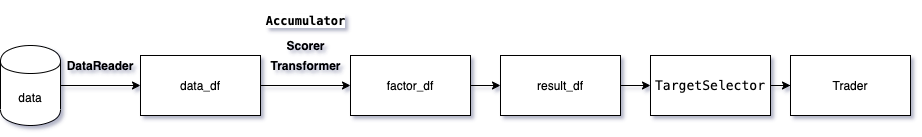

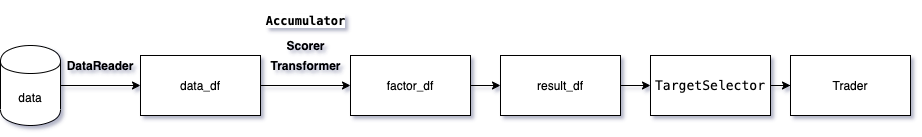

-### Architecture diagram:

-

-

-## 🤝Contact

+## Contact

-Add WeChat to join the group: foolcage (passphrase: zvt)

+wechat:foolcage

------

-WeChat official account:

+wechat subscription:

-Zhihu column:

+zhihu:

https://zhuanlan.zhihu.com/automoney

## Thanks

-

\ No newline at end of file

+

diff --git a/api-tests/create_stock_pool_info.http b/api-tests/create_stock_pool_info.http

new file mode 100644

index 00000000..1c43a8de

--- /dev/null

+++ b/api-tests/create_stock_pool_info.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/create_stock_pool_info

+accept: application/json

+Content-Type: application/json

+

+{

+ "stock_pool_name": "核心资产",

+ "stock_pool_type": "custom"

+}

\ No newline at end of file

diff --git a/api-tests/create_stock_pools.http b/api-tests/create_stock_pools.http

new file mode 100644

index 00000000..39dc53f2

--- /dev/null

+++ b/api-tests/create_stock_pools.http

@@ -0,0 +1,10 @@

+POST http://127.0.0.1:8090/api/work/create_stock_pools

+accept: application/json

+Content-Type: application/json

+

+{

+ "stock_pool_name": "核心资产",

+ "entity_ids": [

+ "stock_sh_600519"

+ ]

+}

\ No newline at end of file

diff --git a/api-tests/event/create_stock_topic.http b/api-tests/event/create_stock_topic.http

new file mode 100644

index 00000000..ea127104

--- /dev/null

+++ b/api-tests/event/create_stock_topic.http

@@ -0,0 +1,19 @@

+POST http://127.0.0.1:8090/api/event/create_stock_topic

+accept: application/json

+Content-Type: application/json

+

+{

+ "name": "特斯拉FSD入华",

+ "desc": "Tesla Al在社交媒体平台“X”上发帖称,特斯拉计划明年第一季度在中国和欧洲推出被其称为“全自动驾驶”(Full Self Driving)的高级驾驶辅助系统,目前正在等待监管部门的批准。",

+ "created_timestamp": "2024-09-05 09:00:00",

+ "trigger_date": "2024-09-05",

+ "due_date": "2025-01-01",

+ "main_tag": "车路云",

+ "sub_tag_list": [

+ "自动驾驶",

+ "车路云"

+ ]

+}

+

+

+

diff --git a/api-tests/event/get_stock_event.http b/api-tests/event/get_stock_event.http

new file mode 100644

index 00000000..9c0bcf4f

--- /dev/null

+++ b/api-tests/event/get_stock_event.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/event/get_stock_event?entity_id=stock_sz_000034

+accept: application/json

+

+

+

diff --git a/api-tests/event/get_stock_news_analysis.http b/api-tests/event/get_stock_news_analysis.http

new file mode 100644

index 00000000..ae2dc136

--- /dev/null

+++ b/api-tests/event/get_stock_news_analysis.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/event/get_stock_news_analysis

+accept: application/json

+

+

+

diff --git a/api-tests/event/get_tag_suggestions_stats.http b/api-tests/event/get_tag_suggestions_stats.http

new file mode 100644

index 00000000..31bb40b5

--- /dev/null

+++ b/api-tests/event/get_tag_suggestions_stats.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/event/get_tag_suggestions_stats

+accept: application/json

+

+

+

diff --git a/api-tests/event/ignore_stock_news.http b/api-tests/event/ignore_stock_news.http

new file mode 100644

index 00000000..0e533764

--- /dev/null

+++ b/api-tests/event/ignore_stock_news.http

@@ -0,0 +1,10 @@

+POST http://127.0.0.1:8090/api/event/ignore_stock_news

+accept: application/json

+Content-Type: application/json

+

+{

+ "news_id": "stock_sz_000034_2024-07-17 16:08:17"

+}

+

+

+

diff --git a/api-tests/event/query_stock_topic.http b/api-tests/event/query_stock_topic.http

new file mode 100644

index 00000000..045d0d3f

--- /dev/null

+++ b/api-tests/event/query_stock_topic.http

@@ -0,0 +1,10 @@

+POST http://127.0.0.1:8090/api/event/query_stock_topic

+accept: application/json

+Content-Type: application/json

+

+{

+ "limit": 20

+}

+

+

+

diff --git a/api-tests/event/update_stock_topic.http b/api-tests/event/update_stock_topic.http

new file mode 100644

index 00000000..7046d462

--- /dev/null

+++ b/api-tests/event/update_stock_topic.http

@@ -0,0 +1,18 @@

+POST http://127.0.0.1:8090/api/event/update_stock_topic

+accept: application/json

+Content-Type: application/json

+

+{

+ "id": "admin_特斯拉FSD入华",

+ "desc": "Tesla Al在社交媒体平台“X”上发帖称,特斯拉计划明年第一季度在中国和欧洲推出被其称为“全自动驾驶”(Full Self Driving)的高级驾驶辅助系统,目前正在等待监管部门的批准。",

+ "created_timestamp": "2024-09-05 09:00:00",

+ "trigger_date": "2024-09-05",

+ "due_date": "2025-01-01",

+ "main_tag": "车路云",

+ "sub_tag_list": [

+ "自动驾驶",

+ "车路云"

+ ]

+}

+

+

diff --git a/api-tests/factor/get_factors.http b/api-tests/factor/get_factors.http

new file mode 100644

index 00000000..36d5791e

--- /dev/null

+++ b/api-tests/factor/get_factors.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/factor/get_factors

+accept: application/json

+

+

+

diff --git a/api-tests/factor/query_factor_result.http b/api-tests/factor/query_factor_result.http

new file mode 100644

index 00000000..55367c53

--- /dev/null

+++ b/api-tests/factor/query_factor_result.http

@@ -0,0 +1,11 @@

+POST http://127.0.0.1:8090/api/factor/query_factor_result

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "entity_ids": [

+ "stock_sz_300133"

+ ],

+ "factor_name": "LiveOrDeadFactor"

+}

diff --git a/api-tests/get_stock_pool_info.http b/api-tests/get_stock_pool_info.http

new file mode 100644

index 00000000..ad78231c

--- /dev/null

+++ b/api-tests/get_stock_pool_info.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/work/get_stock_pool_info

+accept: application/json

+

+

+

diff --git a/api-tests/get_stock_pools.http b/api-tests/get_stock_pools.http

new file mode 100644

index 00000000..0c6f1c15

--- /dev/null

+++ b/api-tests/get_stock_pools.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/work/get_stock_pools?stock_pool_name=main_line

+accept: application/json

+

+

+

diff --git a/api-tests/tag/batch_set_stock_tags.http b/api-tests/tag/batch_set_stock_tags.http

new file mode 100644

index 00000000..a74c2cc3

--- /dev/null

+++ b/api-tests/tag/batch_set_stock_tags.http

@@ -0,0 +1,18 @@

+POST http://127.0.0.1:8090/api/work/batch_set_stock_tags

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "tag": "脑机接口",

+ "tag_type": "sub_tag",

+ "entity_ids": [

+ "stock_sh_600775",

+ "stock_sz_002173",

+ "stock_sz_301293",

+ "stock_sz_300753",

+ "stock_sz_300430",

+ "stock_sz_002243"

+ ],

+ "tag_reason": "脑机接口消息刺激"

+}

diff --git a/api-tests/tag/build_main_tag_industry_relation.http b/api-tests/tag/build_main_tag_industry_relation.http

new file mode 100644

index 00000000..ff521d0e

--- /dev/null

+++ b/api-tests/tag/build_main_tag_industry_relation.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/build_main_tag_industry_relation

+accept: application/json

+Content-Type: application/json

+

+{

+ "main_tag": "华为",

+ "industry_list": ["通信设备"]

+}

\ No newline at end of file

diff --git a/api-tests/tag/build_main_tag_sub_tag_relation.http b/api-tests/tag/build_main_tag_sub_tag_relation.http

new file mode 100644

index 00000000..5c9da65c

--- /dev/null

+++ b/api-tests/tag/build_main_tag_sub_tag_relation.http

@@ -0,0 +1,13 @@

+POST http://127.0.0.1:8090/api/work/build_main_tag_sub_tag_relation

+accept: application/json

+Content-Type: application/json

+

+{

+ "main_tag": "华为",

+ "sub_tag_list": [

+ "华为汽车",

+ "华为概念",

+ "华为欧拉",

+ "华为昇腾"

+ ]

+}

\ No newline at end of file

diff --git a/api-tests/tag/build_stock_tags.http b/api-tests/tag/build_stock_tags.http

new file mode 100644

index 00000000..ba957231

--- /dev/null

+++ b/api-tests/tag/build_stock_tags.http

@@ -0,0 +1,15 @@

+POST http://127.0.0.1:8090/api/work/build_stock_tags

+accept: application/json

+Content-Type: application/json

+

+[

+ {

+ "entity_id": "stock_sz_002085",

+ "name": "万丰奥威",

+ "main_tag": "低空经济",

+ "main_tag_reason": "2023年12月27日回复称,公司钻石eDA40纯电动飞机已成功首飞;eVTOL项目已联动海外钻石技术开发团队,在绿色、智能、垂直起降等方面的设计体现未来领域应用场景。",

+ "sub_tag": "低空经济",

+ "sub_tag_reason": "2023年12月27日回复称,公司钻石eDA40纯电动飞机已成功首飞;eVTOL项目已联动海外钻石技术开发团队,在绿色、智能、垂直起降等方面的设计体现未来领域应用场景。",

+ "active_hidden_tags": null

+ }

+]

\ No newline at end of file

diff --git a/api-tests/tag/change_main_tag.http b/api-tests/tag/change_main_tag.http

new file mode 100644

index 00000000..48cb614e

--- /dev/null

+++ b/api-tests/tag/change_main_tag.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/change_main_tag

+accept: application/json

+Content-Type: application/json

+

+{

+ "current_main_tag": "医疗器械",

+ "new_main_tag": "医药"

+}

\ No newline at end of file

diff --git a/api-tests/tag/create_hidden_tag_info.http b/api-tests/tag/create_hidden_tag_info.http

new file mode 100644

index 00000000..d5c65844

--- /dev/null

+++ b/api-tests/tag/create_hidden_tag_info.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/create_hidden_tag_info

+accept: application/json

+Content-Type: application/json

+

+{

+ "tag": "中字头",

+ "tag_reason": "央企,国资委控股"

+}

\ No newline at end of file

diff --git a/api-tests/tag/create_main_tag_info.http b/api-tests/tag/create_main_tag_info.http

new file mode 100644

index 00000000..7caa64ba

--- /dev/null

+++ b/api-tests/tag/create_main_tag_info.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/create_main_tag_info

+accept: application/json

+Content-Type: application/json

+

+{

+ "tag": "未知",

+ "tag_reason": "行业定位不清晰"

+}

\ No newline at end of file

diff --git a/api-tests/tag/create_sub_tag_info.http b/api-tests/tag/create_sub_tag_info.http

new file mode 100644

index 00000000..3183a3ff

--- /dev/null

+++ b/api-tests/tag/create_sub_tag_info.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/create_sub_tag_info

+accept: application/json

+Content-Type: application/json

+

+{

+ "tag": "低空经济",

+ "tag_reason": "低空经济是飞行器和各种产业形态的融合,例如\"无人机+配送\"、\"直升机或evto载人飞行\"、\"无人机+应急救援\"、\"无人机+工业场景巡检\"等"

+}

\ No newline at end of file

diff --git a/api-tests/tag/get_hidden_tag_info.http b/api-tests/tag/get_hidden_tag_info.http

new file mode 100644

index 00000000..007750a1

--- /dev/null

+++ b/api-tests/tag/get_hidden_tag_info.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_hidden_tag_info

+accept: application/json

+

+

diff --git a/api-tests/tag/get_industry_info.http b/api-tests/tag/get_industry_info.http

new file mode 100644

index 00000000..c1fac747

--- /dev/null

+++ b/api-tests/tag/get_industry_info.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_industry_info

+accept: application/json

+

+

diff --git a/api-tests/tag/get_main_tag_industry_relation.http b/api-tests/tag/get_main_tag_industry_relation.http

new file mode 100644

index 00000000..7b44ce9b

--- /dev/null

+++ b/api-tests/tag/get_main_tag_industry_relation.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_main_tag_industry_relation?main_tag=华为

+accept: application/json

+

+

diff --git a/api-tests/tag/get_main_tag_info.http b/api-tests/tag/get_main_tag_info.http

new file mode 100644

index 00000000..dc1d8387

--- /dev/null

+++ b/api-tests/tag/get_main_tag_info.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_main_tag_info

+accept: application/json

+

+

diff --git a/api-tests/tag/get_main_tag_sub_tag_relation.http b/api-tests/tag/get_main_tag_sub_tag_relation.http

new file mode 100644

index 00000000..a4886faa

--- /dev/null

+++ b/api-tests/tag/get_main_tag_sub_tag_relation.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_main_tag_sub_tag_relation?main_tag=华为

+accept: application/json

+

+

diff --git a/api-tests/tag/get_stock_tag_options.http b/api-tests/tag/get_stock_tag_options.http

new file mode 100644

index 00000000..4b567d0d

--- /dev/null

+++ b/api-tests/tag/get_stock_tag_options.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_stock_tag_options?entity_id=stock_sh_600733

+accept: application/json

+

+

diff --git a/api-tests/tag/get_sub_tag_info.http b/api-tests/tag/get_sub_tag_info.http

new file mode 100644

index 00000000..58329c1e

--- /dev/null

+++ b/api-tests/tag/get_sub_tag_info.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_sub_tag_info

+accept: application/json

+

+

diff --git a/api-tests/tag/query_simple_stock_tags.http b/api-tests/tag/query_simple_stock_tags.http

new file mode 100644

index 00000000..7ec4c6f3

--- /dev/null

+++ b/api-tests/tag/query_simple_stock_tags.http

@@ -0,0 +1,12 @@

+POST http://127.0.0.1:8090/api/work/query_simple_stock_tags

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "entity_ids": [

+ "stock_sz_002085",

+ "stock_sz_000099",

+ "stock_sz_002130"

+ ]

+}

\ No newline at end of file

diff --git a/api-tests/tag/query_stock_tag_stats.http b/api-tests/tag/query_stock_tag_stats.http

new file mode 100644

index 00000000..20f9b59b

--- /dev/null

+++ b/api-tests/tag/query_stock_tag_stats.http

@@ -0,0 +1,7 @@

+POST http://127.0.0.1:8090/api/work/query_stock_tag_stats

+accept: application/json

+Content-Type: application/json

+

+{

+ "stock_pool_name": "main_line"

+}

\ No newline at end of file

diff --git a/api-tests/tag/query_stock_tags.http b/api-tests/tag/query_stock_tags.http

new file mode 100644

index 00000000..a1e655d5

--- /dev/null

+++ b/api-tests/tag/query_stock_tags.http

@@ -0,0 +1,12 @@

+POST http://127.0.0.1:8090/api/work/query_stock_tags

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "entity_ids": [

+ "stock_sz_000099",

+ "stock_sz_002085",

+ "stock_sz_001696"

+ ]

+}

diff --git a/api-tests/tag/set_stock_tags.http b/api-tests/tag/set_stock_tags.http

new file mode 100644

index 00000000..39774a3d

--- /dev/null

+++ b/api-tests/tag/set_stock_tags.http

@@ -0,0 +1,17 @@

+POST http://127.0.0.1:8090/api/work/set_stock_tags

+accept: application/json

+Content-Type: application/json

+

+{

+ "entity_id": "stock_sz_001366",

+ "name": "播恩集团",

+ "main_tag": "医药",

+ "main_tag_reason": "合成生物概念",

+ "main_tags": {

+ "农业": "来自行业:农牧饲渔"

+ },

+ "sub_tag": "生物医药",

+ "sub_tag_reason": "合成生物概念",

+ "sub_tags": null,

+ "active_hidden_tags": null

+ }

\ No newline at end of file

diff --git a/api-tests/test.http b/api-tests/test.http

new file mode 100644

index 00000000..8b137891

--- /dev/null

+++ b/api-tests/test.http

@@ -0,0 +1 @@

+

diff --git a/api-tests/trading/build_query_stock_quote_setting.http b/api-tests/trading/build_query_stock_quote_setting.http

new file mode 100644

index 00000000..5ef65fc1

--- /dev/null

+++ b/api-tests/trading/build_query_stock_quote_setting.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/trading/build_query_stock_quote_setting

+accept: application/json

+Content-Type: application/json

+

+{

+ "stock_pool_name": "main_line",

+ "main_tags": ["低空经济","新能源"]

+}

\ No newline at end of file

diff --git a/api-tests/trading/build_trading_plan.http b/api-tests/trading/build_trading_plan.http

new file mode 100644

index 00000000..668df7c5

--- /dev/null

+++ b/api-tests/trading/build_trading_plan.http

@@ -0,0 +1,13 @@

+POST http://127.0.0.1:8090/api/trading/build_trading_plan

+accept: application/json

+Content-Type: application/json

+

+{

+ "stock_id": "stock_sz_300133",

+ "trading_date": "2024-04-23",

+ "expected_open_pct": 0.02,

+ "buy_price": 6.9,

+ "sell_price": null,

+ "trading_reason": "主线",

+ "trading_signal_type": "open_long"

+}

diff --git a/api-tests/trading/get_current_trading_plan.http b/api-tests/trading/get_current_trading_plan.http

new file mode 100644

index 00000000..12b434b4

--- /dev/null

+++ b/api-tests/trading/get_current_trading_plan.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/trading/get_current_trading_plan

+accept: application/json

+

+

+

diff --git a/api-tests/trading/get_future_trading_plan.http b/api-tests/trading/get_future_trading_plan.http

new file mode 100644

index 00000000..bdda5385

--- /dev/null

+++ b/api-tests/trading/get_future_trading_plan.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/trading/get_future_trading_plan

+accept: application/json

+

+

+

diff --git a/api-tests/trading/get_query_stock_quote_setting.http b/api-tests/trading/get_query_stock_quote_setting.http

new file mode 100644

index 00000000..8f4ec923

--- /dev/null

+++ b/api-tests/trading/get_query_stock_quote_setting.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/trading/get_query_stock_quote_setting

+accept: application/json

+

+

+

diff --git a/api-tests/trading/get_quote_stats.http b/api-tests/trading/get_quote_stats.http

new file mode 100644

index 00000000..abad3cbb

--- /dev/null

+++ b/api-tests/trading/get_quote_stats.http

@@ -0,0 +1,2 @@

+GET http://127.0.0.1:8090/api/trading/get_quote_stats

+accept: application/json

diff --git a/api-tests/trading/query_kdata.http b/api-tests/trading/query_kdata.http

new file mode 100644

index 00000000..9bf63c61

--- /dev/null

+++ b/api-tests/trading/query_kdata.http

@@ -0,0 +1,13 @@

+POST http://127.0.0.1:8090/api/trading/query_kdata

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "data_provider": "em",

+ "entity_ids": [

+ "stock_sz_002085",

+ "stock_sz_300133"

+ ],

+ "adjust_type": "hfq"

+}

diff --git a/api-tests/trading/query_stock_quotes.http b/api-tests/trading/query_stock_quotes.http

new file mode 100644

index 00000000..5805111a

--- /dev/null

+++ b/api-tests/trading/query_stock_quotes.http

@@ -0,0 +1,10 @@

+POST http://127.0.0.1:8090/api/trading/query_stock_quotes

+accept: application/json

+Content-Type: application/json

+

+{

+ "main_tag": "低空经济",

+ "stock_pool_name": "vol_up",

+ "limit": 500,

+ "order_by_field": "change_pct"

+}

diff --git a/api-tests/trading/query_tag_quotes.http b/api-tests/trading/query_tag_quotes.http

new file mode 100644

index 00000000..bfadce6f

--- /dev/null

+++ b/api-tests/trading/query_tag_quotes.http

@@ -0,0 +1,13 @@

+POST http://127.0.0.1:8090/api/trading/query_tag_quotes

+accept: application/json

+Content-Type: application/json

+

+{

+ "main_tags": [

+ "低空经济",

+ "半导体",

+ "化工",

+ "消费电子"

+ ],

+ "stock_pool_name": "main_line"

+}

diff --git a/api-tests/trading/query_trading_plan.http b/api-tests/trading/query_trading_plan.http

new file mode 100644

index 00000000..944ad194

--- /dev/null

+++ b/api-tests/trading/query_trading_plan.http

@@ -0,0 +1,12 @@

+POST http://127.0.0.1:8090/api/trading/query_trading_plan

+accept: application/json

+Content-Type: application/json

+

+{

+ "time_range": {

+ "relative_time_range": {

+ "interval": -30,

+ "time_unit": "day"

+ }

+ }

+}

diff --git a/api-tests/trading/query_ts.http b/api-tests/trading/query_ts.http

new file mode 100644

index 00000000..f91f7d6e

--- /dev/null

+++ b/api-tests/trading/query_ts.http

@@ -0,0 +1,11 @@

+POST http://127.0.0.1:8090/api/trading/query_ts

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "entity_ids": [

+ "stock_sz_002085",

+ "stock_sz_300133"

+ ]

+}
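The `.http` files above are plain request descriptions meant for an HTTP client. As a rough illustration only, the `query_kdata` request could be built from Python with the standard library as sketched below. The helper name `build_zvt_request` is hypothetical (it is not part of zvt), and the sketch assumes a ZVT REST server is listening on 127.0.0.1:8090 as in the test files. It only builds the request; the commented lines show where it would be sent.

```python
import json
import urllib.request

# Base URL taken from the .http test files; assumes a locally running server.
BASE_URL = "http://127.0.0.1:8090"


def build_zvt_request(path, payload=None):
    """Build a GET (no payload) or JSON POST request for an API path.

    Hypothetical helper for illustration; not part of zvt.
    """
    headers = {"accept": "application/json"}
    data = None
    if payload is not None:
        headers["Content-Type"] = "application/json"
        # ensure_ascii=False keeps Chinese tag names readable in the body.
        data = json.dumps(payload, ensure_ascii=False).encode("utf-8")
    method = "POST" if payload is not None else "GET"
    return urllib.request.Request(
        BASE_URL + path, data=data, headers=headers, method=method
    )


# Same body as api-tests/trading/query_kdata.http
kdata_request = build_zvt_request(
    "/api/trading/query_kdata",
    {
        "data_provider": "em",
        "entity_ids": ["stock_sz_002085", "stock_sz_300133"],
        "adjust_type": "hfq",
    },
)

# Same request as api-tests/trading/get_quote_stats.http
stats_request = build_zvt_request("/api/trading/get_quote_stats")

# Sending requires a running server, so it is left commented out:
# with urllib.request.urlopen(kdata_request) as resp:
#     print(json.load(resp))
```

Keeping request construction separate from sending makes it possible to inspect the method, headers, and body without a server.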

diff --git a/build.sh b/build.sh

new file mode 100644

index 00000000..c6ac05d8

--- /dev/null

+++ b/build.sh

@@ -0,0 +1 @@

+python3 setup.py sdist bdist_wheel

\ No newline at end of file

diff --git a/docs/.nojekyll b/docs/.nojekyll

deleted file mode 100644

index e69de29b..00000000

diff --git a/docs/Makefile b/docs/Makefile

new file mode 100644

index 00000000..d0c3cbf1

--- /dev/null

+++ b/docs/Makefile

@@ -0,0 +1,20 @@

+# Minimal makefile for Sphinx documentation

+#

+

+# You can set these variables from the command line, and also

+# from the environment for the first two.

+SPHINXOPTS ?=

+SPHINXBUILD ?= sphinx-build

+SOURCEDIR = source

+BUILDDIR = build

+

+# Put it first so that "make" without argument is like "make help".

+help:

+	@$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

+

+.PHONY: help Makefile

+

+# Catch-all target: route all unknown targets to Sphinx using the new

+# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

+%: Makefile

+	@$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

diff --git a/docs/_navbar.md b/docs/_navbar.md

deleted file mode 100644

index 8bab00f4..00000000

--- a/docs/_navbar.md

+++ /dev/null

@@ -1,4 +0,0 @@

-

-

-* [中文](/)

-* [english](/en/)

\ No newline at end of file

diff --git a/docs/_sidebar.md b/docs/_sidebar.md

deleted file mode 100644

index 89e88935..00000000

--- a/docs/_sidebar.md

+++ /dev/null

@@ -1,14 +0,0 @@

-- Getting started

- - [Introduction](intro.md "zvt intro")

- - [Quick start](quick-start.md "zvt quick start")

-- Data

- - [Data overview](data_overview.md "zvt data overview")

- - [Data list](data_list.md "zvt data list")

- - [Data update](data_recorder.md "zvt data recorder")

- - [Data usage](data_usage.md "zvt data usage")

- - [Data extension](data_extending.md "zvt data extending")

-- Computation

- - [Factor calculation](factor.md "zvt factor")

- - [Backtesting and notification](trader.md "zvt trader")

-- [Design philosophy](design-philosophy.md "zvt design philosophy")

-- [Support the project](donate.md "donate for zvt")

diff --git a/docs/arch.png b/docs/arch.png

deleted file mode 100644

index fcb3e80a..00000000

Binary files a/docs/arch.png and /dev/null differ

diff --git a/docs/architecture.eddx b/docs/architecture.eddx

deleted file mode 100644

index 8b2dedf6..00000000

Binary files a/docs/architecture.eddx and /dev/null differ

diff --git a/docs/architecture.png b/docs/architecture.png

deleted file mode 100644

index ccdfe9b8..00000000

Binary files a/docs/architecture.png and /dev/null differ

diff --git a/docs/data.eddx b/docs/data.eddx

deleted file mode 100644

index 71a125f8..00000000

Binary files a/docs/data.eddx and /dev/null differ

diff --git a/docs/data.png b/docs/data.png

deleted file mode 100644

index 71fa714d..00000000

Binary files a/docs/data.png and /dev/null differ
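The removed `docs/data_extending.md` in the next diff describes how a metaclass associates each recorder class with its `data_schema`. A minimal self-contained sketch of that registration pattern follows. `Mixin`, `StockValuation`, `JqValuationRecorder`, and the `recorder_registry` attribute are simplified stand-ins for illustration and are not zvt's real classes. The extra check that `provider` is set is also an assumption of this sketch.

```python
class Mixin:
    """Stand-in for the schema base class (not zvt's real Mixin)."""

    @classmethod
    def register_recorder_cls(cls, provider, recorder_cls):
        # Create a per-schema registry on first use so that different
        # schema classes do not share one dict.
        if "recorder_registry" not in cls.__dict__:
            cls.recorder_registry = {}
        cls.recorder_registry[provider] = recorder_cls


class Meta(type):
    def __new__(meta, name, bases, class_dict):
        cls = type.__new__(meta, name, bases, class_dict)
        schema = getattr(cls, "data_schema", None)
        provider = getattr(cls, "provider", None)
        # Register only concrete recorders that name both a schema and a provider.
        if schema is not None and provider and issubclass(schema, Mixin):
            schema.register_recorder_cls(provider, cls)
        return cls


class Recorder(metaclass=Meta):
    # Subclasses override these to declare what they record.
    provider = None
    data_schema = None


class StockValuation(Mixin):
    """Hypothetical schema standing in for a real table class."""


class JqValuationRecorder(Recorder):
    provider = "joinquant"
    data_schema = StockValuation


# Defining the subclass was enough to register it on the schema.
print(StockValuation.recorder_registry)
```

Because registration happens at class-definition time, importing a recorder module is sufficient to make its recorder discoverable from the schema.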

diff --git a/docs/data_extending.md b/docs/data_extending.md

deleted file mode 100644

index 51c22ed7..00000000

--- a/docs/data_extending.md

+++ /dev/null

@@ -1,266 +0,0 @@

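-## Key points of data extension

-

-* zvt has only two kinds of data: EntityMixin and Mixin

-

- An EntityMixin describes an investment target, and a Mixin describes the events that happen to it. For any investment product, first define its EntityMixin, then its related Mixins.

- For example, Stock (an EntityMixin) and its related BalanceSheet and CashFlowStatement (Mixins).

-

-* zvt data can be recorded (via the record_data method)

-

- Recording is implemented by extending one of the following classes:

-

- * Recorder

-

- The most basic class; it associates a data_schema with a recorder. Recorders for an EntityMixin generally inherit from this class.

-

- * RecorderForEntities

-

- Initializes the **list of investment targets** to record; only once the targets exist can the events that happen to them be recorded.

-

- * TimeSeriesDataRecorder

-

- Implements incremental recording and the handling of real-time and non-real-time data.

-

- * FixedCycleDataRecorder

-

- Computes the remaining size for fixed-cycle data.

-

- * TimestampsDataRecorder

-

- Records data whose set of timestamps is known in advance.

-

-A subclass of Recorder must specify the data_schema and provider fields; the system uses Python metaprogramming to associate the data_schema with the recorder class: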

-```

-class Meta(type):

-    def __new__(meta, name, bases, class_dict):

-        cls = type.__new__(meta, name, bases, class_dict)

-        # register the recorder class to the data_schema

-        if hasattr(cls, 'data_schema') and hasattr(cls, 'provider'):

-            if cls.data_schema and issubclass(cls.data_schema, Mixin):

-                print(f'{cls.__name__}:{cls.data_schema.__name__}')

-                cls.data_schema.register_recorder_cls(cls.provider, cls)

-        return cls

-

-

-class Recorder(metaclass=Meta):

-    logger = logging.getLogger(__name__)

-

-    # overwrite them to setup the data you want to record

-    provider: str = None

-    data_schema: Mixin = None

-```

-

-

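-The following uses **individual stock valuation data** as an example to walk through the concrete steps.

-

-## 1. Define the data

-Create a new file (module) named valuation.py under the domain package (or one of its subpackages) with the following content: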

-```

-# -*- coding: utf-8 -*-

-from sqlalchemy import Column, String, Float

-from sqlalchemy.ext.declarative import declarative_base

-

-from zvdata import Mixin

-from zvdata.contract import register_schema

-

-ValuationBase = declarative_base()

-

-

-class StockValuation(ValuationBase, Mixin):

-    __tablename__ = 'stock_valuation'

-

-    code = Column(String(length=32))

-    name = Column(String(length=32))

-    # total share capital (shares)

-    capitalization = Column(Float)

-    # total number of ordinary shares issued by the company (including A, B, and H shares)

-    circulating_cap = Column(Float)

-    # market capitalization

-    market_cap = Column(Float)

-    # circulating market capitalization

-    circulating_market_cap = Column(Float)

-    # turnover ratio

-    turnover_ratio = Column(Float)

-    # static PE

-    pe = Column(Float)

-    # dynamic PE

-    pe_ttm = Column(Float)

-    # price-to-book ratio

-    pb = Column(Float)

-    # price-to-sales ratio

-    ps = Column(Float)

-    # price-to-cash-flow ratio

-    pcf = Column(Float)

-

-

-class EtfValuation(ValuationBase, Mixin):

-    __tablename__ = 'etf_valuation'

-

-    code = Column(String(length=32))

-    name = Column(String(length=32))

-    # static PE

-    pe = Column(Float)

-    # dynamic PE

-    pe_ttm = Column(Float)

-    # price-to-book ratio

-    pb = Column(Float)

-    # price-to-sales ratio

-    ps = Column(Float)

-    # price-to-cash-flow ratio

-    pcf = Column(Float)

-

-

-register_schema(providers=['joinquant'], db_name='valuation', schema_base=ValuationBase)

-

-__all__ = ['StockValuation', 'EtfValuation']

-

-```

-This breaks down into the following steps:

-### 1.1 Database base

-```

-ValuationBase = declarative_base()

-```

-A database can contain multiple tables (schemas); each table (schema) should inherit from this class.

-

-### 1.2 Defining the table (schema)

-```

-class StockValuation(ValuationBase, Mixin):

- __tablename__ = 'stock_valuation'

-

- code = Column(String(length=32))

- name = Column(String(length=32))

- # 总股本(股)

- capitalization = Column(Float)

- # 公司已发行的普通股股份总数(包含A股,B股和H股的总股本)

- circulating_cap = Column(Float)

- # 市值

- market_cap = Column(Float)

- # 流通市值

- circulating_market_cap = Column(Float)

- # 换手率

- turnover_ratio = Column(Float)

- # 静态pe

- pe = Column(Float)

- # 动态pe

- pe_ttm = Column(Float)

- # 市净率

- pb = Column(Float)

- # 市销率

- ps = Column(Float)

- # 市现率

- pcf = Column(Float)

-

-

-class EtfValuation(ValuationBase, Mixin):

- __tablename__ = 'etf_valuation'

-

- code = Column(String(length=32))

- name = Column(String(length=32))

- # 静态pe

- pe = Column(Float)

- # 动态pe

- pe_ttm = Column(Float)

- # 市净率

- pb = Column(Float)

- # 市销率

- ps = Column(Float)

- # 市现率

- pcf = Column(Float)

-```

-这里定义了两个table(schema),继承ValuationBase表明其隶属的数据库,继承Mixin让其获得zvt统一的字段和方法。

-schema里面的__tablename__为表名。

-### 1.3 注册数据

-```

-register_schema(providers=['joinquant'], db_name='valuation', schema_base=ValuationBase)

-

-__all__ = ['StockValuation', 'EtfValuation']

-```

-register_schema会将数据注册到zvt的数据系统中,providers为数据的提供商列表,db_name为数据库名字标识,schema_base为上面定义的数据库base。

-

-__all__为该module定义的数据结构,为了使得整个系统的数据依赖干净明确,所有的module都应该手动定义该字段。

-

-

-## 2 实现相应的recorder

-```

-# -*- coding: utf-8 -*-

-

-import pandas as pd

-from jqdatasdk import auth, logout, query, valuation, get_fundamentals_continuously

-

-from zvdata.api import df_to_db

-from zvdata.recorder import TimeSeriesDataRecorder

-from zvdata.utils.time_utils import now_pd_timestamp, now_time_str, to_time_str

-from zvt import zvt_config

-from zvt.domain import Stock, StockValuation, EtfStock

-from zvt.recorders.joinquant.common import to_jq_entity_id

-

-

-class JqChinaStockValuationRecorder(TimeSeriesDataRecorder):

- entity_provider = 'joinquant'

- entity_schema = Stock

-

- # 数据来自jq

- provider = 'joinquant'

-

- data_schema = StockValuation

-

- def __init__(self, entity_type='stock', exchanges=['sh', 'sz'], entity_ids=None, codes=None, day_data=False, batch_size=10,

- force_update=False, sleeping_time=5, default_size=2000, real_time=False, fix_duplicate_way='add',

- start_timestamp=None, end_timestamp=None, close_hour=0, close_minute=0) -> None:

- super().__init__(entity_type, exchanges, entity_ids, codes, day_data, batch_size, force_update, sleeping_time,

- default_size, real_time, fix_duplicate_way, start_timestamp, end_timestamp, close_hour,

- close_minute)

- auth(zvt_config['jq_username'], zvt_config['jq_password'])

-

- def on_finish(self):

- super().on_finish()

- logout()

-

- def record(self, entity, start, end, size, timestamps):

- q = query(

- valuation

- ).filter(

- valuation.code == to_jq_entity_id(entity)

- )

- count: pd.Timedelta = now_pd_timestamp() - start

- df = get_fundamentals_continuously(q, end_date=now_time_str(), count=count.days + 1, panel=False)

- df['entity_id'] = entity.id

- df['timestamp'] = pd.to_datetime(df['day'])

- df['code'] = entity.code

- df['name'] = entity.name

- df['id'] = df['timestamp'].apply(lambda x: "{}_{}".format(entity.id, to_time_str(x)))

- df = df.rename({'pe_ratio_lyr': 'pe',

- 'pe_ratio': 'pe_ttm',

- 'pb_ratio': 'pb',

- 'ps_ratio': 'ps',

- 'pcf_ratio': 'pcf'},

- axis='columns')

-

- df['market_cap'] = df['market_cap'] * 100000000

- df['circulating_cap'] = df['circulating_cap'] * 100000000

- df['capitalization'] = df['capitalization'] * 10000

- df['circulating_cap'] = df['circulating_cap'] * 10000

- df_to_db(df=df, data_schema=self.data_schema, provider=self.provider, force_update=self.force_update)

-

- return None

-

-__all__ = ['JqChinaStockValuationRecorder']

-```

-

-# 3. 获得的能力

-

-# 4. recorder原理

-将各provider提供(或者自己爬取)的数据**变成**符合data schema的数据需要做好以下几点:

-* 初始化要抓取的标的

-可抓取单标的来调试,然后抓取全量标的

-* 能够从上次抓取的地方接着抓

-减少不必要的请求,增量抓取

-* 封装常用的请求方式

-对时间序列数据的请求,无非start,end,size,time list的组合

-* 能够自动去重

-* 能够设置抓取速率

-* 提供抓取完成的回调函数

-方便数据校验和多provider数据补全

-

-流程图如下:

-

-知乎专栏:

+zhihu:

https://zhuanlan.zhihu.com/automoney

## Thanks

-

\ No newline at end of file

+

diff --git a/api-tests/create_stock_pool_info.http b/api-tests/create_stock_pool_info.http

new file mode 100644

index 00000000..1c43a8de

--- /dev/null

+++ b/api-tests/create_stock_pool_info.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/create_stock_pool_info

+accept: application/json

+Content-Type: application/json

+

+{

+ "stock_pool_name": "核心资产",

+ "stock_pool_type": "custom"

+}

\ No newline at end of file

diff --git a/api-tests/create_stock_pools.http b/api-tests/create_stock_pools.http

new file mode 100644

index 00000000..39dc53f2

--- /dev/null

+++ b/api-tests/create_stock_pools.http

@@ -0,0 +1,10 @@

+POST http://127.0.0.1:8090/api/work/create_stock_pools

+accept: application/json

+Content-Type: application/json

+

+{

+ "stock_pool_name": "核心资产",

+ "entity_ids": [

+ "stock_sh_600519"

+ ]

+}

\ No newline at end of file

diff --git a/api-tests/event/create_stock_topic.http b/api-tests/event/create_stock_topic.http

new file mode 100644

index 00000000..ea127104

--- /dev/null

+++ b/api-tests/event/create_stock_topic.http

@@ -0,0 +1,19 @@

+POST http://127.0.0.1:8090/api/event/create_stock_topic

+accept: application/json

+Content-Type: application/json

+

+{

+ "name": "特斯拉FSD入华",

+ "desc": "Tesla Al在社交媒体平台“X”上发帖称,特斯拉计划明年第一季度在中国和欧洲推出被其称为“全自动驾驶”(Full Self Driving)的高级驾驶辅助系统,目前正在等待监管部门的批准。",

+ "created_timestamp": "2024-09-05 09:00:00",

+ "trigger_date": "2024-09-05",

+ "due_date": "2025-01-01",

+ "main_tag": "车路云",

+ "sub_tag_list": [

+ "自动驾驶",

+ "车路云"

+ ]

+}

+

+

+

diff --git a/api-tests/event/get_stock_event.http b/api-tests/event/get_stock_event.http

new file mode 100644

index 00000000..9c0bcf4f

--- /dev/null

+++ b/api-tests/event/get_stock_event.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/event/get_stock_event?entity_id=stock_sz_000034

+accept: application/json

+

+

+

diff --git a/api-tests/event/get_stock_news_analysis.http b/api-tests/event/get_stock_news_analysis.http

new file mode 100644

index 00000000..ae2dc136

--- /dev/null

+++ b/api-tests/event/get_stock_news_analysis.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/event/get_stock_news_analysis

+accept: application/json

+

+

+

diff --git a/api-tests/event/get_tag_suggestions_stats.http b/api-tests/event/get_tag_suggestions_stats.http

new file mode 100644

index 00000000..31bb40b5

--- /dev/null

+++ b/api-tests/event/get_tag_suggestions_stats.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/event/get_tag_suggestions_stats

+accept: application/json

+

+

+

diff --git a/api-tests/event/ignore_stock_news.http b/api-tests/event/ignore_stock_news.http

new file mode 100644

index 00000000..0e533764

--- /dev/null

+++ b/api-tests/event/ignore_stock_news.http

@@ -0,0 +1,10 @@

+POST http://127.0.0.1:8090/api/event/ignore_stock_news

+accept: application/json

+Content-Type: application/json

+

+{

+ "news_id": "stock_sz_000034_2024-07-17 16:08:17"

+}

+

+

+

diff --git a/api-tests/event/query_stock_topic.http b/api-tests/event/query_stock_topic.http

new file mode 100644

index 00000000..045d0d3f

--- /dev/null

+++ b/api-tests/event/query_stock_topic.http

@@ -0,0 +1,10 @@

+POST http://127.0.0.1:8090/api/event/query_stock_topic

+accept: application/json

+Content-Type: application/json

+

+{

+ "limit": 20

+}

+

+

+

diff --git a/api-tests/event/update_stock_topic.http b/api-tests/event/update_stock_topic.http

new file mode 100644

index 00000000..7046d462

--- /dev/null

+++ b/api-tests/event/update_stock_topic.http

@@ -0,0 +1,18 @@

+POST http://127.0.0.1:8090/api/event/update_stock_topic

+accept: application/json

+Content-Type: application/json

+

+{

+ "id": "admin_特斯拉FSD入华",

+ "desc": "Tesla Al在社交媒体平台“X”上发帖称,特斯拉计划明年第一季度在中国和欧洲推出被其称为“全自动驾驶”(Full Self Driving)的高级驾驶辅助系统,目前正在等待监管部门的批准。",

+ "created_timestamp": "2024-09-05 09:00:00",

+ "trigger_date": "2024-09-05",

+ "due_date": "2025-01-01",

+ "main_tag": "车路云",

+ "sub_tag_list": [

+ "自动驾驶",

+ "车路云"

+ ]

+}

+

+

diff --git a/api-tests/factor/get_factors.http b/api-tests/factor/get_factors.http

new file mode 100644

index 00000000..36d5791e

--- /dev/null

+++ b/api-tests/factor/get_factors.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/factor/get_factors

+accept: application/json

+

+

+

diff --git a/api-tests/factor/query_factor_result.http b/api-tests/factor/query_factor_result.http

new file mode 100644

index 00000000..55367c53

--- /dev/null

+++ b/api-tests/factor/query_factor_result.http

@@ -0,0 +1,11 @@

+POST http://127.0.0.1:8090/api/factor/query_factor_result

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "entity_ids": [

+ "stock_sz_300133"

+ ],

+ "factor_name": "LiveOrDeadFactor"

+}

diff --git a/api-tests/get_stock_pool_info.http b/api-tests/get_stock_pool_info.http

new file mode 100644

index 00000000..ad78231c

--- /dev/null

+++ b/api-tests/get_stock_pool_info.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/work/get_stock_pool_info

+accept: application/json

+

+

+

diff --git a/api-tests/get_stock_pools.http b/api-tests/get_stock_pools.http

new file mode 100644

index 00000000..0c6f1c15

--- /dev/null

+++ b/api-tests/get_stock_pools.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/work/get_stock_pools?stock_pool_name=main_line

+accept: application/json

+

+

+

diff --git a/api-tests/tag/batch_set_stock_tags.http b/api-tests/tag/batch_set_stock_tags.http

new file mode 100644

index 00000000..a74c2cc3

--- /dev/null

+++ b/api-tests/tag/batch_set_stock_tags.http

@@ -0,0 +1,18 @@

+POST http://127.0.0.1:8090/api/work/batch_set_stock_tags

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "tag": "脑机接口",

+ "tag_type": "sub_tag",

+ "entity_ids": [

+ "stock_sh_600775",

+ "stock_sz_002173",

+ "stock_sz_301293",

+ "stock_sz_300753",

+ "stock_sz_300430",

+ "stock_sz_002243"

+ ],

+ "tag_reason": "脑机接口消息刺激"

+}

diff --git a/api-tests/tag/build_main_tag_industry_relation.http b/api-tests/tag/build_main_tag_industry_relation.http

new file mode 100644

index 00000000..ff521d0e

--- /dev/null

+++ b/api-tests/tag/build_main_tag_industry_relation.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/build_main_tag_industry_relation

+accept: application/json

+Content-Type: application/json

+

+{

+ "main_tag": "华为",

+ "industry_list": ["通信设备"]

+}

\ No newline at end of file

diff --git a/api-tests/tag/build_main_tag_sub_tag_relation.http b/api-tests/tag/build_main_tag_sub_tag_relation.http

new file mode 100644

index 00000000..5c9da65c

--- /dev/null

+++ b/api-tests/tag/build_main_tag_sub_tag_relation.http

@@ -0,0 +1,13 @@

+POST http://127.0.0.1:8090/api/work/build_main_tag_sub_tag_relation

+accept: application/json

+Content-Type: application/json

+

+{

+ "main_tag": "华为",

+ "sub_tag_list": [

+ "华为汽车",

+ "华为概念",

+ "华为欧拉",

+ "华为昇腾"

+ ]

+}

\ No newline at end of file

diff --git a/api-tests/tag/build_stock_tags.http b/api-tests/tag/build_stock_tags.http

new file mode 100644

index 00000000..ba957231

--- /dev/null

+++ b/api-tests/tag/build_stock_tags.http

@@ -0,0 +1,15 @@

+POST http://127.0.0.1:8090/api/work/build_stock_tags

+accept: application/json

+Content-Type: application/json

+

+[

+ {

+ "entity_id": "stock_sz_002085",

+ "name": "万丰奥威",

+ "main_tag": "低空经济",

+ "main_tag_reason": "2023年12月27日回复称,公司钻石eDA40纯电动飞机已成功首飞;eVTOL项目已联动海外钻石技术开发团队,在绿色、智能、垂直起降等方面的设计体现未来领域应用场景。",

+ "sub_tag": "低空经济",

+ "sub_tag_reason": "2023年12月27日回复称,公司钻石eDA40纯电动飞机已成功首飞;eVTOL项目已联动海外钻石技术开发团队,在绿色、智能、垂直起降等方面的设计体现未来领域应用场景。",

+ "active_hidden_tags": null

+ }

+]

\ No newline at end of file

diff --git a/api-tests/tag/change_main_tag.http b/api-tests/tag/change_main_tag.http

new file mode 100644

index 00000000..48cb614e

--- /dev/null

+++ b/api-tests/tag/change_main_tag.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/change_main_tag

+accept: application/json

+Content-Type: application/json

+

+{

+ "current_main_tag": "医疗器械",

+ "new_main_tag": "医药"

+}

\ No newline at end of file

diff --git a/api-tests/tag/create_hidden_tag_info.http b/api-tests/tag/create_hidden_tag_info.http

new file mode 100644

index 00000000..d5c65844

--- /dev/null

+++ b/api-tests/tag/create_hidden_tag_info.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/create_hidden_tag_info

+accept: application/json

+Content-Type: application/json

+

+{

+ "tag": "中字头",

+ "tag_reason": "央企,国资委控股"

+}

\ No newline at end of file

diff --git a/api-tests/tag/create_main_tag_info.http b/api-tests/tag/create_main_tag_info.http

new file mode 100644

index 00000000..7caa64ba

--- /dev/null

+++ b/api-tests/tag/create_main_tag_info.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/create_main_tag_info

+accept: application/json

+Content-Type: application/json

+

+{

+ "tag": "未知",

+ "tag_reason": "行业定位不清晰"

+}

\ No newline at end of file

diff --git a/api-tests/tag/create_sub_tag_info.http b/api-tests/tag/create_sub_tag_info.http

new file mode 100644

index 00000000..3183a3ff

--- /dev/null

+++ b/api-tests/tag/create_sub_tag_info.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/work/create_sub_tag_info

+accept: application/json

+Content-Type: application/json

+

+{

+ "tag": "低空经济",

+ "tag_reason": "低空经济是飞行器和各种产业形态的融合,例如\"无人机+配送\"、\"直升机或evto载人飞行\"、\"无人机+应急救援\"、\"无人机+工业场景巡检\"等"

+}

\ No newline at end of file

diff --git a/api-tests/tag/get_hidden_tag_info.http b/api-tests/tag/get_hidden_tag_info.http

new file mode 100644

index 00000000..007750a1

--- /dev/null

+++ b/api-tests/tag/get_hidden_tag_info.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_hidden_tag_info

+accept: application/json

+

+

diff --git a/api-tests/tag/get_industry_info.http b/api-tests/tag/get_industry_info.http

new file mode 100644

index 00000000..c1fac747

--- /dev/null

+++ b/api-tests/tag/get_industry_info.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_industry_info

+accept: application/json

+

+

diff --git a/api-tests/tag/get_main_tag_industry_relation.http b/api-tests/tag/get_main_tag_industry_relation.http

new file mode 100644

index 00000000..7b44ce9b

--- /dev/null

+++ b/api-tests/tag/get_main_tag_industry_relation.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_main_tag_industry_relation?main_tag=华为

+accept: application/json

+

+

diff --git a/api-tests/tag/get_main_tag_info.http b/api-tests/tag/get_main_tag_info.http

new file mode 100644

index 00000000..dc1d8387

--- /dev/null

+++ b/api-tests/tag/get_main_tag_info.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_main_tag_info

+accept: application/json

+

+

diff --git a/api-tests/tag/get_main_tag_sub_tag_relation.http b/api-tests/tag/get_main_tag_sub_tag_relation.http

new file mode 100644

index 00000000..a4886faa

--- /dev/null

+++ b/api-tests/tag/get_main_tag_sub_tag_relation.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_main_tag_sub_tag_relation?main_tag=华为

+accept: application/json

+

+

diff --git a/api-tests/tag/get_stock_tag_options.http b/api-tests/tag/get_stock_tag_options.http

new file mode 100644

index 00000000..4b567d0d

--- /dev/null

+++ b/api-tests/tag/get_stock_tag_options.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_stock_tag_options?entity_id=stock_sh_600733

+accept: application/json

+

+

diff --git a/api-tests/tag/get_sub_tag_info.http b/api-tests/tag/get_sub_tag_info.http

new file mode 100644

index 00000000..58329c1e

--- /dev/null

+++ b/api-tests/tag/get_sub_tag_info.http

@@ -0,0 +1,4 @@

+GET http://127.0.0.1:8090/api/work/get_sub_tag_info

+accept: application/json

+

+

diff --git a/api-tests/tag/query_simple_stock_tags.http b/api-tests/tag/query_simple_stock_tags.http

new file mode 100644

index 00000000..7ec4c6f3

--- /dev/null

+++ b/api-tests/tag/query_simple_stock_tags.http

@@ -0,0 +1,12 @@

+POST http://127.0.0.1:8090/api/work/query_simple_stock_tags

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "entity_ids": [

+ "stock_sz_002085",

+ "stock_sz_000099",

+ "stock_sz_002130"

+ ]

+}

\ No newline at end of file

diff --git a/api-tests/tag/query_stock_tag_stats.http b/api-tests/tag/query_stock_tag_stats.http

new file mode 100644

index 00000000..20f9b59b

--- /dev/null

+++ b/api-tests/tag/query_stock_tag_stats.http

@@ -0,0 +1,7 @@

+POST http://127.0.0.1:8090/api/work/query_stock_tag_stats

+accept: application/json

+Content-Type: application/json

+

+{

+ "stock_pool_name": "main_line"

+}

\ No newline at end of file

diff --git a/api-tests/tag/query_stock_tags.http b/api-tests/tag/query_stock_tags.http

new file mode 100644

index 00000000..a1e655d5

--- /dev/null

+++ b/api-tests/tag/query_stock_tags.http

@@ -0,0 +1,12 @@

+POST http://127.0.0.1:8090/api/work/query_stock_tags

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "entity_ids": [

+ "stock_sz_000099",

+ "stock_sz_002085",

+ "stock_sz_001696"

+ ]

+}

diff --git a/api-tests/tag/set_stock_tags.http b/api-tests/tag/set_stock_tags.http

new file mode 100644

index 00000000..39774a3d

--- /dev/null

+++ b/api-tests/tag/set_stock_tags.http

@@ -0,0 +1,17 @@

+POST http://127.0.0.1:8090/api/work/set_stock_tags

+accept: application/json

+Content-Type: application/json

+

+{

+ "entity_id": "stock_sz_001366",

+ "name": "播恩集团",

+ "main_tag": "医药",

+ "main_tag_reason": "合成生物概念",

+ "main_tags": {

+ "农业": "来自行业:农牧饲渔"

+ },

+ "sub_tag": "生物医药",

+ "sub_tag_reason": "合成生物概念",

+ "sub_tags": null,

+ "active_hidden_tags": null

+ }

\ No newline at end of file

diff --git a/api-tests/test.http b/api-tests/test.http

new file mode 100644

index 00000000..8b137891

--- /dev/null

+++ b/api-tests/test.http

@@ -0,0 +1 @@

+

diff --git a/api-tests/trading/build_query_stock_quote_setting.http b/api-tests/trading/build_query_stock_quote_setting.http

new file mode 100644

index 00000000..5ef65fc1

--- /dev/null

+++ b/api-tests/trading/build_query_stock_quote_setting.http

@@ -0,0 +1,8 @@

+POST http://127.0.0.1:8090/api/trading/build_query_stock_quote_setting

+accept: application/json

+Content-Type: application/json

+

+{

+ "stock_pool_name": "main_line",

+ "main_tags": ["低空经济","新能源"]

+}

\ No newline at end of file

diff --git a/api-tests/trading/build_trading_plan.http b/api-tests/trading/build_trading_plan.http

new file mode 100644

index 00000000..668df7c5

--- /dev/null

+++ b/api-tests/trading/build_trading_plan.http

@@ -0,0 +1,13 @@

+POST http://127.0.0.1:8090/api/trading/build_trading_plan

+accept: application/json

+Content-Type: application/json

+

+{

+ "stock_id": "stock_sz_300133",

+ "trading_date": "2024-04-23",

+ "expected_open_pct": 0.02,

+ "buy_price": 6.9,

+ "sell_price": null,

+ "trading_reason": "主线",

+ "trading_signal_type": "open_long"

+}

diff --git a/api-tests/trading/get_current_trading_plan.http b/api-tests/trading/get_current_trading_plan.http

new file mode 100644

index 00000000..12b434b4

--- /dev/null

+++ b/api-tests/trading/get_current_trading_plan.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/trading/get_current_trading_plan

+accept: application/json

+

+

+

diff --git a/api-tests/trading/get_future_trading_plan.http b/api-tests/trading/get_future_trading_plan.http

new file mode 100644

index 00000000..bdda5385

--- /dev/null

+++ b/api-tests/trading/get_future_trading_plan.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/trading/get_future_trading_plan

+accept: application/json

+

+

+

diff --git a/api-tests/trading/get_query_stock_quote_setting.http b/api-tests/trading/get_query_stock_quote_setting.http

new file mode 100644

index 00000000..8f4ec923

--- /dev/null

+++ b/api-tests/trading/get_query_stock_quote_setting.http

@@ -0,0 +1,5 @@

+GET http://127.0.0.1:8090/api/trading/get_query_stock_quote_setting

+accept: application/json

+

+

+

diff --git a/api-tests/trading/get_quote_stats.http b/api-tests/trading/get_quote_stats.http

new file mode 100644

index 00000000..abad3cbb

--- /dev/null

+++ b/api-tests/trading/get_quote_stats.http

@@ -0,0 +1,2 @@

+GET http://127.0.0.1:8090/api/trading/get_quote_stats

+accept: application/json

diff --git a/api-tests/trading/query_kdata.http b/api-tests/trading/query_kdata.http

new file mode 100644

index 00000000..9bf63c61

--- /dev/null

+++ b/api-tests/trading/query_kdata.http

@@ -0,0 +1,13 @@

+POST http://127.0.0.1:8090/api/trading/query_kdata

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "data_provider": "em",

+ "entity_ids": [

+ "stock_sz_002085",

+ "stock_sz_300133"

+ ],

+ "adjust_type": "hfq"

+}

diff --git a/api-tests/trading/query_stock_quotes.http b/api-tests/trading/query_stock_quotes.http

new file mode 100644

index 00000000..5805111a

--- /dev/null

+++ b/api-tests/trading/query_stock_quotes.http

@@ -0,0 +1,10 @@

+POST http://127.0.0.1:8090/api/trading/query_stock_quotes

+accept: application/json

+Content-Type: application/json

+

+{

+ "main_tag": "低空经济",

+ "stock_pool_name": "vol_up",

+ "limit": 500,

+ "order_by_field": "change_pct"

+}

diff --git a/api-tests/trading/query_tag_quotes.http b/api-tests/trading/query_tag_quotes.http

new file mode 100644

index 00000000..bfadce6f

--- /dev/null

+++ b/api-tests/trading/query_tag_quotes.http

@@ -0,0 +1,13 @@

+POST http://127.0.0.1:8090/api/trading/query_tag_quotes

+accept: application/json

+Content-Type: application/json

+

+{

+ "main_tags": [

+ "低空经济",

+ "半导体",

+ "化工",

+ "消费电子"

+ ],

+ "stock_pool_name": "main_line"

+}

diff --git a/api-tests/trading/query_trading_plan.http b/api-tests/trading/query_trading_plan.http

new file mode 100644

index 00000000..944ad194

--- /dev/null

+++ b/api-tests/trading/query_trading_plan.http

@@ -0,0 +1,12 @@

+POST http://127.0.0.1:8090/api/trading/query_trading_plan

+accept: application/json

+Content-Type: application/json

+

+{

+ "time_range": {

+ "relative_time_range": {

+ "interval": -30,

+ "time_unit": "day"

+ }

+ }

+}

diff --git a/api-tests/trading/query_ts.http b/api-tests/trading/query_ts.http

new file mode 100644

index 00000000..f91f7d6e

--- /dev/null

+++ b/api-tests/trading/query_ts.http

@@ -0,0 +1,11 @@

+POST http://127.0.0.1:8090/api/trading/query_ts

+accept: application/json

+Content-Type: application/json

+

+

+{

+ "entity_ids": [

+ "stock_sz_002085",

+ "stock_sz_300133"

+ ]

+}

diff --git a/build.sh b/build.sh

new file mode 100644

index 00000000..c6ac05d8

--- /dev/null

+++ b/build.sh

@@ -0,0 +1 @@

+python3 setup.py sdist bdist_wheel

\ No newline at end of file

diff --git a/docs/.nojekyll b/docs/.nojekyll

deleted file mode 100644

index e69de29b..00000000

diff --git a/docs/Makefile b/docs/Makefile

new file mode 100644

index 00000000..d0c3cbf1

--- /dev/null

+++ b/docs/Makefile

@@ -0,0 +1,20 @@

+# Minimal makefile for Sphinx documentation

+#

+

+# You can set these variables from the command line, and also

+# from the environment for the first two.

+SPHINXOPTS ?=

+SPHINXBUILD ?= sphinx-build

+SOURCEDIR = source

+BUILDDIR = build

+

+# Put it first so that "make" without argument is like "make help".

+help:

+ @$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

+

+.PHONY: help Makefile

+

+# Catch-all target: route all unknown targets to Sphinx using the new

+# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

+%: Makefile

+ @$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

diff --git a/docs/_navbar.md b/docs/_navbar.md

deleted file mode 100644

index 8bab00f4..00000000

--- a/docs/_navbar.md

+++ /dev/null

@@ -1,4 +0,0 @@

-

-

-* [中文](/)

-* [english](/en/)

\ No newline at end of file

diff --git a/docs/_sidebar.md b/docs/_sidebar.md

deleted file mode 100644

index 89e88935..00000000

--- a/docs/_sidebar.md

+++ /dev/null

@@ -1,14 +0,0 @@

-- 入坑

- - [简介](intro.md "zvt intro")

- - [快速开始](quick-start.md "zvt quick start")

-- 数据

- - [数据总览](data_overview.md "zvt data overview")

- - [数据列表](data_list.md "zvt data list")

- - [数据更新](data_recorder.md "zvt data recorder")

- - [数据使用](data_usage.md "zvt data usage")

- - [数据扩展](data_extending.md "zvt data extending")

-- 计算

- - [因子计算](factor.md "zvt factor")

- - [回测通知](trader.md "zvt trader")

-- [设计哲学](design-philosophy.md "zvt design philosophy")

-- [支持项目](donate.md "donate for zvt")

diff --git a/docs/arch.png b/docs/arch.png

deleted file mode 100644

index fcb3e80a..00000000

Binary files a/docs/arch.png and /dev/null differ

diff --git a/docs/architecture.eddx b/docs/architecture.eddx

deleted file mode 100644

index 8b2dedf6..00000000

Binary files a/docs/architecture.eddx and /dev/null differ

diff --git a/docs/architecture.png b/docs/architecture.png

deleted file mode 100644

index ccdfe9b8..00000000

Binary files a/docs/architecture.png and /dev/null differ

diff --git a/docs/data.eddx b/docs/data.eddx

deleted file mode 100644

index 71a125f8..00000000

Binary files a/docs/data.eddx and /dev/null differ

diff --git a/docs/data.png b/docs/data.png

deleted file mode 100644

index 71fa714d..00000000

Binary files a/docs/data.png and /dev/null differ

diff --git a/docs/data_extending.md b/docs/data_extending.md

deleted file mode 100644

index 51c22ed7..00000000

--- a/docs/data_extending.md

+++ /dev/null

@@ -1,266 +0,0 @@

-## 数据扩展要点

-

-* zvt里面只有两种数据,EntityMixin和Mixin

-

- EntityMixin为投资标的信息,Mixin为其发生的事。任何一个投资品种,首先是定义EntityMixin,然后是其相关的Mixin。

- 比如Stock(EntityMixin),及其相关的BalanceSheet,CashFlowStatement(Mixin)等。

-

-* zvt的数据可以记录(record_data方法)

-

- 记录数据可以通过扩展以下类来实现:

-

- * Recorder

-

- 最基本的类,实现了关联data_schema和recorder的功能。记录EntityMixin一般继承该类。

-

- * RecorderForEntities

-

- 实现了初始化需要记录的**投资标的列表**的功能,有了标的,才能记录标的发生的事。

-

- * TimeSeriesDataRecorder

-

- 实现了增量记录,实时和非实时数据处理的功能。

-

- * FixedCycleDataRecorder

-

- 实现了计算固定周期数据剩余size的功能。

-

- * TimestampsDataRecorder

-

- 实现记录时间集合可知的数据记录功能。

-

-继承Recorder必须指定data_schema和provider两个字段,系统通过python meta programing的方式对data_schema和recorder class进行了关联:

-```

-class Meta(type):

- def __new__(meta, name, bases, class_dict):

- cls = type.__new__(meta, name, bases, class_dict)

- # register the recorder class to the data_schema

- if hasattr(cls, 'data_schema') and hasattr(cls, 'provider'):

- if cls.data_schema and issubclass(cls.data_schema, Mixin):

- print(f'{cls.__name__}:{cls.data_schema.__name__}')

- cls.data_schema.register_recorder_cls(cls.provider, cls)

- return cls

-

-

-class Recorder(metaclass=Meta):

- logger = logging.getLogger(__name__)

-

- # overwrite them to setup the data you want to record

- provider: str = None

- data_schema: Mixin = None

-```

-

-

-下面以**个股估值数据**为例对具体步骤做一个说明。

-

-## 1. 定义数据

-在domain package(或者其子package)下新建一个文件(module)valuation.py,内容如下:

-```

-# -*- coding: utf-8 -*-

-from sqlalchemy import Column, String, Float

-from sqlalchemy.ext.declarative import declarative_base

-

-from zvdata import Mixin

-from zvdata.contract import register_schema

-

-ValuationBase = declarative_base()

-

-

-class StockValuation(ValuationBase, Mixin):

- __tablename__ = 'stock_valuation'

-

- code = Column(String(length=32))

- name = Column(String(length=32))

- # 总股本(股)

- capitalization = Column(Float)

- # 公司已发行的普通股股份总数(包含A股,B股和H股的总股本)

- circulating_cap = Column(Float)

- # 市值

- market_cap = Column(Float)

- # 流通市值

- circulating_market_cap = Column(Float)

- # 换手率

- turnover_ratio = Column(Float)

- # 静态pe

- pe = Column(Float)

- # 动态pe

- pe_ttm = Column(Float)

- # 市净率

- pb = Column(Float)

- # 市销率

- ps = Column(Float)

- # 市现率

- pcf = Column(Float)

-

-

-class EtfValuation(ValuationBase, Mixin):

- __tablename__ = 'etf_valuation'

-

- code = Column(String(length=32))

- name = Column(String(length=32))

- # 静态pe

- pe = Column(Float)

- # 动态pe

- pe_ttm = Column(Float)

- # 市净率

- pb = Column(Float)

- # 市销率

- ps = Column(Float)

- # 市现率

- pcf = Column(Float)

-

-

-register_schema(providers=['joinquant'], db_name='valuation', schema_base=ValuationBase)

-

-__all__ = ['StockValuation', 'EtfValuation']

-

-```

-将其分解为以下步骤:

-### 1.1 数据库base

-```

-ValuationBase = declarative_base()

-```

-一个数据库可有多个table(schema),table(schema)应继承自该类

-

-### 1.2 table(schema)的定义

-```

-class StockValuation(ValuationBase, Mixin):

- __tablename__ = 'stock_valuation'

-

- code = Column(String(length=32))

- name = Column(String(length=32))

- # 总股本(股)

- capitalization = Column(Float)

- # 公司已发行的普通股股份总数(包含A股,B股和H股的总股本)

- circulating_cap = Column(Float)

- # 市值

- market_cap = Column(Float)

- # 流通市值

- circulating_market_cap = Column(Float)

- # 换手率

- turnover_ratio = Column(Float)

- # 静态pe

- pe = Column(Float)

- # 动态pe

- pe_ttm = Column(Float)

- # 市净率

- pb = Column(Float)

- # 市销率

- ps = Column(Float)

- # 市现率

- pcf = Column(Float)

-

-

-class EtfValuation(ValuationBase, Mixin):

- __tablename__ = 'etf_valuation'

-

- code = Column(String(length=32))

- name = Column(String(length=32))

- # 静态pe

- pe = Column(Float)

- # 动态pe

- pe_ttm = Column(Float)

- # 市净率

- pb = Column(Float)

- # 市销率

- ps = Column(Float)

- # 市现率

- pcf = Column(Float)

-```

-这里定义了两个table(schema),继承ValuationBase表明其隶属的数据库,继承Mixin让其获得zvt统一的字段和方法。

-schema里面的__tablename__为表名。

-### 1.3 注册数据

-```

-register_schema(providers=['joinquant'], db_name='valuation', schema_base=ValuationBase)

-

-__all__ = ['StockValuation', 'EtfValuation']

-```

-register_schema会将数据注册到zvt的数据系统中,providers为数据的提供商列表,db_name为数据库名字标识,schema_base为上面定义的数据库base。

-

-__all__为该module定义的数据结构,为了使得整个系统的数据依赖干净明确,所有的module都应该手动定义该字段。

-

-

-## 2 实现相应的recorder

-```

-# -*- coding: utf-8 -*-

-

-import pandas as pd

-from jqdatasdk import auth, logout, query, valuation, get_fundamentals_continuously

-

-from zvdata.api import df_to_db

-from zvdata.recorder import TimeSeriesDataRecorder

-from zvdata.utils.time_utils import now_pd_timestamp, now_time_str, to_time_str

-from zvt import zvt_config

-from zvt.domain import Stock, StockValuation, EtfStock

-from zvt.recorders.joinquant.common import to_jq_entity_id

-

-

-class JqChinaStockValuationRecorder(TimeSeriesDataRecorder):
-    entity_provider = 'joinquant'
-    entity_schema = Stock
-
-    # data comes from JoinQuant (jq)
-    provider = 'joinquant'
-
-    data_schema = StockValuation
-
-    def __init__(self, entity_type='stock', exchanges=['sh', 'sz'], entity_ids=None, codes=None, day_data=False, batch_size=10,
-                 force_update=False, sleeping_time=5, default_size=2000, real_time=False, fix_duplicate_way='add',
-                 start_timestamp=None, end_timestamp=None, close_hour=0, close_minute=0) -> None:
-        super().__init__(entity_type, exchanges, entity_ids, codes, day_data, batch_size, force_update, sleeping_time,
-                         default_size, real_time, fix_duplicate_way, start_timestamp, end_timestamp, close_hour,
-                         close_minute)
-        # log in to JoinQuant once per recorder instance
-        auth(zvt_config['jq_username'], zvt_config['jq_password'])
-
-    def on_finish(self):
-        super().on_finish()
-        logout()
-
-    def record(self, entity, start, end, size, timestamps):
-        q = query(
-            valuation
-        ).filter(
-            valuation.code == to_jq_entity_id(entity)
-        )
-        # request one row per day from the last recorded timestamp up to now
-        count: pd.Timedelta = now_pd_timestamp() - start
-        df = get_fundamentals_continuously(q, end_date=now_time_str(), count=count.days + 1, panel=False)
-        df['entity_id'] = entity.id
-        df['timestamp'] = pd.to_datetime(df['day'])
-        df['code'] = entity.code
-        df['name'] = entity.name
-        df['id'] = df['timestamp'].apply(lambda x: "{}_{}".format(entity.id, to_time_str(x)))
-        df = df.rename({'pe_ratio_lyr': 'pe',
-                        'pe_ratio': 'pe_ttm',
-                        'pb_ratio': 'pb',
-                        'ps_ratio': 'ps',
-                        'pcf_ratio': 'pcf'},
-                       axis='columns')
-
-        # scale market values and share counts to base units;
-        # each column is scaled exactly once
-        df['market_cap'] = df['market_cap'] * 100000000
-        df['circulating_market_cap'] = df['circulating_market_cap'] * 100000000
-        df['capitalization'] = df['capitalization'] * 10000
-        df['circulating_cap'] = df['circulating_cap'] * 10000
-        df_to_db(df=df, data_schema=self.data_schema, provider=self.provider, force_update=self.force_update)
-
-        return None

-

-__all__ = ['JqChinaStockValuationRecorder']

-```
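The per-row normalization done in `record` can be illustrated without pandas. The following is only a sketch: it assumes the provider reports `market_cap` and `circulating_market_cap` in units of 1e8 and `capitalization` and `circulating_cap` in units of 1e4 (inferred from the multipliers in the recorder), and the helper name `normalize_valuation_row` and the entity id `stock_sz_000001` are hypothetical.

```python
from datetime import date

# Assumed source units: market values in 1e8, share counts in 1e4.
UNIT_SCALE = {
    "market_cap": 100_000_000,
    "circulating_market_cap": 100_000_000,
    "capitalization": 10_000,
    "circulating_cap": 10_000,
}

# Provider column name -> schema column name, mirroring the rename in record().
RENAMES = {
    "pe_ratio_lyr": "pe",
    "pe_ratio": "pe_ttm",
    "pb_ratio": "pb",
    "ps_ratio": "ps",
    "pcf_ratio": "pcf",
}


def normalize_valuation_row(row: dict, entity_id: str) -> dict:
    """Rename provider fields, scale units once, and attach a deterministic id."""
    out = {}
    for key, value in row.items():
        name = RENAMES.get(key, key)
        out[name] = value * UNIT_SCALE[name] if name in UNIT_SCALE else value
    day: date = row["day"]
    out["entity_id"] = entity_id
    out["timestamp"] = day
    # Same "<entity_id>_<date>" shape that the recorder builds for its primary key.
    out["id"] = "{}_{}".format(entity_id, day.isoformat())
    return out


raw = {"day": date(2020, 1, 2), "market_cap": 2.5, "capitalization": 300.0, "pe_ratio": 12.0}
normalized = normalize_valuation_row(raw, "stock_sz_000001")
```

Because the id is derived only from the entity and the date, writing the same day twice yields the same key, which is what makes de-duplication on insert possible.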

-

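-# 3. Capabilities gained
-
-# 4. How the recorder works
-Turning the data supplied by each provider (or scraped yourself) **into** data that conforms to the data schema requires getting the following right:
-* Initialize the entities to fetch
-Fetch a single entity first to debug, then fetch the full set of entities
-* Resume from where the last fetch stopped
-Fetch incrementally to avoid unnecessary requests
-* Wrap the common request patterns
-A request for time-series data is just some combination of start, end, size, and time list
-* De-duplicate automatically
-* Allow the fetch rate to be configured
-* Provide a callback that runs when fetching finishes
-This makes it easy to validate data and to fill gaps from multiple providers
-
-The flowchart is as follows: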
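The points above can be sketched as a toy recorder. This is a minimal illustration under stated assumptions, not zvt's implementation: `SketchRecorder`, `fake_fetch`, and the in-memory `store` are hypothetical names, and integer timestamps stand in for real dates.

```python
import time
from typing import Callable, Dict, Iterable, List, Optional, Tuple

Row = Tuple[str, int, float]  # (entity_id, timestamp, value)


class SketchRecorder:
    def __init__(self,
                 fetch: Callable[[str, Optional[int]], Iterable[Row]],
                 entity_ids: Iterable[str],
                 sleeping_time: float = 0.0,
                 on_finish: Optional[Callable[["SketchRecorder"], None]] = None) -> None:
        self.fetch = fetch
        self.entity_ids = list(entity_ids)  # entities to fetch; pass one id to debug
        self.sleeping_time = sleeping_time  # pause between entities (fetch rate)
        self.on_finish = on_finish          # callback once every entity is done
        self.store: Dict[str, Row] = {}     # keyed by "<entity_id>_<timestamp>"

    def latest_timestamp(self, entity_id: str) -> Optional[int]:
        stamps = [ts for (eid, ts, _) in self.store.values() if eid == entity_id]
        return max(stamps) if stamps else None

    def run(self) -> None:
        for entity_id in self.entity_ids:
            # Resume from the last stored point instead of refetching everything.
            start = self.latest_timestamp(entity_id)
            for row in self.fetch(entity_id, start):
                key = "{}_{}".format(row[0], row[1])
                self.store.setdefault(key, row)  # an existing key is kept: de-duplication
            time.sleep(self.sleeping_time)
        if self.on_finish is not None:
            self.on_finish(self)


# A fake provider: returns rows at or after `start`, so the boundary row overlaps.
SOURCE: Dict[str, List[Row]] = {"a": [("a", 1, 10.0), ("a", 2, 11.0), ("a", 3, 12.0)]}
calls: List[Tuple[str, Optional[int]]] = []


def fake_fetch(entity_id: str, start: Optional[int]) -> List[Row]:
    calls.append((entity_id, start))
    return [r for r in SOURCE[entity_id] if start is None or r[1] >= start]


finished: List[int] = []
recorder = SketchRecorder(fake_fetch, ["a"], on_finish=lambda r: finished.append(len(r.store)))
recorder.run()                      # first run fetches the full history
SOURCE["a"].append(("a", 4, 13.0))
recorder.run()                      # second run resumes from timestamp 3
```

The second run asks the provider only for data from the last stored timestamp onward, and the overlapping boundary row is dropped by the keyed store rather than written twice.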

-

| db | schema | update method | download address |
|---|---|---|---|
| stock_meta | Stock | Stock.record_data(provider='joinquant')<br>Stock.record_data(provider='eastmoney') | wait... |
| | Block | Block.record_data(provider='sina')<br>Block.record_data(provider='eastmoney') | |
| | BlockStock | BlockStock.record_data(provider='sina')<br>BlockStock.record_data(provider='eastmoney') | |
| | Etf | Etf.record_data(provider='joinquant') | |
| | EtfStock | EtfStock.record_data(provider='joinquant') | |
| | Index | Index.record_data | |
| | IndexStock | IndexStock.record_data | |
| | StockDetail | StockDetail.record_data(provider='eastmoney') | |

-

- -

-## 🤝Contact
-
-QQ group: 300911873
-
-Personal WeChat: foolcage (verification phrase when adding: zvt)

-


-------

-WeChat official account:


-------

-Zhihu column:

-https://zhuanlan.zhihu.com/automoney

diff --git a/docs/en/README.md b/docs/en/README.md

deleted file mode 100644

index 5f58984a..00000000

--- a/docs/en/README.md

+++ /dev/null

@@ -1,28 +0,0 @@

-[](https://github.com/zvtvz/zvt)

-[](https://pypi.org/project/zvt/)

-[](https://travis-ci.org/zvtvz/zvt)

-[](https://codecov.io/github/zvtvz/zvt)

-## what's zvt?

-

-ZVT is a quant trading platform written after rethinking [fooltrader](https://github.com/foolcage/fooltrader). It includes a scalable data recorder, API, factor calculation, stock picking, backtesting, and trading, and is a full-market analysis and trading framework focused on **low-frequency**, **multi-level**, **multi-factor**, **multi-target** strategies.

-

-## what else can ZVT be?

-

- - Literally, ZVT stands for zero vector trader: the market is the result of many vectors acting together, and only by being a zero vector yourself can you see the market clearly.
- - In terms of shape, the letters Z, V, and T coincide with typical market patterns, which hints at the importance of market geometry.
- - The meaning of the zvt icon is left for you to interpret.

-

-

-
