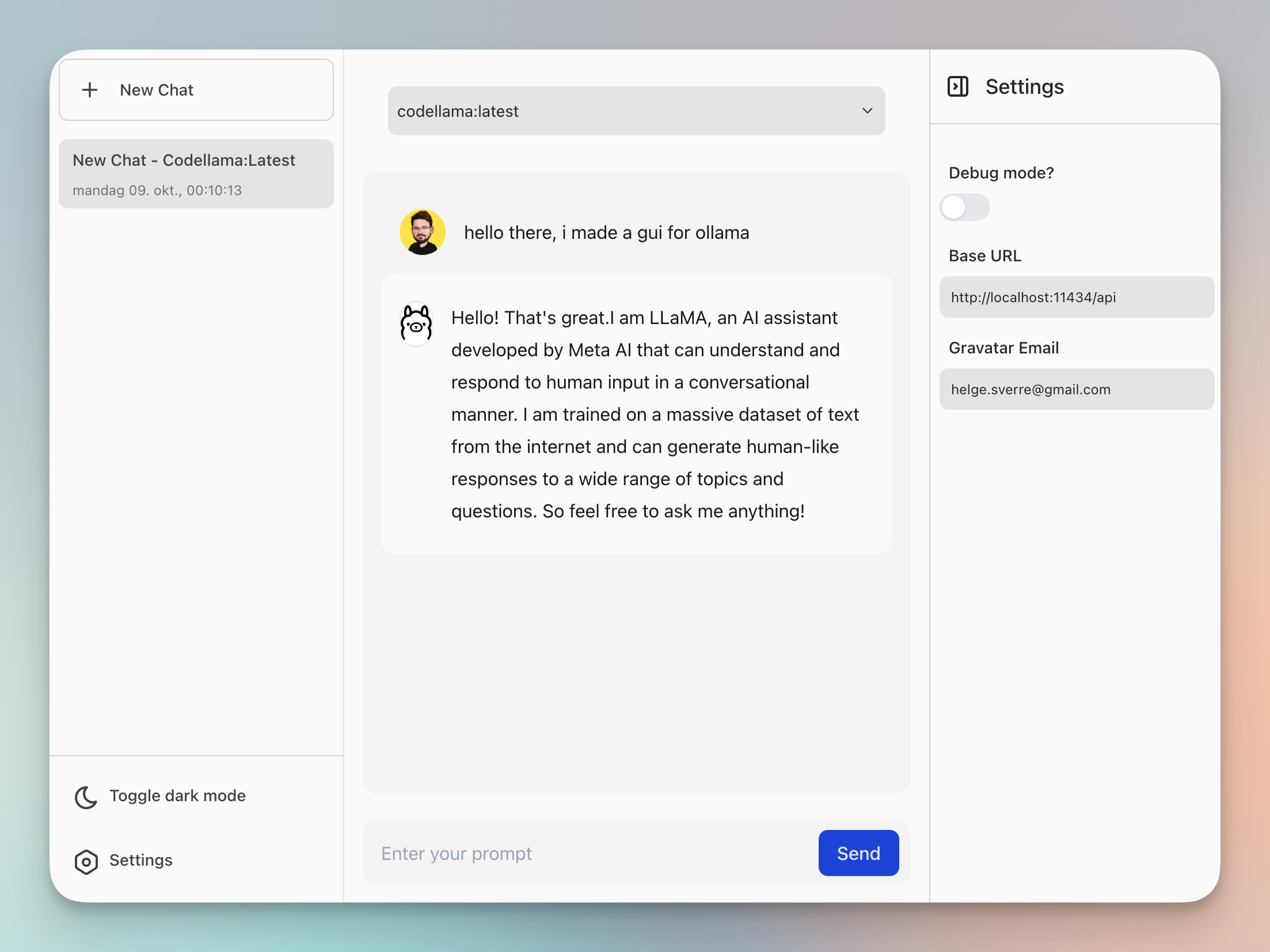

Ollama GUI is a web interface for ollama.ai, a tool that enables running Large Language Models (LLMs) on your local machine.

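- Download and install the ollama CLI, then pull a model and start the Ollama server:

  ```shell
  ollama pull <model-name>
  ollama serve
  ```

- Clone the repository and start the development server:

  ```shell
  git clone https://github.com/HelgeSverre/ollama-gui.git
  cd ollama-gui
  yarn install
  yarn dev
  ```

Or use the hosted web version at https://ollama-gui.vercel.app. Because that page is served from a different origin than your local Ollama server, start Ollama with `OLLAMA_ORIGINS` set so it accepts requests from that origin (see the Ollama docs):

```shell
OLLAMA_ORIGINS=https://ollama-gui.vercel.app ollama serve
```

For convenience and copy-pastability, here is a table of interesting models you might want to try out.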

For a complete list of models Ollama supports, go to ollama.ai/library.

| Model | Parameters | Size | Download |
|---|---|---|---|
| Mistral | 7B | 4.1GB | `ollama pull mistral` |
| Mistral (instruct) | 7B | 4.1GB | `ollama pull mistral:7b-instruct` |
| Llama 2 | 7B | 3.8GB | `ollama pull llama2` |
| Code Llama | 7B | 3.8GB | `ollama pull codellama` |
| Llama 2 Uncensored | 7B | 3.8GB | `ollama pull llama2-uncensored` |
| Orca Mini | 3B | 1.9GB | `ollama pull orca-mini` |
| Falcon | 7B | 3.8GB | `ollama pull falcon` |
| Vicuna | 7B | 3.8GB | `ollama pull vicuna` |
| Vicuna (16K context) | 7B | 3.8GB | `ollama pull vicuna:7b-16k` |
| Vicuna (16K context) | 13B | 7.4GB | `ollama pull vicuna:13b-16k` |
| NexusRaven | 13B | 7.4GB | `ollama pull nexusraven` |
| StarCoder | 7B | 4.3GB | `ollama pull starcoder:7b` |
| WizardLM Uncensored | 13B | 7.4GB | `ollama pull wizardlm-uncensored` |

Planned improvements:

- Properly format newlines in chat messages (PHP-land has `nl2br`; we basically want the same thing)
- Store chat history locally using IndexedDB
- Clean up the code; I made a mess of it for the sake of speed and getting something out the door
- Add a Markdown parsing library
- Allow browsing and installation of available models (library)
- Ensure mobile responsiveness (not a prioritized use case at the moment)
- Add file uploads (with OCR, etc.)
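The first planned item refers to PHP's `nl2br`. Below is a minimal TypeScript sketch of an equivalent, assuming chat messages are plain text that gets rendered as HTML. The function names `escapeHtml` and `nl2br` are hypothetical and not part of this project's code. Unlike PHP's `nl2br`, this version also escapes HTML first, so text from the user or the model cannot inject markup.

```typescript
// Hypothetical helper: escape HTML special characters so that text from the
// user or the model cannot inject markup before <br /> tags are added.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;") // must run first so later entities are not double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical nl2br equivalent: like PHP's nl2br(), insert "<br />" before
// every line break (\r\n, \n\r, \r or \n) and keep the original newline characters.
function nl2br(text: string): string {
  return escapeHtml(text).replace(/(\r\n|\n\r|\r|\n)/g, "<br />$1");
}

// Example: nl2br("Hello\nworld") returns "Hello<br />\nworld"
console.log(nl2br("Hello\nworld"));
```

In a Vue template the result could be rendered with `v-html`. A simpler alternative that avoids raw HTML entirely is to keep the message as plain text and apply `white-space: pre-wrap` (Tailwind's `whitespace-pre-wrap` class) to the message element, which preserves newlines as typed.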

Tools and libraries used:

- Ollama.ai: CLI tool for models
- LangUI
- Vue.js
- Vite
- Tailwind CSS
- VueUse
- @tabler/icons-vue

Licensed under the MIT License. See the LICENSE.md file for details.