The official implementation of the paper "Vision Transformer Adapter for Dense Predictions".

(2022/06/02) Detection is released and segmentation will come soon.

(2022/05/17) ViT-Adapter-L yields 60.1 box AP and 52.1 mask AP on COCO test-dev.

(2022/05/12) ViT-Adapter-L reaches 85.2 mIoU on Cityscapes test set without coarse data.

(2022/05/05) ViT-Adapter-L achieves the SOTA on ADE20K val set with 60.5 mIoU!

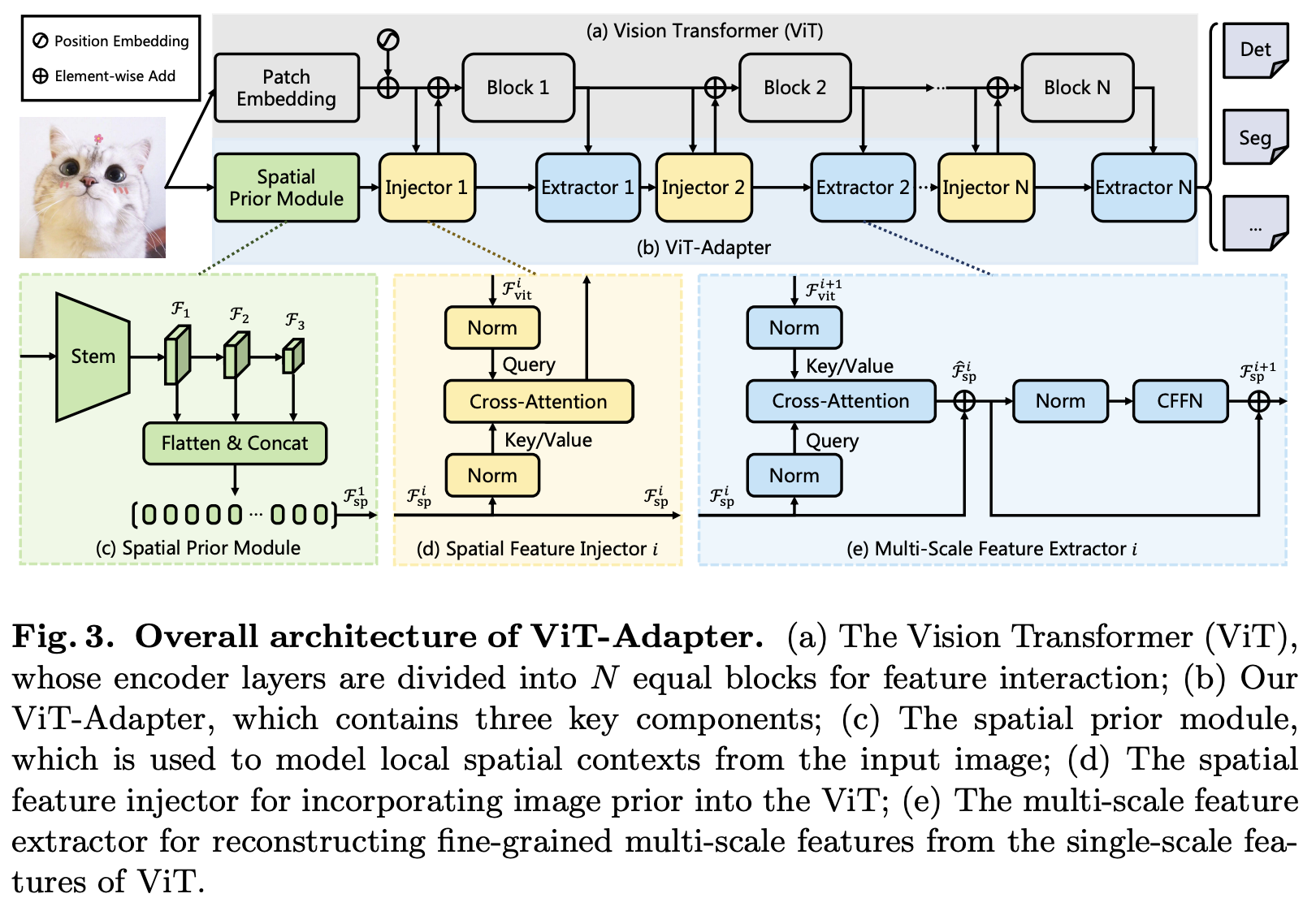

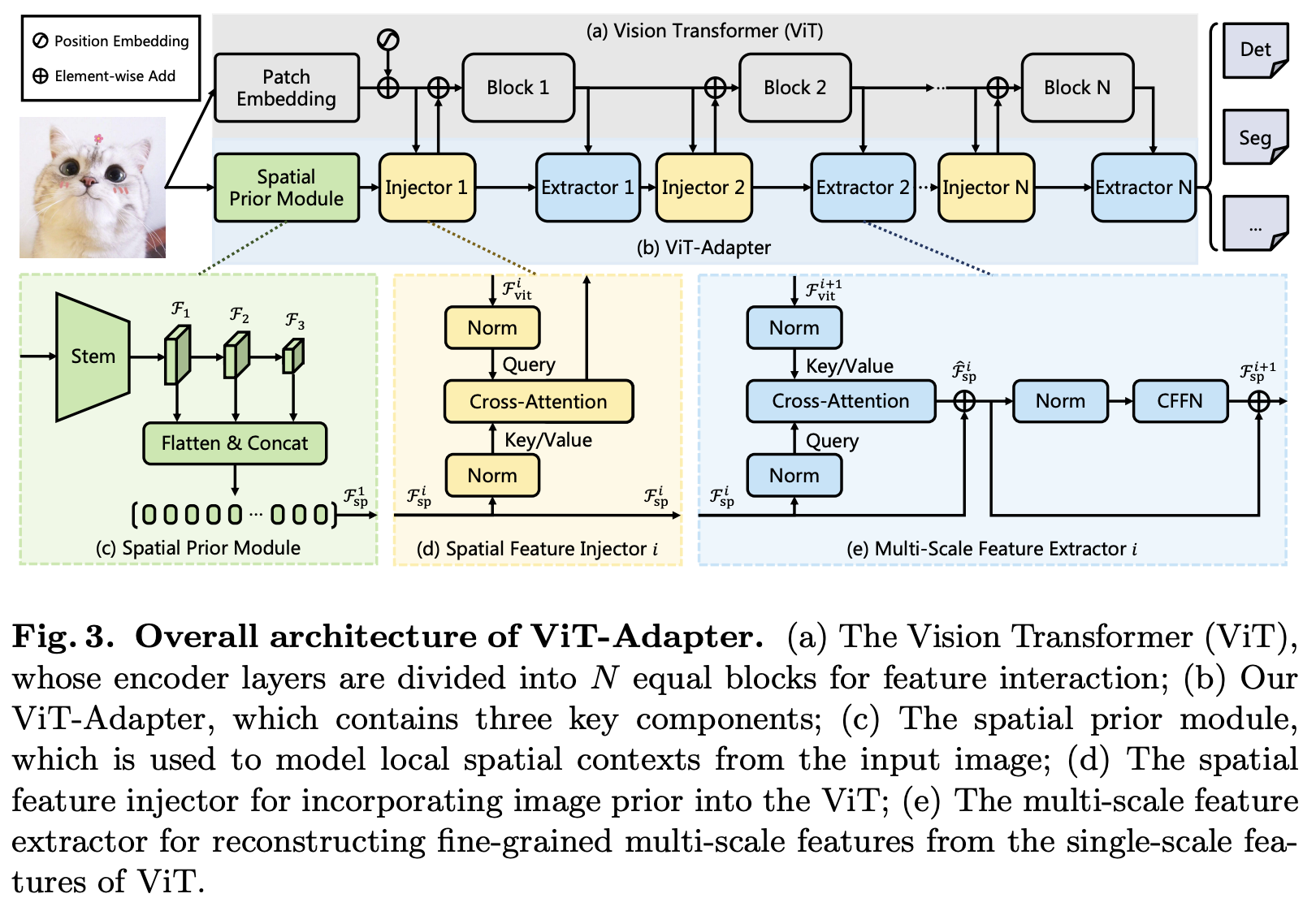

This work investigates a simple yet powerful adapter for Vision Transformer (ViT). Unlike recent visual transformers that introduce vision-specific inductive biases into their architectures, ViT achieves inferior performance on dense prediction tasks due to lacking prior information of images. To solve this issue, we propose a Vision Transformer Adapter (ViT-Adapter), which can remedy the defects of ViT and achieve comparable performance to vision-specific models by introducing inductive biases via an additional architecture. Specifically, the backbone in our framework is a vanilla transformer that can be pre-trained with multi-modal data. When fine-tuning on downstream tasks, a modality-specific adapter is used to introduce the data and tasks' prior information into the model, making it suitable for these tasks. We verify the effectiveness of our ViT-Adapter on multiple downstream tasks, including object detection, instance segmentation, and semantic segmentation. Notably, when using HTC++, our ViT-Adapter-L yields 60.1 box AP and 52.1 mask AP on COCO test-dev, surpassing Swin-L by 1.4 box AP and 1.0 mask AP. For semantic segmentation, our ViT-Adapter-L establishes a new state-of-the-art of 60.5 mIoU on ADE20K val. We hope that the proposed ViT-Adapter could serve as an alternative for vision-specific transformers and facilitate future research.

| Method |

Framework |

Pre-train |

Lr schd |

box AP |

mask AP |

#Param |

| ViT-Adapter-L |

HTC++ |

BEiT |

3x |

58.5 |

50.8 |

401M |

| ViT-Adapter-L (MS) |

HTC++ |

BEiT |

3x |

60.1 |

52.1 |

401M |

| Method |

Framework |

Pre-train |

Iters |

Crop Size |

mIoU |

+MS |

#Param |

| ViT-Adapter-L |

UperNet |

BEiT |

160k |

640 |

58.0 |

58.4 |

451M |

| ViT-Adapter-L |

Mask2Former |

BEiT |

160k |

640 |

58.3 |

59.0 |

568M |

| ViT-Adapter-L |

Mask2Former |

COCO-Stuff-164k |

80k |

896 |

59.4 |

60.5 |

571M |

| Method |

Framework |

Pre-train |

Iters |

Crop Size |

val mIoU |

val/test +MS |

#Param |

| ViT-Adapter-L |

Mask2Former |

Mapillary |

80k |

896 |

84.9 |

85.8/85.2 |

571M |

| Method |

Framework |

Pre-train |

Iters |

Crop Size |

mIoU |

+MS |

#Param |

| ViT-Adapter-L |

UperNet |

BEiT |

80k |

512 |

51.0 |

51.4 |

451M |

| ViT-Adapter-L |

Mask2Former |

BEiT |

40k |

512 |

53.2 |

54.2 |

568M |

| Method |

Framework |

Pre-train |

Iters |

Crop Size |

mIoU |

+MS |

#Param |

| ViT-Adapter-L |

UperNet |

BEiT |

80k |

480 |

67.0 |

67.5 |

451M |

| ViT-Adapter-L |

Mask2Former |

BEiT |

40k |

480 |

67.8 |

68.2 |

568M |

| Method |

Framework |

Pre-train |

Lr schd |

Aug |

box AP |

mask AP |

#Param |

| ViT-Adapter-T |

Mask R-CNN |

DeiT |

3x |

Yes |

46.0 |

41.0 |

28M |

| ViT-Adapter-S |

Mask R-CNN |

DeiT |

3x |

Yes |

48.2 |

42.8 |

48M |

| ViT-Adapter-B |

Mask R-CNN |

DeiT |

3x |

Yes |

49.6 |

43.6 |

120M |

| ViT-Adapter-L |

Mask R-CNN |

AugReg |

3x |

Yes |

50.9 |

44.8 |

348M |

| Method |

Framework |

Pre-train |

Lr schd |

Aug |

box AP |

mask AP |

#Param |

| ViT-Adapter-S |

Cascade Mask R-CNN |

DeiT |

3x |

Yes |

51.5 |

44.5 |

86M |

| ViT-Adapter-S |

ATSS |

DeiT |

3x |

Yes |

49.6 |

- |

36M |

| ViT-Adapter-S |

GFL |

DeiT |

3x |

Yes |

50.0 |

- |

36M |

| ViT-Adapter-S |

Sparse R-CNN |

DeiT |

3x |

Yes |

48.1 |

- |

110M |

| ViT-Adapter-B |

Upgraded Mask R-CNN |

MAE |

25ep |

LSJ |

50.3 |

44.7 |

122M |

| ViT-Adapter-B |

Upgraded Mask R-CNN |

MAE |

50ep |

LSJ |

50.8 |

45.1 |

122M |

| Method |

Framework |

Pre-train |

Iters |

Crop Size |

mIoU |

+MS |

#Param |

| ViT-Adapter-T |

UperNet |

DeiT |

160k |

512 |

42.6 |

43.6 |

36M |

| ViT-Adapter-S |

UperNet |

DeiT |

160k |

512 |

46.6 |

47.4 |

58M |

| ViT-Adapter-B |

UperNet |

DeiT |

160k |

512 |

48.1 |

49.2 |

134M |

| ViT-Adapter-B |

UperNet |

AugReg |

160k |

512 |

51.9 |

52.5 |

134M |

| ViT-Adapter-L |

UperNet |

AugReg |

160k |

512 |

53.4 |

54.4 |

364M |

If this work is helpful for your research, please consider citing the following BibTeX entry.

@article{chen2021vitadapter,

title={Vision Transformer Adapter for Dense Predictions},

author={Chen, Zhe and Duan, Yuchen and Wang, Wenhai and He, Junjun and Lu, Tong and Dai, Jifeng and Qiao, Yu},

journal={arXiv preprint arXiv:2205.08534},

year={2022}

}

This repository is released under the Apache 2.0 license as found in the LICENSE file.