Delight is a free and cross-platform Spark UI replacement with new metrics and visualizations that will delight you!

The Delight web dashboard lists your completed Spark applications with high-level information and metrics.

When you click on a specific line, you can view Executor CPU Metrics aligned with a timeline of your Spark jobs and stages, so you can easily identify the performance bottleneck of your application.

For example, Delight made it obvious that this application (left) suffered from slow shuffle. After using instances with mounted local SSDs (right), the application performance improved by over 10x.

- June 2020: Project starts with a widely shared blog post detailing our vision.

- November 2020: First release. A dashboard with one-click access to a Hosted Spark History Server (Spark UI).

- March 2021: Release of the overview screen with Executor CPU metrics and Spark timeline.

- Coming Next: Executor Memory Metrics, Stage page, Executor Page.

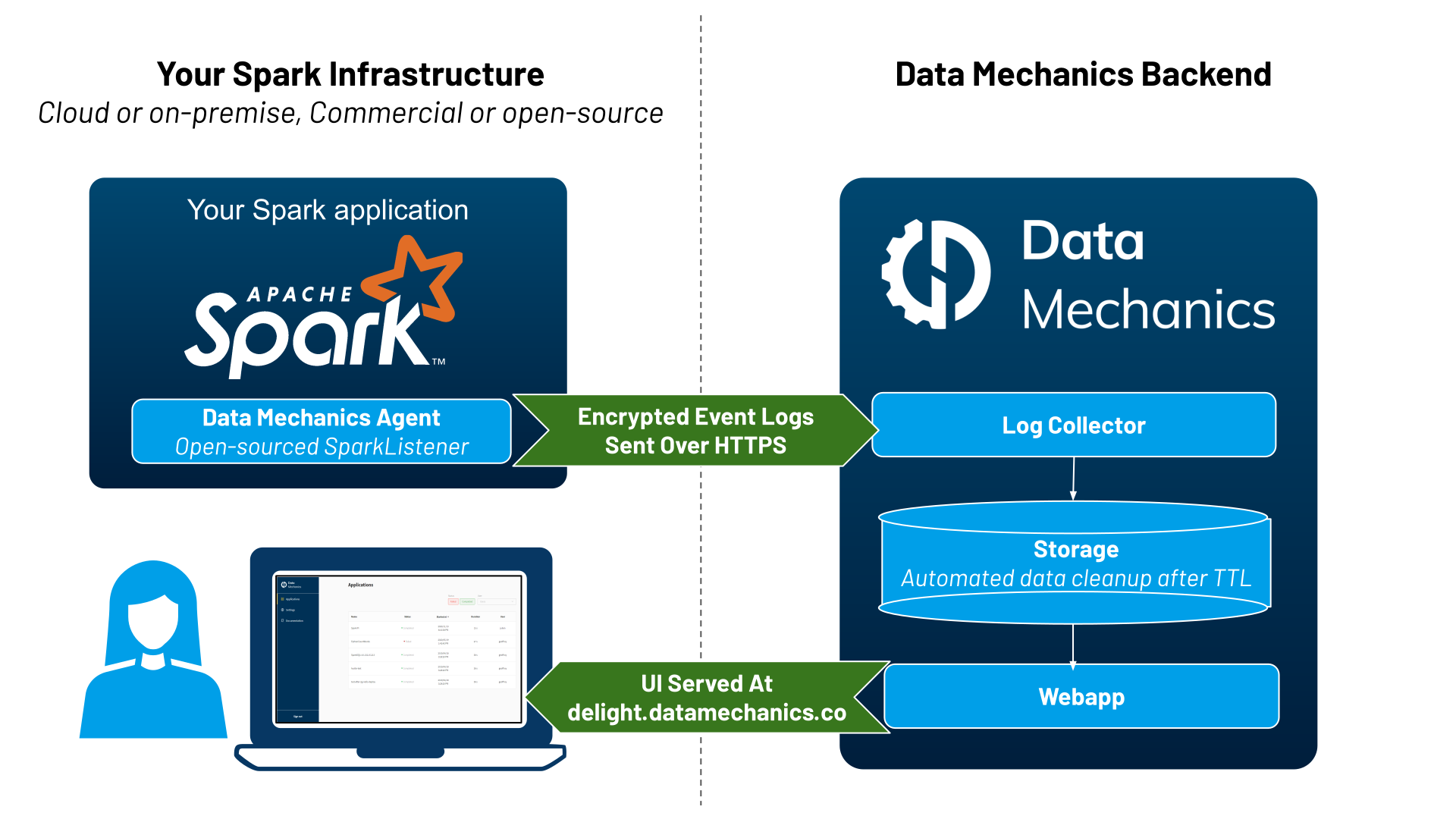

Delight consists of an open-source SparkListener that runs inside your Spark applications and is very simple to install.

This agent streams Spark events to our servers. These are not your application logs; they are non-sensitive metadata about your Spark application's execution: how long each task took, how much data was read and written, how much memory was used, etc. In particular, Spark events do not contain personally identifiable information. Here's a sample Spark event and a full Spark event log.

You can then access the Spark UI for all your Spark applications through our website.

To use Delight:

- Create an account and generate an access token on our website. To share a single dashboard with your colleagues, sign up with your company's Google account.

- Follow the installation instructions below for your platform.

Here are the available instructions:

- Local run with the `spark-submit` CLI

- Generic instructions for the `spark-submit` CLI

- AWS EMR

- Google Cloud Dataproc

- Spark on Kubernetes operator

- Databricks

- Apache Livy

Delight is compatible with Spark 2.4.0 through Spark 3.1.1, using the following Maven coordinates:

`co.datamechanics:delight_<replace-with-your-scala-version-2.11-or-2.12>:latest-SNAPSHOT`

We also maintain a version compatible with Spark 2.3.x, available under the following Maven coordinates:

`co.datamechanics:delight_2.11:2.3-latest-SNAPSHOT`

Delight is compatible with PySpark. Even if you use Python, you still need to determine the Scala version of your Spark distribution and fill in the placeholder in the Maven coordinates above!
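To make the version rules above concrete, here is a minimal Python sketch that picks the coordinates from a Spark version and the Scala version it was built with. The function names `delight_coordinates` and `_version_tuple` are hypothetical helpers for illustration. The sketch assumes plain numeric version strings such as `"3.0.1"`, and it encodes only the rules stated above: Spark 2.3.x uses the dedicated Scala 2.11 build, and Spark 2.4.0 through 3.1.1 uses the Scala 2.11 or 2.12 artifact.

```python
from typing import Tuple

# Supported range for the main Delight build, as stated above.
_MIN_SPARK = (2, 4, 0)
_MAX_SPARK = (3, 1, 1)


def _version_tuple(version: str) -> Tuple[int, ...]:
    """Turn "3.0.1" into (3, 0, 1). Assumes a plain numeric version string."""
    return tuple(int(part) for part in version.split(".")[:3])


def delight_coordinates(spark_version: str, scala_version: str) -> str:
    """Pick the Delight Maven coordinates for a Spark distribution (hypothetical helper).

    scala_version is the Scala version Spark was built with, e.g. "2.12.10".
    Only its binary version ("2.12") matters for the artifact name.
    """
    spark = _version_tuple(spark_version)
    scala_binary = ".".join(scala_version.split(".")[:2])

    if spark[:2] == (2, 3):
        # Spark 2.3.x has a dedicated build, published for Scala 2.11 only.
        if scala_binary != "2.11":
            raise ValueError("the Spark 2.3.x build of Delight targets Scala 2.11")
        return "co.datamechanics:delight_2.11:2.3-latest-SNAPSHOT"

    if not _MIN_SPARK <= spark <= _MAX_SPARK:
        raise ValueError(f"Spark {spark_version} is outside the supported range 2.4.0 to 3.1.1")
    if scala_binary not in ("2.11", "2.12"):
        raise ValueError(f"no Delight artifact for Scala {scala_binary}")
    return f"co.datamechanics:delight_{scala_binary}:latest-SNAPSHOT"
```

For example, `delight_coordinates("3.0.1", "2.12.10")` yields the `delight_2.12` artifact. This holds whether your application code is in Scala or Python, because the artifact must match the Scala version of Spark itself.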

If you have a question, please read our FAQ first. You can also contact us through the chat window once you're logged in to your dashboard. To report a bug or submit a feature request, please use GitHub issues. Thank you!

I installed Delight and saw the following error in the driver logs. How do I solve it?

    Exception in thread "main" java.lang.NoSuchMethodError: org.apache.spark.internal.Logging.$init$(Lorg/apache/spark/internal/Logging;)V
        at co.datamechanics.delight.DelightListener.<init>(DelightListener.scala:11)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

This most likely means that the Scala version of Delight does not match the Scala version of your Spark distribution.

If you specified `co.datamechanics:delight_2.11:latest-SNAPSHOT`, change it to `co.datamechanics:delight_2.12:latest-SNAPSHOT`, and vice versa!
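To diagnose the mismatch programmatically, the following Python sketch reads the Scala binary version from the output of `spark-submit --version` and rewrites a Delight coordinate to match it. The banner format it parses (a line like `Using Scala version 2.12.10, ...`) is an assumption about your Spark distribution's output. The helper names `scala_binary_version` and `fix_delight_coordinates` are hypothetical.

```python
import re
from typing import Optional

# Assumed banner format: `spark-submit --version` prints a line such as
# "Using Scala version 2.12.10, OpenJDK 64-Bit Server VM, 1.8.0_275".
_SCALA_LINE = re.compile(r"Using Scala version (\d+\.\d+)")
_DELIGHT_COORD = re.compile(r"^co\.datamechanics:delight_(\d+\.\d+):(\S+)$")


def scala_binary_version(spark_submit_version_output: str) -> Optional[str]:
    """Extract the Scala binary version (e.g. "2.12") from the banner, if present."""
    match = _SCALA_LINE.search(spark_submit_version_output)
    return match.group(1) if match else None


def fix_delight_coordinates(coordinates: str, spark_scala_binary: str) -> str:
    """Return coordinates whose Scala suffix matches the Spark distribution.

    A mismatch between the two is the likely cause of the
    NoSuchMethodError on org.apache.spark.internal.Logging.$init$ shown above.
    """
    match = _DELIGHT_COORD.match(coordinates)
    if match is None:
        raise ValueError(f"not a Delight coordinate: {coordinates!r}")
    configured_scala, version = match.groups()
    if configured_scala == spark_scala_binary:
        return coordinates  # already consistent, nothing to change
    return f"co.datamechanics:delight_{spark_scala_binary}:{version}"
```

The version suffix (`latest-SNAPSHOT` or `2.3-latest-SNAPSHOT`) is kept as-is; only the Scala part of the artifact name is swapped.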

| Config | Explanation | Default value |
|---|---|---|
| `spark.delight.accessToken.secret` | An access token to authenticate yourself with Data Mechanics Delight. If the access token is missing, the listener will not stream events. | (none) |
| `spark.delight.appNameOverride` | The name of the app that will appear in Data Mechanics Delight. This is only useful if your platform does not allow you to set `spark.app.name`. | `spark.app.name` |
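As an illustration of how these settings might fit into a `spark-submit` invocation, here is a minimal Python sketch that builds the `--conf` flags. It assumes that the listener is registered through Spark's standard `spark.extraListeners` setting, using the class name `co.datamechanics.delight.DelightListener` as it appears in the stack trace above, and that the package is fetched via Spark's `spark.jars.packages` setting. The helper `delight_submit_args` and its `repository` parameter are hypothetical. SNAPSHOT artifacts may also require a repository declared through `spark.jars.repositories`; its URL is not given here, so take it from the installation instructions for your platform.

```python
import shlex
from typing import List, Optional

# Listener class name as it appears in the stack trace above.
DELIGHT_LISTENER = "co.datamechanics.delight.DelightListener"


def delight_submit_args(
    coordinates: str,
    access_token: str,
    app_name_override: Optional[str] = None,
    repository: Optional[str] = None,
) -> List[str]:
    """Build hypothetical `spark-submit --conf` flags that enable Delight."""
    confs = {
        # Standard Spark settings: fetch the package and register the listener.
        "spark.jars.packages": coordinates,
        "spark.extraListeners": DELIGHT_LISTENER,
        # Delight setting from the table above.
        "spark.delight.accessToken.secret": access_token,
    }
    if repository is not None:
        # SNAPSHOT artifacts may live outside Maven Central.
        confs["spark.jars.repositories"] = repository
    if app_name_override is not None:
        confs["spark.delight.appNameOverride"] = app_name_override

    args: List[str] = []
    for key, value in confs.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    flags = delight_submit_args(
        "co.datamechanics:delight_2.12:latest-SNAPSHOT",
        access_token="<your-access-token>",
    )
    # Print a shell-safe command line (shlex.join requires Python 3.8+).
    print(shlex.join(["spark-submit", *flags, "my_app.py"]))
```

`spark.extraListeners` accepts a comma-separated list of class names. If your application already registers other listeners, append the Delight class to that list rather than replacing it.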

For completeness, we've also listed more technical configurations in this section. You should not need to change their values; if you do, drop us a line, as we'd be interested to know more!

| Config | Explanation | Default value |
|---|---|---|
| `spark.delight.collector.url` | URL of the Data Mechanics Delight collector API | `https://api.delight.datamechanics.co/collector/` |
| `spark.delight.buffer.maxNumEvents` | The number of buffered Spark events that triggers a call to the Data Mechanics Collector API. Special events, such as job ends, also trigger a call. | 1000 |
| `spark.delight.payload.maxNumEvents` | The maximum number of Spark events sent in a single call to the Data Mechanics Collector API. | 10000 |
| `spark.delight.heartbeatIntervalSecs` | (Internal config) The interval at which the listener sends heartbeat requests to the API. Heartbeats let us detect when an app finished prematurely and start processing it as soon as possible. | 10s |
| `spark.delight.pollingIntervalSecs` | (Internal config) The interval at which the object responsible for calling the API checks for new payloads to send. | 0.5s |
| `spark.delight.maxPollingIntervalSecs` | (Internal config) Upon a connection error, the polling interval increases exponentially up to this value. It returns to its initial value once a call to the API succeeds. | 60s |
| `spark.delight.maxWaitOnEndSecs` | (Internal config) How long the Spark application waits for remaining payloads to be sent after the `SparkListenerApplicationEnd` event. Not applicable on Databricks. | 10s |
| `spark.delight.waitForPendingPayloadsSleepIntervalSecs` | (Internal config) After the `SparkListenerApplicationEnd` event is received, the interval at which the object responsible for calling the API checks whether payloads remain to be sent. Not applicable on Databricks. | 1s |
| `spark.delight.logDuration` | (Debugging config) Whether to log the duration of the operations performed by the Spark listener. | false |
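To show how the buffering and polling settings above interact, here is a small Python model of their documented semantics. It is a sketch under stated assumptions, not the listener's actual implementation (the listener runs on the JVM). Three behaviors are assumed: events beyond `payload.maxNumEvents` are split into several payloads, the backoff doubles the interval on each connection error, and the interval resets to its initial value after a successful call. The function names are hypothetical.

```python
from typing import List, Sequence

# Defaults taken from the table above.
BUFFER_MAX_NUM_EVENTS = 1000      # spark.delight.buffer.maxNumEvents
PAYLOAD_MAX_NUM_EVENTS = 10000    # spark.delight.payload.maxNumEvents
POLLING_INTERVAL_SECS = 0.5       # spark.delight.pollingIntervalSecs
MAX_POLLING_INTERVAL_SECS = 60.0  # spark.delight.maxPollingIntervalSecs


def payloads_to_send(
    pending: Sequence[str],
    special_event_seen: bool,
    buffer_max: int = BUFFER_MAX_NUM_EVENTS,
    payload_max: int = PAYLOAD_MAX_NUM_EVENTS,
) -> List[List[str]]:
    """Return the payloads due for sending, or [] if no API call is due yet.

    A call is due once the buffer holds buffer_max events, or earlier when a
    special event (e.g. a job end) arrives. Each payload holds at most
    payload_max events (splitting into several payloads is an assumption).
    """
    if not pending:
        return []
    if len(pending) < buffer_max and not special_event_seen:
        return []
    return [list(pending[i:i + payload_max]) for i in range(0, len(pending), payload_max)]


def next_polling_interval(
    current: float,
    call_failed: bool,
    initial: float = POLLING_INTERVAL_SECS,
    maximum: float = MAX_POLLING_INTERVAL_SECS,
) -> float:
    """Exponential backoff: double on a connection error (assumed factor 2),
    cap at maximum, and reset to initial after a successful call."""
    if not call_failed:
        return initial
    return min(current * 2, maximum)
```

With the defaults, repeated connection errors take the polling interval from 0.5s through 1s, 2s, 4s, 8s, 16s and 32s, then hold it at the 60s cap until a call goes through.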