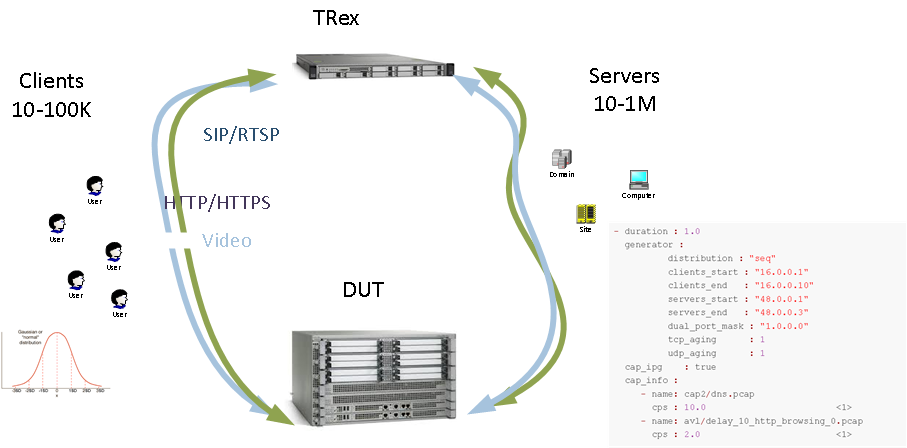

The following simple example shows how TRex works. It uses the TRex simulator, which can be run on any Cisco Linux machine, including the TRex machine itself. TRex simulates clients and servers and generates traffic based on the pcap files provided.

The following is an example YAML-format traffic configuration file (cap2/dns_test.yaml), with explanatory notes.

[bash]>cat cap2/dns_test.yaml

- duration : 10.0

generator :

distribution : "seq"

clients_start : "16.0.0.1" (1)

clients_end : "16.0.0.255"

servers_start : "48.0.0.1" (2)

servers_end : "48.0.0.255"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 1

udp_aging : 1

cap_info :

- name: cap2/dns.pcap (3)

cps : 1.0 (4)

ipg : 10000 (5)

rtt : 10000 (6)

w : 1

- (1) Range of clients (IPv4 format).
- (2) Range of servers (IPv4 format).
- (3) pcap file that contains the DNS capture used as a template.
- (4) Number of connections per second to generate. In the example, 1.0 means 1 connection per second.
- (5) Inter-packet gap (microseconds). 10,000 = 10 msec.
- (6) Should be the same as ipg.

The DNS template file includes:

- One flow
- Two packets
- First packet: from the initiator (client → server)
- Second packet: response (server → client)

TRex replaces the client_ip, client_port, and server_ip. The server_port remains the same.

[bash]>./bp-sim-64-debug -f cap2/dns.yaml -o my.erf -v 3

-- loading cap file cap2/dns.pcap

id,name , tps, cps,f-pkts,f-bytes, duration, Mb/sec, MB/sec, #(1)

00, cap2/dns.pcap ,1.00,1.00, 2 , 170 , 0.02 , 0.00 , 0.00 ,

00, sum ,1.00,1.00, 2 , 170 , 0.00 , 0.00 , 0.00 ,

Generating erf file ...

pkt_id,time,fid,pkt_info,pkt,len,type,is_init,is_last,type,thread_id,src_ip,dest_ip,src_port #(2)

1 ,0.010000,1,0x9055598,1,77,0,1,0,0,0,10000001,30000001,1024

2 ,0.020000,1,0x9054760,2,93,0,0,1,0,0,10000001,30000001,1024

3 ,2.010000,2,0x9055598,1,77,0,1,0,0,0,10000002,30000002,1024

4 ,2.020000,2,0x9054760,2,93,0,0,1,0,0,10000002,30000002,1024

5 ,3.010000,3,0x9055598,1,77,0,1,0,0,0,10000003,30000003,1024

6 ,3.020000,3,0x9054760,2,93,0,0,1,0,0,10000003,30000003,1024

7 ,4.010000,4,0x9055598,1,77,0,1,0,0,0,10000004,30000004,1024

8 ,4.020000,4,0x9054760,2,93,0,0,1,0,0,10000004,30000004,1024

9 ,5.010000,5,0x9055598,1,77,0,1,0,0,0,10000005,30000005,1024

10 ,5.020000,5,0x9054760,2,93,0,0,1,0,0,10000005,30000005,1024

11 ,6.010000,6,0x9055598,1,77,0,1,0,0,0,10000006,30000006,1024

12 ,6.020000,6,0x9054760,2,93,0,0,1,0,0,10000006,30000006,1024

13 ,7.010000,7,0x9055598,1,77,0,1,0,0,0,10000007,30000007,1024

14 ,7.020000,7,0x9054760,2,93,0,0,1,0,0,10000007,30000007,1024

15 ,8.010000,8,0x9055598,1,77,0,1,0,0,0,10000008,30000008,1024

16 ,8.020000,8,0x9054760,2,93,0,0,1,0,0,10000008,30000008,1024

17 ,9.010000,9,0x9055598,1,77,0,1,0,0,0,10000009,30000009,1024

18 ,9.020000,9,0x9054760,2,93,0,0,1,0,0,10000009,30000009,1024

19 ,10.010000,a,0x9055598,1,77,0,1,0,0,0,1000000a,3000000a,1024

20 ,10.020000,a,0x9054760,2,93,0,0,1,0,0,1000000a,3000000a,1024

file stats

=================

m_total_bytes : 1.66 Kbytes

m_total_pkt : 20.00 pkt

m_total_open_flows : 10.00 flows

m_total_pkt : 20

m_total_open_flows : 10

m_total_close_flows : 10

m_total_bytes : 1700

- (1) Global statistics on the given templates. cps is connections per second; tps is templates per second. The two can differ when a plugin is used and one template includes more than one flow, for example the RTP flow in the SFR profile (avl/delay_10_rtp_160k_full.pcap).
- (2) Generator output.
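The file statistics above can be cross-checked with simple arithmetic. The following is a minimal sketch, not part of TRex: the function name `expected_totals` is hypothetical, and the packet sizes (77 and 93 bytes) are read from the `len` column of the generator output.

```python
def expected_totals(flows, packet_sizes):
    """Return (total_packets, total_bytes) for `flows` copies of one template.

    packet_sizes lists the length in bytes of each packet in the template.
    """
    packets_per_flow = len(packet_sizes)
    bytes_per_flow = sum(packet_sizes)
    return flows * packets_per_flow, flows * bytes_per_flow


# 10 DNS flows, each with a 77-byte request and a 93-byte response.
total_pkt, total_bytes = expected_totals(flows=10, packet_sizes=[77, 93])
print(total_pkt, total_bytes)
```

With these inputs the result agrees with the m_total_pkt (20) and m_total_bytes (1700) values reported by the simulator.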

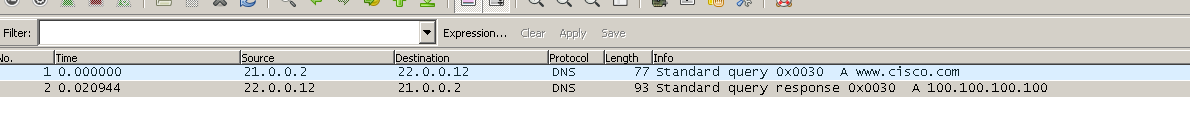

To view the output file in Wireshark:

[bash]>wireshark my.erf

As the output file shows:

- TRex generates a new flow every 1 sec.
- Client IP values are taken from the client IP pool.
- Server IP values are taken from the server IP pool.
- IPG (inter-packet gap) values are taken from the configuration file (10 msec).

Note: In basic usage, TRex does not wait for an initiator packet to be received. The response packet is triggered based only on a timeout (the IPG in this example).

Converting the simulator text results in a table similar to the following:

| pkt | time sec | fid | flow-pkt-id | client_ip | client_port | server_ip | direction |
|---|---|---|---|---|---|---|---|
| 1 | 0.010000 | 1 | 1 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 2 | 0.020000 | 1 | 2 | 16.0.0.1 | 1024 | 48.0.0.1 | ← |
| 3 | 2.010000 | 2 | 1 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 4 | 2.020000 | 2 | 2 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 5 | 3.010000 | 3 | 1 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 6 | 3.020000 | 3 | 2 | 16.0.0.3 | 1024 | 48.0.0.3 | ← |
| 7 | 4.010000 | 4 | 1 | 16.0.0.4 | 1024 | 48.0.0.4 | → |
| 8 | 4.020000 | 4 | 2 | 16.0.0.4 | 1024 | 48.0.0.4 | ← |
| 9 | 5.010000 | 5 | 1 | 16.0.0.5 | 1024 | 48.0.0.5 | → |
| 10 | 5.020000 | 5 | 2 | 16.0.0.5 | 1024 | 48.0.0.5 | ← |
| 11 | 6.010000 | 6 | 1 | 16.0.0.6 | 1024 | 48.0.0.6 | → |
| 12 | 6.020000 | 6 | 2 | 16.0.0.6 | 1024 | 48.0.0.6 | ← |
| 13 | 7.010000 | 7 | 1 | 16.0.0.7 | 1024 | 48.0.0.7 | → |
| 14 | 7.020000 | 7 | 2 | 16.0.0.7 | 1024 | 48.0.0.7 | ← |
| 15 | 8.010000 | 8 | 1 | 16.0.0.8 | 1024 | 48.0.0.8 | → |
| 16 | 8.020000 | 8 | 2 | 16.0.0.8 | 1024 | 48.0.0.8 | ← |
| 17 | 9.010000 | 9 | 1 | 16.0.0.9 | 1024 | 48.0.0.9 | → |
| 18 | 9.020000 | 9 | 2 | 16.0.0.9 | 1024 | 48.0.0.9 | ← |
| 19 | 10.010000 | a | 1 | 16.0.0.10 | 1024 | 48.0.0.10 | → |
| 20 | 10.020000 | a | 2 | 16.0.0.10 | 1024 | 48.0.0.10 | ← |

where:

- fid: Flow ID. Each flow has a different ID.
- flow-pkt-id: Packet ID within the flow. Numbering begins with 1.
- client_ip: Client IP address.
- client_port: Client IP port.
- server_ip: Server IP address.
- direction: "→" is client-to-server; "←" is server-to-client.
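The conversion from a raw simulator line to a table row can be sketched as follows. This is an illustrative helper, not part of TRex: the name `parse_sim_line` is hypothetical, the field positions follow the header line printed by the simulator (pkt_id, time, fid, pkt_info, pkt, len, ..., src_ip, dest_ip, src_port), and it assumes that the IP fields are hexadecimal and the port field is decimal, as the sample output suggests.

```python
from ipaddress import IPv4Address


def parse_sim_line(line):
    """Convert one comma-separated simulator output line into table fields."""
    fields = [f.strip() for f in line.split(",")]
    return {
        "pkt": int(fields[0]),
        "time_sec": float(fields[1]),
        "fid": fields[2],                 # kept as printed (e.g. "a")
        "flow_pkt_id": int(fields[4]),
        # Assumption: addresses are printed as hex, e.g. 10000001 -> 16.0.0.1
        "client_ip": str(IPv4Address(int(fields[11], 16))),
        "server_ip": str(IPv4Address(int(fields[12], 16))),
        "client_port": int(fields[13]),
    }


row = parse_sim_line("1 ,0.010000,1,0x9055598,1,77,0,1,0,0,0,10000001,30000001,1024")
print(row["client_ip"], row["client_port"], row["server_ip"])
```

The direction column is left out on purpose, because the simulator line does not state it explicitly.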

The following example increases the CPS and reduces the duration.

[bash]>more cap2/dns_test.yaml

- duration : 1.0 (1)

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.0.255"

servers_start : "48.0.0.1"

servers_end : "48.0.0.255"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 1

udp_aging : 1

mac : [0x00,0x00,0x00,0x01,0x00,0x00]

cap_info :

- name: cap2/dns.pcap

cps : 10.0 (2)

ipg : 50000 (3)

rtt : 50000

w : 1

- (1) Duration is 1 second.
- (2) CPS is 10.0.
- (3) IPG is 50 msec.

Running this produces the following output:

[bash]>./bp-sim-64-debug -f cap2/dns_test.yaml -o my.erf -v 3

| pkt | time sec | template | fid | flow-pkt-id | client_ip | client_port | server_ip | desc |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.010000 | 0 | 1 | 1 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 2 | 0.060000 | 0 | 1 | 2 | 16.0.0.1 | 1024 | 48.0.0.1 | ← |
| 3 | 0.210000 | 0 | 2 | 1 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 4 | 0.260000 | 0 | 2 | 2 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 5 | 0.310000 | 0 | 3 | 1 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 6 | 0.360000 | 0 | 3 | 2 | 16.0.0.3 | 1024 | 48.0.0.3 | ← |
| 7 | 0.410000 | 0 | 4 | 1 | 16.0.0.4 | 1024 | 48.0.0.4 | → |
| 8 | 0.460000 | 0 | 4 | 2 | 16.0.0.4 | 1024 | 48.0.0.4 | ← |
| 9 | 0.510000 | 0 | 5 | 1 | 16.0.0.5 | 1024 | 48.0.0.5 | → |
| 10 | 0.560000 | 0 | 5 | 2 | 16.0.0.5 | 1024 | 48.0.0.5 | ← |
| 11 | 0.610000 | 0 | 6 | 1 | 16.0.0.6 | 1024 | 48.0.0.6 | → |
| 12 | 0.660000 | 0 | 6 | 2 | 16.0.0.6 | 1024 | 48.0.0.6 | ← |
| 13 | 0.710000 | 0 | 7 | 1 | 16.0.0.7 | 1024 | 48.0.0.7 | → |
| 14 | 0.760000 | 0 | 7 | 2 | 16.0.0.7 | 1024 | 48.0.0.7 | ← |
| 15 | 0.810000 | 0 | 8 | 1 | 16.0.0.8 | 1024 | 48.0.0.8 | → |
| 16 | 0.860000 | 0 | 8 | 2 | 16.0.0.8 | 1024 | 48.0.0.8 | ← |
| 17 | 0.910000 | 0 | 9 | 1 | 16.0.0.9 | 1024 | 48.0.0.9 | → |
| 18 | 0.960000 | 0 | 9 | 2 | 16.0.0.9 | 1024 | 48.0.0.9 | ← |
| 19 | 1.010000 | 0 | a | 1 | 16.0.0.10 | 1024 | 48.0.0.10 | → |
| 20 | 1.060000 | 0 | a | 2 | 16.0.0.10 | 1024 | 48.0.0.10 | ← |

The output can be displayed as a chart, with:

- x axis: time (seconds)
- y axis: flow ID

The output indicates that there are 10 flows in 1 second, as expected, and the IPG is 50 msec.

Note: There is a gap in the generation of the second flow. This is an expected scheduler artifact and has no effect.

In the following example, the IPG is taken from the pcap file itself.

- duration : 1.0

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.0.255"

servers_start : "48.0.0.1"

servers_end : "48.0.0.255"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 0

udp_aging : 0

mac : [0x00,0x00,0x00,0x01,0x00,0x00]

cap_ipg : true (1)

#cap_ipg_min : 30

#cap_override_ipg : 200

cap_info :

- name: cap2/dns.pcap

cps : 10.0

ipg : 10000

rtt : 10000

w : 1

- (1) IPG is taken from pcap.

| pkt | time sec | template | fid | flow-pkt-id | client_ip | client_port | server_ip | desc |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.010000 | 0 | 1 | 1 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 2 | 0.030944 | 0 | 1 | 2 | 16.0.0.1 | 1024 | 48.0.0.1 | ← |
| 3 | 0.210000 | 0 | 2 | 1 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 4 | 0.230944 | 0 | 2 | 2 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 5 | 0.310000 | 0 | 3 | 1 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 6 | 0.330944 | 0 | 3 | 2 | 16.0.0.3 | 1024 | 48.0.0.3 | ← |
| 7 | 0.410000 | 0 | 4 | 1 | 16.0.0.4 | 1024 | 48.0.0.4 | → |
| 8 | 0.430944 | 0 | 4 | 2 | 16.0.0.4 | 1024 | 48.0.0.4 | ← |
| 9 | 0.510000 | 0 | 5 | 1 | 16.0.0.5 | 1024 | 48.0.0.5 | → |
| 10 | 0.530944 | 0 | 5 | 2 | 16.0.0.5 | 1024 | 48.0.0.5 | ← |
| 11 | 0.610000 | 0 | 6 | 1 | 16.0.0.6 | 1024 | 48.0.0.6 | → |
| 12 | 0.630944 | 0 | 6 | 2 | 16.0.0.6 | 1024 | 48.0.0.6 | ← |
| 13 | 0.710000 | 0 | 7 | 1 | 16.0.0.7 | 1024 | 48.0.0.7 | → |
| 14 | 0.730944 | 0 | 7 | 2 | 16.0.0.7 | 1024 | 48.0.0.7 | ← |
| 15 | 0.810000 | 0 | 8 | 1 | 16.0.0.8 | 1024 | 48.0.0.8 | → |
| 16 | 0.830944 | 0 | 8 | 2 | 16.0.0.8 | 1024 | 48.0.0.8 | ← |
| 17 | 0.910000 | 0 | 9 | 1 | 16.0.0.9 | 1024 | 48.0.0.9 | → |
| 18 | 0.930944 | 0 | 9 | 2 | 16.0.0.9 | 1024 | 48.0.0.9 | ← |
| 19 | 1.010000 | 0 | a | 1 | 16.0.0.10 | 1024 | 48.0.0.10 | → |
| 20 | 1.030944 | 0 | a | 2 | 16.0.0.10 | 1024 | 48.0.0.10 | ← |

In this example, the IPG was taken from the pcap file and is close to 20 msec (for example, 0.030944 - 0.010000 sec), rather than the value set by ipg in the configuration file.

#cap_ipg_min : 30 (1)

#cap_override_ipg : 200 (2)

- (1) Sets the minimum IPG (microseconds). Any IPG below this value is overridden: if (pkt_ipg<cap_ipg_min) { pkt_ipg = cap_override_ipg }
- (2) Value to use as the override (microseconds).
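The override rule described above can be written as a small function. This is a minimal sketch of the stated condition only; the function name `effective_ipg` is hypothetical, and the default values are the ones shown (commented out) in the configuration file.

```python
def effective_ipg(pkt_ipg_usec, cap_ipg_min=30, cap_override_ipg=200):
    """Apply the cap_ipg_min / cap_override_ipg rule to one packet's IPG.

    All values are in microseconds. An IPG below cap_ipg_min is replaced
    by cap_override_ipg; any other IPG is returned unchanged.
    """
    if pkt_ipg_usec < cap_ipg_min:
        return cap_override_ipg
    return pkt_ipg_usec


print(effective_ipg(10))      # below the minimum, so it is overridden
print(effective_ipg(20944))   # the DNS capture IPG is left unchanged
```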

In this example the server IP is taken from the template.

- duration : 10.0

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.1.255"

servers_start : "48.0.0.1"

servers_end : "48.0.0.255"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 1

udp_aging : 1

mac : [0x00,0x00,0x00,0x01,0x00,0x00]

cap_ipg : true

#cap_ipg_min : 30

#cap_override_ipg : 200

cap_info :

- name: cap2/dns.pcap

cps : 1.0

ipg : 10000

rtt : 10000

server_addr : "48.0.0.7" (1)

one_app_server : true (2)

w : 1

- (1) All templates will use the same server.
- (2) Must be set to "true".

| pkt | time sec | fid | flow-pkt-id | client_ip | client_port | server_ip | desc |
|---|---|---|---|---|---|---|---|
| 1 | 0.010000 | 1 | 1 | 16.0.0.1 | 1024 | 48.0.0.7 | → |
| 2 | 0.030944 | 1 | 2 | 16.0.0.1 | 1024 | 48.0.0.7 | ← |
| 3 | 2.010000 | 2 | 1 | 16.0.0.2 | 1024 | 48.0.0.7 | → |
| 4 | 2.030944 | 2 | 2 | 16.0.0.2 | 1024 | 48.0.0.7 | ← |
| 5 | 3.010000 | 3 | 1 | 16.0.0.3 | 1024 | 48.0.0.7 | → |
| 6 | 3.030944 | 3 | 2 | 16.0.0.3 | 1024 | 48.0.0.7 | ← |
| 7 | 4.010000 | 4 | 1 | 16.0.0.4 | 1024 | 48.0.0.7 | → |
| 8 | 4.030944 | 4 | 2 | 16.0.0.4 | 1024 | 48.0.0.7 | ← |
| 9 | 5.010000 | 5 | 1 | 16.0.0.5 | 1024 | 48.0.0.7 | → |
| 10 | 5.030944 | 5 | 2 | 16.0.0.5 | 1024 | 48.0.0.7 | ← |
| 11 | 6.010000 | 6 | 1 | 16.0.0.6 | 1024 | 48.0.0.7 | → |
| 12 | 6.030944 | 6 | 2 | 16.0.0.6 | 1024 | 48.0.0.7 | ← |
| 13 | 7.010000 | 7 | 1 | 16.0.0.7 | 1024 | 48.0.0.7 | → |
| 14 | 7.030944 | 7 | 2 | 16.0.0.7 | 1024 | 48.0.0.7 | ← |
| 15 | 8.010000 | 8 | 1 | 16.0.0.8 | 1024 | 48.0.0.7 | → |
| 16 | 8.030944 | 8 | 2 | 16.0.0.8 | 1024 | 48.0.0.7 | ← |
| 17 | 9.010000 | 9 | 1 | 16.0.0.9 | 1024 | 48.0.0.7 | → |
| 18 | 9.030944 | 9 | 2 | 16.0.0.9 | 1024 | 48.0.0.7 | ← |
| 19 | 10.010000 | a | 1 | 16.0.0.10 | 1024 | 48.0.0.7 | → |
| 20 | 10.030944 | a | 2 | 16.0.0.10 | 1024 | 48.0.0.7 | ← |

- duration : 10.0

generator :

distribution : "seq"

clients_start : "16.0.0.1" (1)

clients_end : "16.0.0.1"

servers_start : "48.0.0.1"

servers_end : "48.0.0.3"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 1

udp_aging : 1

mac : [0x00,0x00,0x00,0x01,0x00,0x00]

cap_ipg : true

#cap_ipg_min : 30

#cap_override_ipg : 200

cap_info :

- name: cap2/dns.pcap

cps : 1.0

ipg : 10000

rtt : 10000

w : 1

- (1) Only one client.

| pkt | time sec | fid | flow-pkt-id | client_ip | client_port | server_ip | desc |
|---|---|---|---|---|---|---|---|
| 1 | 0.010000 | 1 | 1 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 2 | 0.030944 | 1 | 2 | 16.0.0.1 | 1024 | 48.0.0.1 | ← |
| 3 | 2.010000 | 2 | 1 | 16.0.0.1 | 1025 | 48.0.0.2 | → |
| 4 | 2.030944 | 2 | 2 | 16.0.0.1 | 1025 | 48.0.0.2 | ← |
| 5 | 3.010000 | 3 | 1 | 16.0.0.1 | 1026 | 48.0.0.3 | → |
| 6 | 3.030944 | 3 | 2 | 16.0.0.1 | 1026 | 48.0.0.3 | ← |
| 7 | 4.010000 | 4 | 1 | 16.0.0.1 | 1027 | 48.0.0.4 | → |
| 8 | 4.030944 | 4 | 2 | 16.0.0.1 | 1027 | 48.0.0.4 | ← |
| 9 | 5.010000 | 5 | 1 | 16.0.0.1 | 1028 | 48.0.0.5 | → |
| 10 | 5.030944 | 5 | 2 | 16.0.0.1 | 1028 | 48.0.0.5 | ← |
| 11 | 6.010000 | 6 | 1 | 16.0.0.1 | 1029 | 48.0.0.6 | → |
| 12 | 6.030944 | 6 | 2 | 16.0.0.1 | 1029 | 48.0.0.6 | ← |
| 13 | 7.010000 | 7 | 1 | 16.0.0.1 | 1030 | 48.0.0.7 | → |
| 14 | 7.030944 | 7 | 2 | 16.0.0.1 | 1030 | 48.0.0.7 | ← |
| 15 | 8.010000 | 8 | 1 | 16.0.0.1 | 1031 | 48.0.0.8 | → |
| 16 | 8.030944 | 8 | 2 | 16.0.0.1 | 1031 | 48.0.0.8 | ← |
| 17 | 9.010000 | 9 | 1 | 16.0.0.1 | 1032 | 48.0.0.9 | → |
| 18 | 9.030944 | 9 | 2 | 16.0.0.1 | 1032 | 48.0.0.9 | ← |
| 19 | 10.010000 | a | 1 | 16.0.0.1 | 1033 | 48.0.0.10 | → |
| 20 | 10.030944 | a | 2 | 16.0.0.1 | 1033 | 48.0.0.10 | ← |

In this case there is only one client, so only the ports distinguish the flows. Make sure that enough free sockets are available when running TRex at high rates.

Active-flows : 0 Clients : 1 (1) Socket-util : 0.0000 % (2)

Open-flows : 1 Servers : 254 Socket : 1 Socket/Clients : 0.0

drop-rate : 0.00 bps

- (1) Number of clients.
- (2) Socket utilization. It should be lower than 20%; if it is higher, increase the number of clients.

w is a tunable to the IP clients/servers generator. w=1 is the default behavior.

Setting w=2 configures a burst of two allocations from the same client. See the following example.

- duration : 10.0

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.0.10"

servers_start : "48.0.0.1"

servers_end : "48.0.0.3"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 1

udp_aging : 1

mac : [0x00,0x00,0x00,0x01,0x00,0x00]

cap_ipg : true

#cap_ipg_min : 30

#cap_override_ipg : 200

cap_info :

- name: cap2/dns.pcap

cps : 1.0

ipg : 10000

rtt : 10000

w : 2

| pkt | time sec | fid | flow-pkt-id | client_ip | client_port | server_ip | desc |
|---|---|---|---|---|---|---|---|
| 1 | 0.010000 | 1 | 1 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 2 | 0.030944 | 1 | 2 | 16.0.0.1 | 1024 | 48.0.0.1 | ← |
| 3 | 2.010000 | 2 | 1 | 16.0.0.1 | 1025 | 48.0.0.1 | → |
| 4 | 2.030944 | 2 | 2 | 16.0.0.1 | 1025 | 48.0.0.1 | ← |
| 5 | 3.010000 | 3 | 1 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 6 | 3.030944 | 3 | 2 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 7 | 4.010000 | 4 | 1 | 16.0.0.2 | 1025 | 48.0.0.2 | → |
| 8 | 4.030944 | 4 | 2 | 16.0.0.2 | 1025 | 48.0.0.2 | ← |
| 9 | 5.010000 | 5 | 1 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 10 | 5.030944 | 5 | 2 | 16.0.0.3 | 1024 | 48.0.0.3 | ← |
| 11 | 6.010000 | 6 | 1 | 16.0.0.3 | 1025 | 48.0.0.3 | → |
| 12 | 6.030944 | 6 | 2 | 16.0.0.3 | 1025 | 48.0.0.3 | ← |
| 13 | 7.010000 | 7 | 1 | 16.0.0.4 | 1024 | 48.0.0.4 | → |
| 14 | 7.030944 | 7 | 2 | 16.0.0.4 | 1024 | 48.0.0.4 | ← |
| 15 | 8.010000 | 8 | 1 | 16.0.0.4 | 1025 | 48.0.0.4 | → |
| 16 | 8.030944 | 8 | 2 | 16.0.0.4 | 1025 | 48.0.0.4 | ← |
| 17 | 9.010000 | 9 | 1 | 16.0.0.5 | 1024 | 48.0.0.5 | → |
| 18 | 9.030944 | 9 | 2 | 16.0.0.5 | 1024 | 48.0.0.5 | ← |
| 19 | 10.010000 | a | 1 | 16.0.0.5 | 1025 | 48.0.0.5 | → |
| 20 | 10.030944 | a | 2 | 16.0.0.5 | 1025 | 48.0.0.5 | ← |
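The client side of the w=2 allocation pattern in the table can be sketched as follows. This is an illustrative model, not the TRex implementation: the name `allocate_clients` is hypothetical, the first port (1024) is read from the table, and port exhaustion, socket aging, and server selection are not modeled.

```python
from ipaddress import IPv4Address
from itertools import islice


def allocate_clients(clients_start, clients_end, w=1, first_port=1024):
    """Yield (client_ip, client_port) pairs in sequential order.

    Each client is used for a burst of `w` consecutive allocations before
    moving to the next client; the pool wraps around when exhausted.
    Assumption: each client hands out ports sequentially from first_port.
    """
    first = int(IPv4Address(clients_start))
    last = int(IPv4Address(clients_end))
    next_port = {}  # per-client counter of the next free port
    while True:
        for ip_int in range(first, last + 1):
            ip = str(IPv4Address(ip_int))
            for _ in range(w):
                port = next_port.get(ip, first_port)
                next_port[ip] = port + 1
                yield ip, port


# First four allocations with w=2: two from 16.0.0.1, then two from 16.0.0.2.
for ip, port in islice(allocate_clients("16.0.0.1", "16.0.0.10", w=2), 4):
    print(ip, port)
```

With a single-client pool and w=1, the same sketch yields 16.0.0.1 with ports 1024, 1025, 1026, and so on, which is the pattern seen in the one-client example.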

The following example combines elements of HTTP and DNS templates:

- duration : 1.0

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.0.10"

servers_start : "48.0.0.1"

servers_end : "48.0.0.3"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 1

udp_aging : 1

mac : [0x00,0x00,0x00,0x01,0x00,0x00]

cap_ipg : true

cap_info :

- name: cap2/dns.pcap

cps : 10.0 (1)

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_http_browsing_0.pcap

cps : 2.0 (1)

ipg : 10000

rtt : 10000

w : 1

- (1) CPS for each template (10.0 for DNS, 2.0 for HTTP).

This creates the following output:

| pkt | time sec | template | fid | flow-pkt-id | client_ip | client_port | server_ip | desc |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.010000 | 0 | 1 | 1 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 2 | 0.030944 | 0 | 1 | 2 | 16.0.0.1 | 1024 | 48.0.0.1 | ← |
| 3 | 0.093333 | 1 | 2 | 1 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 4 | 0.104362 | 1 | 2 | 2 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 5 | 0.115385 | 1 | 2 | 3 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 6 | 0.115394 | 1 | 2 | 4 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 7 | 0.126471 | 1 | 2 | 5 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 8 | 0.126484 | 1 | 2 | 6 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 9 | 0.137530 | 1 | 2 | 7 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 10 | 0.148609 | 1 | 2 | 8 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 11 | 0.148621 | 1 | 2 | 9 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 12 | 0.148635 | 1 | 2 | 10 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 13 | 0.159663 | 1 | 2 | 11 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 14 | 0.170750 | 1 | 2 | 12 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 15 | 0.170762 | 1 | 2 | 13 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 16 | 0.170774 | 1 | 2 | 14 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 17 | 0.176667 | 0 | 3 | 1 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 18 | 0.181805 | 1 | 2 | 15 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 19 | 0.181815 | 1 | 2 | 16 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 20 | 0.192889 | 1 | 2 | 17 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |
| 21 | 0.192902 | 1 | 2 | 18 | 16.0.0.2 | 1024 | 48.0.0.2 | ← |

- Template_id: 0 is the DNS template; 1 is the HTTP template.

The output above illustrates two HTTP flows and ten DNS flows in 1 second, as expected.
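The expected flow counts follow from multiplying each template's cps by the run duration. The snippet below is a back-of-the-envelope sketch; the function name `expected_flows` is hypothetical, and the result is a nominal count that ignores scheduler effects such as the start-up gap noted earlier.

```python
def expected_flows(templates, duration_sec):
    """Return the nominal number of flows per template: cps * duration."""
    return {name: cps * duration_sec for name, cps in templates.items()}


counts = expected_flows(
    {
        "cap2/dns.pcap": 10.0,                      # cps from the config above
        "avl/delay_10_http_browsing_0.pcap": 2.0,
    },
    duration_sec=1.0,
)
for name, flows in counts.items():
    print(name, flows)
```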

SFR traffic includes a combination of traffic templates. The traffic mix in the example below was defined by SFR France. This SFR traffic profile is used as the traffic profile for our ASR1k/ISR-G2 benchmark. It is also possible to use EMIX instead of IMIX traffic.

The traffic was recorded from a Spirent C100 with a Pagent that introduces a 10 msec delay on both the client and server sides.

- duration : 0.1

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.1.255"

servers_start : "48.0.0.1"

servers_end : "48.0.20.255"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 0

udp_aging : 0

mac : [0x0,0x0,0x0,0x1,0x0,0x00]

cap_ipg : true

cap_info :

- name: avl/delay_10_http_get_0.pcap

cps : 404.52

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_http_post_0.pcap

cps : 404.52

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_https_0.pcap

cps : 130.8745

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_http_browsing_0.pcap

cps : 709.89

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_exchange_0.pcap

cps : 253.81

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_mail_pop_0.pcap

cps : 4.759

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_mail_pop_1.pcap

cps : 4.759

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_mail_pop_2.pcap

cps : 4.759

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_oracle_0.pcap

cps : 79.3178

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_rtp_160k_full.pcap

cps : 2.776

ipg : 10000

rtt : 10000

w : 1

one_app_server : false

plugin_id : 1 (2)

- name: avl/delay_10_rtp_250k_full.pcap

cps : 1.982

ipg : 10000

rtt : 10000

w : 1

one_app_server : false

plugin_id : 1

- name: avl/delay_10_smtp_0.pcap

cps : 7.3369

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_smtp_1.pcap

cps : 7.3369

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_smtp_2.pcap

cps : 7.3369

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_video_call_0.pcap

cps : 11.8976

ipg : 10000

rtt : 10000

w : 1

one_app_server : false

- name: avl/delay_10_sip_video_call_full.pcap

cps : 29.347

ipg : 10000

rtt : 10000

w : 1

plugin_id : 2 (1)

one_app_server : false

- name: avl/delay_10_citrix_0.pcap

cps : 43.6248

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_dns_0.pcap

cps : 1975.015

ipg : 10000

rtt : 10000

w : 1

- (1) Plugin for the SIP protocol, used to replace the IP/port in the control flow based on the data flow.
- (2) Plugin for the RTSP protocol, used to replace the IP/port in the control flow based on the data flow.

TRex commands typically include the following main arguments, but only -f is required.

[bash]>sudo ./t-rex-64 -f <traffic_yaml> -m <multiplier> -d <duration> -l <latency test rate> -c <cores>

Full command line reference can be found here

[bash]>sudo ./t-rex-64 -f cap2/simple_http.yaml -c 4 -m 100 -d 100

[bash]>sudo ./t-rex-64 -f cap2/simple_http.yaml -c 4 -m 100 -d 100 -l 1000

[bash]>sudo ./t-rex-64 -f avl/sfr_delay_10_1g.yaml -c 4 -m 35 -d 100 -p

[bash]>sudo ./t-rex-64 -f avl/sfr_delay_10_1g.yaml -c 4 -m 20 -d 100 -l 1000

[bash]>sudo ./t-rex-64 -f avl/sfr_delay_10_1g_no_bundeling.yaml -c 4 -m 20 -d 100 -l 1000 --ipv6

[bash]>sudo ./t-rex-64 -f cap2/simple_http.yaml -c 4 -m 100 -d 100 -l 1000 --learn-mode 1

[bash]>sudo ./t-rex-64 -f cap2/imix_fast_1g.yaml -c 4 -m 1 -d 100 -l 1000

[bash]>sudo ./t-rex-64 -f cap2/imix_fast_1g_100k.yaml -c 4 -m 1 -d 100 -l 1000

[bash]>sudo ./t-rex-64 -f cap2/imix_64.yaml -c 4 -m 1 -d 100 -l 1000

| name | description |
|---|---|
| cap2/dns.yaml | Simple DNS pcap file |
| cap2/http_simple.yaml | Simple HTTP cap file |
| avl/sfr_delay_10_1g_no_bundeling.yaml | SFR traffic profile captured from Avalanche (Spirent) without bundling support, with RTT=10 msec (a delay machine). It can be used with --ipv6 and --learn-mode. |
| avl/sfr_delay_10_1g.yaml | Head-end SFR traffic profile captured from Avalanche (Spirent) with bundling support, with RTT=10 msec (a delay machine). It is normalized to 1Gb/sec for m=1. |
| avl/sfr_branch_profile_delay_10.yaml | Branch SFR profile captured from Avalanche (Spirent) with bundling support, with RTT=10 msec. It is normalized to 1Gb/sec for m=1. |
| cap2/imix_fast_1g.yaml | IMIX profile with 1600 flows, normalized to 1Gb/sec. |
| cap2/imix_fast_1g_100k_flows.yaml | IMIX profile with 100k flows, normalized to 1Gb/sec. |
| cap2/imix_64.yaml | 64-byte UDP packets profile |

Note: TRex also supports true stateless traffic generation. If you are looking for stateless traffic, see TRex Stateless Support.

With this feature you can "repeat" flows and create stateless, IXIA-like streams.

After injecting the number of flows defined by limit, TRex repeats the same flows. If all templates have a limit, the CPS will drop to zero after some time, because no new flows are created after the first iteration.

IMIX support example:

[bash]>sudo ./t-rex-64 -f cap2/imix_64.yaml -d 1000 -m 40000 -c 4 -p

Warning: The -p option is used here to send the client-side packets from both interfaces. (Normally they are sent from client ports only.) With this option, the port is selected by the client IP. All the packets of a flow are sent from the same interface. This may create an issue with routing, as the client's IP will be sent from the server interface. PBR router configuration solves this issue but cannot be used in all cases. So use this

cap_info :

- name: cap2/udp_64B.pcap

cps : 1000.0

ipg : 10000

rtt : 10000

w : 1

limit : 1000 (1)

- (1) Repeats the flows in a loop, generating 1000 flows of this type. In this example, udp_64B includes only one packet.

The cap file "cap2/udp_64B.pcap" includes only one 64-byte packet. This configuration file creates 1000 flows that are repeated as follows: f1, f2, f3 … f1000, f1, f2 … where PPS == CPS for -m 1. In this case, the rate is 1000 PPS for -m 1. It is possible to mix stateless templates and stateful templates.
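The repetition order and the resulting packet rate can be sketched as follows. Both helpers are hypothetical illustrations, not TRex code. The text only states that PPS equals CPS for -m 1 with a one-packet flow; scaling by the multiplier and by the number of packets per flow is an assumption of this sketch.

```python
from itertools import cycle, islice


def repeated_flow_ids(limit):
    """Yield flow IDs 1..limit over and over: f1, f2, ..., f<limit>, f1, ..."""
    return cycle(range(1, limit + 1))


def packets_per_second(cps, multiplier=1, packets_per_flow=1):
    """Nominal PPS, assuming the rate scales linearly with -m."""
    return cps * multiplier * packets_per_flow


# With limit=3 the flow IDs repeat after the third flow.
print(list(islice(repeated_flow_ids(3), 7)))

# One-packet flows at cps=1000 and -m 1.
print(packets_per_second(1000.0))
```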

cap2/imix_fast_1g.yaml example:

- duration : 3

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.0.255"

servers_start : "48.0.0.1"

servers_end : "48.0.255.255"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 0

udp_aging : 0

mac : [0x0,0x0,0x0,0x1,0x0,0x00]

cap_info :

- name: cap2/udp_64B.pcap

cps : 90615

ipg : 10000

rtt : 10000

w : 1

limit : 199

- name: cap2/udp_576B.pcap

cps : 64725

ipg : 10000

rtt : 10000

w : 1

limit : 199

- name: cap2/udp_1500B.pcap

cps : 12945

ipg : 10000

rtt : 10000

w : 1

limit : 199

- name: cap2/udp_64B.pcap

cps : 90615

ipg : 10000

rtt : 10000

w : 1

limit : 199

- name: cap2/udp_576B.pcap

cps : 64725

ipg : 10000

rtt : 10000

w : 1

limit : 199

- name: cap2/udp_1500B.pcap

cps : 12945

ipg : 10000

rtt : 10000

w : 1

limit : 199

The templates are duplicated here to better utilize DRAM and to get better performance.

cap2/imix_fast_1g_100k_flows.yaml example:

- duration : 3

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.0.255"

servers_start : "48.0.0.1"

servers_end : "48.0.255.255"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 0

udp_aging : 0

mac : [0x0,0x0,0x0,0x1,0x0,0x00]

cap_info :

- name: cap2/udp_64B.pcap

cps : 90615

ipg : 10000

rtt : 10000

w : 1

limit : 16666

- name: cap2/udp_576B.pcap

cps : 64725

ipg : 10000

rtt : 10000

w : 1

limit : 16666

- name: cap2/udp_1500B.pcap

cps : 12945

ipg : 10000

rtt : 10000

w : 1

limit : 16667

- name: cap2/udp_64B.pcap

cps : 90615

ipg : 10000

rtt : 10000

w : 1

limit : 16667

- name: cap2/udp_576B.pcap

cps : 64725

ipg : 10000

rtt : 10000

w : 1

limit : 16667

- name: cap2/udp_1500B.pcap

cps : 12945

ipg : 10000

rtt : 10000

w : 1

limit : 16667

The following simple simulation example includes 3 flows, with CPS=10.

[bash]>more cap2/imix_example.yaml

#

# Simple IMIX test (7x64B, 5x576B, 1x1500B)

#

- duration : 3

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.0.255"

servers_start : "48.0.0.1"

servers_end : "48.0.255.255"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 0

udp_aging : 0

mac : [0x0,0x0,0x0,0x1,0x0,0x00]

cap_info :

- name: cap2/udp_64B.pcap

cps : 10.0

ipg : 10000

rtt : 10000

w : 1

limit : 3 (1)

- (1) Number of flows: 3

[bash]>./bp-sim-64-debug -f cap2/imix_example.yaml -o my.erf -v 3 > a.txt

| pkt | time sec | template | fid | flow-pkt-id | client_ip | client_port | server_ip | desc |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.010000 | 0 | 1 | 1 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 2 | 0.210000 | 0 | 2 | 0 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 3 | 0.310000 | 0 | 3 | 0 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 4 | 0.310000 | 0 | 1 | 0 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 5 | 0.510000 | 0 | 2 | 0 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 6 | 0.610000 | 0 | 3 | 0 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 7 | 0.610000 | 0 | 1 | 0 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 8 | 0.810000 | 0 | 2 | 0 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 9 | 0.910000 | 0 | 1 | 0 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 10 | 0.910000 | 0 | 3 | 0 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 11 | 1.110000 | 0 | 2 | 0 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 12 | 1.210000 | 0 | 3 | 0 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 13 | 1.210000 | 0 | 1 | 0 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 14 | 1.410000 | 0 | 2 | 0 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 15 | 1.510000 | 0 | 1 | 0 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 16 | 1.510000 | 0 | 3 | 0 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 17 | 1.710000 | 0 | 2 | 0 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 18 | 1.810000 | 0 | 3 | 0 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 19 | 1.810000 | 0 | 1 | 0 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 20 | 2.010000 | 0 | 2 | 0 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 21 | 2.110000 | 0 | 1 | 0 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 22 | 2.110000 | 0 | 3 | 0 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 23 | 2.310000 | 0 | 2 | 0 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 24 | 2.410000 | 0 | 3 | 0 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 25 | 2.410000 | 0 | 1 | 0 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 26 | 2.610000 | 0 | 2 | 0 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 27 | 2.710000 | 0 | 1 | 0 | 16.0.0.1 | 1024 | 48.0.0.1 | → |
| 28 | 2.710000 | 0 | 3 | 0 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 29 | 2.910000 | 0 | 2 | 0 | 16.0.0.2 | 1024 | 48.0.0.2 | → |
| 30 | 3.010000 | 0 | 3 | 0 | 16.0.0.3 | 1024 | 48.0.0.3 | → |
| 31 | 3.010000 | 0 | 1 | 0 | 16.0.0.1 | 1024 | 48.0.0.1 | → |

- Average CPS: 10 packets per second (30 packets in 3 sec).
- Total of 3 flows, as specified in the configuration file.
- The flows come in bursts, as specified in the configuration file.

Currently, there is one global IP pool for clients and servers. It serves all templates. All templates will allocate IP from this global pool.

Each TRex client/server "dual-port" (pair of ports, such as port 0 for client, port 1 for server) has its own generator offset, taken from the config file. The offset is called dual_port_mask.

Example:

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.0.255"

servers_start : "48.0.0.1"

servers_end : "48.0.0.255"

dual_port_mask : "1.0.0.0" (1)

tcp_aging : 0

udp_aging : 0

- (1) Offset to add per port pair. The purpose of dual_port_mask is to make static route configuration per port possible. With this offset, different ports have different prefixes.

For example, with four ports, TRex will produce the following IP ranges:

port pair-0 (0,1) --> C (16.0.0.1-16.0.0.128 ) <-> S( 48.0.0.1 - 48.0.0.128)

port pair-1 (2,3) --> C (17.0.0.129-17.0.0.255 ) <-> S( 49.0.0.129 - 49.0.0.255) + mask ("1.0.0.0")

- Number of clients: 255
- Number of servers: 255
- The offset defined by dual_port_mask (1.0.0.0) is added for each port pair, but the total number of clients/servers remains constant (255) and does not depend on the number of ports.
- TCP/UDP aging is the time it takes to return the socket to the pool. It is required when the number of clients is very small and the template defines a very long duration.
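The effect of the offset on an address can be sketched with Python's standard ipaddress module. The helper name `offset_for_pair` is hypothetical, and the sketch assumes that the mask is simply added once per port-pair index, which matches the ranges listed above; how TRex splits the pool between pairs is not modeled.

```python
from ipaddress import IPv4Address


def offset_for_pair(address, dual_port_mask, pair_index):
    """Return `address` shifted by pair_index * dual_port_mask."""
    base = int(IPv4Address(address))
    mask = int(IPv4Address(dual_port_mask))
    return str(IPv4Address(base + pair_index * mask))


# Port pair 0 keeps the configured addresses; port pair 1 is shifted by 1.0.0.0.
print(offset_for_pair("16.0.0.129", "1.0.0.0", 0))
print(offset_for_pair("16.0.0.129", "1.0.0.0", 1))
print(offset_for_pair("48.0.0.129", "1.0.0.0", 1))
```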

If ``dual_port_mask'' was set to 0.0.0.0, both port pairs would have used the same ip range. For example, with four ports, we would have get the following ip range is :

port pair-0 (0,1) --> C (16.0.0.1-16.0.0.128 ) <-> S( 48.0.0.1 - 48.0.0.128)

port pair-1 (2,3) --> C (16.0.0.129-16.0.0.255 ) <-> S( 48.0.0.129 - 48.0.0.255)

- Router configuration for this mode:

-

PBR is not necessary. The following configuration is sufficient.

interface TenGigabitEthernet1/0/0 (1)

mac-address 0000.0001.0000

mtu 4000

ip address 11.11.11.11 255.255.255.0

!

interface TenGigabitEthernet1/1/0 (2)

mac-address 0000.0001.0000

mtu 4000

ip address 22.11.11.11 255.255.255.0

!

interface TenGigabitEthernet1/2/0 (3)

mac-address 0000.0001.0000

mtu 4000

ip address 33.11.11.11 255.255.255.0

!

interface TenGigabitEthernet1/3/0 (4)

mac-address 0000.0001.0000

mtu 4000

ip address 44.11.11.11 255.255.255.0

load-interval 30

ip route 16.0.0.0 255.0.0.0 22.11.11.12

ip route 48.0.0.0 255.0.0.0 11.11.11.12

ip route 17.0.0.0 255.0.0.0 44.11.11.12

ip route 49.0.0.0 255.0.0.0 33.11.11.12

-

Connected to TRex port 0 (client side)

-

Connected to TRex port 1 (server side)

-

Connected to TRex port 2 (client side)

-

Connected to TRex port 3 (server side)

- One server:

-

To support a template with one server, add the server_addr keyword. Each port pair will get a different server IP (according to the dual_port_mask offset).

- name: cap2/dns.pcap

cps : 1.0

ipg : 10000

rtt : 10000

w : 1

server_addr : "48.0.0.1" (1)

one_app_server : true (2)

-

Server IP.

-

Enable one server mode.
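As a rough illustration of how the single server address could differ per port pair, the sketch below shifts server_addr by the pair index times dual_port_mask. Multiplying by the pair index is an assumption based on the statement that the offset is added per port pair, and server_addr_for_pair is a hypothetical helper, not a TRex function.

```python
import ipaddress


def server_addr_for_pair(server_addr, dual_port_mask, pair_index):
    """Return server_addr shifted by pair_index * dual_port_mask.

    Assumption: the offset is applied once per port pair index.
    """
    base = int(ipaddress.IPv4Address(server_addr))
    offset = int(ipaddress.IPv4Address(dual_port_mask))
    return str(ipaddress.IPv4Address(base + pair_index * offset))


for pair in range(2):
    print(f"port pair-{pair}: server {server_addr_for_pair('48.0.0.1', '1.0.0.0', pair)}")
```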

In TRex server, you will see the following statistics.

Active-flows : 19509 Clients : 504 Socket-util : 0.0670 %

Open-flows : 247395 Servers : 65408 Socket : 21277 Socket/Clients : 42.2

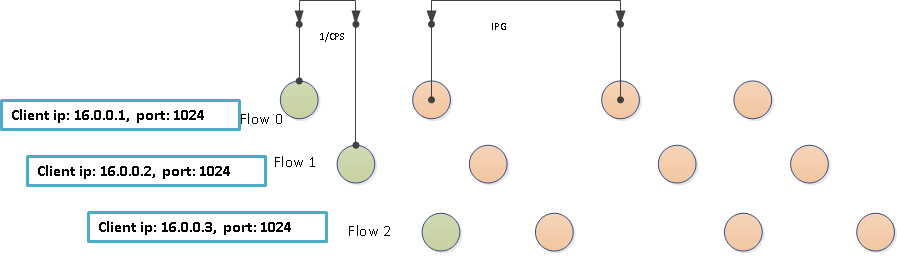

Each time a new flow is created, TRex allocates new Client IP/port and Server IP. This 3-tuple should be distinct among active flows.

Currently, only sequential distribution is supported in IP allocation. This means the IP address is increased by one for each flow.

For example, if we have a pool of two IP addresses, 16.0.0.1 and 16.0.0.2, the allocation of client IP/port pairs will be:

16.0.0.1 [1024]

16.0.0.2 [1024]

16.0.0.1 [1025]

16.0.0.2 [1025]

16.0.0.1 [1026]

16.0.0.2 [1026]

...

-

Let’s look at an example of one flow with 4 packets.

-

Green circles represent the first packet of each flow.

-

The client IP pool starts from 16.0.0.1, and the distribution is seq.
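The sequential client IP/port allocation described earlier in this section (walk the whole pool, then move to the next source port) can be sketched as a generator. The starting port 1024 comes from the example; the upper port bound of 65535 and the name seq_client_tuples are assumptions for illustration only, and the sketch ignores socket aging.

```python
import ipaddress
from itertools import islice


def seq_client_tuples(start, end, first_port=1024, last_port=65535):
    """Yield (client_ip, client_port) pairs in sequential order.

    Every IP in the pool is used once per port before the port is
    incremented. Socket aging and reuse are not modeled here.
    """
    first = int(ipaddress.IPv4Address(start))
    last = int(ipaddress.IPv4Address(end))
    for port in range(first_port, last_port + 1):
        for ip in range(first, last + 1):
            yield str(ipaddress.IPv4Address(ip)), port


for ip, port in islice(seq_client_tuples("16.0.0.1", "16.0.0.2"), 6):
    print(f"{ip} [{port}]")
```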

\(\text{Total PPS} = \sum_{k=0}^{n} CPS_{k} \times {flow\_pkts}_{k}\)

\(\text{Concurrent flows} = \sum_{k=0}^{n} CPS_{k} \times {flow\_duration}_{k}\)

The formulas above give the total PPS and the number of concurrent flows. The TRex throughput depends on the PPS calculated above and on the value of m (a multiplier given as the command-line argument -m).

The m value multiplies the CPS of every pcap file. The CPS of each pcap file is configured in the YAML file.

Let’s take a simple example as below.

cap_info :

- name: avl/first.pcap <-- has 2 packets

cps : 102.0

ipg : 10000

rtt : 10000

w : 1

- name: avl/second.pcap <-- has 20 packets

cps : 50.0

ipg : 10000

rtt : 10000

w : 1

The throughput is: m*(CPS_1*flow_pkts_1 + CPS_2*flow_pkts_2)

So if m is set to 1, the total PPS is: 102*2 + 50*20 = 1204 PPS.

The BPS depends on the packet size: BPS = PPS*Packet_size, in the unit used for the packet size (multiply a size in bytes by 8 to get bits per second).
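The arithmetic above can be checked with a few lines of Python. The template values (CPS and packets per flow) are the ones from the example; the average packet size of 100 bytes is a made-up figure used only to show the BPS conversion, and total_pps is a hypothetical helper name.

```python
def total_pps(templates, m=1.0):
    """Total packets per second: m * sum(CPS_k * flow_pkts_k)."""
    return m * sum(cps * flow_pkts for cps, flow_pkts in templates)


# (cps, packets per flow) for avl/first.pcap and avl/second.pcap
templates = [(102.0, 2), (50.0, 20)]

pps = total_pps(templates, m=1)
print(pps)  # 1204.0

# Hypothetical average packet size, only to illustrate the conversion.
avg_packet_bytes = 100
bits_per_second = pps * avg_packet_bytes * 8
print(bits_per_second)
```

Doubling m doubles the result, since m scales the CPS of every template equally.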

-

1) per-template generator

Multiple generators can be defined and assigned to different pcap file templates.

The YAML configuration is something like this:

generator :

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.1.255"

servers_start : "48.0.0.1"

servers_end : "48.0.20.255"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 0

udp_aging : 0

generator_clients :

- name : "c1"

distribution : "random"

ip_start : "38.0.0.1"

ip_end : "38.0.1.255"

clients_per_gb : 201

min_clients : 101

dual_port_mask : "1.0.0.0"

tcp_aging : 0

udp_aging : 0

generator_servers :

- name : "s1"

distribution : "seq"

ip_start : "58.0.0.1"

ip_end : "58.0.1.255"

dual_port_mask : "1.0.0.0"

cap_info :

- name: avl/delay_10_http_get_0.pcap

cps : 404.52

ipg : 10000

rtt : 10000

w : 1

- name: avl/delay_10_http_post_0.pcap

client_pool : "c1"

server_pool : "s1"

cps : 404.52

ipg : 10000

rtt : 10000

w : 1

-

2) More distributions will be supported in the future (normal distribution for example)

Currently, only seq (sequential) and random are supported.
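To make the per-template pool assignment from item 1 concrete, the sketch below mirrors the relevant keys of that YAML example as a Python dict and resolves which client and server pool each template uses. Treating a template without client_pool/server_pool as using the global generator is an assumption consistent with the example; the "<global>" label and the resolve_template_pools name are hypothetical.

```python
def resolve_template_pools(config):
    """Return (template, client_pool, server_pool) for each cap_info entry.

    Templates that name no pool are assumed to fall back to the global
    generator, shown here with the placeholder label "<global>".
    """
    client_pools = {p["name"] for p in config.get("generator_clients", [])}
    server_pools = {p["name"] for p in config.get("generator_servers", [])}

    resolved = []
    for template in config["cap_info"]:
        client = template.get("client_pool")
        server = template.get("server_pool")
        if client is not None and client not in client_pools:
            raise ValueError(f"unknown client pool: {client}")
        if server is not None and server not in server_pools:
            raise ValueError(f"unknown server pool: {server}")
        resolved.append((template["name"],
                         client or "<global>",
                         server or "<global>"))
    return resolved


config = {
    "generator_clients": [{"name": "c1", "distribution": "random"}],
    "generator_servers": [{"name": "s1", "distribution": "seq"}],
    "cap_info": [
        {"name": "avl/delay_10_http_get_0.pcap", "cps": 404.52},
        {"name": "avl/delay_10_http_post_0.pcap", "cps": 404.52,
         "client_pool": "c1", "server_pool": "s1"},
    ],
}

for row in resolve_template_pools(config):
    print(row)
```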

-

3) Histogram of tuple pool will be supported

This feature will give the user more flexibility in defining the IP generator.

generator :

client_pools:

- name : "a"

distribution : "seq"

clients_start : "16.0.0.1"

clients_end : "16.0.1.255"

tcp_aging : 0

udp_aging : 0

- name : "b"

distribution : "random"

clients_start : "26.0.0.1"

clients_end : "26.0.1.255"

tcp_aging : 0

udp_aging : 0

- name : "c"

pools_list :

- name: "a"

probability: 0.8

- name: "b"

probability: 0.2

To measure jitter/latency using independent flows (SCTP or ICMP), use -l <Hz>, where Hz defines the number of packets to send from each port per second. The type of traffic used for the latency measurement is selected with the --l-pkt-mode <0-3> option.

| Option ID | Type |
|---|---|
| 0 | Default, SCTP packets |
| 1 | ICMP echo request packets from both sides |
| 2 | Send ICMP requests from one side, and matching ICMP responses from the other side. This is particularly useful if your DUT drops traffic from outside and you need to open a pinhole to let the outside traffic in (for example, when testing a firewall) |
| 3 | Send ICMP request packets with a constant 0 sequence number from both sides. |

The command output of the t-rex utility with latency stats enabled looks similar to the following:

-Latency stats enabled

Cpu Utilization : 0.2 % <1> <5> <6> <7> <8>

if| tx_ok , rx_ok , rx check ,error, latency (usec) , Jitter max window

| , <2> , <3> , <4> , average , max , (usec)

------------------------------------------------------------------------------------------------------

0 | 1002, 1002, 0, 0, 51 , 69, 3 | 0 69 67 ...

1 | 1002, 1002, 0, 0, 53 , 196, 2 | 0 196 53 ...

2 | 1002, 1002, 0, 0, 54 , 71, 5 | 0 71 69 ...

3 | 1002, 1002, 0, 0, 53 , 193, 2 | 0 193 52 ...

-

Rx check and latency thread CPU utilization.

-

tx_ok on port 0 should equal rx_ok on port 1, and vice versa, for all paired ports.

-

Rx check rate as per --rx-check. For more information on Rx check, see the Flow order/latency verification section.

-

Number of packet errors detected (for various reasons; see stateful_rx_core.cpp).

-

Average latency (in microseconds) across all samples since startup.

-

Maximum latency (in microseconds) across all samples since startup.

-

Jitter calculated as per RFC 3550, Appendix A.8.

-

Maximum latency within a sliding window of 500 milliseconds. Several values are shown per port, depending on the terminal width. The oldest value is on the left and the most recent value (latest 500 msec sample) is on the right. This can help in identifying spikes of high latency that clear after some time. Maximum latency (<6>) is the total maximum over the entire test duration. To best understand this, run TRex with the latency option (-l) and watch the results.
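The jitter and window columns can be illustrated with a small sketch. The jitter update follows the RFC 3550 estimator, J = J + (|D| - J)/16, where D is the difference between two consecutive transit times. The window part keeps the maximum latency over the last 500 msec of samples. The class and method names are hypothetical, and this is not the TRex implementation, which may bucket the window values differently.

```python
from collections import deque


class LatencyStats:
    """Running jitter (RFC 3550 style) and a sliding-window latency max."""

    def __init__(self, window_usec=500_000):
        self.jitter = 0.0
        self.prev_latency = None
        self.window_usec = window_usec
        self.samples = deque()  # (timestamp_usec, latency_usec)

    def add(self, timestamp_usec, latency_usec):
        # D is the change in transit time between consecutive packets.
        if self.prev_latency is not None:
            d = abs(latency_usec - self.prev_latency)
            self.jitter += (d - self.jitter) / 16.0
        self.prev_latency = latency_usec

        # Keep only samples that fall inside the sliding window.
        self.samples.append((timestamp_usec, latency_usec))
        cutoff = timestamp_usec - self.window_usec
        while self.samples and self.samples[0][0] <= cutoff:
            self.samples.popleft()

    def window_max(self):
        return max(latency for _, latency in self.samples)


stats = LatencyStats()
for ts, latency in [(0, 100), (1_000, 100), (2_000, 116)]:
    stats.add(ts, latency)
print(stats.jitter, stats.window_max())
```

A spike raises the window maximum only until it ages out of the 500 msec window, while the overall maximum (<6>) would keep it for the whole run.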