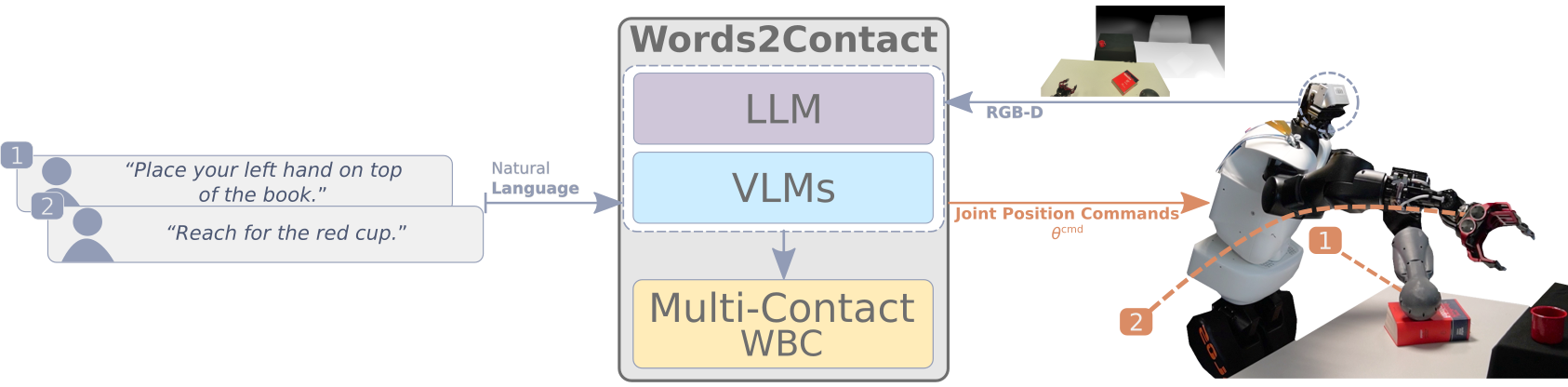

Official implementation of the paper "Words2Contact: Identifying Support Contacts from Verbal Instructions Using Foundation Models" presented at IEEE-RAS Humanoids 2024.

This repository contains the implementation of the LLMs/VLMs part of the project. For the multi-contact whole-body controller, please visit this repo.

For more details, visit the paper website.

.

├── .ci/ # Docker configurations

│ └── Dockerfile # Dockerfile to build the project's container

├── config/ # Configuration files for models

│ └── GroundingDINO_SwinT_OGC.py # GroundingDINO configuration

├── data/ # Test data and outputs

│ ├── test.png # Example input image

│ └── test_output.png # Example output image

├── media/ # Media assets

│ ├── ack.png # Acknowledgment image

│ └── concept_figure_wide.png # Conceptual figure for the project

├── submodules/ # External submodules

│ └── CLIP_Surgery/ # CLIP Surgery code and resources

├── words2contact/ # Core project source code

│ ├── grammar/ # Grammars for constraining language models

│ │ ├── classifier.gbnf # Grammar for classifying outputs

│ │ └── README.md # Grammar module documentation

│ ├── prompts/ # Prompts for LLMs

│ │ └── prompts.json # JSON file with pre-defined prompts

│ ├── geom_utils.py # Utilities for geometric calculations

│ ├── math_pars.py # Parsing mathematical expressions

│ ├── saygment.py # Language-grounded segmentation

│ ├── words2contacts.py # Core script for Words2Contact

│ └── yello.py # Language-grounded object detection

├── main.py # Entry point for the project

├── launch.sh # Docker launch script

├── object_detection.py # Object detection testing

├── object_segmentation.py # Object segmentation testing

└── README.md # Documentation (this file)

Before starting, ensure you have the following:

- Docker

- NVIDIA Container Toolkit (only if using a GPU, which is recommended)

- An OpenAI API Key (if using GPT-based LLMs). You can obtain it from OpenAI.

For now, only Docker is supported; conda and pip installations will be added soon.

- Clone the repository:

git clone https://github.com/hucebot/words2contact.git

cd words2contact

- Build the Docker image:

docker build -t words2contact -f .ci/Dockerfile .

If you plan to use OpenAI's GPT-based LLMs, set your API key as an environment variable before launching the Docker container:

export OPENAI_KEY=<your_openai_api_key>

Run the following command to start the container:

bash launch.sh

This will create a `models/` folder in the root of the project where models will be downloaded and stored.
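Because `--use_gpt` requires `OPENAI_KEY`, a small pre-flight check can catch a missing or blank key before anything is launched. The following is a minimal sketch, not part of the repository; the helper name `get_openai_key` is hypothetical, and it only inspects the environment without contacting OpenAI.

```python
import os
from typing import Mapping, Optional


def get_openai_key(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the OPENAI_KEY value with whitespace stripped, or None if unset or blank.

    `env` defaults to the process environment; passing a dict makes the
    function easy to exercise without touching real variables.
    """
    env = os.environ if env is None else env
    key = env.get("OPENAI_KEY", "").strip()
    return key or None


if __name__ == "__main__":
    if get_openai_key() is None:
        print("OPENAI_KEY is not set; export it before using --use_gpt.")
    else:
        print("OPENAI_KEY found.")
```

Running this in the same shell where the `export` was issued shows whether the variable is visible to child processes.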

To test Words2Contact with the provided example image:

python main.py --image_path data/test.png --prompt "Place your hand above the red bowl."

The output will be saved as data/test_output.png.

More examples coming soon!

usage: main.py [-h] [--image_path IMAGE_PATH] [--prompt PROMPT] [--use_gpt] [--yello_vlm YELLO_VLM] [--output_path OUTPUT_PATH] [--llm_path LLM_PATH] [--chat_template CHAT_TEMPLATE]

Run Words2Contact with an image and a text prompt.

options:

-h, --help show this help message and exit

--image_path IMAGE_PATH Path to the input image file. Default: 'data/test.png'.

--prompt PROMPT Text prompt for Words2Contact. Default: 'Place your hand above the red bowl.'.

--use_gpt Use OpenAI API for the LLM (requires `OPENAI_KEY`).

--yello_vlm YELLO_VLM Model to use for YELLO VLM. Default: 'GroundingDINO'.

--output_path OUTPUT_PATH Path to save the output image. Default: 'data/test_output.png'.

--llm_path LLM_PATH Path to the `.gguf` LLM model weights.

--chat_template CHAT_TEMPLATE Chat template to use for local LLMs. Default: 'ChatML'.
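The help text above corresponds to an argument parser roughly like the one below. This is a reconstruction from the printed usage only, not the repository's actual `main.py`: the function name `build_parser` is hypothetical, and the `None` default for `--llm_path` is an assumption, since the help text states no default for it.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser that mirrors the options listed in the usage text."""
    parser = argparse.ArgumentParser(
        prog="main.py",
        description="Run Words2Contact with an image and a text prompt.",
    )
    parser.add_argument("--image_path", default="data/test.png",
                        help="Path to the input image file.")
    parser.add_argument("--prompt", default="Place your hand above the red bowl.",
                        help="Text prompt for Words2Contact.")
    parser.add_argument("--use_gpt", action="store_true",
                        help="Use OpenAI API for the LLM (requires OPENAI_KEY).")
    parser.add_argument("--yello_vlm", default="GroundingDINO",
                        help="Model to use for YELLO VLM.")
    parser.add_argument("--output_path", default="data/test_output.png",
                        help="Path to save the output image.")
    # Assumption: no default is documented for --llm_path, so None is used here.
    parser.add_argument("--llm_path", default=None,
                        help="Path to the .gguf LLM model weights.")
    parser.add_argument("--chat_template", default="ChatML",
                        help="Chat template to use for local LLMs.")
    return parser


# Parse an explicit argument list instead of sys.argv so the sketch is
# safe to import or run from any context.
args = build_parser().parse_args(["--prompt", "Place your hand above the red bowl."])
print(args.image_path, args.output_path, args.use_gpt)
```

With no flags given, every option falls back to the default listed in the help text, which is why the example command needs only `--image_path` and `--prompt`.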

- Download `.gguf` weights for local LLMs from a trusted source (e.g., TheBloke's Hugging Face models).

- Place the weights in the `models/` folder.

- Specify the `--llm_path` argument when running the script:

python main.py --image_path data/test.png --llm_path models/local_model.gguf
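The steps above can be sketched as a small helper that lists the `.gguf` files found in `models/` and builds the matching command line. This is an illustrative sketch, not code from the repository; the function names `find_gguf_weights` and `local_llm_command` are hypothetical, and only the flags shown in the usage text are used.

```python
from pathlib import Path
from typing import List, Union


def find_gguf_weights(models_dir: Union[str, Path] = "models") -> List[Path]:
    """Return the .gguf files directly inside models_dir, sorted by name.

    A missing directory yields an empty list rather than an error.
    """
    root = Path(models_dir)
    if not root.is_dir():
        return []
    return sorted(p for p in root.iterdir() if p.is_file() and p.suffix == ".gguf")


def local_llm_command(weights: Union[str, Path],
                      image_path: str = "data/test.png") -> List[str]:
    """Build the argument list for running main.py with local weights."""
    return ["python", "main.py",
            "--image_path", image_path,
            "--llm_path", str(weights)]


if __name__ == "__main__":
    candidates = find_gguf_weights("models")
    if not candidates:
        print("No .gguf weights found in models/.")
    for weights in candidates:
        # Print each command so it can be copied into the container shell.
        print(" ".join(local_llm_command(weights)))
```

The command is returned as a list so it can be passed to `subprocess.run` without shell quoting concerns.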

For questions or support, please contact:

- Dionis Totsila: [email protected]

If you use Words2Contact, our dataset or part of this code in your research, please cite our paper:

@INPROCEEDINGS{10769902,

author={Totsila, Dionis and Rouxel, Quentin and Mouret, Jean-Baptiste and Ivaldi, Serena},

booktitle={2024 IEEE-RAS 23rd International Conference on Humanoid Robots (Humanoids)},

title={Words2Contact: Identifying Support Contacts from Verbal Instructions Using Foundation Models},

year={2024},

volume={},

number={},

pages={9-16},

keywords={Accuracy;Large language models;Pipelines;Natural languages;Humanoid robots;Transforms;Benchmark testing;Iterative methods;Surface treatment},

doi={10.1109/Humanoids58906.2024.10769902}}

This research was supported by:

- CPER CyberEntreprises

- Creativ’Lab platform of Inria/LORIA

- EU Horizon project euROBIN (GA n.101070596)

- France 2030 program through the PEPR O2R projects AS3 and PI3 (ANR-22-EXOD-007, ANR-22-EXOD-004)