A TensorFlow implementation of style transfer (neural style) as described in the papers:

- A Neural Algorithm of Artistic Style : submitted version

- Image Style Transfer Using Convolutional Neural Networks : published version

by Leon A. Gatys, Alexander S. Ecker, Matthias Bethge

- TensorFlow

- Python packages : numpy, scipy, PIL, matplotlib

- Pretrained VGG19 file : imagenet-vgg-verydeep-19.mat

* Please download the file from the link above.

* Save the file under pre_trained_model (the default value of --model_path).

python run_main.py --content <content file> --style <style file> --output <output file>

Example:

python run_main.py --content images/tubingen.jpg --style images/starry-night.jpg --output result.jpg

Required :

- --content: Filename of the content image. Default: images/tubingen.jpg

- --style: Filename of the style image. Default: images/starry-night.jpg

- --output: Filename of the output image. Default: result.jpg

Optional :

- --model_path: Path to the directory containing the pretrained VGG19 file. Default: pre_trained_model

- --loss_ratio: Weight of the content loss relative to the style loss (alpha over beta in the paper). Default: 1e-3

- --content_layers: Space-separated VGG-19 layer names used to compute the content loss. Default: conv4_2

- --style_layers: Space-separated VGG-19 layer names used to compute the style loss. Default: relu1_1 relu2_1 relu3_1 relu4_1 relu5_1

- --content_layer_weights: Space-separated weights of each content layer in the content loss. Default: 1.0

- --style_layer_weights: Space-separated weights of each style layer in the style loss. Default: 0.2 0.2 0.2 0.2 0.2

- --max_size: Maximum width or height of the input images. Default: 512

- --num_iter: Number of iterations to run. Default: 1000

- --initial_type: Initial image for the optimization (denoted x in the paper). Choices: content, style, random. Default: content

- --content_loss_norm_type: Type of normalization applied to the content loss. Choices: 1, 2, 3. Default: 3
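The options above could be declared with `argparse` roughly as follows. This is a minimal sketch based only on the flags and defaults listed in this README, not the actual contents of run_main.py; the function names `build_parser` and `check_args` are hypothetical, and the check that each layer list has a matching weight list is an assumption about what a sensible implementation would enforce.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the documented options (a sketch, not run_main.py)."""
    p = argparse.ArgumentParser(description="Neural style transfer (sketch)")
    p.add_argument("--content", default="images/tubingen.jpg",
                   help="filename of the content image")
    p.add_argument("--style", default="images/starry-night.jpg",
                   help="filename of the style image")
    p.add_argument("--output", default="result.jpg",
                   help="filename of the output image")
    p.add_argument("--model_path", default="pre_trained_model",
                   help="directory containing the pretrained VGG19 file")
    p.add_argument("--loss_ratio", type=float, default=1e-3,
                   help="content-loss weight relative to style-loss (alpha / beta)")
    # nargs="+" collects space-separated values into a list
    p.add_argument("--content_layers", nargs="+", default=["conv4_2"])
    p.add_argument("--style_layers", nargs="+",
                   default=["relu1_1", "relu2_1", "relu3_1", "relu4_1", "relu5_1"])
    p.add_argument("--content_layer_weights", nargs="+", type=float, default=[1.0])
    p.add_argument("--style_layer_weights", nargs="+", type=float,
                   default=[0.2, 0.2, 0.2, 0.2, 0.2])
    p.add_argument("--max_size", type=int, default=512)
    p.add_argument("--num_iter", type=int, default=1000)
    p.add_argument("--initial_type", choices=["content", "style", "random"],
                   default="content")
    p.add_argument("--content_loss_norm_type", type=int, choices=[1, 2, 3], default=3)
    return p


def check_args(args):
    """Assumed sanity check: one weight per layer for both losses."""
    if len(args.content_layers) != len(args.content_layer_weights):
        raise ValueError("content_layers and content_layer_weights differ in length")
    if len(args.style_layers) != len(args.style_layer_weights):
        raise ValueError("style_layers and style_layer_weights differ in length")
    return args
```

With a parser like this, overriding the style layers also requires overriding their weights, for example `--style_layers relu1_1 relu2_1 --style_layer_weights 0.5 0.5`.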

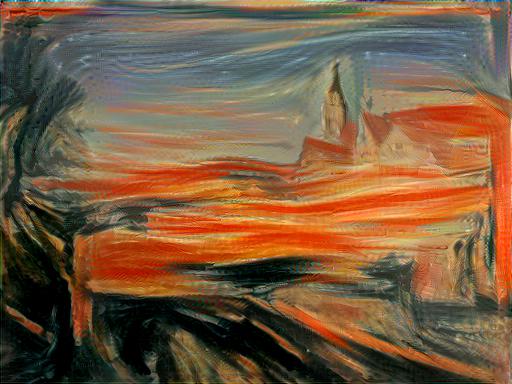

These results were obtained with the default settings. Each image took approximately 4 minutes to generate on a GTX 980 Ti.

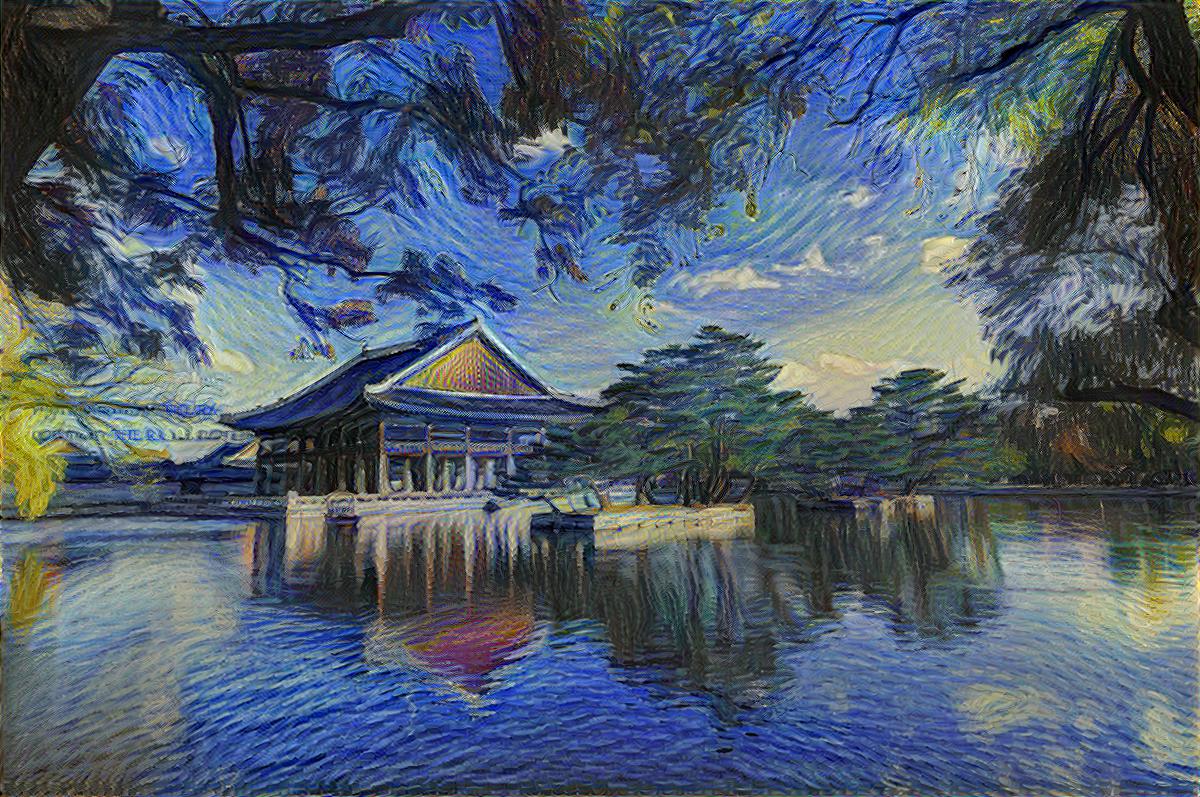

These results were obtained with the default settings, except for --max_size 1200.

Each image took approximately 19.5 minutes to generate on a GTX 980 Ti.

There are several other TensorFlow implementations of the paper.

- This is a tutorial version. The code is well commented, and exercises are provided to check what you have learned.

- This is a simple implementation, but its optimizer is Adam rather than L-BFGS.

- There are other implementations related to style transfer, such as video style transfer and color-preserving style transfer.

I went through these implementations and found several differences among them.

- Style image shape: implementations differ in how they resize the style image.

- Optimizer: gradient descent, Adam, or L-BFGS

- Scale factor of loss: the scale factors applied to the content loss and the style loss differ

- Total loss: total variation denoising is implemented differently
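To make the last two differences concrete, here is a NumPy sketch of two common forms of a total variation term and of a weighted total loss. It is illustrative only and does not reproduce any of the implementations mentioned above: the function names, the `tv_weight` parameter, and the choice to fix beta at 1 so that alpha equals the loss ratio are all assumptions made for the example.

```python
import numpy as np


def tv_l1(img):
    """Anisotropic total variation: sum of absolute neighbor differences.

    img is assumed to be a float array of shape (height, width, channels).
    """
    dh = np.abs(img[1:, :, :] - img[:-1, :, :]).sum()  # vertical neighbors
    dw = np.abs(img[:, 1:, :] - img[:, :-1, :]).sum()  # horizontal neighbors
    return float(dh + dw)


def tv_l2(img):
    """Squared-difference variant: sum of squared neighbor differences."""
    dh = np.square(img[1:, :, :] - img[:-1, :, :]).sum()
    dw = np.square(img[:, 1:, :] - img[:, :-1, :]).sum()
    return float(dh + dw)


def total_loss(content_loss, style_loss, loss_ratio=1e-3, tv=0.0, tv_weight=0.0):
    """Weighted sum alpha * content + beta * style (+ optional TV term).

    Assumption: beta is fixed to 1, so alpha equals loss_ratio (alpha / beta).
    tv_weight is a hypothetical parameter, not an option of run_main.py.
    """
    alpha, beta = loss_ratio, 1.0
    return alpha * content_loss + beta * style_loss + tv_weight * tv
```

The two variants penalize the same neighbor differences but scale very differently on the same image, which is one reason loss weights are not directly comparable across implementations.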

This implementation has been tested on TensorFlow r0.12.