diff --git a/README.md b/README.md

index 93e3358f024a..294f4cc6edc0 100644

--- a/README.md

+++ b/README.md

@@ -388,7 +388,8 @@ Current number of checkpoints: ** (from Berkeley) released with the paper [SqueezeBERT: What can computer vision teach NLP about efficient neural networks?](https://arxiv.org/abs/2006.11316) by Forrest N. Iandola, Albert E. Shaw, Ravi Krishna, and Kurt W. Keutzer.

1. **[Swin Transformer](https://huggingface.co/docs/transformers/model_doc/swin)** (from Microsoft) released with the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030) by Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo.

1. **[Swin Transformer V2](https://huggingface.co/docs/transformers/model_doc/swinv2)** (from Microsoft) released with the paper [Swin Transformer V2: Scaling Up Capacity and Resolution](https://arxiv.org/abs/2111.09883) by Ze Liu, Han Hu, Yutong Lin, Zhuliang Yao, Zhenda Xie, Yixuan Wei, Jia Ning, Yue Cao, Zheng Zhang, Li Dong, Furu Wei, Baining Guo.

-1. **[SwitchTransformers](https://huggingface.co/docs/transformers/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

+1. **[Swin2SR](https://huggingface.co/docs/transformers/main/model_doc/swin2sr)** (from University of Würzburg) released with the paper [Swin2SR: SwinV2 Transformer for Compressed Image Super-Resolution and Restoration](https://arxiv.org/abs/2209.11345) by Marcos V. Conde, Ui-Jin Choi, Maxime Burchi, Radu Timofte.

+1. **[SwitchTransformers](https://huggingface.co/docs/transformers/main/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (from Google AI) released in the repository [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[Table Transformer](https://huggingface.co/docs/transformers/model_doc/table-transformer)** (from Microsoft Research) released with the paper [PubTables-1M: Towards Comprehensive Table Extraction From Unstructured Documents](https://arxiv.org/abs/2110.00061) by Brandon Smock, Rohith Pesala, Robin Abraham.

diff --git a/README_es.md b/README_es.md

index d4585a2b9559..4e262ab440a8 100644

--- a/README_es.md

+++ b/README_es.md

@@ -388,7 +388,8 @@ Número actual de puntos de control: ** (from Berkeley) released with the paper [SqueezeBERT: What can computer vision teach NLP about efficient neural networks?](https://arxiv.org/abs/2006.11316) by Forrest N. Iandola, Albert E. Shaw, Ravi Krishna, and Kurt W. Keutzer.

1. **[Swin Transformer](https://huggingface.co/docs/transformers/model_doc/swin)** (from Microsoft) released with the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030) by Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo.

1. **[Swin Transformer V2](https://huggingface.co/docs/transformers/model_doc/swinv2)** (from Microsoft) released with the paper [Swin Transformer V2: Scaling Up Capacity and Resolution](https://arxiv.org/abs/2111.09883) by Ze Liu, Han Hu, Yutong Lin, Zhuliang Yao, Zhenda Xie, Yixuan Wei, Jia Ning, Yue Cao, Zheng Zhang, Li Dong, Furu Wei, Baining Guo.

-1. **[SwitchTransformers](https://huggingface.co/docs/transformers/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

+1. **[Swin2SR](https://huggingface.co/docs/transformers/main/model_doc/swin2sr)** (from University of Würzburg) released with the paper [Swin2SR: SwinV2 Transformer for Compressed Image Super-Resolution and Restoration](https://arxiv.org/abs/2209.11345) by Marcos V. Conde, Ui-Jin Choi, Maxime Burchi, Radu Timofte.

+1. **[SwitchTransformers](https://huggingface.co/docs/transformers/main/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (from Google AI) released in the repository [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[Table Transformer](https://huggingface.co/docs/transformers/model_doc/table-transformer)** (from Microsoft Research) released with the paper [PubTables-1M: Towards Comprehensive Table Extraction From Unstructured Documents](https://arxiv.org/abs/2110.00061) by Brandon Smock, Rohith Pesala, Robin Abraham.

diff --git a/README_hd.md b/README_hd.md

index d91e02d6bdd3..9102503b65a1 100644

--- a/README_hd.md

+++ b/README_hd.md

@@ -361,7 +361,8 @@ conda install -c huggingface transformers

1. **[SqueezeBERT](https://huggingface.co/docs/transformers/model_doc/squeezebert)** (बर्कले से) कागज के साथ [SqueezeBERT: कुशल तंत्रिका नेटवर्क के बारे में NLP को कंप्यूटर विज़न क्या सिखा सकता है?](https: //arxiv.org/abs/2006.11316) फॉरेस्ट एन. इनडोला, अल्बर्ट ई. शॉ, रवि कृष्णा, और कर्ट डब्ल्यू. केटज़र द्वारा।

1. **[Swin Transformer](https://huggingface.co/docs/transformers/model_doc/swin)** (माइक्रोसॉफ्ट से) साथ में कागज [स्वाइन ट्रांसफॉर्मर: शिफ्टेड विंडोज का उपयोग कर पदानुक्रमित विजन ट्रांसफॉर्मर](https://arxiv .org/abs/2103.14030) ज़ी लियू, युटोंग लिन, यू काओ, हान हू, यिक्सुआन वेई, झेंग झांग, स्टीफन लिन, बैनिंग गुओ द्वारा।

1. **[Swin Transformer V2](https://huggingface.co/docs/transformers/model_doc/swinv2)** (Microsoft से) साथ वाला पेपर [Swin Transformer V2: स्केलिंग अप कैपेसिटी एंड रेजोल्यूशन](https:// ज़ी लियू, हान हू, युटोंग लिन, ज़ुलिआंग याओ, ज़ेंडा ज़ी, यिक्सुआन वेई, जिया निंग, यू काओ, झेंग झांग, ली डोंग, फुरु वेई, बैनिंग गुओ द्वारा arxiv.org/abs/2111.09883।

-1. **[SwitchTransformers](https://huggingface.co/docs/transformers/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

+1. **[Swin2SR](https://huggingface.co/docs/transformers/main/model_doc/swin2sr)** (from University of Würzburg) released with the paper [Swin2SR: SwinV2 Transformer for Compressed Image Super-Resolution and Restoration](https://arxiv.org/abs/2209.11345) by Marcos V. Conde, Ui-Jin Choi, Maxime Burchi, Radu Timofte.

+1. **[SwitchTransformers](https://huggingface.co/docs/transformers/main/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (来自 Google AI)कॉलिन रैफेल और नोम शज़ीर और एडम रॉबर्ट्स और कैथरीन ली और शरण नारंग और माइकल मटेना द्वारा साथ में पेपर [एक एकीकृत टेक्स्ट-टू-टेक्स्ट ट्रांसफॉर्मर के साथ स्थानांतरण सीखने की सीमा की खोज] (https://arxiv.org/abs/1910.10683) और यांकी झोउ और वेई ली और पीटर जे लियू।

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (Google AI से) साथ वाला पेपर [google-research/text-to-text-transfer- ट्रांसफॉर्मर](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) कॉलिन रैफेल और नोम शज़ीर और एडम रॉबर्ट्स और कैथरीन ली और शरण नारंग द्वारा और माइकल मटेना और यांकी झोउ और वेई ली और पीटर जे लियू।

1. **[Table Transformer](https://huggingface.co/docs/transformers/model_doc/table-transformer)** (माइक्रोसॉफ्ट रिसर्च से) साथ में पेपर [पबटेबल्स-1एम: टूवर्ड्स कॉम्प्रिहेंसिव टेबल एक्सट्रैक्शन फ्रॉम अनस्ट्रक्चर्ड डॉक्यूमेंट्स ](https://arxiv.org/abs/2110.00061) ब्रैंडन स्मॉक, रोहित पेसाला, रॉबिन अब्राहम द्वारा पोस्ट किया गया।

diff --git a/README_ja.md b/README_ja.md

index f0cc1d31a3a9..171f7b0579f6 100644

--- a/README_ja.md

+++ b/README_ja.md

@@ -423,7 +423,8 @@ Flax、PyTorch、TensorFlowをcondaでインストールする方法は、それ

1. **[SqueezeBERT](https://huggingface.co/docs/transformers/model_doc/squeezebert)** (from Berkeley) released with the paper [SqueezeBERT: What can computer vision teach NLP about efficient neural networks?](https://arxiv.org/abs/2006.11316) by Forrest N. Iandola, Albert E. Shaw, Ravi Krishna, and Kurt W. Keutzer.

1. **[Swin Transformer](https://huggingface.co/docs/transformers/model_doc/swin)** (from Microsoft) released with the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030) by Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo.

1. **[Swin Transformer V2](https://huggingface.co/docs/transformers/model_doc/swinv2)** (from Microsoft) released with the paper [Swin Transformer V2: Scaling Up Capacity and Resolution](https://arxiv.org/abs/2111.09883) by Ze Liu, Han Hu, Yutong Lin, Zhuliang Yao, Zhenda Xie, Yixuan Wei, Jia Ning, Yue Cao, Zheng Zhang, Li Dong, Furu Wei, Baining Guo.

-1. **[SwitchTransformers](https://huggingface.co/docs/transformers/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

+1. **[Swin2SR](https://huggingface.co/docs/transformers/main/model_doc/swin2sr)** (from University of Würzburg) released with the paper [Swin2SR: SwinV2 Transformer for Compressed Image Super-Resolution and Restoration](https://arxiv.org/abs/2209.11345) by Marcos V. Conde, Ui-Jin Choi, Maxime Burchi, Radu Timofte.

+1. **[SwitchTransformers](https://huggingface.co/docs/transformers/main/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (from Google AI) released in the repository [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[Table Transformer](https://huggingface.co/docs/transformers/model_doc/table-transformer)** (from Microsoft Research) released with the paper [PubTables-1M: Towards Comprehensive Table Extraction From Unstructured Documents](https://arxiv.org/abs/2110.00061) by Brandon Smock, Rohith Pesala, Robin Abraham.

diff --git a/README_ko.md b/README_ko.md

index 023d71f90d68..0c0b951aa8ee 100644

--- a/README_ko.md

+++ b/README_ko.md

@@ -338,7 +338,8 @@ Flax, PyTorch, TensorFlow 설치 페이지에서 이들을 conda로 설치하는

1. **[SqueezeBERT](https://huggingface.co/docs/transformers/model_doc/squeezebert)** (from Berkeley) released with the paper [SqueezeBERT: What can computer vision teach NLP about efficient neural networks?](https://arxiv.org/abs/2006.11316) by Forrest N. Iandola, Albert E. Shaw, Ravi Krishna, and Kurt W. Keutzer.

1. **[Swin Transformer](https://huggingface.co/docs/transformers/model_doc/swin)** (from Microsoft) released with the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030) by Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo.

1. **[Swin Transformer V2](https://huggingface.co/docs/transformers/model_doc/swinv2)** (from Microsoft) released with the paper [Swin Transformer V2: Scaling Up Capacity and Resolution](https://arxiv.org/abs/2111.09883) by Ze Liu, Han Hu, Yutong Lin, Zhuliang Yao, Zhenda Xie, Yixuan Wei, Jia Ning, Yue Cao, Zheng Zhang, Li Dong, Furu Wei, Baining Guo.

-1. **[SwitchTransformers](https://huggingface.co/docs/transformers/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

+1. **[Swin2SR](https://huggingface.co/docs/transformers/main/model_doc/swin2sr)** (from University of Würzburg) released with the paper [Swin2SR: SwinV2 Transformer for Compressed Image Super-Resolution and Restoration](https://arxiv.org/abs/2209.11345) by Marcos V. Conde, Ui-Jin Choi, Maxime Burchi, Radu Timofte.

+1. **[SwitchTransformers](https://huggingface.co/docs/transformers/main/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (from Google AI) released in the repository [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[Table Transformer](https://huggingface.co/docs/transformers/model_doc/table-transformer)** (from Microsoft Research) released with the paper [PubTables-1M: Towards Comprehensive Table Extraction From Unstructured Documents](https://arxiv.org/abs/2110.00061) by Brandon Smock, Rohith Pesala, Robin Abraham.

diff --git a/README_zh-hans.md b/README_zh-hans.md

index 0f29ab657507..2a823dab8b43 100644

--- a/README_zh-hans.md

+++ b/README_zh-hans.md

@@ -362,7 +362,8 @@ conda install -c huggingface transformers

1. **[SqueezeBERT](https://huggingface.co/docs/transformers/model_doc/squeezebert)** (来自 Berkeley) 伴随论文 [SqueezeBERT: What can computer vision teach NLP about efficient neural networks?](https://arxiv.org/abs/2006.11316) 由 Forrest N. Iandola, Albert E. Shaw, Ravi Krishna, and Kurt W. Keutzer 发布。

1. **[Swin Transformer](https://huggingface.co/docs/transformers/model_doc/swin)** (来自 Microsoft) 伴随论文 [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030) 由 Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo 发布。

1. **[Swin Transformer V2](https://huggingface.co/docs/transformers/model_doc/swinv2)** (来自 Microsoft) 伴随论文 [Swin Transformer V2: Scaling Up Capacity and Resolution](https://arxiv.org/abs/2111.09883) 由 Ze Liu, Han Hu, Yutong Lin, Zhuliang Yao, Zhenda Xie, Yixuan Wei, Jia Ning, Yue Cao, Zheng Zhang, Li Dong, Furu Wei, Baining Guo 发布。

-1. **[SwitchTransformers](https://huggingface.co/docs/transformers/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

+1. **[Swin2SR](https://huggingface.co/docs/transformers/main/model_doc/swin2sr)** (来自 University of Würzburg) 伴随论文 [Swin2SR: SwinV2 Transformer for Compressed Image Super-Resolution and Restoration](https://arxiv.org/abs/2209.11345) 由 Marcos V. Conde, Ui-Jin Choi, Maxime Burchi, Radu Timofte 发布。

+1. **[SwitchTransformers](https://huggingface.co/docs/transformers/main/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (来自 Google AI) 伴随论文 [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) 由 Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu 发布。

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (来自 Google AI) 伴随论文 [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) 由 Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu 发布。

1. **[Table Transformer](https://huggingface.co/docs/transformers/model_doc/table-transformer)** (来自 Microsoft Research) 伴随论文 [PubTables-1M: Towards Comprehensive Table Extraction From Unstructured Documents](https://arxiv.org/abs/2110.00061) 由 Brandon Smock, Rohith Pesala, Robin Abraham 发布。

diff --git a/README_zh-hant.md b/README_zh-hant.md

index 93b23aa84431..86f35867a0ec 100644

--- a/README_zh-hant.md

+++ b/README_zh-hant.md

@@ -374,7 +374,8 @@ conda install -c huggingface transformers

1. **[SqueezeBERT](https://huggingface.co/docs/transformers/model_doc/squeezebert)** (from Berkeley) released with the paper [SqueezeBERT: What can computer vision teach NLP about efficient neural networks?](https://arxiv.org/abs/2006.11316) by Forrest N. Iandola, Albert E. Shaw, Ravi Krishna, and Kurt W. Keutzer.

1. **[Swin Transformer](https://huggingface.co/docs/transformers/model_doc/swin)** (from Microsoft) released with the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030) by Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo.

1. **[Swin Transformer V2](https://huggingface.co/docs/transformers/model_doc/swinv2)** (from Microsoft) released with the paper [Swin Transformer V2: Scaling Up Capacity and Resolution](https://arxiv.org/abs/2111.09883) by Ze Liu, Han Hu, Yutong Lin, Zhuliang Yao, Zhenda Xie, Yixuan Wei, Jia Ning, Yue Cao, Zheng Zhang, Li Dong, Furu Wei, Baining Guo.

-1. **[SwitchTransformers](https://huggingface.co/docs/transformers/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

+1. **[Swin2SR](https://huggingface.co/docs/transformers/main/model_doc/swin2sr)** (from University of Würzburg) released with the paper [Swin2SR: SwinV2 Transformer for Compressed Image Super-Resolution and Restoration](https://arxiv.org/abs/2209.11345) by Marcos V. Conde, Ui-Jin Choi, Maxime Burchi, Radu Timofte.

+1. **[SwitchTransformers](https://huggingface.co/docs/transformers/main/model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (from Google AI) released with the paper [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[Table Transformer](https://huggingface.co/docs/transformers/model_doc/table-transformer)** (from Microsoft Research) released with the paper [PubTables-1M: Towards Comprehensive Table Extraction From Unstructured Documents](https://arxiv.org/abs/2110.00061) by Brandon Smock, Rohith Pesala, Robin Abraham.

diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml

index 11f5c0b0d4fc..362fd0791aaf 100644

--- a/docs/source/en/_toctree.yml

+++ b/docs/source/en/_toctree.yml

@@ -438,6 +438,8 @@

title: Swin Transformer

- local: model_doc/swinv2

title: Swin Transformer V2

+ - local: model_doc/swin2sr

+ title: Swin2SR

- local: model_doc/table-transformer

title: Table Transformer

- local: model_doc/timesformer

@@ -564,4 +566,4 @@

- local: internal/file_utils

title: General Utilities

title: Internal Helpers

- title: API

+ title: API

\ No newline at end of file

diff --git a/docs/source/en/index.mdx b/docs/source/en/index.mdx

index 01376ebed0ee..5d463e5f7dc6 100644

--- a/docs/source/en/index.mdx

+++ b/docs/source/en/index.mdx

@@ -175,6 +175,7 @@ The documentation is organized into five sections:

1. **[SqueezeBERT](model_doc/squeezebert)** (from Berkeley) released with the paper [SqueezeBERT: What can computer vision teach NLP about efficient neural networks?](https://arxiv.org/abs/2006.11316) by Forrest N. Iandola, Albert E. Shaw, Ravi Krishna, and Kurt W. Keutzer.

1. **[Swin Transformer](model_doc/swin)** (from Microsoft) released with the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030) by Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo.

1. **[Swin Transformer V2](model_doc/swinv2)** (from Microsoft) released with the paper [Swin Transformer V2: Scaling Up Capacity and Resolution](https://arxiv.org/abs/2111.09883) by Ze Liu, Han Hu, Yutong Lin, Zhuliang Yao, Zhenda Xie, Yixuan Wei, Jia Ning, Yue Cao, Zheng Zhang, Li Dong, Furu Wei, Baining Guo.

+1. **[Swin2SR](model_doc/swin2sr)** (from University of Würzburg) released with the paper [Swin2SR: SwinV2 Transformer for Compressed Image Super-Resolution and Restoration](https://arxiv.org/abs/2209.11345) by Marcos V. Conde, Ui-Jin Choi, Maxime Burchi, Radu Timofte.

1. **[SwitchTransformers](model_doc/switch_transformers)** (from Google) released with the paper [Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity](https://arxiv.org/abs/2101.03961) by William Fedus, Barret Zoph, Noam Shazeer.

1. **[T5](model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](model_doc/t5v1.1)** (from Google AI) released in the repository [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

@@ -342,6 +343,7 @@ Flax), PyTorch, and/or TensorFlow.

| SqueezeBERT | ✅ | ✅ | ✅ | ❌ | ❌ |

| Swin Transformer | ❌ | ❌ | ✅ | ✅ | ❌ |

| Swin Transformer V2 | ❌ | ❌ | ✅ | ❌ | ❌ |

+| Swin2SR | ❌ | ❌ | ✅ | ❌ | ❌ |

| SwitchTransformers | ❌ | ❌ | ✅ | ❌ | ❌ |

| T5 | ✅ | ✅ | ✅ | ✅ | ✅ |

| Table Transformer | ❌ | ❌ | ✅ | ❌ | ❌ |

diff --git a/docs/source/en/model_doc/swin2sr.mdx b/docs/source/en/model_doc/swin2sr.mdx

new file mode 100644

index 000000000000..edb073d1ee38

--- /dev/null

+++ b/docs/source/en/model_doc/swin2sr.mdx

@@ -0,0 +1,57 @@

+

+

+# Swin2SR

+

+## Overview

+

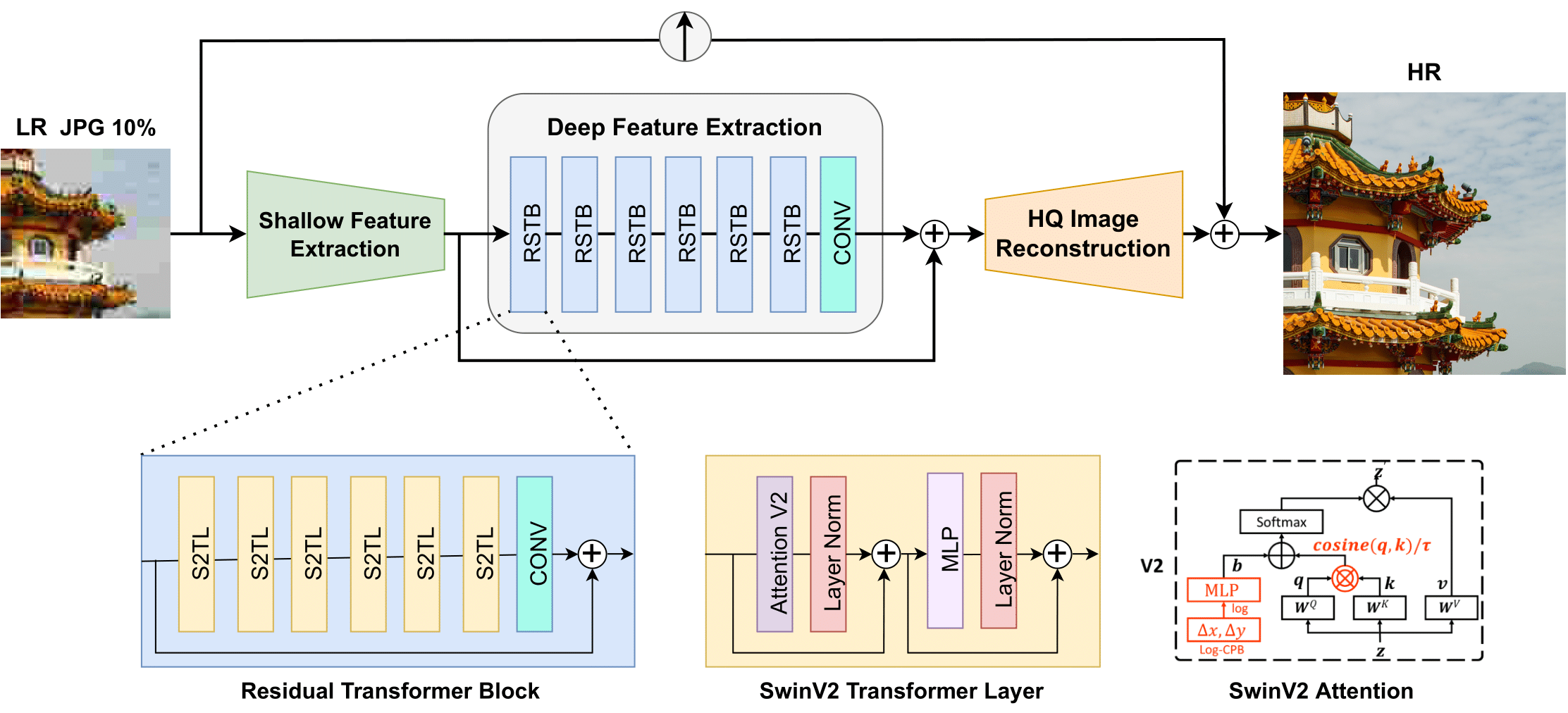

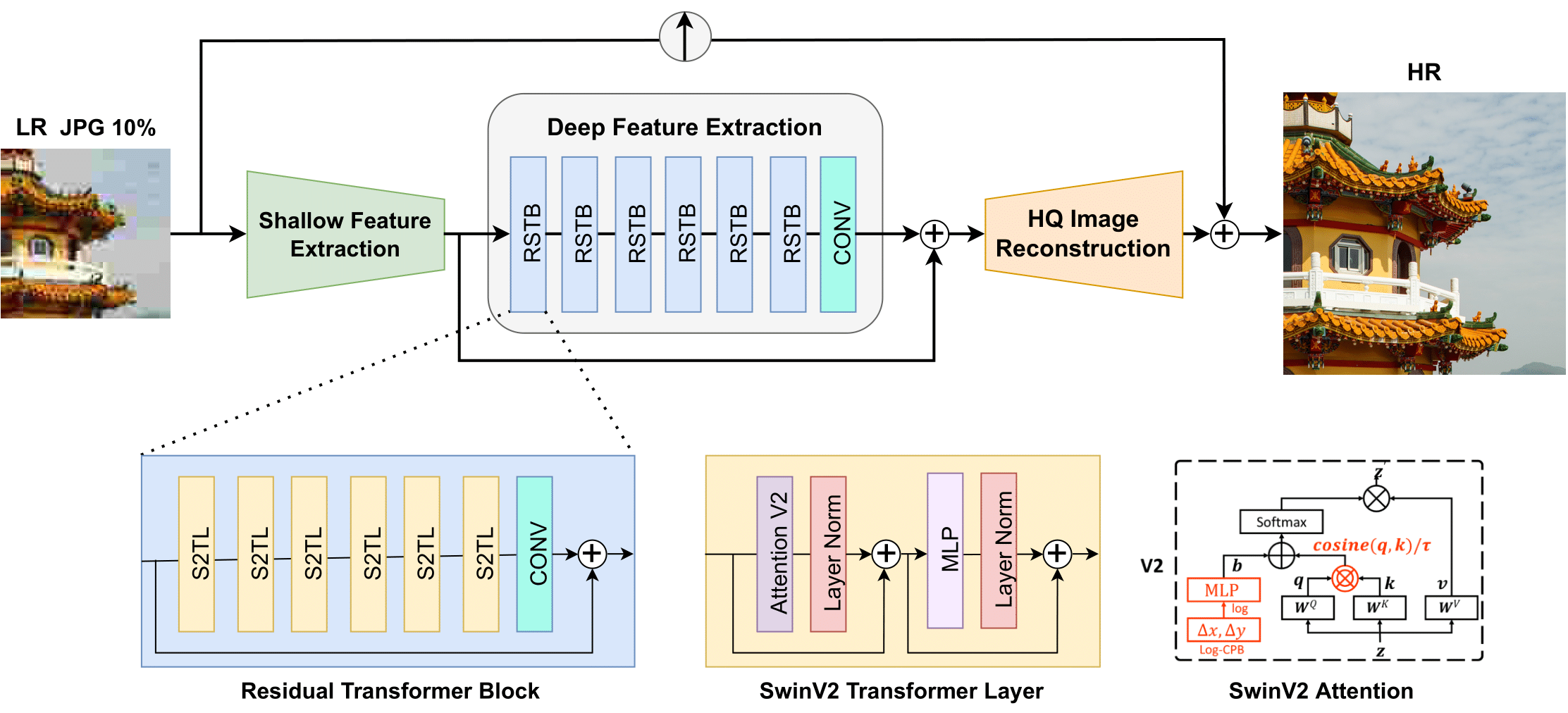

+The Swin2SR model was proposed in [Swin2SR: SwinV2 Transformer for Compressed Image Super-Resolution and Restoration](https://arxiv.org/abs/2209.11345) by Marcos V. Conde, Ui-Jin Choi, Maxime Burchi, Radu Timofte.

+Swin2R improves the [SwinIR](https://github.com/JingyunLiang/SwinIR/) model by incorporating [Swin Transformer v2](swinv2) layers which mitigates issues such as training instability, resolution gaps between pre-training

+and fine-tuning, and hunger on data.

+

+The abstract from the paper is the following:

+

+*Compression plays an important role on the efficient transmission and storage of images and videos through band-limited systems such as streaming services, virtual reality or videogames. However, compression unavoidably leads to artifacts and the loss of the original information, which may severely degrade the visual quality. For these reasons, quality enhancement of compressed images has become a popular research topic. While most state-of-the-art image restoration methods are based on convolutional neural networks, other transformers-based methods such as SwinIR, show impressive performance on these tasks.

+In this paper, we explore the novel Swin Transformer V2, to improve SwinIR for image super-resolution, and in particular, the compressed input scenario. Using this method we can tackle the major issues in training transformer vision models, such as training instability, resolution gaps between pre-training and fine-tuning, and hunger on data. We conduct experiments on three representative tasks: JPEG compression artifacts removal, image super-resolution (classical and lightweight), and compressed image super-resolution. Experimental results demonstrate that our method, Swin2SR, can improve the training convergence and performance of SwinIR, and is a top-5 solution at the "AIM 2022 Challenge on Super-Resolution of Compressed Image and Video".*

+

+ +

+ Swin2SR architecture. Taken from the original paper.

+

+This model was contributed by [nielsr](https://huggingface.co/nielsr).

+The original code can be found [here](https://github.com/mv-lab/swin2sr).

+

+## Resources

+

+Demo notebooks for Swin2SR can be found [here](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/Swin2SR).

+

+A demo Space for image super-resolution with SwinSR can be found [here](https://huggingface.co/spaces/jjourney1125/swin2sr).

+

+## Swin2SRImageProcessor

+

+[[autodoc]] Swin2SRImageProcessor

+ - preprocess

+

+## Swin2SRConfig

+

+[[autodoc]] Swin2SRConfig

+

+## Swin2SRModel

+

+[[autodoc]] Swin2SRModel

+ - forward

+

+## Swin2SRForImageSuperResolution

+

+[[autodoc]] Swin2SRForImageSuperResolution

+ - forward

diff --git a/src/transformers/__init__.py b/src/transformers/__init__.py

index f51cb5caf7ae..bcd2e1cebee6 100644

--- a/src/transformers/__init__.py

+++ b/src/transformers/__init__.py

@@ -373,6 +373,7 @@

"models.splinter": ["SPLINTER_PRETRAINED_CONFIG_ARCHIVE_MAP", "SplinterConfig", "SplinterTokenizer"],

"models.squeezebert": ["SQUEEZEBERT_PRETRAINED_CONFIG_ARCHIVE_MAP", "SqueezeBertConfig", "SqueezeBertTokenizer"],

"models.swin": ["SWIN_PRETRAINED_CONFIG_ARCHIVE_MAP", "SwinConfig"],

+ "models.swin2sr": ["SWIN2SR_PRETRAINED_CONFIG_ARCHIVE_MAP", "Swin2SRConfig"],

"models.swinv2": ["SWINV2_PRETRAINED_CONFIG_ARCHIVE_MAP", "Swinv2Config"],

"models.switch_transformers": ["SWITCH_TRANSFORMERS_PRETRAINED_CONFIG_ARCHIVE_MAP", "SwitchTransformersConfig"],

"models.t5": ["T5_PRETRAINED_CONFIG_ARCHIVE_MAP", "T5Config"],

@@ -779,6 +780,7 @@

_import_structure["models.perceiver"].extend(["PerceiverFeatureExtractor", "PerceiverImageProcessor"])

_import_structure["models.poolformer"].extend(["PoolFormerFeatureExtractor", "PoolFormerImageProcessor"])

_import_structure["models.segformer"].extend(["SegformerFeatureExtractor", "SegformerImageProcessor"])

+ _import_structure["models.swin2sr"].append("Swin2SRImageProcessor")

_import_structure["models.videomae"].extend(["VideoMAEFeatureExtractor", "VideoMAEImageProcessor"])

_import_structure["models.vilt"].extend(["ViltFeatureExtractor", "ViltImageProcessor", "ViltProcessor"])

_import_structure["models.vit"].extend(["ViTFeatureExtractor", "ViTImageProcessor"])

@@ -2087,6 +2089,14 @@

"SwinPreTrainedModel",

]

)

+ _import_structure["models.swin2sr"].extend(

+ [

+ "SWIN2SR_PRETRAINED_MODEL_ARCHIVE_LIST",

+ "Swin2SRForImageSuperResolution",

+ "Swin2SRModel",

+ "Swin2SRPreTrainedModel",

+ ]

+ )

_import_structure["models.swinv2"].extend(

[

"SWINV2_PRETRAINED_MODEL_ARCHIVE_LIST",

@@ -3630,6 +3640,7 @@

from .models.splinter import SPLINTER_PRETRAINED_CONFIG_ARCHIVE_MAP, SplinterConfig, SplinterTokenizer

from .models.squeezebert import SQUEEZEBERT_PRETRAINED_CONFIG_ARCHIVE_MAP, SqueezeBertConfig, SqueezeBertTokenizer

from .models.swin import SWIN_PRETRAINED_CONFIG_ARCHIVE_MAP, SwinConfig

+ from .models.swin2sr import SWIN2SR_PRETRAINED_CONFIG_ARCHIVE_MAP, Swin2SRConfig

from .models.swinv2 import SWINV2_PRETRAINED_CONFIG_ARCHIVE_MAP, Swinv2Config

from .models.switch_transformers import SWITCH_TRANSFORMERS_PRETRAINED_CONFIG_ARCHIVE_MAP, SwitchTransformersConfig

from .models.t5 import T5_PRETRAINED_CONFIG_ARCHIVE_MAP, T5Config

@@ -3978,6 +3989,7 @@

from .models.perceiver import PerceiverFeatureExtractor, PerceiverImageProcessor

from .models.poolformer import PoolFormerFeatureExtractor, PoolFormerImageProcessor

from .models.segformer import SegformerFeatureExtractor, SegformerImageProcessor

+ from .models.swin2sr import Swin2SRImageProcessor

from .models.videomae import VideoMAEFeatureExtractor, VideoMAEImageProcessor

from .models.vilt import ViltFeatureExtractor, ViltImageProcessor, ViltProcessor

from .models.vit import ViTFeatureExtractor, ViTImageProcessor

@@ -5052,6 +5064,12 @@

SwinModel,

SwinPreTrainedModel,

)

+ from .models.swin2sr import (

+ SWIN2SR_PRETRAINED_MODEL_ARCHIVE_LIST,

+ Swin2SRForImageSuperResolution,

+ Swin2SRModel,

+ Swin2SRPreTrainedModel,

+ )

from .models.swinv2 import (

SWINV2_PRETRAINED_MODEL_ARCHIVE_LIST,

Swinv2ForImageClassification,

diff --git a/src/transformers/modeling_outputs.py b/src/transformers/modeling_outputs.py

index 57a01fa7c69c..1a38d75b4612 100644

--- a/src/transformers/modeling_outputs.py

+++ b/src/transformers/modeling_outputs.py

@@ -1204,6 +1204,34 @@ class DepthEstimatorOutput(ModelOutput):

attentions: Optional[Tuple[torch.FloatTensor]] = None

+@dataclass

+class ImageSuperResolutionOutput(ModelOutput):

+ """

+ Base class for outputs of image super resolution models.

+

+ Args:

+ loss (`torch.FloatTensor` of shape `(1,)`, *optional*, returned when `labels` is provided):

+ Reconstruction loss.

+ reconstruction (`torch.FloatTensor` of shape `(batch_size, num_channels, height, width)`):

+ Reconstructed images, possibly upscaled.

+ hidden_states (`tuple(torch.FloatTensor)`, *optional*, returned when `output_hidden_states=True` is passed or when `config.output_hidden_states=True`):

+ Tuple of `torch.FloatTensor` (one for the output of the embeddings, if the model has an embedding layer, +

+ one for the output of each stage) of shape `(batch_size, sequence_length, hidden_size)`. Hidden-states

+ (also called feature maps) of the model at the output of each stage.

+ attentions (`tuple(torch.FloatTensor)`, *optional*, returned when `output_attentions=True` is passed or when `config.output_attentions=True`):

+ Tuple of `torch.FloatTensor` (one for each layer) of shape `(batch_size, num_heads, patch_size,

+ sequence_length)`.

+

+ Attentions weights after the attention softmax, used to compute the weighted average in the self-attention

+ heads.

+ """

+

+ loss: Optional[torch.FloatTensor] = None

+ reconstruction: torch.FloatTensor = None

+ hidden_states: Optional[Tuple[torch.FloatTensor]] = None

+ attentions: Optional[Tuple[torch.FloatTensor]] = None

+

+

@dataclass

class Wav2Vec2BaseModelOutput(ModelOutput):

"""

diff --git a/src/transformers/models/__init__.py b/src/transformers/models/__init__.py

index 9c73d52fc174..342044e1f38d 100644

--- a/src/transformers/models/__init__.py

+++ b/src/transformers/models/__init__.py

@@ -148,6 +148,7 @@

splinter,

squeezebert,

swin,

+ swin2sr,

swinv2,

switch_transformers,

t5,

diff --git a/src/transformers/models/auto/configuration_auto.py b/src/transformers/models/auto/configuration_auto.py

index 4c7e4280e719..cb079657f839 100644

--- a/src/transformers/models/auto/configuration_auto.py

+++ b/src/transformers/models/auto/configuration_auto.py

@@ -145,6 +145,7 @@

("splinter", "SplinterConfig"),

("squeezebert", "SqueezeBertConfig"),

("swin", "SwinConfig"),

+ ("swin2sr", "Swin2SRConfig"),

("swinv2", "Swinv2Config"),

("switch_transformers", "SwitchTransformersConfig"),

("t5", "T5Config"),

@@ -291,6 +292,7 @@

("splinter", "SPLINTER_PRETRAINED_CONFIG_ARCHIVE_MAP"),

("squeezebert", "SQUEEZEBERT_PRETRAINED_CONFIG_ARCHIVE_MAP"),

("swin", "SWIN_PRETRAINED_CONFIG_ARCHIVE_MAP"),

+ ("swin2sr", "SWIN2SR_PRETRAINED_CONFIG_ARCHIVE_MAP"),

("swinv2", "SWINV2_PRETRAINED_CONFIG_ARCHIVE_MAP"),

("switch_transformers", "SWITCH_TRANSFORMERS_PRETRAINED_CONFIG_ARCHIVE_MAP"),

("t5", "T5_PRETRAINED_CONFIG_ARCHIVE_MAP"),

@@ -459,6 +461,7 @@

("splinter", "Splinter"),

("squeezebert", "SqueezeBERT"),

("swin", "Swin Transformer"),

+ ("swin2sr", "Swin2SR"),

("swinv2", "Swin Transformer V2"),

("switch_transformers", "SwitchTransformers"),

("t5", "T5"),

diff --git a/src/transformers/models/auto/image_processing_auto.py b/src/transformers/models/auto/image_processing_auto.py

index ea08c0fe8dc5..a2065abe5827 100644

--- a/src/transformers/models/auto/image_processing_auto.py

+++ b/src/transformers/models/auto/image_processing_auto.py

@@ -73,6 +73,7 @@

("resnet", "ConvNextImageProcessor"),

("segformer", "SegformerImageProcessor"),

("swin", "ViTImageProcessor"),

+ ("swin2sr", "Swin2SRImageProcessor"),

("swinv2", "ViTImageProcessor"),

("table-transformer", "DetrImageProcessor"),

("timesformer", "VideoMAEImageProcessor"),

diff --git a/src/transformers/models/auto/modeling_auto.py b/src/transformers/models/auto/modeling_auto.py

index 7fef8a21a03b..ad6285fc011c 100644

--- a/src/transformers/models/auto/modeling_auto.py

+++ b/src/transformers/models/auto/modeling_auto.py

@@ -141,6 +141,7 @@

("splinter", "SplinterModel"),

("squeezebert", "SqueezeBertModel"),

("swin", "SwinModel"),

+ ("swin2sr", "Swin2SRModel"),

("swinv2", "Swinv2Model"),

("switch_transformers", "SwitchTransformersModel"),

("t5", "T5Model"),

diff --git a/src/transformers/models/swin2sr/__init__.py b/src/transformers/models/swin2sr/__init__.py

new file mode 100644

index 000000000000..3b0c885a7dc3

--- /dev/null

+++ b/src/transformers/models/swin2sr/__init__.py

@@ -0,0 +1,80 @@

+# flake8: noqa

+# There's no way to ignore "F401 '...' imported but unused" warnings in this

+# module, but to preserve other warnings. So, don't check this module at all.

+

+# Copyright 2022 The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+from typing import TYPE_CHECKING

+

+# rely on isort to merge the imports

+from ...utils import OptionalDependencyNotAvailable, _LazyModule, is_torch_available, is_vision_available

+

+

+_import_structure = {

+ "configuration_swin2sr": ["SWIN2SR_PRETRAINED_CONFIG_ARCHIVE_MAP", "Swin2SRConfig"],

+}

+

+

+try:

+ if not is_torch_available():

+ raise OptionalDependencyNotAvailable()

+except OptionalDependencyNotAvailable:

+ pass

+else:

+ _import_structure["modeling_swin2sr"] = [

+ "SWIN2SR_PRETRAINED_MODEL_ARCHIVE_LIST",

+ "Swin2SRForImageSuperResolution",

+ "Swin2SRModel",

+ "Swin2SRPreTrainedModel",

+ ]

+

+

+try:

+ if not is_vision_available():

+ raise OptionalDependencyNotAvailable()

+except OptionalDependencyNotAvailable:

+ pass

+else:

+ _import_structure["image_processing_swin2sr"] = ["Swin2SRImageProcessor"]

+

+

+if TYPE_CHECKING:

+ from .configuration_swin2sr import SWIN2SR_PRETRAINED_CONFIG_ARCHIVE_MAP, Swin2SRConfig

+

+ try:

+ if not is_torch_available():

+ raise OptionalDependencyNotAvailable()

+ except OptionalDependencyNotAvailable:

+ pass

+ else:

+ from .modeling_swin2sr import (

+ SWIN2SR_PRETRAINED_MODEL_ARCHIVE_LIST,

+ Swin2SRForImageSuperResolution,

+ Swin2SRModel,

+ Swin2SRPreTrainedModel,

+ )

+

+ try:

+ if not is_vision_available():

+ raise OptionalDependencyNotAvailable()

+ except OptionalDependencyNotAvailable:

+ pass

+ else:

+ from .image_processing_swin2sr import Swin2SRImageProcessor

+

+

+else:

+ import sys

+

+ sys.modules[__name__] = _LazyModule(__name__, globals()["__file__"], _import_structure, module_spec=__spec__)

diff --git a/src/transformers/models/swin2sr/configuration_swin2sr.py b/src/transformers/models/swin2sr/configuration_swin2sr.py

new file mode 100644

index 000000000000..4547b5848a1b

--- /dev/null

+++ b/src/transformers/models/swin2sr/configuration_swin2sr.py

@@ -0,0 +1,156 @@

+# coding=utf-8

+# Copyright 2022 The HuggingFace Inc. team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+""" Swin2SR Transformer model configuration"""

+

+from ...configuration_utils import PretrainedConfig

+from ...utils import logging

+

+

+logger = logging.get_logger(__name__)

+

+SWIN2SR_PRETRAINED_CONFIG_ARCHIVE_MAP = {

+ "caidas/swin2sr-classicalsr-x2-64": (

+ "https://huggingface.co/caidas/swin2sr-classicalsr-x2-64/resolve/main/config.json"

+ ),

+}

+

+

+class Swin2SRConfig(PretrainedConfig):

+ r"""

+ This is the configuration class to store the configuration of a [`Swin2SRModel`]. It is used to instantiate a Swin

+ Transformer v2 model according to the specified arguments, defining the model architecture. Instantiating a

+ configuration with the defaults will yield a similar configuration to that of the Swin Transformer v2

+ [caidas/swin2sr-classicalsr-x2-64](https://huggingface.co/caidas/swin2sr-classicalsr-x2-64) architecture.

+

+ Configuration objects inherit from [`PretrainedConfig`] and can be used to control the model outputs. Read the

+ documentation from [`PretrainedConfig`] for more information.

+

+ Args:

+ image_size (`int`, *optional*, defaults to 64):

+ The size (resolution) of each image.

+ patch_size (`int`, *optional*, defaults to 1):

+ The size (resolution) of each patch.

+ num_channels (`int`, *optional*, defaults to 3):

+ The number of input channels.

+ embed_dim (`int`, *optional*, defaults to 180):

+ Dimensionality of patch embedding.

+ depths (`list(int)`, *optional*, defaults to `[6, 6, 6, 6, 6, 6]`):

+ Depth of each layer in the Transformer encoder.

+ num_heads (`list(int)`, *optional*, defaults to `[6, 6, 6, 6, 6, 6]`):

+ Number of attention heads in each layer of the Transformer encoder.

+ window_size (`int`, *optional*, defaults to 8):

+ Size of windows.

+ mlp_ratio (`float`, *optional*, defaults to 2.0):

+ Ratio of MLP hidden dimensionality to embedding dimensionality.

+ qkv_bias (`bool`, *optional*, defaults to `True`):

+ Whether or not a learnable bias should be added to the queries, keys and values.

+ hidden_dropout_prob (`float`, *optional*, defaults to 0.0):

+ The dropout probability for all fully connected layers in the embeddings and encoder.

+ attention_probs_dropout_prob (`float`, *optional*, defaults to 0.0):

+ The dropout ratio for the attention probabilities.

+ drop_path_rate (`float`, *optional*, defaults to 0.1):

+ Stochastic depth rate.

+ hidden_act (`str` or `function`, *optional*, defaults to `"gelu"`):

+ The non-linear activation function (function or string) in the encoder. If string, `"gelu"`, `"relu"`,

+ `"selu"` and `"gelu_new"` are supported.

+ use_absolute_embeddings (`bool`, *optional*, defaults to `False`):

+ Whether or not to add absolute position embeddings to the patch embeddings.

+ patch_norm (`bool`, *optional*, defaults to `True`):

+ Whether or not to add layer normalization after patch embedding.

+ initializer_range (`float`, *optional*, defaults to 0.02):

+ The standard deviation of the truncated_normal_initializer for initializing all weight matrices.

+ layer_norm_eps (`float`, *optional*, defaults to 1e-12):

+ The epsilon used by the layer normalization layers.

+ upscale (`int`, *optional*, defaults to 2):

+ The upscale factor for the image. 2/3/4/8 for image super resolution, 1 for denoising and compress artifact

+ reduction

+ img_range (`float`, *optional*, defaults to 1.):

+ The range of the values of the input image.

+ resi_connection (`str`, *optional*, defaults to `"1conv"`):

+ The convolutional block to use before the residual connection in each stage.

+ upsampler (`str`, *optional*, defaults to `"pixelshuffle"`):

+ The reconstruction reconstruction module. Can be 'pixelshuffle'/'pixelshuffledirect'/'nearest+conv'/None.

+

+ Example:

+

+ ```python

+ >>> from transformers import Swin2SRConfig, Swin2SRModel

+

+ >>> # Initializing a Swin2SR caidas/swin2sr-classicalsr-x2-64 style configuration

+ >>> configuration = Swin2SRConfig()

+

+ >>> # Initializing a model (with random weights) from the caidas/swin2sr-classicalsr-x2-64 style configuration

+ >>> model = Swin2SRModel(configuration)

+

+ >>> # Accessing the model configuration

+ >>> configuration = model.config

+ ```"""

+ model_type = "swin2sr"

+

+ attribute_map = {

+ "hidden_size": "embed_dim",

+ "num_attention_heads": "num_heads",

+ "num_hidden_layers": "num_layers",

+ }

+

+ def __init__(

+ self,

+ image_size=64,

+ patch_size=1,

+ num_channels=3,

+ embed_dim=180,

+ depths=[6, 6, 6, 6, 6, 6],

+ num_heads=[6, 6, 6, 6, 6, 6],

+ window_size=8,

+ mlp_ratio=2.0,

+ qkv_bias=True,

+ hidden_dropout_prob=0.0,

+ attention_probs_dropout_prob=0.0,

+ drop_path_rate=0.1,

+ hidden_act="gelu",

+ use_absolute_embeddings=False,

+ patch_norm=True,

+ initializer_range=0.02,

+ layer_norm_eps=1e-5,

+ upscale=2,

+ img_range=1.0,

+ resi_connection="1conv",

+ upsampler="pixelshuffle",

+ **kwargs

+ ):

+ super().__init__(**kwargs)

+

+ self.image_size = image_size

+ self.patch_size = patch_size

+ self.num_channels = num_channels

+ self.embed_dim = embed_dim

+ self.depths = depths

+ self.num_layers = len(depths)

+ self.num_heads = num_heads

+ self.window_size = window_size

+ self.mlp_ratio = mlp_ratio

+ self.qkv_bias = qkv_bias

+ self.hidden_dropout_prob = hidden_dropout_prob

+ self.attention_probs_dropout_prob = attention_probs_dropout_prob

+ self.drop_path_rate = drop_path_rate

+ self.hidden_act = hidden_act

+ self.use_absolute_embeddings = use_absolute_embeddings

+ self.path_norm = patch_norm

+ self.layer_norm_eps = layer_norm_eps

+ self.initializer_range = initializer_range

+ self.upscale = upscale

+ self.img_range = img_range

+ self.resi_connection = resi_connection

+ self.upsampler = upsampler

diff --git a/src/transformers/models/swin2sr/convert_swin2sr_original_to_pytorch.py b/src/transformers/models/swin2sr/convert_swin2sr_original_to_pytorch.py

new file mode 100644

index 000000000000..38a11496f7ee

--- /dev/null

+++ b/src/transformers/models/swin2sr/convert_swin2sr_original_to_pytorch.py

@@ -0,0 +1,278 @@

+# coding=utf-8

+# Copyright 2022 The HuggingFace Inc. team.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""Convert Swin2SR checkpoints from the original repository. URL: https://github.com/mv-lab/swin2sr"""

+

+import argparse

+

+import torch

+from PIL import Image

+from torchvision.transforms import Compose, Normalize, Resize, ToTensor

+

+import requests

+from transformers import Swin2SRConfig, Swin2SRForImageSuperResolution, Swin2SRImageProcessor

+

+

+def get_config(checkpoint_url):

+ config = Swin2SRConfig()

+

+ if "Swin2SR_ClassicalSR_X4_64" in checkpoint_url:

+ config.upscale = 4

+ elif "Swin2SR_CompressedSR_X4_48" in checkpoint_url:

+ config.upscale = 4

+ config.image_size = 48

+ config.upsampler = "pixelshuffle_aux"

+ elif "Swin2SR_Lightweight_X2_64" in checkpoint_url:

+ config.depths = [6, 6, 6, 6]

+ config.embed_dim = 60

+ config.num_heads = [6, 6, 6, 6]

+ config.upsampler = "pixelshuffledirect"

+ elif "Swin2SR_RealworldSR_X4_64_BSRGAN_PSNR" in checkpoint_url:

+ config.upscale = 4

+ config.upsampler = "nearest+conv"

+ elif "Swin2SR_Jpeg_dynamic" in checkpoint_url:

+ config.num_channels = 1

+ config.upscale = 1

+ config.image_size = 126

+ config.window_size = 7

+ config.img_range = 255.0

+ config.upsampler = ""

+

+ return config

+

+

+def rename_key(name, config):

+ if "patch_embed.proj" in name and "layers" not in name:

+ name = name.replace("patch_embed.proj", "embeddings.patch_embeddings.projection")

+ if "patch_embed.norm" in name:

+ name = name.replace("patch_embed.norm", "embeddings.patch_embeddings.layernorm")

+ if "layers" in name:

+ name = name.replace("layers", "encoder.stages")

+ if "residual_group.blocks" in name:

+ name = name.replace("residual_group.blocks", "layers")

+ if "attn.proj" in name:

+ name = name.replace("attn.proj", "attention.output.dense")

+ if "attn" in name:

+ name = name.replace("attn", "attention.self")

+ if "norm1" in name:

+ name = name.replace("norm1", "layernorm_before")

+ if "norm2" in name:

+ name = name.replace("norm2", "layernorm_after")

+ if "mlp.fc1" in name:

+ name = name.replace("mlp.fc1", "intermediate.dense")

+ if "mlp.fc2" in name:

+ name = name.replace("mlp.fc2", "output.dense")

+ if "q_bias" in name:

+ name = name.replace("q_bias", "query.bias")

+ if "k_bias" in name:

+ name = name.replace("k_bias", "key.bias")

+ if "v_bias" in name:

+ name = name.replace("v_bias", "value.bias")

+ if "cpb_mlp" in name:

+ name = name.replace("cpb_mlp", "continuous_position_bias_mlp")

+ if "patch_embed.proj" in name:

+ name = name.replace("patch_embed.proj", "patch_embed.projection")

+

+ if name == "norm.weight":

+ name = "layernorm.weight"

+ if name == "norm.bias":

+ name = "layernorm.bias"

+

+ if "conv_first" in name:

+ name = name.replace("conv_first", "first_convolution")

+

+ if (

+ "upsample" in name

+ or "conv_before_upsample" in name

+ or "conv_bicubic" in name

+ or "conv_up" in name

+ or "conv_hr" in name

+ or "conv_last" in name

+ or "aux" in name

+ ):

+ # heads

+ if "conv_last" in name:

+ name = name.replace("conv_last", "final_convolution")

+ if config.upsampler in ["pixelshuffle", "pixelshuffle_aux", "nearest+conv"]:

+ if "conv_before_upsample.0" in name:

+ name = name.replace("conv_before_upsample.0", "conv_before_upsample")

+ if "upsample.0" in name:

+ name = name.replace("upsample.0", "upsample.convolution_0")

+ if "upsample.2" in name:

+ name = name.replace("upsample.2", "upsample.convolution_1")

+ name = "upsample." + name

+ elif config.upsampler == "pixelshuffledirect":

+ name = name.replace("upsample.0.weight", "upsample.conv.weight")

+ name = name.replace("upsample.0.bias", "upsample.conv.bias")

+ else:

+ pass

+ else:

+ name = "swin2sr." + name

+

+ return name

+

+

+def convert_state_dict(orig_state_dict, config):

+ for key in orig_state_dict.copy().keys():

+ val = orig_state_dict.pop(key)

+

+ if "qkv" in key:

+ key_split = key.split(".")

+ stage_num = int(key_split[1])

+ block_num = int(key_split[4])

+ dim = config.embed_dim

+

+ if "weight" in key:

+ orig_state_dict[

+ f"swin2sr.encoder.stages.{stage_num}.layers.{block_num}.attention.self.query.weight"

+ ] = val[:dim, :]

+ orig_state_dict[

+ f"swin2sr.encoder.stages.{stage_num}.layers.{block_num}.attention.self.key.weight"

+ ] = val[dim : dim * 2, :]

+ orig_state_dict[

+ f"swin2sr.encoder.stages.{stage_num}.layers.{block_num}.attention.self.value.weight"

+ ] = val[-dim:, :]

+ else:

+ orig_state_dict[

+ f"swin2sr.encoder.stages.{stage_num}.layers.{block_num}.attention.self.query.bias"

+ ] = val[:dim]

+ orig_state_dict[

+ f"swin2sr.encoder.stages.{stage_num}.layers.{block_num}.attention.self.key.bias"

+ ] = val[dim : dim * 2]

+ orig_state_dict[

+ f"swin2sr.encoder.stages.{stage_num}.layers.{block_num}.attention.self.value.bias"

+ ] = val[-dim:]

+ pass

+ else:

+ orig_state_dict[rename_key(key, config)] = val

+

+ return orig_state_dict

+

+

+def convert_swin2sr_checkpoint(checkpoint_url, pytorch_dump_folder_path, push_to_hub):

+ config = get_config(checkpoint_url)

+ model = Swin2SRForImageSuperResolution(config)

+ model.eval()

+

+ state_dict = torch.hub.load_state_dict_from_url(checkpoint_url, map_location="cpu")

+ new_state_dict = convert_state_dict(state_dict, config)

+ missing_keys, unexpected_keys = model.load_state_dict(new_state_dict, strict=False)

+

+ if len(missing_keys) > 0:

+ raise ValueError("Missing keys when converting: {}".format(missing_keys))

+ for key in unexpected_keys:

+ if not ("relative_position_index" in key or "relative_coords_table" in key or "self_mask" in key):

+ raise ValueError(f"Unexpected key {key} in state_dict")

+

+ # verify values

+ url = "https://github.com/mv-lab/swin2sr/blob/main/testsets/real-inputs/shanghai.jpg?raw=true"

+ image = Image.open(requests.get(url, stream=True).raw).convert("RGB")

+ processor = Swin2SRImageProcessor()

+ # pixel_values = processor(image, return_tensors="pt").pixel_values

+

+ image_size = 126 if "Jpeg" in checkpoint_url else 256

+ transforms = Compose(

+ [

+ Resize((image_size, image_size)),

+ ToTensor(),

+ Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

+ ]

+ )

+ pixel_values = transforms(image).unsqueeze(0)

+

+ if config.num_channels == 1:

+ pixel_values = pixel_values[:, 0, :, :].unsqueeze(1)

+

+ outputs = model(pixel_values)

+

+ # assert values

+ if "Swin2SR_ClassicalSR_X2_64" in checkpoint_url:

+ expected_shape = torch.Size([1, 3, 512, 512])

+ expected_slice = torch.tensor(

+ [[-0.7087, -0.7138, -0.6721], [-0.8340, -0.8095, -0.7298], [-0.9149, -0.8414, -0.7940]]

+ )

+ elif "Swin2SR_ClassicalSR_X4_64" in checkpoint_url:

+ expected_shape = torch.Size([1, 3, 1024, 1024])

+ expected_slice = torch.tensor(

+ [[-0.7775, -0.8105, -0.8933], [-0.7764, -0.8356, -0.9225], [-0.7976, -0.8686, -0.9579]]

+ )

+ elif "Swin2SR_CompressedSR_X4_48" in checkpoint_url:

+ # TODO values didn't match exactly here

+ expected_shape = torch.Size([1, 3, 1024, 1024])

+ expected_slice = torch.tensor(

+ [[-0.8035, -0.7504, -0.7491], [-0.8538, -0.8124, -0.7782], [-0.8804, -0.8651, -0.8493]]

+ )

+ elif "Swin2SR_Lightweight_X2_64" in checkpoint_url:

+ expected_shape = torch.Size([1, 3, 512, 512])

+ expected_slice = torch.tensor(

+ [[-0.7669, -0.8662, -0.8767], [-0.8810, -0.9962, -0.9820], [-0.9340, -1.0322, -1.1149]]

+ )

+ elif "Swin2SR_RealworldSR_X4_64_BSRGAN_PSNR" in checkpoint_url:

+ expected_shape = torch.Size([1, 3, 1024, 1024])

+ expected_slice = torch.tensor(

+ [[-0.5238, -0.5557, -0.6321], [-0.6016, -0.5903, -0.6391], [-0.6244, -0.6334, -0.6889]]

+ )

+

+ assert (

+ outputs.reconstruction.shape == expected_shape

+ ), f"Shape of reconstruction should be {expected_shape}, but is {outputs.reconstruction.shape}"

+ assert torch.allclose(outputs.reconstruction[0, 0, :3, :3], expected_slice, atol=1e-3)

+ print("Looks ok!")

+

+ url_to_name = {

+ "https://github.com/mv-lab/swin2sr/releases/download/v0.0.1/Swin2SR_ClassicalSR_X2_64.pth": (

+ "swin2SR-classical-sr-x2-64"

+ ),

+ "https://github.com/mv-lab/swin2sr/releases/download/v0.0.1/Swin2SR_ClassicalSR_X4_64.pth": (

+ "swin2SR-classical-sr-x4-64"

+ ),

+ "https://github.com/mv-lab/swin2sr/releases/download/v0.0.1/Swin2SR_CompressedSR_X4_48.pth": (

+ "swin2SR-compressed-sr-x4-48"

+ ),

+ "https://github.com/mv-lab/swin2sr/releases/download/v0.0.1/Swin2SR_Lightweight_X2_64.pth": (

+ "swin2SR-lightweight-x2-64"

+ ),

+ "https://github.com/mv-lab/swin2sr/releases/download/v0.0.1/Swin2SR_RealworldSR_X4_64_BSRGAN_PSNR.pth": (

+ "swin2SR-realworld-sr-x4-64-bsrgan-psnr"

+ ),

+ }

+ model_name = url_to_name[checkpoint_url]

+

+ if pytorch_dump_folder_path is not None:

+ print(f"Saving model {model_name} to {pytorch_dump_folder_path}")

+ model.save_pretrained(pytorch_dump_folder_path)

+ print(f"Saving image processor to {pytorch_dump_folder_path}")

+ processor.save_pretrained(pytorch_dump_folder_path)

+

+ if push_to_hub:

+ model.push_to_hub(f"caidas/{model_name}")

+ processor.push_to_hub(f"caidas/{model_name}")

+

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser()

+ # Required parameters

+ parser.add_argument(

+ "--checkpoint_url",

+ default="https://github.com/mv-lab/swin2sr/releases/download/v0.0.1/Swin2SR_ClassicalSR_X2_64.pth",

+ type=str,

+ help="URL of the original Swin2SR checkpoint you'd like to convert.",

+ )

+ parser.add_argument(

+ "--pytorch_dump_folder_path", default=None, type=str, help="Path to the output PyTorch model directory."

+ )

+ parser.add_argument("--push_to_hub", action="store_true", help="Whether to push the converted model to the hub.")

+

+ args = parser.parse_args()

+ convert_swin2sr_checkpoint(args.checkpoint_url, args.pytorch_dump_folder_path, args.push_to_hub)

diff --git a/src/transformers/models/swin2sr/image_processing_swin2sr.py b/src/transformers/models/swin2sr/image_processing_swin2sr.py

new file mode 100644

index 000000000000..c5c5458d8aa7

--- /dev/null

+++ b/src/transformers/models/swin2sr/image_processing_swin2sr.py

@@ -0,0 +1,175 @@

+# coding=utf-8

+# Copyright 2022 The HuggingFace Inc. team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""Image processor class for Swin2SR."""

+

+from typing import Optional, Union

+

+import numpy as np

+

+from transformers.utils.generic import TensorType

+

+from ...image_processing_utils import BaseImageProcessor, BatchFeature

+from ...image_transforms import get_image_size, pad, rescale, to_channel_dimension_format

+from ...image_utils import ChannelDimension, ImageInput, is_batched, to_numpy_array, valid_images

+from ...utils import logging

+

+

+logger = logging.get_logger(__name__)

+

+

+class Swin2SRImageProcessor(BaseImageProcessor):

+ r"""

+ Constructs a Swin2SR image processor.

+

+ Args:

+ do_rescale (`bool`, *optional*, defaults to `True`):

+ Whether to rescale the image by the specified scale `rescale_factor`. Can be overridden by the `do_rescale`

+ parameter in the `preprocess` method.

+ rescale_factor (`int` or `float`, *optional*, defaults to `1/255`):

+ Scale factor to use if rescaling the image. Can be overridden by the `rescale_factor` parameter in the

+ `preprocess` method.

+ """

+

+ model_input_names = ["pixel_values"]

+

+ def __init__(

+ self,

+ do_rescale: bool = True,

+ rescale_factor: Union[int, float] = 1 / 255,

+ do_pad: bool = True,

+ pad_size: int = 8,

+ **kwargs

+ ) -> None:

+ super().__init__(**kwargs)

+

+ self.do_rescale = do_rescale

+ self.rescale_factor = rescale_factor

+ self.do_pad = do_pad

+ self.pad_size = pad_size

+

+ def rescale(

+ self, image: np.ndarray, scale: float, data_format: Optional[Union[str, ChannelDimension]] = None, **kwargs

+ ) -> np.ndarray:

+ """

+ Rescale an image by a scale factor. image = image * scale.

+

+ Args:

+ image (`np.ndarray`):

+ Image to rescale.

+ scale (`float`):

+ The scaling factor to rescale pixel values by.

+ data_format (`str` or `ChannelDimension`, *optional*):

+ The channel dimension format for the output image. If unset, the channel dimension format of the input

+ image is used. Can be one of:

+ - `"channels_first"` or `ChannelDimension.FIRST`: image in (num_channels, height, width) format.

+ - `"channels_last"` or `ChannelDimension.LAST`: image in (height, width, num_channels) format.

+

+ Returns:

+ `np.ndarray`: The rescaled image.

+ """

+ return rescale(image, scale=scale, data_format=data_format, **kwargs)

+

+ def pad(self, image: np.ndarray, size: int, data_format: Optional[Union[str, ChannelDimension]] = None):

+ """

+ Pad an image to make the height and width divisible by `size`.

+

+ Args:

+ image (`np.ndarray`):

+ Image to pad.

+ size (`int`):

+ The size to make the height and width divisible by.

+ data_format (`str` or `ChannelDimension`, *optional*):

+ The channel dimension format for the output image. If unset, the channel dimension format of the input

+ image is used. Can be one of:

+ - `"channels_first"` or `ChannelDimension.FIRST`: image in (num_channels, height, width) format.

+ - `"channels_last"` or `ChannelDimension.LAST`: image in (height, width, num_channels) format.

+

+ Returns:

+ `np.ndarray`: The padded image.

+ """

+ old_height, old_width = get_image_size(image)

+ pad_height = (old_height // size + 1) * size - old_height

+ pad_width = (old_width // size + 1) * size - old_width

+

+ return pad(image, ((0, pad_height), (0, pad_width)), mode="symmetric", data_format=data_format)

+

+ def preprocess(

+ self,

+ images: ImageInput,

+ do_rescale: Optional[bool] = None,

+ rescale_factor: Optional[float] = None,

+ do_pad: Optional[bool] = None,

+ pad_size: Optional[int] = None,

+ return_tensors: Optional[Union[str, TensorType]] = None,

+ data_format: Union[str, ChannelDimension] = ChannelDimension.FIRST,

+ **kwargs,

+ ):

+ """

+ Preprocess an image or batch of images.

+

+ Args:

+ images (`ImageInput`):

+ Image to preprocess.

+ do_rescale (`bool`, *optional*, defaults to `self.do_rescale`):

+ Whether to rescale the image values between [0 - 1].

+ rescale_factor (`float`, *optional*, defaults to `self.rescale_factor`):

+ Rescale factor to rescale the image by if `do_rescale` is set to `True`.

+ do_pad (`bool`, *optional*, defaults to `True`):

+ Whether to pad the image to make the height and width divisible by `window_size`.

+ pad_size (`int`, *optional*, defaults to `32`):

+ The size of the sliding window for the local attention.

+ return_tensors (`str` or `TensorType`, *optional*):

+ The type of tensors to return. Can be one of:

+ - Unset: Return a list of `np.ndarray`.

+ - `TensorType.TENSORFLOW` or `'tf'`: Return a batch of type `tf.Tensor`.

+ - `TensorType.PYTORCH` or `'pt'`: Return a batch of type `torch.Tensor`.

+ - `TensorType.NUMPY` or `'np'`: Return a batch of type `np.ndarray`.

+ - `TensorType.JAX` or `'jax'`: Return a batch of type `jax.numpy.ndarray`.

+ data_format (`ChannelDimension` or `str`, *optional*, defaults to `ChannelDimension.FIRST`):

+ The channel dimension format for the output image. Can be one of:

+ - `"channels_first"` or `ChannelDimension.FIRST`: image in (num_channels, height, width) format.

+ - `"channels_last"` or `ChannelDimension.LAST`: image in (height, width, num_channels) format.

+ - Unset: Use the channel dimension format of the input image.

+ """

+ do_rescale = do_rescale if do_rescale is not None else self.do_rescale

+ rescale_factor = rescale_factor if rescale_factor is not None else self.rescale_factor

+ do_pad = do_pad if do_pad is not None else self.do_pad

+ pad_size = pad_size if pad_size is not None else self.pad_size

+

+ if not is_batched(images):

+ images = [images]

+

+ if not valid_images(images):

+ raise ValueError(

+ "Invalid image type. Must be of type PIL.Image.Image, numpy.ndarray, "

+ "torch.Tensor, tf.Tensor or jax.ndarray."

+ )

+

+ if do_rescale and rescale_factor is None:

+ raise ValueError("Rescale factor must be specified if do_rescale is True.")

+

+ # All transformations expect numpy arrays.

+ images = [to_numpy_array(image) for image in images]

+

+ if do_rescale:

+ images = [self.rescale(image=image, scale=rescale_factor) for image in images]

+

+ if do_pad:

+ images = [self.pad(image, size=pad_size) for image in images]

+

+ images = [to_channel_dimension_format(image, data_format) for image in images]

+

+ data = {"pixel_values": images}

+ return BatchFeature(data=data, tensor_type=return_tensors)

diff --git a/src/transformers/models/swin2sr/modeling_swin2sr.py b/src/transformers/models/swin2sr/modeling_swin2sr.py

new file mode 100644

index 000000000000..0edc2feea883

--- /dev/null

+++ b/src/transformers/models/swin2sr/modeling_swin2sr.py

@@ -0,0 +1,1204 @@

+# coding=utf-8

+# Copyright 2022 Microsoft Research and The HuggingFace Inc. team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+""" PyTorch Swin2SR Transformer model."""

+

+

+import collections.abc

+import math

+from dataclasses import dataclass

+from typing import Optional, Tuple, Union

+

+import torch

+import torch.utils.checkpoint

+from torch import nn

+

+from ...activations import ACT2FN

+from ...modeling_outputs import BaseModelOutput, ImageSuperResolutionOutput

+from ...modeling_utils import PreTrainedModel

+from ...pytorch_utils import find_pruneable_heads_and_indices, meshgrid, prune_linear_layer

+from ...utils import (

+ ModelOutput,

+ add_code_sample_docstrings,

+ add_start_docstrings,

+ add_start_docstrings_to_model_forward,

+ logging,

+ replace_return_docstrings,

+)

+from .configuration_swin2sr import Swin2SRConfig

+

+

+logger = logging.get_logger(__name__)

+

+# General docstring

+_CONFIG_FOR_DOC = "Swin2SRConfig"

+_FEAT_EXTRACTOR_FOR_DOC = "AutoFeatureExtractor"

+

+# Base docstring

+_CHECKPOINT_FOR_DOC = "caidas/swin2sr-classicalsr-x2-64"

+_EXPECTED_OUTPUT_SHAPE = [1, 64, 768]

+

+

+SWIN2SR_PRETRAINED_MODEL_ARCHIVE_LIST = [

+ "caidas/swin2SR-classical-sr-x2-64",

+ # See all Swin2SR models at https://huggingface.co/models?filter=swin2sr

+]

+

+

+@dataclass

+class Swin2SREncoderOutput(ModelOutput):

+ """

+ Swin2SR encoder's outputs, with potential hidden states and attentions.

+

+ Args:

+ last_hidden_state (`torch.FloatTensor` of shape `(batch_size, sequence_length, hidden_size)`):

+ Sequence of hidden-states at the output of the last layer of the model.

+ hidden_states (`tuple(torch.FloatTensor)`, *optional*, returned when `output_hidden_states=True` is passed or when `config.output_hidden_states=True`):

+ Tuple of `torch.FloatTensor` (one for the output of the embeddings + one for the output of each stage) of

+ shape `(batch_size, sequence_length, hidden_size)`.

+

+ Hidden-states of the model at the output of each layer plus the initial embedding outputs.

+ attentions (`tuple(torch.FloatTensor)`, *optional*, returned when `output_attentions=True` is passed or when `config.output_attentions=True`):

+ Tuple of `torch.FloatTensor` (one for each stage) of shape `(batch_size, num_heads, sequence_length,

+ sequence_length)`.

+

+ Attentions weights after the attention softmax, used to compute the weighted average in the self-attention

+ heads.

+ """

+

+ last_hidden_state: torch.FloatTensor = None

+ hidden_states: Optional[Tuple[torch.FloatTensor]] = None

+ attentions: Optional[Tuple[torch.FloatTensor]] = None

+

+

+# Copied from transformers.models.swin.modeling_swin.window_partition

+def window_partition(input_feature, window_size):

+ """

+ Partitions the given input into windows.

+ """

+ batch_size, height, width, num_channels = input_feature.shape

+ input_feature = input_feature.view(

+ batch_size, height // window_size, window_size, width // window_size, window_size, num_channels

+ )

+ windows = input_feature.permute(0, 1, 3, 2, 4, 5).contiguous().view(-1, window_size, window_size, num_channels)

+ return windows

+

+

+# Copied from transformers.models.swin.modeling_swin.window_reverse

+def window_reverse(windows, window_size, height, width):

+ """

+ Merges windows to produce higher resolution features.

+ """

+ num_channels = windows.shape[-1]

+ windows = windows.view(-1, height // window_size, width // window_size, window_size, window_size, num_channels)

+ windows = windows.permute(0, 1, 3, 2, 4, 5).contiguous().view(-1, height, width, num_channels)

+ return windows

+

+

+# Copied from transformers.models.swin.modeling_swin.drop_path

+def drop_path(input, drop_prob=0.0, training=False, scale_by_keep=True):

+ """

+ Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).

+

+ Comment by Ross Wightman: This is the same as the DropConnect impl I created for EfficientNet, etc networks,

+ however, the original name is misleading as 'Drop Connect' is a different form of dropout in a separate paper...

+ See discussion: https://github.com/tensorflow/tpu/issues/494#issuecomment-532968956 ... I've opted for changing the

+ layer and argument names to 'drop path' rather than mix DropConnect as a layer name and use 'survival rate' as the

+ argument.

+ """

+ if drop_prob == 0.0 or not training:

+ return input

+ keep_prob = 1 - drop_prob

+ shape = (input.shape[0],) + (1,) * (input.ndim - 1) # work with diff dim tensors, not just 2D ConvNets

+ random_tensor = keep_prob + torch.rand(shape, dtype=input.dtype, device=input.device)

+ random_tensor.floor_() # binarize

+ output = input.div(keep_prob) * random_tensor

+ return output

+

+

+# Copied from transformers.models.swin.modeling_swin.SwinDropPath with Swin->Swin2SR

+class Swin2SRDropPath(nn.Module):

+ """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks)."""

+

+ def __init__(self, drop_prob: Optional[float] = None) -> None:

+ super().__init__()

+ self.drop_prob = drop_prob

+

+ def forward(self, hidden_states: torch.Tensor) -> torch.Tensor:

+ return drop_path(hidden_states, self.drop_prob, self.training)

+

+ def extra_repr(self) -> str:

+ return "p={}".format(self.drop_prob)

+

+

+class Swin2SREmbeddings(nn.Module):

+ """

+ Construct the patch and optional position embeddings.

+ """

+

+ def __init__(self, config):

+ super().__init__()

+

+ self.patch_embeddings = Swin2SRPatchEmbeddings(config)

+ num_patches = self.patch_embeddings.num_patches

+

+ if config.use_absolute_embeddings:

+ self.position_embeddings = nn.Parameter(torch.zeros(1, num_patches + 1, config.embed_dim))

+ else:

+ self.position_embeddings = None

+

+ self.dropout = nn.Dropout(config.hidden_dropout_prob)

+ self.window_size = config.window_size

+

+ def forward(self, pixel_values: Optional[torch.FloatTensor]) -> Tuple[torch.Tensor]:

+ embeddings, output_dimensions = self.patch_embeddings(pixel_values)

+

+ if self.position_embeddings is not None:

+ embeddings = embeddings + self.position_embeddings

+

+ embeddings = self.dropout(embeddings)

+

+ return embeddings, output_dimensions

+

+

+class Swin2SRPatchEmbeddings(nn.Module):

+ def __init__(self, config, normalize_patches=True):

+ super().__init__()

+ num_channels = config.embed_dim

+ image_size, patch_size = config.image_size, config.patch_size

+

+ image_size = image_size if isinstance(image_size, collections.abc.Iterable) else (image_size, image_size)

+ patch_size = patch_size if isinstance(patch_size, collections.abc.Iterable) else (patch_size, patch_size)

+ patches_resolution = [image_size[0] // patch_size[0], image_size[1] // patch_size[1]]

+ self.patches_resolution = patches_resolution

+ self.num_patches = patches_resolution[0] * patches_resolution[1]

+

+ self.projection = nn.Conv2d(num_channels, config.embed_dim, kernel_size=patch_size, stride=patch_size)

+ self.layernorm = nn.LayerNorm(config.embed_dim) if normalize_patches else None

+

+ def forward(self, embeddings: Optional[torch.FloatTensor]) -> Tuple[torch.Tensor, Tuple[int]]:

+ embeddings = self.projection(embeddings)

+ _, _, height, width = embeddings.shape

+ output_dimensions = (height, width)

+ embeddings = embeddings.flatten(2).transpose(1, 2)

+

+ if self.layernorm is not None:

+ embeddings = self.layernorm(embeddings)

+

+ return embeddings, output_dimensions

+

+

+class Swin2SRPatchUnEmbeddings(nn.Module):

+ r"""Image to Patch Unembedding"""

+

+ def __init__(self, config):

+ super().__init__()

+

+ self.embed_dim = config.embed_dim

+

+ def forward(self, embeddings, x_size):

+ batch_size, height_width, num_channels = embeddings.shape

+ embeddings = embeddings.transpose(1, 2).view(batch_size, self.embed_dim, x_size[0], x_size[1]) # B Ph*Pw C

+ return embeddings

+

+

+# Copied from transformers.models.swinv2.modeling_swinv2.Swinv2PatchMerging with Swinv2->Swin2SR

+class Swin2SRPatchMerging(nn.Module):

+ """

+ Patch Merging Layer.

+

+ Args:

+ input_resolution (`Tuple[int]`):

+ Resolution of input feature.

+ dim (`int`):

+ Number of input channels.

+ norm_layer (`nn.Module`, *optional*, defaults to `nn.LayerNorm`):

+ Normalization layer class.

+ """

+

+ def __init__(self, input_resolution: Tuple[int], dim: int, norm_layer: nn.Module = nn.LayerNorm) -> None:

+ super().__init__()

+ self.input_resolution = input_resolution

+ self.dim = dim

+ self.reduction = nn.Linear(4 * dim, 2 * dim, bias=False)

+ self.norm = norm_layer(2 * dim)

+