LFS158x

Kubernetes is an open source system for automating the deployment, scaling and management of containerized applications.

The name Kubernetes means helmsman, or “ship pilot”, in Greek. The analogy is to think of k8s as a manager for ships loaded with containers.

K8s has new releases every 3 months. At the time of writing, the latest is 1.10.

Some of the lessons put into k8s come from Borg, Google’s internal cluster manager, like:

- api servers

- pods

- ip per pod

- services

- labels

K8s has features like:

- automatic binpacking

K8s automatically schedules the containers based on resource usage and constraints

- self healing

Following the declarative paradigm, k8s makes sure that the infra is always what it should be: it restarts containers that fail and replaces or reschedules containers from failed nodes.

- horizontal scaling

- service discovery and load balancing

K8s groups sets of containers and refers to them via a DNS name. Such a group is called a k8s Service. K8s can discover these services automatically and load balance requests between the containers of a given service.

- automated rollouts and rollbacks without downtime

- secrets and configuration management

- storage orchestration

With k8s and its plugins we can automatically mount local, external and storage solutions to the containers in a seamless manner, based on software defined storage (SDS)

- batch execution

K8s supports batch execution

- role based access control

K8s also abstracts away the hardware: once you have the cluster up, the same application can run on AWS, DigitalOcean, GCP, bare metal, VMs, etc. (as long as you don’t depend on provider-specific services like AWS EBS).

K8s also has a very pluggable architecture, which means we can plug in our own components and use them. The API can be extended as well, and we can write custom plugins too.

The Cloud Native Computing Foundation (CNCF) is one of the projects hosted by the Linux Foundation. It aims to accelerate the adoption of containers, microservices and cloud native applications.

Some of the projects under the CNCF:

- containerd - a container runtime, used by Docker

- rkt - another container runtime, from CoreOS

- k8s - container orchestration engine

- linkerd - for service mesh

- envoy - for service mesh

- gRPC - for remote procedure call (RPC)

- container network interface (CNI) - for networking API

- CoreDNS - for service discovery

- Rook - for cloud native storage

- notary - for security

- The Update Framework (TUF) - for software updates

- prometheus - for monitoring

- opentracing - for tracing

- jaeger - for distributed tracing

- fluentd - for logging

- vitess - for storage

This set of CNCF projects can cover the entire lifecycle of an application, from its execution using container runtimes to its monitoring and logging.

The CNCF helps k8s by:

- providing a neutral home for the k8s trademark and enforcing proper usage

- offering legal guidance on patent and copyright issues

- community building, training, etc.

K8s has 3 main components:

- master node

- worker node

- distributed k-v store, like etcd

The user contacts the api-server present in the master node via the CLI, APIs, the dashboard, etc.

The master node also runs the controller manager, the scheduler, etc.

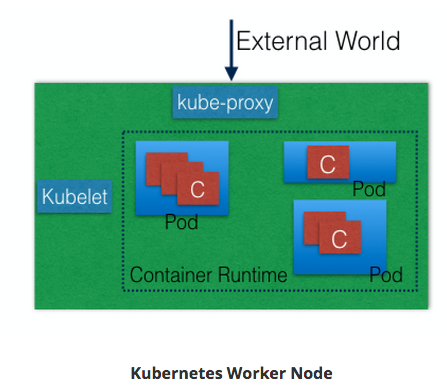

Each of the worker node has:

- kubelet

- kube-proxy

- pods

The master node is responsible for managing the Kubernetes cluster. We can have more than one master node in our Kubernetes cluster, which enables HA mode. In that case, only one of them is the leader; the others are followers.

The distributed k-v store, etcd can be a part of the master node, or it can be configured externally.

All the administrative tasks are performed via the API server. The user sends REST commands to the API server, which then validates and processes the requests. After the requests are executed, the resulting state of the cluster is stored in the distributed k-v store, etcd.

The scheduler schedules work on the different worker nodes. It has the resource usage information for each worker node, and it keeps in mind the constraints the user might have set on each pod. It also takes into account quality of service (QoS) requirements, data locality, affinity, anti-affinity, etc.

It schedules work in terms of Pods and Services.
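To make the filter-then-score idea concrete, here is a toy scheduler in Python. It is a minimal sketch, not how kube-scheduler is implemented: it assumes each node advertises free CPU (millicores) and memory (MiB), each pod declares resource requests, and a pod may require node labels (loosely modeled on nodeSelector). The Node and Pod classes and the bin-packing score are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores (hypothetical field)
    free_mem_mi: int  # free memory in MiB (hypothetical field)
    labels: dict = field(default_factory=dict)


@dataclass
class Pod:
    name: str
    cpu_m: int   # requested CPU in millicores
    mem_mi: int  # requested memory in MiB
    node_selector: dict = field(default_factory=dict)


def feasible(node: Node, pod: Pod) -> bool:
    """Filter step: enough free resources, and every required label is on the node."""
    fits = node.free_cpu_m >= pod.cpu_m and node.free_mem_mi >= pod.mem_mi
    labels_ok = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    return fits and labels_ok


def score(node: Node, pod: Pod) -> float:
    """Score step: prefer the node left with the least spare capacity (bin packing)."""
    cpu_left = (node.free_cpu_m - pod.cpu_m) / max(node.free_cpu_m, 1)
    mem_left = (node.free_mem_mi - pod.mem_mi) / max(node.free_mem_mi, 1)
    return -(cpu_left + mem_left)


def schedule(pod: Pod, nodes: list[Node]) -> str | None:
    """Bind the pod to the best feasible node and account for its resources."""
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None  # no node fits: the pod would stay Pending
    best = max(candidates, key=lambda n: score(n, pod))
    best.free_cpu_m -= pod.cpu_m
    best.free_mem_mi -= pod.mem_mi
    return best.name
```

Scoring by least leftover capacity is one way to get the automatic bin packing listed among the k8s features; the real scheduler combines many weighted scores rather than a single one.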

The controller manager runs non-terminating control loops which regulate the state of the Kubernetes cluster. It knows the desired state of the objects it manages and makes sure they stay in that state: in each control loop, it compares the desired state with the current state and acts to bring them in sync.
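A control loop boils down to: observe the current state, compare it with the desired state, and act on the difference. The sketch below assumes a hypothetical replica controller whose only state is a list of pod names; the real controller manager watches the API server instead of being called by hand.

```python
from __future__ import annotations

import itertools


def reconcile_once(desired_replicas: int, running: list[str], new_name) -> list[str]:
    """One pass of a control loop: observe, diff against the desired state, act."""
    running = list(running)  # don't mutate the caller's observed state
    diff = desired_replicas - len(running)
    if diff > 0:
        running += [new_name() for _ in range(diff)]  # too few: create pods
    elif diff < 0:
        running = running[:desired_replicas]  # too many: delete the extras
    return running


counter = itertools.count(1)


def new_pod_name() -> str:
    return f"nginx-{next(counter)}"


# A real controller repeats this forever, triggered by the changes it watches;
# here we just run a few passes by hand.
state = reconcile_once(3, [], new_pod_name)    # creates nginx-1, nginx-2, nginx-3
state.remove("nginx-2")                         # a pod dies
state = reconcile_once(3, state, new_pod_name)  # replaces it with nginx-4
print(state)
```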

etcd is used to store the current state of the cluster.

A worker node runs applications using Pods and is controlled by the master node, which has the necessary tools to connect to and manage the pods. A pod is the scheduling unit in Kubernetes: a logical collection of one or more containers which are always scheduled together.

A worker node has the following components:

- container runtime

- kubelet

- kube-proxy

To run and manage the container’s lifecycle, we need a container runtime on all the worker nodes. Examples include:

- containerd

- rkt

- lxd

The kubelet is an agent that runs on each worker node and communicates with the master node. It receives pod definitions (for example from the API server, though it can receive them from other sources too), runs the containers associated with each pod, and makes sure the pods are healthy.

The kubelet connects to the container runtime using the CRI (container runtime interface). The CRI consists of protocol buffers, a gRPC API and libraries.

The CRI shim converts the CRI commands into commands the container runtime understands

The CRI implements 2 services:

- ImageService

It is responsible for all the image related operations

- RuntimeService

It is responsible for all the pod and container related operations

With the CRI, kubernetes can use different container runtimes. Any container runtime that implements CRI can be used by kubernetes to manage pods, containers, container images
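To illustrate why the CRI lets Kubernetes swap runtimes, here is a sketch of the two services as Python abstract classes. The method names are simplified, hypothetical Python analogues of CRI gRPC calls such as PullImage, RunPodSandbox, CreateContainer and StartContainer, and FakeRuntime is an in-memory stand-in, not a real runtime or shim.

```python
from abc import ABC, abstractmethod


class ImageService(ABC):
    @abstractmethod
    def pull_image(self, image: str) -> str: ...

    @abstractmethod
    def list_images(self) -> list: ...


class RuntimeService(ABC):
    @abstractmethod
    def run_pod_sandbox(self, pod_name: str) -> str: ...

    @abstractmethod
    def create_container(self, sandbox_id: str, image: str) -> str: ...

    @abstractmethod
    def start_container(self, container_id: str) -> None: ...


class FakeRuntime(ImageService, RuntimeService):
    """Hypothetical in-memory runtime; a real shim would call containerd, CRI-O, etc."""

    def __init__(self):
        self.images = set()
        self.sandboxes = {}
        self.containers = {}

    def pull_image(self, image):
        self.images.add(image)
        return image

    def list_images(self):
        return sorted(self.images)

    def run_pod_sandbox(self, pod_name):
        sandbox_id = f"sandbox-{len(self.sandboxes) + 1}"
        self.sandboxes[sandbox_id] = pod_name
        return sandbox_id

    def create_container(self, sandbox_id, image):
        if image not in self.images:
            raise RuntimeError(f"image {image} has not been pulled")
        container_id = f"ctr-{len(self.containers) + 1}"
        self.containers[container_id] = {"sandbox": sandbox_id, "image": image, "state": "CREATED"}
        return container_id

    def start_container(self, container_id):
        self.containers[container_id]["state"] = "RUNNING"


def kubelet_start_pod(runtime, pod_name, images):
    """Roughly the order a kubelet drives a CRI runtime: sandbox, then pull/create/start."""
    sandbox_id = runtime.run_pod_sandbox(pod_name)
    started = []
    for image in images:
        runtime.pull_image(image)
        container_id = runtime.create_container(sandbox_id, image)
        runtime.start_container(container_id)
        started.append(container_id)
    return started
```

Because kubelet_start_pod only depends on the two interfaces, any class implementing them could be plugged in, which is the point of the CRI.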

Some examples of CRI shims:

- dockershim

With dockershim, containers are created using the Docker engine that is installed on the worker nodes. The Docker engine talks to containerd, which manages the containers.

- cri-containerd

With cri-containerd, we talk to containerd directly, bypassing the Docker engine.

- cri-o

There is an initiative called OCI (Open Container Initiative) that defines a spec for container runtimes. What cri-o does is implement the container runtime interface (CRI) with a general purpose shim layer that can talk to all the container runtimes that comply with the OCI.

This way, we can use any OCI-compatible runtime with Kubernetes (since cri-o implements the CRI).

Note here that cri-o implements the CRI, so it has both the image service and the runtime service; for pod networking, it relies on CNI plugins.

It can get a little messy sometimes, all these things.

Docker engine was the whole thing: a monolith that enabled users to run containers. Then it was broken down into individual components:

- docker engine

- containerd

- runc

runC is the lowest level component that implements the OCI interface. It interacts with the kernel and actually “runs” the container.

containerd takes care of things like setting up the networking and image transfer/storage. It takes care of the complete container runtime (which means it manages and makes life easy for runC, which is the actual container runtime). Unlike the Docker daemon, it has a reduced feature set; it does not build images, for example.

Docker engine just does some high level things itself like accepting user commands, downloading the images from the docker registry etc. It offloads a lot of it to containerd.

“the Docker daemon prepares the image as an Open Container Image (OCI) bundle and makes an API call to containerd to start the OCI bundle. containerd then starts the container using runC.”

Note, the runtimes have to be OCI compliant (like runC is); that is, they have to expose a fixed API to managers like containerd, so that containerd can make life easy for them (and ask them to stop/start containers).

rkt is another container runtime, which does not support OCI yet but supports the appc specification. It is a full-fledged solution: it manages and makes its own life easy, so it needs no containerd-like daddy.

So, that’s that. Now let’s add another component (and another interface) to the mix - Kubernetes

Kubernetes can run anything that satisfies the CRI - container runtime interface.

You can run rkt with k8s, as rkt satisfies CRI - container runtime interface. Kubernetes doesn’t ask for anything else, it just needs CRI, it doesn’t give a FF about how you run your containers, OCI or not.

containerd does not support CRI, but cri-containerd which is a shim around containerd does. So, if you want to run containerd with Kubernetes, you have to use cri-containerd (this also is the default runtime for Kubernetes). cri-containerd recently got renamed to CRI Plugin.

If you want to get the docker engine in the mix as well, you can do it. Use dockershim, it will add the CRI shim to the docker engine.

Now, just like containerd can manage and make life easy for runC (the container runtime), it can manage and make life easy for other container runtimes as well; in fact, for every container runtime that supports OCI, like the Kata container runtime (known as kata-runtime, https://github.com/kata-containers/runtime), which runs Kata containers, and the Clear Containers runtime (by Intel).

Now we know that rkt satisfies the CRI, cri-containerd (aka CRI Plugin) does it too.

Note what containerd is doing here. It is not a runtime, it is a manager for runC which is the container runtime. It just manages the image download, storage etc. Heck, it doesn’t even satisfy CRI.

That’s why we have CRI-O. It is just like containerd, but it implements CRI. CRI-O needs a container runtime to run images. It will manage and make life easy for that runtime, but it needs a runtime, and it will take any runtime that is OCI compliant. So, naturally, both kata-runtime and runC work with CRI-O.

Using it with Kubernetes is simple: point Kubernetes to CRI-O as the container runtime. (Yes, yes, CRI-O alone isn’t a container runtime, but CRI-O plus the actual container runtime is. And Kubernetes is referring to that happy couple when it says container runtime.)

Like containerd has Docker to make it REALLY usable (and to manage and make life easy for containerd), CRI-O needs someone to take care of image management: it has buildah, umoci, etc.

crun is another OCI-compliant runtime, written in C. It is by Red Hat.

We already discussed, kata-runtime is another runtime which is OCI compliant. So, we can use kata-runtime with CRI-O like we discussed.

Note that here the kubelet is talking to CRI-O via the CRI. CRI-O is talking to cc-runtime (another runtime, for Intel’s Clear Containers; yes, OCI compliant), but it could be kata-runtime as well.

Don’t forget containerd: it can manage and make life easy for all OCI-compliant runtimes too. runC, sure, but also kata-runtime and cc-runtime.

Here, note that only the runtime has changed, from runC to kata-runtime. To do this, just change the runtime to “kata” in the containerd config.

Needless to say, it can run on Kubernetes either by CRI-O, or by cri-containerd (aka CRI Plugin).

This is really cool 🔝

Kubernetes, represented here by its ambassador, Mr. Kubelet, runs anything that satisfies the CRI. Now, we have several candidates that can.

- Cri-containerd makes containerd do it.

- CRI-O does it natively.

- Dockershim makes the docker engine do it.

Now, all 3 of the guys above can manage and make life easy for all OCI-compliant runtimes: runC, kata-runtime, cc-runtime.

We also have frakti, which satisfies CRI like rkt, but doesn’t satisfy OCI, and comes bundled with its own container runtime.

Here we have CRI-O in action, managing and making life easy for both the OCI-compliant kata-runtime and runC.

We have some more runtimes as well:

- railcar - OCI compliant, written in rust

- Pouch - Alibaba’s modified runC

- nvidia runtime - nvidia’s fork of runC

To connect to the pods, we group them logically and then use a Service to connect to them. The Service exposes the pods (inside the cluster or, if configured, to the external world) and load balances across them.

Kube-proxy is responsible for setting the routes in the iptables of the node when a new service is created, so that the service is reachable. The API server gives the service an IP, which kube-proxy puts in the node’s iptables.

The kube-proxy is responsible for “implementing the service abstraction” - in that it is responsible for exposing a load balanced endpoint that can be reached from inside or outside the cluster to reach the pods that define the service.

Some of the modes in which it operates to achieve that 🔝

- Proxy-mode - userspace

In this scheme, it uses a proxy port.

The kube-proxy does 2 things:

- it opens up a proxy port on each node for each new service that is created

- it sets the iptables rules on each node so that whenever a request is made for the service’s clusterIP and its port (as specified by the API server), the packets come to the proxy port that kube-proxy created. The kube-proxy then uses round robin to forward the packets to one of the pods in that service

So, let’s say service S has 3 pods A, B, C, and the API server gave it the endpoint 10.0.1.2:44131. Also, let’s say we have nodes X, Y, Z.

Earlier, in the userspace scheme, each node got a new port opened, say 30333. Also, each node’s iptables got a rule redirecting traffic for the endpoint of service S (10.0.1.2:44131) to that node’s own proxy port, 30333.

Now, when a request for 10.0.1.2:44131 comes from any node, say X, it goes to <node X IP>:30333, and from there kube-proxy forwards it, round robin, to one of the pods A, B or C, wherever that pod runs.

- iptables

Here, there is no central proxy port. For each pod in the service, kube-proxy updates the iptables of the nodes to point to that backend pod directly.

Continuing the above example, here each node’s iptables would get a separate entry for each of the 3 pods A, B, C that are part of the service S. So the traffic can be routed to them directly without the involvement of kube-proxy

This is faster since there is no involvement of kube-proxy here, everything can operate in the kernelspace. However, the iptables proxier cannot automatically retry another pod if the one it initially selects does not respond.

So we need a readiness probe to know which pods are healthy and keep the iptables up to date

- Proxy-mode: ipvs

The kernel implements a virtual server (IPVS) that can proxy requests to real servers in a load balanced way. This is better since it operates in kernelspace and also gives us more load balancing options.
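The userspace and iptables load-balancing behaviours above can be compared in a small Python sketch. It assumes the userspace proxy cycles through backends in order, and that iptables mode chains one rule per backend where rule i matches with probability 1/(n - i), which is how kube-proxy is commonly described as using the iptables statistic match. The endpoint addresses and function names are hypothetical.

```python
import itertools
import random
from fractions import Fraction


def round_robin(endpoints):
    """Userspace mode: kube-proxy itself cycles through the backends in order."""
    return itertools.cycle(endpoints)


def iptables_probabilities(n):
    """Iptables mode: rule i fires with probability 1/(n - i) among the remaining rules,
    which gives every backend an equal 1/n share overall."""
    return [Fraction(1, n - i) for i in range(n)]


def pick_like_iptables(endpoints, rng=random):
    """Walk the rules in order, as the kernel would, and take the first one that fires."""
    for endpoint, p in zip(endpoints, iptables_probabilities(len(endpoints))):
        if rng.random() < p:
            return endpoint
    return endpoints[-1]  # not reached: the last rule has probability 1
```

Because each iptables rule is just a weighted coin flip, a pod that does not respond is not retried automatically, which is why the readiness probe mentioned above matters.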

Etcd is used for state management. It is the truth store for the present state of the cluster. Since it has very important information, it has to be highly consistent. It uses the raft consensus protocol to cope with machine failures etc.

Raft allows a collection of machines to work as a coherent group that can survive the failures of some of its members. At any given time, one of the nodes in the group is the leader (master), and the rest are followers. Any node can become the leader.
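Raft only makes progress while a majority of members agree, which is why etcd clusters are usually sized with an odd number of members. This small Python calculation, assuming a plain majority quorum, shows how many member failures a cluster of a given size survives.

```python
def quorum(members: int) -> int:
    """Votes needed for a Raft majority: strictly more than half of the members."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """Members that can fail while the rest can still elect a leader and commit writes."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(f"{n} members: quorum={quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Going from 3 to 4 members raises the quorum from 2 to 3 but still tolerates only 1 failure, so the fourth member adds no fault tolerance.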

In kubernetes, besides storing the cluster state, it is also used to store configuration details such as subnets, ConfigMaps, Secrets etc

To have a fully functional kubernetes cluster, we need to make sure:

- a unique ip is assigned to each pod

- containers in a pod can talk to each other (easy, make them share the same network namespace)

- the pod is able to communicate with other pods in the cluster

- if configured, the pod is accessible from the external world

- Unique IP

For container networking, there are 2 main specifications:

- Container Network Model - CNM - proposed by docker

- Container Network Interface - CNI - proposed by CoreOS

kubernetes uses CNI to assign the IP address to each Pod

The container runtime talks to CNI, and CNI offloads the task of finding an IP for the pod to the network plugin.

- Containers in a Pod

Simple, make all the containers in a Pod share the same network namespace. This way, they can reach each other via localhost

- Pod-to-Pod communication across nodes

Kubernetes requires that there is no NAT (network address translation) in pod-to-pod communication. This means that each pod should have its own IP address, and we shouldn’t have, say, a subnet-level distribution of pods on the nodes where a subnet lives on a node and its pods are only reachable via NAT.

- Communication between external world and pods

This can be achieved by exposing our services to the external world using kube-proxy

Kubernetes can be installed in various configurations:

- all-in-one single node installation

Everything on a single node. Good for learning, development and testing. Minikube does this

- single node etcd, single master, multi-worker

- single node etcd, multi master, multi-worker

We have HA

- multi node etcd, multi master, multi-worker

Here, etcd runs outside Kubernetes in a clustered mode. We have HA. This is the recommended mode for production.

Kubernetes on-premise

- Kubernetes can be installed on VMs via Ansible, kubeadm etc

- Kubernetes can also be installed on on-premise bare metal, on top of different operating systems, like RHEL, CoreOS, CentOS, Fedora, Ubuntu, etc. Most of the tools used to install VMs can be used with bare metal as well.

Kubernetes in the cloud

- hosted solutions

Kubernetes is completely managed by the provider. The user just needs to pay hosting and management charges. Examples:

- GKE

- AKS

- EKS

- openshift dedicated

- IBM Cloud Container Service

- Turnkey solutions

These allow easy installation of Kubernetes with just a few clicks on underlying IaaS

- Google compute engine

- amazon aws

- tectonic by coreos

- Kubernetes installation tools

There are some tools which make the installation easy

- kubeadm

This is the recommended way to bootstrap the Kubernetes cluster. It does not support provisioning the machines

- KubeSpray

It can install HA Kubernetes clusters on AWS, GCE, Azure, OpenStack, bare metal etc. It is based on Ansible and is available for most Linux distributions. It is a Kubernetes incubator project

- Kops

Helps us create, destroy, upgrade and maintain production grade HA Kubernetes cluster from the command line. It can provision the machines as well. AWS is officially supported

You can set up Kubernetes manually by following Kelsey Hightower’s Kubernetes The Hard Way repo.

Prerequisites to run minikube:

- Minikube runs inside a VM on Linux, Mac or Windows, so to use minikube, we need to have the required hypervisor installed first. We can also use --vm-driver=none to start the Kubernetes single-node “cluster” directly on your local machine.

- kubectl - a binary used to interact with the Kubernetes cluster

We know about cri-o, which is a general shim layer implementing CRI (container runtime interface) for all OCI (open containers initiative) compliant container runtimes

To use cri-o runtime with minikube, we can do:

minikube start --container-runtime=cri-o

Then, docker commands won’t work. We have to use sudo runc list to list the containers, for example.

We can use the kubectl CLI to access Minikube from the command line, the Kubernetes dashboard to access it via a GUI, or curl with the right credentials to access it via APIs.

Kubernetes has an API server, which is the entry point to interact with the Kubernetes cluster - it is used by kubectl, by the gui, and by curl directly as well

The API space 🔝 is divided into 3 independent groups:

- core group

/api/v1 - this includes objects such as pods, services, nodes etc.

- named group

These include objects in /apis/$NAME/$VERSION format. The different levels imply different levels of stability and support:

- alpha - it may be dropped anytime without notice, eg: /apis/batch/v2alpha1

- beta - it is well tested, but the semantics may change in incompatible ways in a subsequent beta or stable release, eg: /apis/certificates.k8s.io/v1beta1

- stable - appears in released software for many subsequent versions, eg: /apis/networking.k8s.io/v1

- system wide

This group consists of system-wide API endpoints, like /healthz, /logs, /metrics, /ui etc.
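The group layout maps directly onto request paths. Here is a minimal sketch of a helper that builds them, assuming the usual /namespaces/<ns>/<resource>/<name> layout for namespaced objects; api_path is a hypothetical name, and an empty group string stands for the core group (the same convention RBAC rules use).

```python
from __future__ import annotations


def api_path(group: str, version: str, resource: str,
             namespace: str | None = None, name: str | None = None) -> str:
    """Build a REST path: core group under /api, named groups under /apis/<group>."""
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [base]
    if namespace:
        parts.append(f"namespaces/{namespace}")  # namespaced objects
    parts.append(resource)
    if name:
        parts.append(name)  # a single object instead of the whole collection
    return "/".join(parts)
```

For example, with kubectl proxy running, the path from api_path("", "v1", "pods", "default") can be appended to http://localhost:8001 and fetched with curl.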

Minikube has a dashboard, start it with minikube dashboard

You can also reach the dashboard through the kubectl proxy command. The dashboard runs as a service called kubernetes-dashboard inside the kube-system namespace, and kubectl proxy makes the API server (and, through it, that service) available locally.

Access the dashboard on localhost:8001.

Once kubectl proxy is running, we can use curl against localhost on the proxy port: curl http://localhost:8001

If we don’t use kubectl proxy, we have to get a token (here, the default service account’s token, read from its secret via the API server):

$ TOKEN=$(kubectl describe secret $(kubectl get secrets | grep default | cut -f1 -d ' ') | grep -E '^token' | cut -f2 -d':' | tr -d '\t' | tr -d " ")

Also, the api server endpoint:

$ APISERVER=$(kubectl config view | grep https | cut -f 2- -d ":" | tr -d " ")

Now, it’s a matter of a simple curl call:

$ curl $APISERVER --header "Authorization: Bearer $TOKEN" --insecure
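The same authenticated call can be made from Python with only the standard library. This is a sketch that assumes APISERVER and TOKEN were exported as environment variables, like the shell variables above; build_request and insecure_context are hypothetical helpers, and disabling certificate checks mirrors curl --insecure and is only acceptable for local experiments.

```python
import ssl
import urllib.request


def build_request(apiserver: str, token: str, path: str = "/") -> urllib.request.Request:
    """A GET request carrying the bearer token, like the curl call above."""
    url = apiserver.rstrip("/") + path
    req = urllib.request.Request(url, method="GET")
    req.add_header("Authorization", f"Bearer {token}")
    return req


def insecure_context() -> ssl.SSLContext:
    """Equivalent of curl --insecure: skip certificate and hostname checks."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be disabled before verify_mode can be CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


# Usage against a live cluster (not run here):
# import os
# req = build_request(os.environ["APISERVER"], os.environ["TOKEN"], "/api/v1")
# with urllib.request.urlopen(req, context=insecure_context()) as resp:
#     print(resp.read().decode())
```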

Kubernetes has several objects like Pods, ReplicaSets, Deployments, Namespaces, etc. We also have Labels and Selectors, which are used to group objects together.

Kubernetes has a rich object model which is used to represent persistent entities. The persistent entities describe:

- what containerized applications we are running, and on which node

- application resource consumption

- different restart/upgrade/fault tolerance policies attached to applications

With each object, we declare our intent (or desired state) using spec field.

The Kubernetes API server accepts object definitions in JSON format. Generally, however, we write YAML files, which kubectl converts into a JSON payload before sending them.

Example of a Deployment object:

```yaml
apiVersion: apps/v1 # the api endpoint we want to connect to
kind: Deployment # the object type
metadata: # as the name implies, some info about the deployment object
  name: nginx-deployment
  labels:
    app: nginx
spec: # desired state of the deployment
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec: # desired state of the Pod
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
```

Once this is created, Kubernetes attaches the status field to the object.
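Since kubectl turns the YAML into a JSON payload, it can help to see the nginx-deployment manifest as the equivalent data structure. This Python sketch only illustrates the shape of that payload; it is not byte-for-byte what kubectl sends (kubectl may add fields such as annotations). It also checks one rule the apps/v1 API enforces: the selector must match the Pod template’s labels.

```python
import json

# The nginx-deployment manifest, as nested dicts and lists
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "nginx-deployment", "labels": {"app": "nginx"}},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "nginx"}},
        "template": {
            "metadata": {"labels": {"app": "nginx"}},
            "spec": {
                "containers": [
                    {"name": "nginx", "image": "nginx:1.7.9", "ports": [{"containerPort": 80}]}
                ]
            },
        },
    },
}

# The API server rejects a Deployment whose selector doesn't match its template labels
selector = deployment["spec"]["selector"]["matchLabels"]
template_labels = deployment["spec"]["template"]["metadata"]["labels"]
if any(template_labels.get(k) != v for k, v in selector.items()):
    raise ValueError("selector does not match template labels")

payload = json.dumps(deployment)  # roughly the JSON body sent to the API server
print(payload)
```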

A Pod is the smallest and simplest Kubernetes object, the unit of deployment in Kubernetes. It is a logical unit representing an application: a logical collection of containers which are deployed on the same host (colocated), share the same network namespace, and can mount the same external storage (volumes).

Pods cannot self heal, so we use them with controllers, which can handle pod’s replication, fault tolerance, self heal etc. Examples of controllers:

- Deployments

- ReplicaSets

- ReplicationControllers

We attach the pod’s spec (specification) to other objects using Pod templates, like in the previous example.

Labels are key-value pairs that are attached to Kubernetes objects (like pods). They are used to organize and select a subset of objects.

Kubernetes has 2 types of selectors:

- equality based selectors

We can use the =, == or != operators.

- set based selectors

Allows filtering based on a set of values. We can use the in, notin and exists operators.

Eg: env in (dev, qa) selects the objects whose env label is dev or qa.
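Both selector styles can be expressed as simple predicates over a label map. A minimal Python sketch, assuming one requirement per call; the pod names and labels are hypothetical. As in Kubernetes, != and notin also match objects that don’t have the key at all.

```python
def matches_equality(labels: dict, key: str, op: str, value: str) -> bool:
    """Equality-based requirement: =, == or !=."""
    if op in ("=", "=="):
        return labels.get(key) == value
    if op == "!=":
        return labels.get(key) != value  # also true when the key is absent
    raise ValueError(f"unsupported operator {op!r}")


def matches_set(labels: dict, key: str, op: str, values: frozenset = frozenset()) -> bool:
    """Set-based requirement: in, notin or exists."""
    if op == "in":
        return labels.get(key) in values
    if op == "notin":
        return labels.get(key) not in values  # also true when the key is absent
    if op == "exists":
        return key in labels
    raise ValueError(f"unsupported operator {op!r}")


pods = {  # hypothetical pods and their labels
    "web-1": {"app": "frontend", "env": "dev"},
    "web-2": {"app": "frontend", "env": "prod"},
    "db-1": {"app": "db", "env": "qa"},
}

# env in (dev, qa)
selected = sorted(
    name for name, labels in pods.items()
    if matches_set(labels, "env", "in", frozenset({"dev", "qa"}))
)
print(selected)
```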

A ReplicationController (rc) is a controller that is part of the master node’s controller manager. It makes sure that the specified number of replicas of a Pod is running: no more, no less. We generally don’t deploy pods on their own since they can’t self-heal; we almost always use ReplicationControllers to deploy and manage them.

A ReplicaSet (rs) is the next generation ReplicationController. It supports both equality-based and set-based selectors, while RCs only support equality-based selectors.

RSs can be used independently, but they are mostly used by Deployments to orchestrate pod creation, deletion and updates. A Deployment automatically creates its ReplicaSets.

Deployment objects provide declarative (just describe what you want, not how to get it) updates to Pods and ReplicaSets.

Here, 🔝, the Deployment creates a ReplicaSet A which creates 3 pods. In each pod, the container runs nginx:1.7.9 image.

Now, we can update nginx to, say, 1.9.1. This triggers the creation of a new ReplicaSet B, which makes sure that the required number of pods specified in its spec are running (that’s what it does).

Once the ReplicaSet B is ready, Deployment starts pointing to it

The Deployments provide features like Deployment recording, which allows us to rollback if something goes wrong.

If we want to partition our Kubernetes cluster into different projects/teams, we can use Namespaces to logically divide the cluster into sub-clusters.

The names of the resources/objects created inside a Namespace are unique within that Namespace, but not across Namespaces.

```
$ kubectl get namespaces
NAME          STATUS    AGE
default       Active    11h
kube-public   Active    11h
kube-system   Active    11h
```

The namespaces above are:

- default

This is the default namespace

- kube-system

Objects created by the Kubernetes system

- kube-public

It is a special namespace, which is readable by all users and used for special purposes - like bootstrapping a cluster

We can use Resource Quotas to divide the cluster resources within Namespaces.

Each API access request goes through the following 3 stages:

- authentication

You are who you say you are

- authorization

You are allowed to access this resource

- admission control

Further modify/reject requests based on some additional checks, like Quota.
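The three stages behave like a pipeline in which each stage can stop the request. In this hypothetical Python sketch, a token table stands in for authentication, a hard-coded rule for authorization, and a pod count check for a quota admission controller; the response strings only mimic HTTP status codes.

```python
from dataclasses import dataclass


@dataclass
class Request:
    token: str
    verb: str
    resource: str
    namespace: str


class Rejected(Exception):
    """Stops the pipeline; the message mimics the HTTP status returned."""


TOKENS = {"s3cr3t": "nkhare"}  # hypothetical credential store
POD_QUOTA = 10  # hypothetical per-namespace pod limit


def authenticate(req: Request) -> str:
    user = TOKENS.get(req.token)
    if user is None:
        raise Rejected("401 Unauthorized")
    return user


def authorize(user: str, req: Request) -> None:
    allowed = (
        user == "nkhare"
        and req.namespace == "lfs158"
        and req.resource == "pods"
        and req.verb in ("get", "list", "watch", "create")
    )
    if not allowed:
        raise Rejected("403 Forbidden")


def admit(req: Request, pods_in_namespace: int) -> None:
    if req.verb == "create" and pods_in_namespace >= POD_QUOTA:
        raise Rejected("403 Forbidden: exceeded quota")


def handle(req: Request, pods_in_namespace: int = 0) -> str:
    """Authentication, then authorization, then admission control; any stage can stop it."""
    try:
        user = authenticate(req)
        authorize(user, req)
        admit(req, pods_in_namespace)
    except Rejected as err:
        return str(err)
    return f"200 OK ({user})"
```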

Kubernetes does not have an object called user, nor does it store usernames. There are 2 kinds of users:

- normal users

They are managed outside of Kubernetes cluster via independent services like user/client certificates, a file listing usernames/passwords, google accounts etc.

- service accounts

Service Account users are how in-cluster processes communicate with the API server. Most SA users are created automatically via the API server, but they can also be created manually. SA users are tied to a given namespace and mount the respective credentials, as Secrets, to communicate with the API server.

For authentication, Kubernetes uses different authenticator modules.

- client certificates

We can enable client certificate authentication by giving a CA reference to the api server which will validate the client certificates presented to the API server. The flag is --client-ca-file=/path/to/file

- static token file

We can have pre-defined bearer tokens in a file which can be used with --token-auth-file=/path/to/file

The tokens last indefinitely, and the list cannot be changed without restarting the API server.

- bootstrap tokens

Can be used for bootstrapping a Kubernetes cluster

- static password file

Similar to the static token file. The flag is --basic-auth-file=/path/to/file. The passwords cannot be changed without restarting the API server.

- service account tokens

This authenticator uses bearer tokens which are attached to pods using the ServiceAccount admission controller (which allows the in-cluster processes to talk to the api server)

- OpenID Connect tokens

OpenID Connect helps us connect with OAuth 2 providers like Google etc to offload authentication to those services

- Webhook Token Authentication

We can offload verification to a remote service via webhooks

- Keystone password

- Authenticating Proxy

Such as nginx. We have this for our logs stack at Draup
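As a concrete example of the static token file, here is a Python sketch that parses a file in the documented CSV layout (token, user name, user uid, then an optional quoted list of groups) and looks up a bearer token. The sample tokens and users are made up, and load_static_tokens and authenticate_bearer are hypothetical helpers, not API server code.

```python
import csv
import io


def load_static_tokens(text: str) -> dict:
    """Parse --token-auth-file style CSV: token,user,uid[,"group1,group2"]."""
    users = {}
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue  # skip blank lines
        token, user, uid = row[0], row[1], row[2]
        groups = row[3].split(",") if len(row) > 3 and row[3] else []
        users[token] = {"user": user, "uid": uid, "groups": groups}
    return users


def authenticate_bearer(header: str, users: dict):
    """Return the user record for 'Bearer <token>', or None if it isn't known."""
    scheme, _, token = header.partition(" ")
    if scheme != "Bearer":
        return None
    return users.get(token.strip())


SAMPLE = 'abc123,nkhare,1001,"dev,lfs158"\nxyz789,alice,1002\n'  # made-up tokens
users = load_static_tokens(SAMPLE)
```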

After authentication, we need authorization.

Some of the API request attributes that are reviewed by Kubernetes are: user, group, extra, Resource, Namespace etc. They are evaluated against policies. There are several modules that are supported.

- Node Authorizer

It authorizes API requests made by kubelets (it authorizes the kubelet’s read operations for services, endpoints, nodes etc, and write operations for nodes, pods, events etc)

- ABAC authorizer - Attribute based access control

Here, Kubernetes grants access to API requests which combine policies with attributes. Eg:

```json
{
  "apiVersion": "abac.authorization.kubernetes.io/v1beta1",
  "kind": "Policy",
  "spec": {
    "user": "nkhare",
    "namespace": "lfs158",
    "resource": "pods",
    "readonly": true
  }
}
```

Here, 🔝, nkhare has only read only access to pods in namespace lfs158.

To enable this, we have to start the API server with the --authorization-mode=ABAC option and specify the authorization policy with --authorization-policy-file=PolicyFile.json

- Webhook authorizer

We can offload authorization decisions to 3rd party services. To use this, start the API server with --authorization-mode=Webhook and --authorization-webhook-config-file=/path/to/file, where the file has the configuration of the remote authorization service.

- RBAC authorizer - role based access control

Kubernetes has different roles that can be attached to subjects like users, service accounts etc

while creating the roles, we restrict access to specific operations like create, get, update, patch etc

There are 2 kinds of roles:

- Role

With a Role, we can grant access to resources within a specific namespace.

- ClusterRole

It can be used to grant the same permissions as a Role, but its scope is cluster-wide.

We will only focus on Role

Example:

```yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: lfs158
  name: pod-reader
rules:
- apiGroups: [""] # "" indicates the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]
```

Here, we created a pod-reader role which can only access pods in the lfs158 namespace

Once we create this Role, we can bind users to it with a RoleBinding.

There are 2 kinds of RoleBindings:

- RoleBinding

This allows us to bind users to a Role within the same namespace.

- ClusterRoleBinding

It allows us to grant access to resources at cluster-level and to all namespaces

To start API server with rbac option, we use --authorization-mode=RBAC

We can also dynamically configure policies.
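To contrast the two policy styles, this Python sketch evaluates the ABAC policy and the pod-reader Role from above. It is a simplification under stated assumptions: no wildcards, a single policy and a single hypothetical RoleBinding, subjects given as plain user names, and readonly meaning only get, list and watch.

```python
READ_VERBS = {"get", "list", "watch"}

# The ABAC policy line for nkhare
ABAC_POLICY = {"user": "nkhare", "namespace": "lfs158", "resource": "pods", "readonly": True}


def abac_allows(policy: dict, user: str, namespace: str, resource: str, verb: str) -> bool:
    """Every attribute must match; readonly restricts the policy to read verbs."""
    if (policy["user"], policy["namespace"], policy["resource"]) != (user, namespace, resource):
        return False
    return verb in READ_VERBS if policy.get("readonly") else True


# The pod-reader Role, plus a hypothetical RoleBinding for nkhare
POD_READER = {
    "namespace": "lfs158",
    "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "watch", "list"]}],
}
BINDINGS = [{"role": POD_READER, "subjects": {"nkhare"}}]


def rbac_allows(user: str, namespace: str, api_group: str, resource: str, verb: str) -> bool:
    """RBAC is additive: allow if any bound Role has a matching rule, otherwise deny."""
    for binding in BINDINGS:
        role = binding["role"]
        if user not in binding["subjects"] or role["namespace"] != namespace:
            continue
        for rule in role["rules"]:
            if api_group in rule["apiGroups"] and resource in rule["resources"] and verb in rule["verbs"]:
                return True
    return False
```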

Admission control is used to specify granular access control policies, which include allowing privileged containers, checking on resource quota, etc.

There are different admission controllers to enforce these eg: ResourceQuota, AlwaysAdmit, DefaultStorageClass etc.

They come into effect only after API requests are authenticated and authorized.

To use them, we must start the API server with the --admission-control flag, which takes a comma-separated, ordered list of controller names (newer releases replace this flag with --enable-admission-plugins):

--admission-control=NamespaceLifecycle,ResourceQuota,PodSecurityPolicy,DefaultStorageClass
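With this flag, admission controllers run in the listed order, and each one may modify the object or reject it. The Python functions below are hypothetical stand-ins named after NamespaceLifecycle, ResourceQuota and DefaultStorageClass, working on made-up cluster data; they only sketch that ordered chain.

```python
class AdmissionError(Exception):
    """Raised by a plugin to reject the request."""


def namespace_lifecycle(obj: dict, cluster: dict) -> dict:
    # Reject objects aimed at a namespace that doesn't exist
    if obj["namespace"] not in cluster["namespaces"]:
        raise AdmissionError(f'namespace "{obj["namespace"]}" not found')
    return obj


def resource_quota(obj: dict, cluster: dict) -> dict:
    # Reject a new PersistentVolumeClaim once the namespace's (hypothetical) PVC quota is used up
    if obj["kind"] == "PersistentVolumeClaim":
        used = cluster["pvc_count"].get(obj["namespace"], 0)
        limit = cluster["pvc_quota"].get(obj["namespace"], float("inf"))
        if used >= limit:
            raise AdmissionError(f'exceeded quota in namespace "{obj["namespace"]}"')
    return obj


def default_storage_class(obj: dict, cluster: dict) -> dict:
    # Mutating step: claims that don't name a storage class get the default one
    if obj["kind"] == "PersistentVolumeClaim" and not obj.get("storageClassName"):
        obj = {**obj, "storageClassName": cluster["default_storage_class"]}
    return obj


# Same relative order as in the flag value
CHAIN = [namespace_lifecycle, resource_quota, default_storage_class]


def admit(obj: dict, cluster: dict) -> dict:
    """Run every plugin in order; any of them may modify the object or reject it."""
    for plugin in CHAIN:
        obj = plugin(obj, cluster)
    return obj
```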

We will learn about services, which are used to group Pods to provide common access points from the external world.

We will learn about the kube-proxy daemon, which runs on each worker node to provide access to services.

Also, we’ll talk about service discovery and service types which decide the access scope of a service.

Pods are ephemeral: they can be terminated, rescheduled, etc., so we shouldn’t rely on connecting to them using their pod IPs directly. Kubernetes provides a higher level abstraction called Service, which logically groups Pods and defines a policy to access them.

The grouping is achieved with labels and selectors.

For example, consider this:

Here, we have grouped the pods into 2 logical groups based on the selectors frontend and db.

We can assign a name to the logical group, called a Service name eg: frontend-svc and db-svc.

Example:

```yaml
kind: Service
apiVersion: v1
metadata:
  name: frontend-svc
spec:
  selector:
    app: frontend
  ports:
  - protocol: TCP
    port: 80
    targetPort: 5000
```

Here, 🔝, we are creating frontend-svc service. By default each service also gets an IP address which is routable only inside the cluster.

The IP attached to each service is known as the ClusterIP for that service (eg: 172.17.0.4 here in the diagram).

The user/client now connects to the Service via this IP address, and the Service forwards the traffic to one of the pods attached to it, taking care of load balancing, routing, etc.

We also in our service spec, defined a targetPort as 5000. So the service will route the traffic to port 5000 on the pods. If we don’t select it, it will be the same port as the service port (80 in the example above)

A tuple of Pods, IP addresses, along with the targetPort is referred to as a Service endpoint. In our case, frontend-svc has 3 endpoints: 10.0.1.3:5000, 10.0.1.4:5000, and 10.0.1.5:5000.

All the worker nodes run kube-proxy which watches the API server for addition and removal of services.

For each new service, the kube-proxy updates the iptables of all the nodes to route the traffic for its ClusterIP to the service endpoints (node-ip:port tuples). It does the load balancing etc. The kube-proxy implements the service abstraction.

Services are the primary mode of communication in Kubernetes, so we need a way to discover them at runtime. Kubernetes supports 2 methods of discovering a service:

- Environment Variables

As soon as a Pod starts on a worker node, the kubelet daemon running on that node adds a set of environment variables to the Pod for all the active Services. Only Services that already exist when the Pod is created are included.

Eg: consider a service redis-master, with exposed port 6379 and ClusterIP as 172.17.0.6

This leads to the following environment variables being set in the Pods:

```
REDIS_MASTER_SERVICE_HOST=172.17.0.6
REDIS_MASTER_SERVICE_PORT=6379
REDIS_MASTER_PORT=tcp://172.17.0.6:6379
REDIS_MASTER_PORT_6379_TCP=tcp://172.17.0.6:6379
REDIS_MASTER_PORT_6379_TCP_PROTO=tcp
REDIS_MASTER_PORT_6379_TCP_PORT=6379
REDIS_MASTER_PORT_6379_TCP_ADDR=172.17.0.6
```

- DNS

Kubernetes has an add-on for DNS, which creates a DNS record for each Service and its format is my-svc.my-namespace.svc.cluster.local.

Services within the same Namespace can be reached by name alone. For example, if we add a Service redis-master in the my-ns Namespace, all the Pods in the same Namespace can reach it just by using its name, redis-master. Pods from other Namespaces can reach the Service by adding the respective Namespace as a suffix, like redis-master.my-ns.

This method is recommended.

While defining a Service, we can also choose its scope. We can decide whether the Service

- is accessible only within the cluster

- is accessible from within the cluster AND the external world

- maps to an external entity which resides outside the cluster

The scope is decided with the ServiceType declared when creating the service.

Service types - ClusterIP, NodePort

ClusterIP is the default ServiceType. A service gets its virtual IP using the ClusterIP. This IP is used for communicating with the service and is accessible only within the cluster

With the NodePort ServiceType, in addition to creating a ClusterIP, a port from the range 30000-32767 is mapped to the Service on all the worker nodes.

Eg: if frontend-svc has the NodePort 32233, then when we connect to any worker node on port 32233, the packets are routed to the assigned ClusterIP 172.17.0.4.

NodePort is useful when we want to make our service accessible to the outside world. The end user connects to the worker nodes on the specified port, which forwards the traffic to the applications running inside the cluster.

To access the Service from the outside world, we typically configure a reverse proxy outside the Kubernetes cluster that maps a specific endpoint to the respective port on the worker nodes.

There is another ServiceType: LoadBalancer

- With this ServiceType, NodePort and ClusterIP Services are automatically created, and the external load balancer routes to them

- The Services are exposed at a static port on each worker node.

- The Service is exposed externally using the underlying cloud provider’s load balancer (this only works if the underlying infrastructure supports automatic creation of load balancers).
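Here is a minimal LoadBalancer sketch that reuses the frontend selector from earlier. The name frontend-lb is hypothetical. On a supported cloud, the provider's load balancer address should show up under EXTERNAL-IP in kubectl get svc.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: frontend-lb        # hypothetical name
spec:
  type: LoadBalancer       # also gets a ClusterIP and a NodePort automatically
  selector:
    app: frontend
  ports:
  - protocol: TCP
    port: 80               # port exposed by the load balancer
    targetPort: 5000       # port on the Pods
```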

With ExternalIP, the cluster administrator can also manually map a Service to an external IP address. Traffic arriving on the ExternalIP (and the Service port) is routed to one of the Service endpoints. ExternalIPs are not managed by Kubernetes; the administrator has to configure the routing.

ExternalName is a special ServiceType that has no selectors or endpoints.

When accessed within a cluster, it returns a CNAME record of an externally configured service.

This is primarily used to make an externally configured service like my-db.aws.com available inside the cluster using just the name my-db to other services inside the same namespace.
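This is a sketch of the ExternalName case described above, assuming the external host is my-db.aws.com. Note that there is no selector, only the externalName field.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-db                   # what Pods in this namespace will look up
spec:
  type: ExternalName
  externalName: my-db.aws.com   # DNS returns a CNAME to this host
```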

Example of using NodePort

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-service
  labels:
    run: web-service
spec:
  type: NodePort
  ports:
  - port: 80
    protocol: TCP
  selector:
    app: nginx
```

Create it using:

```
$ kubectl create -f webserver-svc.yaml
service "web-service" created

$ kubectl get svc
NAME          TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes    ClusterIP   10.96.0.1      <none>        443/TCP        1d
web-service   NodePort    10.110.47.84   <none>        80:31074/TCP   12s
```

We can access it at $(NODE_IP):31074, where NODE_IP is the IP of any worker node.

This NodePort routes the traffic to the Service, which forwards it to port 80 on the Service endpoints.

(Recall that a Service endpoint is just a (Pod IP:targetPort) tuple; since this spec does not set targetPort, it defaults to the Service port, 80.)

Deploying MongoDB: we need a Deployment and a Service.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rsvp-db
  labels:
    appdb: rsvpdb
spec:
  replicas: 1
  selector:
    matchLabels:
      appdb: rsvpdb
  template:
    metadata:
      labels:
        appdb: rsvpdb
    spec:
      containers:
      - name: rsvp-db
        image: mongo:3.3
        ports:
        - containerPort: 27017
```

```
$ kubectl create -f rsvp-db.yaml
deployment "rsvp-db" created
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mongodb
  labels:
    app: rsvpdb
spec:
  ports:
  - port: 27017
    protocol: TCP
  selector:
    appdb: rsvpdb
```

```
$ kubectl create -f rsvp-db-service.yaml
service "mongodb" created
```

Liveness probes are used by the kubelet to check the health of the application running inside a Pod's container. A liveness probe is like the health check on AWS’ ELB: if the check fails, the container is restarted.

It can be defined as:

- liveness command

- liveness HTTP request

- TCP liveness probe

Example:

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-exec
spec:
  containers:
  - name: liveness
    image: k8s.gcr.io/busybox
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 3
      periodSeconds: 5
```

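Here, we start a container with a command which creates the file /tmp/healthy and then deletes it after 30 seconds.

Next, we define the livenessProbe to be a command which cats the file. If the file exists, the command succeeds and the container is considered healthy.

Once the file is deleted, the probe starts failing. After a few consecutive failures (failureThreshold, 3 by default), the kubelet restarts the container.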

We can also define a HTTP request as the liveness test:

```yaml
livenessProbe:
  httpGet:
    path: /healthz
    port: 8080
    httpHeaders:
    - name: X-Custom-Header
      value: Awesome
  initialDelaySeconds: 3
  periodSeconds: 3
```

Here, we hit the /healthz endpoint on port 8080

We can also use TCP liveness probes. The kubelet attempts to open a TCP socket to the container running the application. If it succeeds, the application is considered healthy; otherwise, the kubelet marks it as unhealthy and restarts the container.

```yaml
livenessProbe:
  tcpSocket:
    port: 8080
  initialDelaySeconds: 15
  periodSeconds: 20
```

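Sometimes, the Pod has to do some work before it can serve traffic, such as loading a file into memory or downloading some assets. We can use readiness probes to signal that the container (in the context of Kubernetes, containers and Pods are used interchangeably) is ready to receive traffic. Unlike a failing liveness probe, a failing readiness probe does not restart the container; the Pod is simply removed from the Service endpoints until the probe succeeds.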

```yaml
readinessProbe:
  exec:
    command:
    - cat
    - /tmp/healthy
  initialDelaySeconds: 5
  periodSeconds: 5
```

Kubernetes uses Volumes for persistent storage. We’ll talk about PersistentVolume and PersistentVolumeClaim, which help us attach volumes to Pods.

A Volume is essentially a directory backed by a storage medium.

A Volume is attached to a Pod and shared by the containers of that Pod. The volume has the same lifespan as the Pod and it outlives the containers of the Pod - it allows data to be preserved across container restarts.

A directory which is mounted inside a Pod is backed by the underlying Volume Type. The Volume Type decides the properties of the directory, like: size, content etc

There are several volume types:

- emptyDir

An empty Volume is created for the Pod as soon as it is scheduled on the worker node. The Volume’s life is coupled with the Pod’s: when the Pod dies, the contents of the emptyDir Volume are deleted.

- hostPath

We can share a directory from the host to the Pod. If the Pod dies, the contents of the hostPath still exist. Their use is not recommended because not all the hosts would have the same directory structure

- gcePersistentDisk

We can mount Google Compute Engine’s PD (persistent disk) into a Pod

- awsElasticBlockStore

We can mount AWS EBS into a Pod

- nfs

We can mount an NFS share into a Pod

- iscsi

We can mount iSCSI storage into a Pod. iSCSI (Internet Small Computer Systems Interface) is an IP-based storage networking standard for linking data storage facilities.

- secret

With the secret volume type, we can pass sensitive information such as passwords to pods.

- persistentVolumeClaim

We can attach a PersistentVolume to a pod using PersistentVolumeClaim. PVC is a volume type
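To make the volume types above concrete, here is a minimal sketch of two containers in one Pod sharing an emptyDir Volume. One container appends to a file and the other reads it. Both see the same directory because the Volume belongs to the Pod, not to either container. The Pod, container and volume names are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-demo      # hypothetical name
spec:
  containers:
  - name: writer                # appends a timestamp every 5 seconds
    image: busybox
    command: ["/bin/sh", "-c", "while true; do date >> /data/log.txt; sleep 5; done"]
    volumeMounts:
    - name: scratch
      mountPath: /data
  - name: reader                # follows the same file
    image: busybox
    command: ["/bin/sh", "-c", "touch /data/log.txt; tail -f /data/log.txt"]
    volumeMounts:
    - name: scratch
      mountPath: /data
  volumes:
  - name: scratch
    emptyDir: {}                # deleted together with the Pod
```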

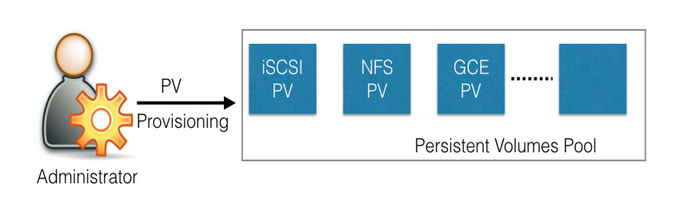

In a typical setup, storage is maintained by the system administrators. The developer just gets instructions to use the storage, and doesn’t have to worry about provisioning etc

Using vanilla Volume Types makes the same model difficult in Kubernetes. So we have the PersistentVolume (PV) subsystem, which provides APIs for users and administrators to manage and consume storage of the above Volume Types:

- To manage - the PV API resource type

- To consume - the PersistentVolumeClaim (PVC) API resource type

PVs can also be dynamically provisioned using the StorageClass resource. A StorageClass contains pre-defined provisioners and parameters to create a PV. It works like this: the user creates a PVC that references a StorageClass, and this results in the creation of a matching PV.
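This sketch of dynamic provisioning assumes an AWS cluster with the in-tree kubernetes.io/aws-ebs provisioner. Newer clusters use the EBS CSI driver, ebs.csi.aws.com, instead. The class name fast and the claim name dynamic-claim are hypothetical.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast                        # hypothetical class name
provisioner: kubernetes.io/aws-ebs  # assumption: in-tree AWS EBS provisioner
parameters:
  type: gp2
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dynamic-claim
spec:
  storageClassName: fast            # creating this claim triggers PV creation
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
```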

Some of the Volume Types that support managing storage using PV:

- GCEPersistentDisk

- AWSElasticBlockStore

- AzureFile

- NFS

- iSCSI

A PVC is a request for storage by the user. User requests PV resources based on size, access modes etc. Once a suitable PV is found, it is bound to a PVC.

The administrator provisions PVs, the user requests them using PVC. Once the suitable PVs are found, they are bound to the PVC and given to the user to use.

After use, the PV can be released. The underlying PV can then be reclaimed and used by someone else.
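The administrator/user split above can be sketched with three objects: a statically provisioned NFS PV, a claim that binds to it, and a Pod that mounts the claim. The NFS server address, export path and object names are hypothetical. Setting storageClassName: "" keeps the claim from triggering dynamic provisioning.

```yaml
# Administrator side: a pre-provisioned PV
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain   # keep the data after the claim is released
  nfs:
    server: nfs.example.com               # hypothetical NFS server
    path: /exports/data
---
# User side: request storage by size and access mode
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-claim
spec:
  storageClassName: ""                    # bind only to pre-provisioned PVs
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
---
# The Pod refers to the claim, not to the NFS details
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
  - name: app
    image: nginx
    volumeMounts:
    - name: data
      mountPath: /usr/share/nginx/html
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: nfs-claim
```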

Note: Kubernetes plugin interfaces are commonly named CxI - Container X Interface (eg: CNI, CSI, CRI etc).

We have several COs - Container Orchestrators (Kubernetes, Mesos, Cloud Foundry). Each manages volumes in its own way. This made things difficult for storage vendors, as they had to support all the different COs. Also, the code written by the vendors had to live “in-tree” in the COs and was tied to the release cycle of the COs. This is not ideal.

So, the volume interface is standardized now so that a volume plugin using the CSI would work for all COs.

While deploying an application, we may need to pass runtime parameters like endpoints, passwords etc. To do this we can use ConfigMap API resource.

We can use ConfigMaps to pass key-value pairs, which can be consumed by Pods or by other system components like controllers. There are 2 ways to create ConfigMaps: from literal values, or from a configuration file.

Recall literal values are just values defined “in-place”:

```
$ kubectl create configmap my-config --from-literal=key1=value1 --from-literal=key2=value2
configmap "my-config" created
```

```yaml
# customer1-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: customer1
data:
  TEXT1: Customer1_Company
  TEXT2: Welcomes You
  COMPANY: Customer1 Company Technology Pct. Ltd.
```

```
$ kubectl create -f customer1-configmap.yaml
configmap "customer1" created
```

We can use the ConfigMap values from inside the Pod using:

```yaml
....
containers:
- name: rsvp-app
  image: teamcloudyuga/rsvpapp
  env:
  - name: MONGODB_HOST
    value: mongodb
  - name: TEXT1
    valueFrom:
      configMapKeyRef:
        name: customer1
        key: TEXT1
  - name: TEXT2
    valueFrom:
      configMapKeyRef:
        name: customer1
        key: TEXT2
  - name: COMPANY
    valueFrom:
      configMapKeyRef:
        name: customer1
        key: COMPANY
....
```

We can also mount a ConfigMap as a Volume inside a Pod. For each key, we will see a file in the mount path and the content of that file becomes the respective key’s value.
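This minimal sketch mounts the customer1 ConfigMap from above. The Pod name and mount path are hypothetical. The container should see the files /etc/customer/TEXT1, /etc/customer/TEXT2 and /etc/customer/COMPANY.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: configmap-volume-demo    # hypothetical name
spec:
  containers:
  - name: app
    image: busybox
    command: ["/bin/sh", "-c", "cat /etc/customer/TEXT1; sleep 3600"]
    volumeMounts:
    - name: customer-config
      mountPath: /etc/customer   # one file per key appears here
  volumes:
  - name: customer-config
    configMap:
      name: customer1
```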

Secrets are similar to ConfigMaps in that they are key-value pairs that can be passed on to Pods etc. The main difference is that they deal with sensitive information like passwords, tokens, keys etc.

By default, the Secret data is stored as plain text (unencrypted) inside etcd, so administrators must restrict access to the API server and etcd.

We can create a Secret from a literal value:

$ kubectl create secret generic my-password --from-literal=password=mysqlpassword

The above command would create a secret called my-password, which has the value of the password key set to mysqlpassword.

Analyzing the get and describe examples below, we can see that they do not reveal the content of the Secret. The type is listed as Opaque.

```
$ kubectl get secret my-password
NAME          TYPE     DATA   AGE
my-password   Opaque   1      8m

$ kubectl describe secret my-password
Name:         my-password
Namespace:    default
Labels:       <none>
Annotations:  <none>

Type:  Opaque

Data
====
password.txt:  13 bytes
```

We can also create a Secret manually using a YAML configuration file. With Secrets, each value under data must be base64-encoded.

So:

```
# get the base64 encoding of the password
# (-n stops echo from appending a newline, which would otherwise be encoded too)
$ echo -n mysqlpassword | base64
bXlzcWxwYXNzd29yZA==
```

```yaml
# now use it to create a Secret
apiVersion: v1
kind: Secret
metadata:
  name: my-password
type: Opaque
data:
  password: bXlzcWxwYXNzd29yZA==
```

Base64 is an encoding, not encryption, so decoding is easy:

```
$ echo "bXlzcWxwYXNzd29yZA==" | base64 --decode
```

Like ConfigMaps, we can use Secrets in Pods using:

- environment variables

```yaml
.....
spec:
  containers:
  - image: wordpress:4.7.3-apache
    name: wordpress
    env:
    - name: WORDPRESS_DB_HOST
      value: wordpress-mysql
    - name: WORDPRESS_DB_PASSWORD
      valueFrom:
        secretKeyRef:
          name: my-password
          key: password
.....
```

- mounting secrets as a volume inside a Pod. A file would be created for each key mentioned in the Secret whose content would be the respective value.
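Here is a similar sketch for the my-password Secret created earlier. The Pod name and mount path are hypothetical. Each key becomes a file, so the password ends up in /etc/db-creds/password. The container only lists the directory rather than printing the secret.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: secret-volume-demo     # hypothetical name
spec:
  containers:
  - name: app
    image: busybox
    command: ["/bin/sh", "-c", "ls /etc/db-creds; sleep 3600"]
    volumeMounts:
    - name: db-creds
      mountPath: /etc/db-creds
      readOnly: true
  volumes:
  - name: db-creds
    secret:
      secretName: my-password  # one file per key in the Secret
```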

We earlier saw how we can access our deployed containerized application from the external world using Services. We talked about LoadBalancer ServiceType which gives us a load balancer on the underlying cloud platform. This can get expensive if we use too many Load Balancers.

We also talked about NodePort, which gives us a port on each worker node; we can then have a reverse proxy that routes requests to the (node-ip:nodePort) tuples. However, this can get tricky, as we need to keep track of the assigned ports etc.

Kubernetes has Ingress which is another method we can use to access our applications from the external world.

With Services, routing rules are attached to a given Service and exist only as long as the Service exists. If we decouple the routing rules from the application, we can update our application without worrying about its external access.

The Ingress resource helps us do that.

According to kubernetes.io

an ingress is a collection of rules that allow inbound connections to reach the cluster Services

To allow inbound connections to reach the cluster Services, Ingress configures an L7 HTTP load balancer for Services and provides the following:

- TLS - transport layer security

- Name based virtual hosting

- Path based routing

- Custom rules

With Ingress, users don’t connect directly to a Service. They reach the Ingress endpoint, and from there, the request is forwarded to the respective Service. (Note the word request rather than packets, since Ingress is an L7 load balancer.)

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web-ingress
  namespace: default
spec:
  rules:
  - host: blue.example.com
    http:
      paths:
      - backend:
          serviceName: webserver-blue-svc
          servicePort: 80
  - host: green.example.com
    http:
      paths:
      - backend:
          serviceName: webserver-green-svc
          servicePort: 80
```

The requests for both (blue.example.com and green.example.com) will come to the same Ingress endpoint which will route it to the right Service endpoint

The example above is a name-based virtual hosting Ingress rule.

We can also have fan out ingress rules, in which we send the requests like example.com/blue and example.com/green which would be forwarded to the correct Service
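This fan-out sketch routes example.com/blue and example.com/green, reusing the two Services from the earlier example. It assumes the newer networking.k8s.io/v1 Ingress API, which requires pathType and a nested backend.service block. The extensions/v1beta1 version used above was removed in Kubernetes 1.22.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: fan-out-ingress          # hypothetical name
  namespace: default
spec:
  rules:
  - host: example.com
    http:
      paths:
      - path: /blue              # example.com/blue -> blue Service
        pathType: Prefix
        backend:
          service:
            name: webserver-blue-svc
            port:
              number: 80
      - path: /green             # example.com/green -> green Service
        pathType: Prefix
        backend:
          service:
            name: webserver-green-svc
            port:
              number: 80
```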

The Ingress resource uses the Ingress Controller which does the request forwarding.

It is an application that watches the master node’s API server for changes in the ingress resources and updates the L7 load balancer accordingly.

Kubernetes has several different Ingress Controllers (eg: Nginx Ingress Controller) and you can write yours too.

Once the controller is deployed (recall it’s a normal application) we can use it with an ingress resource

$ kubectl create -f webserver-ingress.yaml

Kubernetes also has features like auto-scaling, rollbacks, quota management etc

With annotations, we can attach arbitrary non-identifying metadata to any object, in a key-value format:

```json
"annotations": {
  "key1" : "value1",
  "key2" : "value2"
}
```

They are not used to identify and select objects, but for:

- storing release ids, git branch info etc

- phone/pager numbers

- pointers to logging etc

- descriptions

Example:

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: webserver
  annotations:
    description: Deployment based PoC dates 2nd June'2017
....
....
```

Annotations can be looked at by using describe

$ kubectl describe deployment webserver

If we have recorded our Deployment before doing an update, we can revert to a known working state if the deployment fails.

Deployments also have features like:

- autoscaling

- proportional scaling

- pausing and resuming

A Deployment automatically creates a ReplicaSet, which makes sure the desired number of Pods are running (containers that fail their liveness probe are restarted by the kubelet).

A Job creates 1 or more Pods to perform a given task. The Job object takes the responsibility of Pod failures and makes sure the task is completed successfully. After the task, the Pods are terminated automatically. We can also have cron jobs etc
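Here is a minimal sketch of both, assuming the batch/v1 API. CronJob reached batch/v1 in Kubernetes 1.21; older clusters use batch/v1beta1. The names, images and schedule are hypothetical. backoffLimit caps how many times the Job retries failed Pods.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: pi                      # hypothetical name
spec:
  backoffLimit: 4               # give up after 4 retries
  template:
    spec:
      containers:
      - name: pi
        image: perl
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
      restartPolicy: Never      # Jobs must use Never or OnFailure
---
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello                   # hypothetical name
spec:
  schedule: "*/5 * * * *"       # standard cron syntax: every 5 minutes
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            command: ["/bin/sh", "-c", "date; echo Hello"]
          restartPolicy: OnFailure
```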

In a multi tenant deployment, fair usage is vital. Administrators can use ResourceQuota object to limit resource consumption per Namespace

We can have the following types of quotas per namespace:

- Compute Resource Quota

We can limit the compute resources (CPU, memory etc) that can be requested in a given namespace

- Storage Resource Quota

We can limit the storage resources (PVC, requests.storage etc)

- Object Count Quota

We can restrict the number of objects of a given type (Pods, ConfigMaps, PVC, ReplicationControllers, Services, Secrets etc)

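Quotas are enforced by the ResourceQuota admission controller when objects are created; the CPU and memory limits of running containers are enforced on the node using cgroups.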
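This sketch covers all three quota types for a hypothetical team-a namespace. The numbers are arbitrary. Once a compute quota like this exists, creating a Pod without CPU/memory requests and limits in that namespace may be rejected, since the quota needs those values to account for usage.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota             # hypothetical name
  namespace: team-a              # hypothetical namespace
spec:
  hard:
    # compute resource quota
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    # storage resource quota
    requests.storage: 100Gi
    persistentvolumeclaims: "10"
    # object count quota
    pods: "20"
    services: "10"
    secrets: "20"
    configmaps: "20"
```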

If we want a “ghost” Pod (a Pod that runs on all nodes at all times), eg: to collect monitoring data from every node, we can use the DaemonSet object.

Whenever a node is added to the cluster, a Pod from a given DaemonSet is created on it. If the DaemonSet is deleted, all Pods are deleted as well.
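This minimal DaemonSet sketch assumes the Prometheus node exporter image, prom/node-exporter, as the per-node monitoring agent. The object names are hypothetical. There is no replicas field, because the number of Pods follows the number of nodes.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-monitor             # hypothetical name
spec:
  selector:
    matchLabels:
      name: node-monitor
  template:
    metadata:
      labels:
        name: node-monitor
    spec:
      containers:
      - name: node-exporter
        image: prom/node-exporter   # assumption: exporter as the monitoring agent
        ports:
        - containerPort: 9100
```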

The StatefulSet controller is used for applications that require a unique identity such as a stable name, network identity, strict ordering etc - eg: a MySQL cluster, an etcd cluster.

The StatefulSet controller provides identity and guaranteed ordering of deployment and scaling to Pods.
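This sketch assumes a hypothetical mysql StatefulSet with a headless Service. The Pods get stable names (mysql-0, mysql-1 and mysql-2) and are created in that order. Each Pod also gets its own PVC from volumeClaimTemplates. This only provides identity and storage; it does not configure MySQL replication between the instances.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  clusterIP: None            # headless: gives each Pod a DNS name like mysql-0.mysql
  selector:
    app: mysql
  ports:
  - port: 3306
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
spec:
  serviceName: mysql         # the headless Service above
  replicas: 3
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: MYSQL_ALLOW_EMPTY_PASSWORD   # demo only, never in production
          value: "1"
        ports:
        - containerPort: 3306
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
  volumeClaimTemplates:      # one PVC per Pod: data-mysql-0, data-mysql-1, ...
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 10Gi
```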

We can manage multiple Kubernetes clusters from a single control plane using Kubernetes Federation. We can sync resources across the clusters and have cross-cluster discovery, allowing us to do Deployments across regions and access them using a global DNS record.

The Federation is very useful when we want to build a hybrid solution, in which we can have one cluster running inside our private datacenter and another one on the public cloud. We can also assign weights for each cluster in the Federation, to distribute the load as per our choice.

In Kubernetes, a resource is an API endpoint. It stores a collection of API objects. Eg: a Pod resource contains all the Pod objects.

If the existing Kubernetes resources are not sufficient to fulfill our requirements, we can create new resources using custom resources

To make a resource declarative (like the rest of Kubernetes), we have to write a custom controller, which can interpret the resource structure and perform the required actions.

There are 2 ways of adding custom resources:

- CRDs - custom resource definitions

- API aggregation

Aggregated APIs are subordinate API servers which sit behind the primary API server, which acts as a proxy for them. They offer more fine grained control.
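This sketch of the CRD route closely follows the CronTab example from the Kubernetes documentation (group stable.example.com) and assumes the apiextensions.k8s.io/v1 API. The second object is an instance of the new type. Without a custom controller, Kubernetes only stores that instance and does nothing with it.

```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: crontabs.stable.example.com   # must be <plural>.<group>
spec:
  group: stable.example.com
  scope: Namespaced
  names:
    plural: crontabs
    singular: crontab
    kind: CronTab
    shortNames:
    - ct
  versions:
  - name: v1
    served: true
    storage: true
    schema:
      openAPIV3Schema:
        type: object
        properties:
          spec:
            type: object
            properties:
              cronSpec:
                type: string
              image:
                type: string
---
apiVersion: stable.example.com/v1
kind: CronTab
metadata:
  name: my-cron-object
spec:
  cronSpec: "* * * * */5"
  image: my-awesome-cron-image
```

Create the CRD first. The CronTab object can only be created once the new type has been registered.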

When we deploy an application on Kubernetes, we have to deal with a lot of manifests (the yaml containing the spec) such as Deployments, Services, Volume Claims, Ingress etc. It can be too much work to deploy them one by one, especially for common use cases like deploying a redis cluster.

We can bundle these manifests after templatizing them into a well-defined format (with some metadata). This becomes a package essentially - we call them Charts. They can then be served by package managers like Helm.

Helm is a package manager (analogous to yum and apt) for Kubernetes, which can install/update/delete those Charts in the Kubernetes cluster.

Helm has two components:

- A client called helm, which runs on your user’s workstation

- A server called tiller, which runs inside your Kubernetes cluster (this is Helm 2; Helm 3 removed tiller, and the client talks to the API server directly).

The client helm connects to the server tiller to manage Charts

For monitoring, 2 popular solutions are:

- Heapster

It is a cluster wide aggregator of monitoring and event data which is natively supported on Kubernetes.

- Prometheus

It can also be used to scrape resource usage from different Kubernetes components and objects.

We can collect logs from the different components of Kubernetes using fluentd, which is an open source data collector. We can ship the logs to Elasticsearch etc.

Kubernetes would like to thank every sysadmin who has woken up at 3am to restart a process.

Kubernetes intends to radically simplify the task of building, deploying, and maintaining distributed systems.

From the first programming languages, to object-oriented programming, to the development of virtualization and cloud infrastructure, the history of computer science is a history of the development of abstractions that hide complexity and empower you to build ever more sophisticated applications.

What matters is the speed with which you can deploy new features and components while keeping the service reliably up. Kubernetes enables this through immutability, declarative configuration and self-healing systems.

Containers and Kubernetes encourage developers to build distributed systems that adhere to the principles of immutable infrastructure.

With immutable infrastructure, once an artifact is created in the system it does not change via user modifications.

Traditionally, this was not the case, they were treated as mutable infrastructure. With mutable infrastructure, changes are applied as incremental updates to an existing system.

A system upgrade via the apt-get update tool is a good example of an update to a mutable system. Running apt sequentially downloads any updated binaries, copies them on top of older binaries, and makes incremental updates to configuration files.

In contrast, in an immutable system, rather than a series of incremental updates and changes, an entirely new, complete image is built, where the update simply replaces the entire image with the newer image in a single operation. There are no incremental changes.

In Draup, we have the artifact which is the source code, which is replaced by a new git clone, but the pip packages etc may be updated/removed incrementally. Hence, we use mutable infrastructure. In services, we have docker compose, and subsequently immutable infrastructure.

Consider containers. What would you rather do?

- You can log in to a container, run a command to download your new software, kill the old server, and start the new one.

- You can build a new container image, push it to a container registry, kill the existing container, and start a new one.

In the 2nd case, the entire artifact replacement makes it easy to track changes that you made, and also to rollback your changes. Go-Jek’s VP’s lecture during the recent #go-include meetup comes to mind, where he spoke about “snowflakes” that are created by mutable infrastructure.

Everything in Kubernetes is a declarative configuration object that represents the desired state of the system. It is Kubernetes’s job to ensure that the actual state of the world matches this desired state.

Declarative configuration is an alternative to imperative configuration, where the state of the world is defined by the execution of a series of instructions rather than by a declaration of the desired state of the world.

While imperative commands define actions, declarative configurations define state.

To understand these two approaches, consider the task of producing three replicas of a piece of software. With an imperative approach, the configuration would say: “run A, run B, and run C.” The corresponding declarative configuration would be “replicas equals three.”

As a concrete example of the self-healing behavior, if you assert a desired state of three replicas to Kubernetes, it does not just create three replicas — it continuously ensures that there are exactly three replicas. If you manually create a fourth replica Kubernetes will destroy one to bring the number back to three. If you manually destroy a replica, Kubernetes will create one to again return you to the desired state.
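In Kubernetes terms, the declarative “replicas equals three” is a single field in a Deployment spec. Here is a minimal sketch; the names and image tag are hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webserver          # hypothetical name
spec:
  replicas: 3              # desired state, continuously enforced
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.15
        ports:
        - containerPort: 80
```

If one of the three Pods is deleted by hand, the ReplicaSet behind this Deployment creates a replacement to get back to three.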

Kubernetes achieves and enables scaling by favoring decoupled architectures.

In a decoupled architecture each component is separated from other components by defined APIs and service load balancers.

Decoupling components via load balancers makes it easy to scale the programs that make up your service, because increasing the size (and therefore the capacity) of the program can be done without adjusting or reconfiguring any of the other layers of your service. Each can be scaled independently.

Decoupling servers via APIs makes it easier to scale the development teams because each team can focus on a single, smaller microservice with a comprehensible surface area

Crisp APIs between microservices (defining an interface b/w services) limit the amount of cross-team communication overhead required to build and deploy software. Hence, teams can be scaled effectively.

We can have autoscaling at 2 levels

- pods

- they can be configured to be scaled up or down depending on some predefined condition

- this assumes that the nodes have resources to support the new number of pods

- cluster nodes

- since each node is exactly like the previous one, adding a new node to the cluster is trivial and can be done with a few commands or a prebaked image.
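Pod-level autoscaling is done with a HorizontalPodAutoscaler. This sketch targets a hypothetical webserver Deployment and assumes the autoscaling/v2 API plus a metrics source such as metrics-server in the cluster. The target Pods also need CPU requests so that utilization can be computed.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: webserver            # hypothetical name
spec:
  scaleTargetRef:            # the workload to scale
    apiVersion: apps/v1
    kind: Deployment
    name: webserver
  minReplicas: 3
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70   # aim for ~70% of requested CPU per Pod
```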

Also, since Kubernetes allows us to bin pack, we can place containers from different services onto a single server. This reduces statistical noise and allows us to have a more reliable forecast about growth of different services.

Here, we see each team is decoupled by APIs. The Hardware Ops team has to provide the hardware. The kernel team just needs the hardware to make sure their kernel is providing the system calls API. The cluster team needs that API so that they can provision the cluster. The application developers need the kube API to run their apps. Everyone is happy.

When your developers build their applications in terms of container images and deploy them in terms of portable Kubernetes APIs, transferring your application between environments, or even running in hybrid environments, is simply a matter of sending the declarative config to a new cluster.

Kubernetes has a number of plug-ins that can abstract you from a particular cloud. For example, Kubernetes services know how to create load balancers on all major public clouds as well as several different private and physical infrastructures. Likewise, Kubernetes PersistentVolumes and PersistentVolumeClaims can be used to abstract your applications away from specific storage implementations.

Container images bundle an application and its dependencies, under a root filesystem, into a single artifact. The most popular container image format is the Docker image format, the primary image format supported by Kubernetes.

Docker images also include additional metadata used by a container runtime to start a running application instance based on the contents of the container image.

Each layer adds, removes, or modifies files from the preceding layer in the filesystem. This is an example of an overlay filesystem. There are a variety of different concrete implementations of such filesystems, including aufs, overlay, and overlay2.

There are several gotchas that come when people begin to experiment with container images that lead to overly large images. The first thing to remember is that files that are removed by subsequent layers in the system are actually still present in the images; they’re just inaccessible.

Another pitfall that people fall into revolves around image caching and building. Remember that each layer is an independent delta from the layer below it. Every time you change a layer, it changes every layer that comes after it. Changing the preceding layers means that they need to be rebuilt, repushed, and repulled to deploy your image to development.

In general, you want to order your layers from least likely to change to most likely to change in order to optimize the image size for pushing and pulling.

In the 1st case, every time the server.js file changes, the node package layer has to be pushed and pulled.

Kubernetes relies on the fact that images described in a Pod manifest are available on every machine in the cluster, so that the scheduler can schedule Pods on any node.

Recall we heard this point at the Kubernetes meetup organized by the Red Hat guys at Go-Jek.

Docker provides an API for creating application containers on Linux and Windows systems. Note, docker now has windows containers as well.

It’s important to note that unless you explicitly delete an image it will live on your system forever, even if you build a new image with an identical name. Building this new image simply moves the tag to the new image; it doesn’t delete or replace the old image. Consequently, as you iterate while you are creating a new image, you will often create many, many different images that end up taking up unnecessary space on your computer. To see the images currently on your machine, you can use the docker images command.

kubectl can be used to manage most Kubernetes objects such as pods, ReplicaSets, and services. kubectl can also be used to explore and verify the overall health of the cluster.

Describing a node (kubectl describe nodes <node-name>) gives us information about the instance OS, memory, hard disk space, Docker version, running Pods etc. For example, the Pods section looks like this:

```
Non-terminated Pods:         (2 in total)
  Namespace    Name               CPU Requests  CPU Limits  Memory Requests  Memory Limits
  ---------    ----               ------------  ----------  ---------------  -------------
  kube-system  aws-node-hl4lc     10m (0%)      0 (0%)      0 (0%)           0 (0%)
  kube-system  kube-proxy-bgblb   100m (5%)     0 (0%)      0 (0%)           0 (0%)
```

Here, note the “requests” and “limits”. Requests are the resources requested by the Pod, and they are guaranteed to be available to it. The “limit” is the maximum amount of resources the Pod can consume.

A pod’s limit can be higher than its request, in which case the extra resources are supplied on a best-effort basis. They are not guaranteed to be present on the node.
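This minimal sketch shows how requests and limits are declared per container; the values are arbitrary. A container that exceeds its memory limit is OOM-killed, while one that exceeds its CPU limit is throttled.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: resource-demo      # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
    resources:
      requests:            # used by the scheduler; guaranteed to the container
        cpu: 100m          # 0.1 CPU
        memory: 128Mi
      limits:              # hard ceiling; extra above requests is best-effort
        cpu: 500m
        memory: 256Mi
```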

Many of the components of Kubernetes are deployed using Kubernetes itself. All of these components run in the kube-system namespace.

kube-proxy implements the “service” abstraction. It is responsible for routing network traffic to load balanced services in the Kubernetes cluster.

kube-proxy is implemented in Kubernetes using the DaemonSet object.

Kubernetes also runs a DNS server, which provides naming and discovery for the services that are defined in the cluster. This DNS server also runs as a replicated service on the cluster.

There is also a Kubernetes service that performs load balancing for the DNS server:

```
$ kubectl get services --namespace=kube-system kube-dns
NAME       TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)         AGE
kube-dns   ClusterIP   10.100.0.10   <none>        53/UDP,53/TCP   1h
```

In every container, the DNS server is set to this internal IP (the kube-dns ClusterIP) in the container's /etc/resolv.conf file.
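This Python sketch reads the nameserver out of a container's /etc/resolv.conf. It also shows the DNS name that a service such as kube-dns gets. The resolv.conf text is illustrative, not captured from a real Pod. The cluster domain "cluster.local" is only the common default and is configurable per cluster. Both function names are hypothetical.

```python
def nameservers(resolv_conf: str) -> list[str]:
    """Return the IPs listed on 'nameserver' lines of a resolv.conf file."""
    servers = []
    for line in resolv_conf.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Build a Service's DNS name; 'cluster.local' is an assumed (default) cluster domain."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# Illustrative resolv.conf for a Pod in the default namespace (assumed contents).
SAMPLE = """\
nameserver 10.100.0.10
search default.svc.cluster.local svc.cluster.local cluster.local
options ndots:5
"""

print(nameservers(SAMPLE))                      # ['10.100.0.10'], the kube-dns ClusterIP
print(service_fqdn("kube-dns", "kube-system"))  # kube-dns.kube-system.svc.cluster.local
```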

Needs to be deployed.

There are some basic kubectl commands that apply to all Kubernetes objects.

Kubernetes uses namespaces to organize objects in the cluster. By default, kubectl uses the default namespace. To use a different namespace, pass kubectl the --namespace flag.

If you want to change the default namespace more permanently, you can use a context.

A context is like a set of settings. It can either have just a different namespace configuration, or can even point to a whole new cluster.

Note that creating and switching contexts is recorded in the kubeconfig file, $HOME/.kube/config.

Let’s create a different namespace context:

kubectl config set-context my-context --namespace=mystuff

This creates a new context, but it doesn’t actually start using it yet. To use this newly created context, you can run:

$ kubectl config use-context my-context
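To show how a context changes the namespace kubectl talks to, here is a simplified Python sketch. It represents the kubeconfig as a dict instead of YAML. It picks the current context's namespace, falls back to "default", and lets an explicit --namespace flag win. The function name and the second context entry are hypothetical. The sketch also ignores details such as merging multiple kubeconfig files.

```python
from typing import Optional


def effective_namespace(kubeconfig: dict, namespace_flag: Optional[str] = None) -> str:
    """Resolve the namespace kubectl would use (simplified sketch)."""
    if namespace_flag:  # an explicit --namespace always wins
        return namespace_flag
    current = kubeconfig.get("current-context")
    for entry in kubeconfig.get("contexts", []):
        if entry["name"] == current:
            return entry["context"].get("namespace") or "default"
    return "default"


# Roughly what the set-context and use-context commands above leave behind,
# written as a dict; the "other" context is a made-up extra entry.
config = {
    "current-context": "my-context",
    "contexts": [
        {"name": "other", "context": {}},
        {"name": "my-context", "context": {"namespace": "mystuff"}},
    ],
}

print(effective_namespace(config))                         # mystuff
print(effective_namespace(config, namespace_flag="prod"))  # prod
```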

Everything contained in Kubernetes is represented by a RESTful resource.

Each Kubernetes object exists at a unique HTTP path; for example, https://your-k8s.com/api/v1/namespaces/default/pods/my-pod leads to the representation of a pod in the default namespace named my-pod. The kubectl command makes HTTP requests to these URLs to access the Kubernetes objects that reside at these paths. By default, kubectl get prunes its output so that each object fits on a single line. To see more, use -o wide, -o json, or -o yaml.
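The path in the example follows a regular pattern, which this small Python sketch builds. Objects in the core API group live under /api/v1. Objects in named groups, such as apps for Deployments, live under /apis/<group>/<version>. The helper name object_path is hypothetical, and the sketch only covers namespaced objects.

```python
def object_path(resource: str, name: str, namespace: str = "default",
                group: str = "", version: str = "v1") -> str:
    """Build the API path of a namespaced object (sketch)."""
    # Core group ("") objects live under /api; named groups under /apis/<group>.
    prefix = f"/api/{version}" if not group else f"/apis/{group}/{version}"
    return f"{prefix}/namespaces/{namespace}/{resource}/{name}"


print(object_path("pods", "my-pod"))
# /api/v1/namespaces/default/pods/my-pod
print(object_path("deployments", "kuard", group="apps"))
# /apis/apps/v1/namespaces/default/deployments/kuard
```

You can fetch such a path directly through kubectl with kubectl get --raw /api/v1/namespaces/default/pods/my-pod.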

The most basic command for viewing Kubernetes objects via kubectl is get.

E.g., kubectl get <resource-type> lists all resources of that type in the current namespace.

To get a particular resource: kubectl get <resource-type> <object-name>

kubectl uses the JSONPath query language to select fields in the returned object.

kubectl get pods my-pod -o jsonpath --template={.status.podIP}
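To make the idea of "selecting a field" concrete, here is a Python sketch that evaluates only the simplest kind of template, a dotted path like {.status.podIP}, against a decoded object. It is deliberately much smaller than kubectl's JSONPath support (no filters, wildcards or array indexes), and the function name and Pod values are made up.

```python
from typing import Any


def simple_jsonpath(obj: Any, template: str) -> Any:
    """Evaluate a dotted template such as '{.status.podIP}' (no filters, wildcards or indexes)."""
    path = template.strip()
    if not (path.startswith("{.") and path.endswith("}")):
        raise ValueError(f"unsupported template: {template!r}")
    for key in path[2:-1].split("."):  # "{.status.podIP}" -> ["status", "podIP"]
        obj = obj[key]
    return obj


# Trimmed-down stand-in for `kubectl get pods my-pod -o json` (values are made up).
pod = {"metadata": {"name": "my-pod"}, "status": {"phase": "Running", "podIP": "192.168.1.23"}}

print(simple_jsonpath(pod, "{.status.podIP}"))  # 192.168.1.23
```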

Objects in the Kubernetes API are represented as JSON or YAML files. These files are either returned by the server in response to a query or posted to the server as part of an API request.

To delete the objects described in a file: $ kubectl delete -f obj.yaml

Labels and annotations are tags for your objects.

You can update the labels and annotations on any Kubernetes object using the annotate and label commands. For example, to add the color=red label to a pod named bar, you can run:

$ kubectl label pods bar color=red

To view logs of a container: $ kubectl logs <pod-name>

To execute a command in a container: $ kubectl exec -it <pod-name> -- bash

To copy files to and from a container, use the cp command: $ kubectl cp <pod-name>:/path/to/remote/file /path/to/local/file

Containers in a Pod can share volumes, for example a common filesystem directory.

Here, the web-serving and Git containers are part of the same logical group, so they are in the same Pod. But they are still separate containers, since we don't want a memory leak in one to get the other OOM-killed (terminated for running out of memory).

The name goes with the whale theme of Docker containers, since a Pod is also a group of whales.

Each container within a Pod runs in its own cgroup (which means it has its own limits on resource usage), but the containers share a number of Linux namespaces (e.g., network).

Applications running in the same Pod share the same IP address and port space (network namespace), have the same hostname (UTS namespace), and can communicate using native interprocess communication channels over System V IPC or POSIX message queues (IPC namespace).

However, applications in different Pods are isolated from each other (since they don't share namespaces); they have different IP addresses, different hostnames, and more. Containers in different Pods running on the same node might as well be on different servers.

Before putting your containers in same Pod, think:

- Do they have a truly symbiotic relationship?
  - As in, can they work if they are on different machines?
- Do you want to scale them together?
  - As in, it doesn't make sense to scale the first container without also scaling the second.

In general, the right question to ask yourself when designing Pods is, “Will these containers work correctly if they land on different machines?” If the answer is “no,” a Pod is the correct grouping for the containers. If the answer is “yes,” multiple Pods is probably the correct solution.

In the web server and Git example, the two containers interact via a local filesystem. It would be impossible for them to operate correctly if the containers were scheduled on different machines.

Pods are described in a Pod manifest. The Pod manifest is just a text-file representation of the Pod Kubernetes API object.

Declarative configuration in Kubernetes is the basis for all of the self-healing behaviors in Kubernetes that keep applications running without user action.

The Kubernetes API server accepts and processes Pod manifests before storing them in persistent storage (etcd). The scheduler also uses the Kubernetes API to find Pods that haven’t been scheduled to a node. Once scheduled to a node, Pods don’t move and must be explicitly destroyed and rescheduled.

The simplest way to create a Pod is via the imperative kubectl run command.

Eg: $ kubectl run kuard --image=gcr.io/kuar-demo/kuard-amd64:1

Alternatively, describe the Pod in a manifest file, kuard-pod.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kuard
spec:
  containers:
    - image: gcr.io/kuar-demo/kuard-amd64:1
      name: kuard
      ports:
        - containerPort: 8080
          name: http
          protocol: TCP
```

This is equivalent to:

$ docker run -d --name kuard --publish 8080:8080 gcr.io/kuar-demo/kuard-amd64:1

The Pod manifest will be submitted to the Kubernetes API server (e.g., with $ kubectl apply -f kuard-pod.yaml). The Kubernetes system will then schedule that Pod to run on a healthy node in the cluster, where it will be monitored by the kubelet daemon process.
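Since the API server accepts objects as JSON as well as YAML, the same kuard manifest can also be produced programmatically. This Python sketch builds it as a dict and serializes it to JSON. The helper name to_json is hypothetical.

```python
import json

# The kuard Pod manifest, expressed as a Python dict (same fields as the YAML version).
POD_MANIFEST = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "kuard"},
    "spec": {
        "containers": [
            {
                "image": "gcr.io/kuar-demo/kuard-amd64:1",
                "name": "kuard",
                "ports": [{"containerPort": 8080, "name": "http", "protocol": "TCP"}],
            }
        ]
    },
}


def to_json(manifest: dict) -> str:
    """Serialize a manifest; JSON and YAML describe the same API object."""
    return json.dumps(manifest, indent=2)


print(to_json(POD_MANIFEST))
```

You could save the output as kuard-pod.json (a hypothetical filename) and submit it the same way: $ kubectl apply -f kuard-pod.json.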

Get running pods: $ kubectl get pods

Get more info: $ kubectl describe pods kuard

Deleting a pod: $ kubectl delete pods/kuard, or via the manifest file: $ kubectl delete -f kuard-pod.yaml

When a Pod is deleted, it is not immediately killed. Instead, if you run kubectl get pods you will see that the Pod is in the Terminating state. All Pods have a termination grace period. By default, this is 30 seconds. When a Pod is transitioned to Terminating it no longer receives new requests. In a serving scenario, the grace period is important for reliability because it allows the Pod to finish any active requests that it may be in the middle of processing before it is terminated. It’s important to note that when you delete a Pod, any data stored in the containers associated with that Pod will be deleted as well. If you want to persist data across multiple instances of a Pod, you need to use PersistentVolumes.
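Using the grace period well is up to the application. When a Pod is deleted, the kubelet sends the container's main process a SIGTERM. If the process is still running when the grace period ends, it is killed with SIGKILL. Below is a minimal Python sketch of an application that treats SIGTERM as "stop taking new work" instead of exiting on the spot. The drain loop and request names are hypothetical stand-ins for a real server.

```python
import signal
import threading

# Set once the kubelet asks the container to stop (SIGTERM at the start of the grace period).
stop_requested = threading.Event()


def on_sigterm(signum, frame):
    # Don't exit immediately: just stop taking new work, so anything in flight can
    # finish before the grace period (30 s by default) expires and SIGKILL follows.
    stop_requested.set()


signal.signal(signal.SIGTERM, on_sigterm)


def drain(queue):
    """Handle queued requests, refusing new ones once a stop has been requested (sketch)."""
    handled = []
    for request in queue:
        if stop_requested.is_set():
            break
        handled.append(request)
    return handled


print(drain(["req-1", "req-2"]))     # ['req-1', 'req-2']: no SIGTERM yet
signal.raise_signal(signal.SIGTERM)  # simulate the kubelet's SIGTERM
print(drain(["req-3"]))              # []: new work is refused while shutting down
```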

Data stored in the Pod's volumes is not deleted when a container in the Pod is restarted; it is deleted only along with the Pod itself.

You can port-forward directly to localhost using kubectl: $ kubectl port-forward kuard 8080:8080

On running this 🔝, a secure tunnel is created from your local machine, through the Kubernetes master, to the instance of the Pod running on one of the worker nodes.

You can run commands on the pod too:

$ kubectl exec kuard -- date

or even an interactive one:

$ kubectl exec -it kuard -- bash

Copying files to and fro is easy:

$ kubectl cp <pod-name>:/captures/capture3.txt ./capture3.txt

Generally speaking, copying files into a container is an antipattern. You really should treat the contents of a container as immutable.

When you run your application as a container in Kubernetes, it is automatically kept alive for you using a process health check. This health check simply ensures that the main process of your application is always running. If it isn’t, Kubernetes restarts it.

However, in most cases, a simple process check is insufficient. For example, if your process has deadlocked and is unable to serve requests, a process health check will still believe that your application is healthy since its process is still running. To address this, Kubernetes introduced health checks for application liveness. Liveness health checks run application-specific logic (e.g., loading a web page) to verify that the application is not just still running, but is functioning properly. Since these liveness health checks are application-specific, you have to define them in your Pod manifest.

Liveness probes are defined per container, which means each container inside a Pod is health-checked separately.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kuard
spec:
  containers:
    - image: gcr.io/kuar-demo/kuard-amd64:1
      name: kuard
      ports:
        - containerPort: 8080
          name: http
          protocol: TCP
      livenessProbe:
        httpGet:
          path: /healthy
          port: 8080
        initialDelaySeconds: 5
        timeoutSeconds: 1
        periodSeconds: 10
        failureThreshold: 3
```

If the probe fails failureThreshold times in a row (3 here), the container is restarted. Details of the restart can be found with kubectl describe pods kuard. The “Events” section will have text similar to the following:

Killing container with id docker://2ac946…:pod “kuard_default(9ee84…)” container “kuard” is unhealthy, it will be killed and re-created.
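A single failed probe does not trigger a restart. The kubelet counts consecutive failures and restarts the container only once failureThreshold is reached; any success resets the count. Below is a simplified Python model of that rule, using the values from the manifest above. It assumes probe i runs exactly at initialDelaySeconds + i × periodSeconds. This timing model is a simplification, so read the returned seconds as approximate. The function name is hypothetical.

```python
def restart_time(results, initial_delay=5, period=10, failure_threshold=3):
    """Return the second (after container start) at which the container would be
    restarted, or None. results[i] is the outcome of the i-th probe; timeouts
    count as failures. Simplified model: probe i runs at initial_delay + i * period."""
    consecutive_failures = 0
    for i, ok in enumerate(results):
        consecutive_failures = 0 if ok else consecutive_failures + 1
        if consecutive_failures >= failure_threshold:
            return initial_delay + i * period
    return None


# /healthy starts failing after the first two probes succeed.
print(restart_time([True, True, False, False, False]))   # 45
print(restart_time([False, False, True, False, False]))  # None: the success reset the count
```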

Kubernetes makes a distinction between liveness and readiness. Liveness determines if an application is running properly. Containers that fail liveness checks are restarted. Readiness describes when a container is ready to serve user requests. Containers that fail readiness checks are removed from service load balancers. Readiness probes are configured similarly to liveness probes.
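The practical difference is in what happens on failure. A failed liveness check leads to a restart. A failed readiness check only takes the Pod out of rotation. This Python sketch shows the readiness side: a Service load-balances only across Pods whose readiness check currently passes. The class, Pod names and IPs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PodStatus:
    name: str
    ip: str
    ready: bool  # latest readiness probe result


def service_backends(pods):
    """IPs a Service would send traffic to: only Pods that are currently ready."""
    return [p.ip for p in pods if p.ready]


pods = [
    PodStatus("kuard-a", "10.0.0.11", ready=True),
    PodStatus("kuard-b", "10.0.0.12", ready=False),  # alive (not restarted) but not ready yet
]
print(service_backends(pods))  # ['10.0.0.11']
```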

Besides httpGet, Kubernetes supports other kinds of health checks:

- tcpSocket
  - If the TCP connection succeeds, the probe is considered healthy. This is for non-HTTP applications, like databases.
- exec
  - These execute a script or program in the context of the container. Following typical convention, if this script returns a zero exit code, the probe succeeds; otherwise, it fails. exec scripts are often useful for custom application validation logic that doesn't fit neatly into an HTTP call.
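The two probe types can be mimicked outside Kubernetes, which is handy for checking what a probe would report. In this Python sketch, "healthy" means a TCP connection opens within the timeout, or a command exits with status 0. The function names are hypothetical. The 1-second default mirrors Kubernetes' default timeoutSeconds, and this is not how the kubelet itself is implemented.

```python
import socket
import subprocess
import sys


def tcp_probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """Healthy if a TCP connection can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def exec_probe(command: list[str], timeout: float = 1.0) -> bool:
    """Healthy if the command exits with status 0 before the timeout."""
    try:
        return subprocess.run(command, timeout=timeout).returncode == 0
    except (OSError, subprocess.TimeoutExpired):
        return False


# Usage: probe a throwaway local listener and two tiny Python commands.
listener = socket.socket()
listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
listener.listen()
print(tcp_probe("127.0.0.1", listener.getsockname()[1]))                     # True
print(exec_probe([sys.executable, "-c", "pass"], timeout=10))                 # True
print(exec_probe([sys.executable, "-c", "raise SystemExit(1)"], timeout=10))  # False
listener.close()
```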

Kubernetes allows users to specify two different resource metrics. Resource requests specify the minimum amount of a resource required to run the application. Resource limits specify the maximum amount of a resource that an application can consume.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kuard
spec:
  containers:
    - image: gcr.io/kuar-demo/kuard-amd64:1
      name: kuard
      resources:
        requests:
          cpu: "500m"
          memory: "128Mi"
      ports:
        - containerPort: 8080
          name: http
          protocol: TCP
```

Here 🔝 we requested a machine with at least half a CPU (500m, i.e. 500 millicores) free and 128 MiB (mebibytes) of memory.
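The quantity strings are easy to misread. "m" means thousandths of a core, so 500m is half a core. "Mi" is a power-of-two unit (2^20 bytes), while "M" is decimal (10^6 bytes). This Python sketch converts both kinds. It handles only whole-number memory values and a subset of the suffixes Kubernetes accepts, and the function names are hypothetical.

```python
def cpu_millicores(quantity: str) -> int:
    """'500m' -> 500, '2' -> 2000, '0.5' -> 500."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


# A subset of the memory suffixes Kubernetes accepts.
MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30,  # binary (power-of-two) units
    "k": 10**3, "M": 10**6, "G": 10**9,     # decimal units
}


def memory_bytes(quantity: str) -> int:
    """'128Mi' -> 134217728, '128M' -> 128000000, '1024' -> 1024 (whole numbers only)."""
    for suffix in sorted(MEMORY_SUFFIXES, key=len, reverse=True):  # try 'Mi' before 'M'
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * MEMORY_SUFFIXES[suffix]
    return int(quantity)


print(cpu_millicores("500m"))  # 500
print(memory_bytes("128Mi"))   # 134217728
print(memory_bytes("128M"))    # 128000000
```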

A Pod is guaranteed to have at least the requested resources when running on the node. Importantly, a “request” specifies a minimum; it does not cap the resources a Pod may use.

Imagine that we have a container whose code attempts to use all available CPU cores. Suppose that we create a Pod with this container that requests 0.5 CPU.

Kubernetes schedules this Pod onto a machine with a total of 2 CPU cores. As long as it is the only Pod on the machine, it will consume all 2.0 of the available cores, despite only requesting 0.5 CPU.

If a second Pod with the same container and the same request of 0.5 CPU lands on the machine, then each Pod will receive 1.0 cores.
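The arithmetic behind this example follows from how CPU requests are enforced. Requests become CPU shares (weights) for each container's cgroup. When Pods compete, the kernel splits CPU time in proportion to those weights, and when a node is otherwise idle, a single Pod may use everything. This Python sketch reproduces the numbers above. It assumes every Pod is CPU-bound and has no limit, and it ignores system overhead. The function name is hypothetical.

```python
def cpu_split(node_cores: float, requests: dict[str, float]) -> dict[str, float]:
    """Divide a node's cores among CPU-bound Pods in proportion to their requests.

    Simplified model of proportional CPU shares: no limits, no system overhead,
    and every Pod tries to use all available cores.
    """
    total = sum(requests.values())
    return {pod: node_cores * req / total for pod, req in requests.items()}


print(cpu_split(2.0, {"pod-a": 0.5}))                # {'pod-a': 2.0}
print(cpu_split(2.0, {"pod-a": 0.5, "pod-b": 0.5}))  # {'pod-a': 1.0, 'pod-b': 1.0}
print(cpu_split(2.0, {"pod-a": 0.5, "pod-b": 1.5}))  # {'pod-a': 0.5, 'pod-b': 1.5}
```

The last line shows the same rule with unequal requests. A Pod that requests three times as much CPU gets three times the share under contention.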