Good Questions Help Zero-Shot Image Reasoning

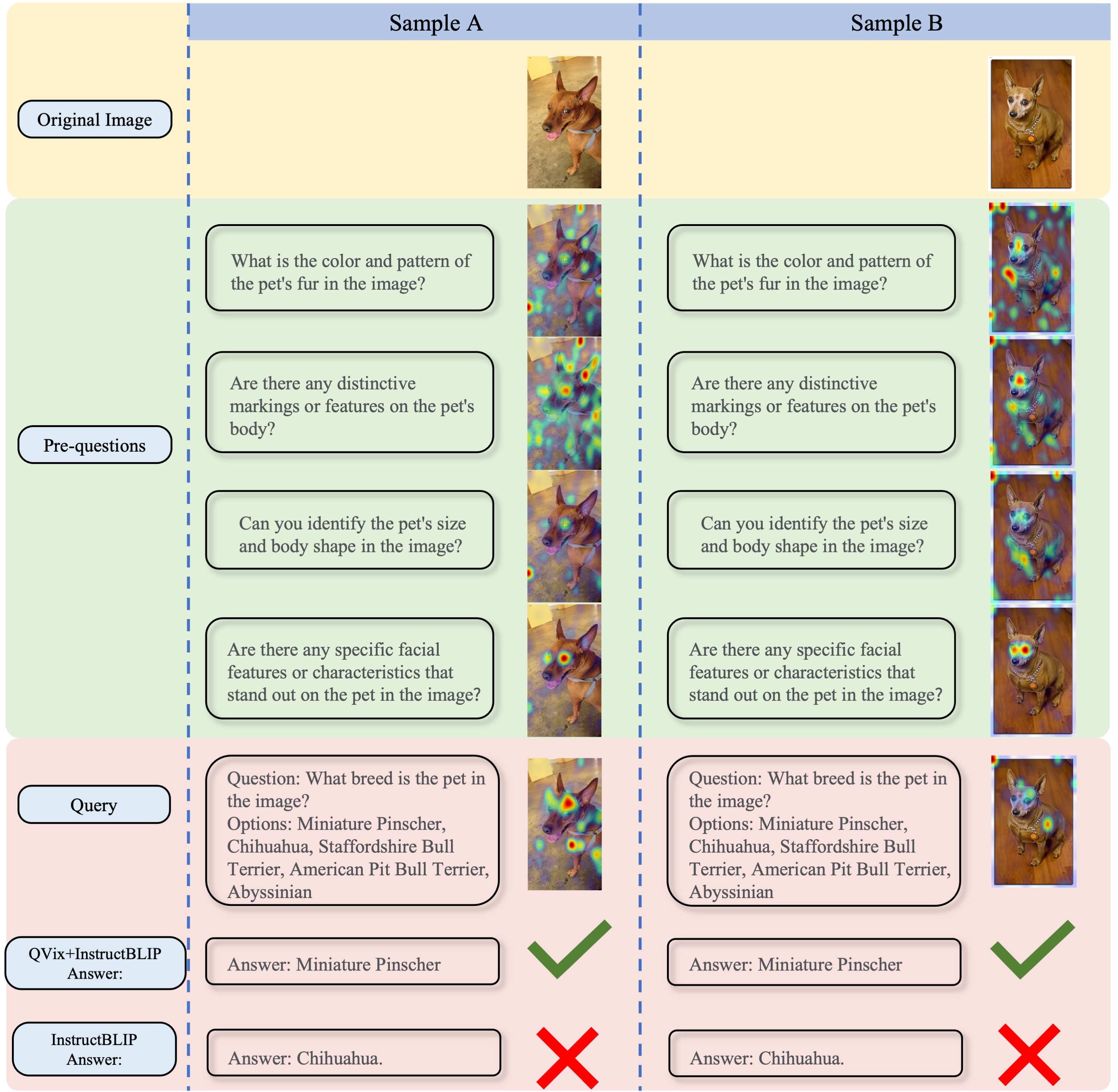

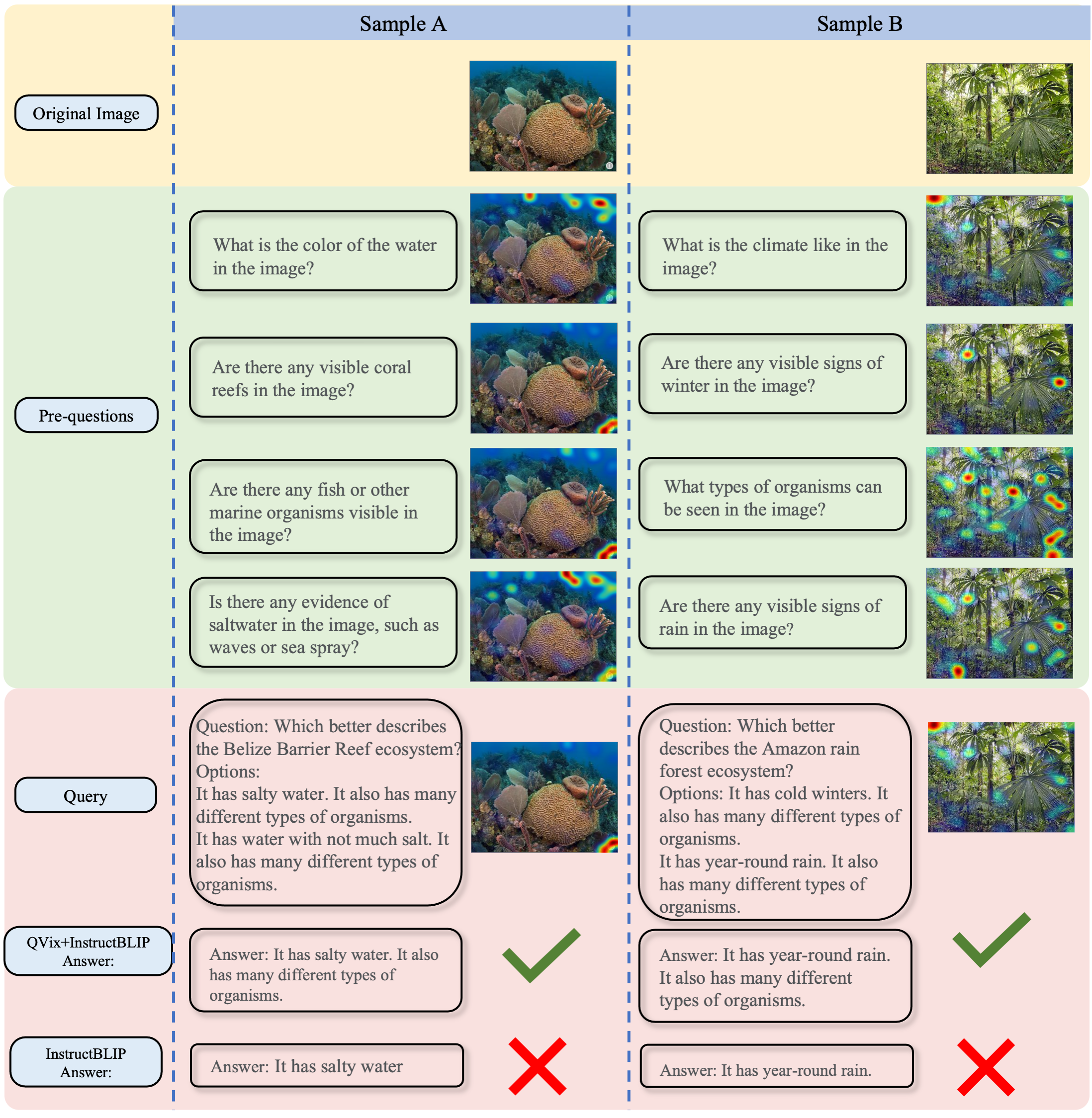

QVix leverages LLMs' strong language prior to generate input-exploratory questions with more details than the original query, guiding LVLMs to explore visual content more comprehensively and uncover subtle or peripheral details. QVix enables a wider exploration of visual scenes, improving the LVLMs’ reasoning accuracy and depth in tasks such as visual question answering and visual entailment.

QVix can utilize detailed information to better distinguish between options that are easily confused, and achieve a more comprehensive and systematic understanding of images through contextual information.
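The flow described above can be sketched as a two-stage prompt: a language model first expands the query into exploratory questions, and the vision-language model then answers the original query with those questions as guidance. This is only a minimal illustration, not the repository's implementation. The callables `llm` and `lvlm`, the function names, and the prompt wording are all assumptions made for this example.

```python
from typing import Any, Callable, List


def generate_questions(llm: Callable[[str], str], query: str, num_questions: int = 3) -> List[str]:
    """Ask a text-only LLM for exploratory questions about the image (hypothetical prompt)."""
    prompt = (
        f"Here is a question about an image: {query}\n"
        f"Write {num_questions} additional questions that explore the image "
        "content in more detail, one per line."
    )
    reply = llm(prompt)
    # Keep non-empty lines only, and cap the list at the requested size.
    questions = [line.strip() for line in reply.splitlines() if line.strip()]
    return questions[:num_questions]


def answer_with_exploration(
    llm: Callable[[str], str],
    lvlm: Callable[[Any, str], str],
    image: Any,
    query: str,
    num_questions: int = 3,
) -> str:
    """Prepend the generated questions to the original query before calling the LVLM."""
    questions = generate_questions(llm, query, num_questions)
    context = "\n".join(f"- {q}" for q in questions)
    prompt = (
        "Consider these questions while examining the image:\n"
        f"{context}\n"
        f"Now answer the main question: {query}"
    )
    return lvlm(image, prompt)
```

Here `llm` is assumed to map a prompt string to a reply string, and `lvlm` to map an image plus a prompt to an answer, so any model wrapper with those shapes could be plugged in.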

1. Installation

Clone our repository and create a conda environment:

git clone https://github.com/kai-wen-yang/QVix.git

cd QVix

conda create -n QVix python=3.8

conda activate QVix

pip install -r requirement.txt

Add the directory to PYTHONPATH:

cd QVix/models

export PYTHONPATH="$PYTHONPATH:$PWD"

2. Prepare dataset

Replace the variable DATA_DIR in task_datasets/__init__.py with the directory where you save the datasets.
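As a rough illustration, the edited assignment might look like the sketch below. The path is a placeholder, and the `dataset_path` helper is hypothetical: it is not part of the repository and only shows one way a per-dataset sub-directory could be derived from DATA_DIR.

```python
import os

# Placeholder location; replace it with the directory where you save the datasets.
DATA_DIR = os.path.expanduser("~/datasets/qvix")


def dataset_path(name, root=DATA_DIR):
    """Return the sub-directory for one dataset under the data root (illustrative helper)."""
    return os.path.join(root, name)
```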

ScienceQA: when the ScienceQA dataset is initialized, the Python script downloads the test split of ScienceQA directly from Hugging Face and then saves the samples that come with an image in that directory.
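The filtering step can be pictured with the sketch below, which keeps only samples that carry an image and writes each image to disk. It is an assumption-laden illustration rather than the repository's code: the function name, the `image` field, and the `<index>.png` file naming are all hypothetical, and the samples are assumed to be dictionaries whose image objects expose a `save(path)` method, as PIL images do.

```python
import os


def save_samples_with_images(samples, out_dir):
    """Save the image of every sample that has one; skip samples without an image."""
    os.makedirs(out_dir, exist_ok=True)
    kept = []
    for index, sample in enumerate(samples):
        image = sample.get("image")
        if image is None:
            # Text-only sample: nothing to save, so it is left out.
            continue
        path = os.path.join(out_dir, f"{index}.png")
        image.save(path)
        # Store the file path in place of the in-memory image object.
        kept.append({**sample, "image": path})
    return kept
```

The returned list holds the retained samples with the image replaced by its saved path, which keeps later loading independent of the original download.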