by Michael Tschannen, Cian Eastwood, Fabian Mentzer [arxiv] [colab]

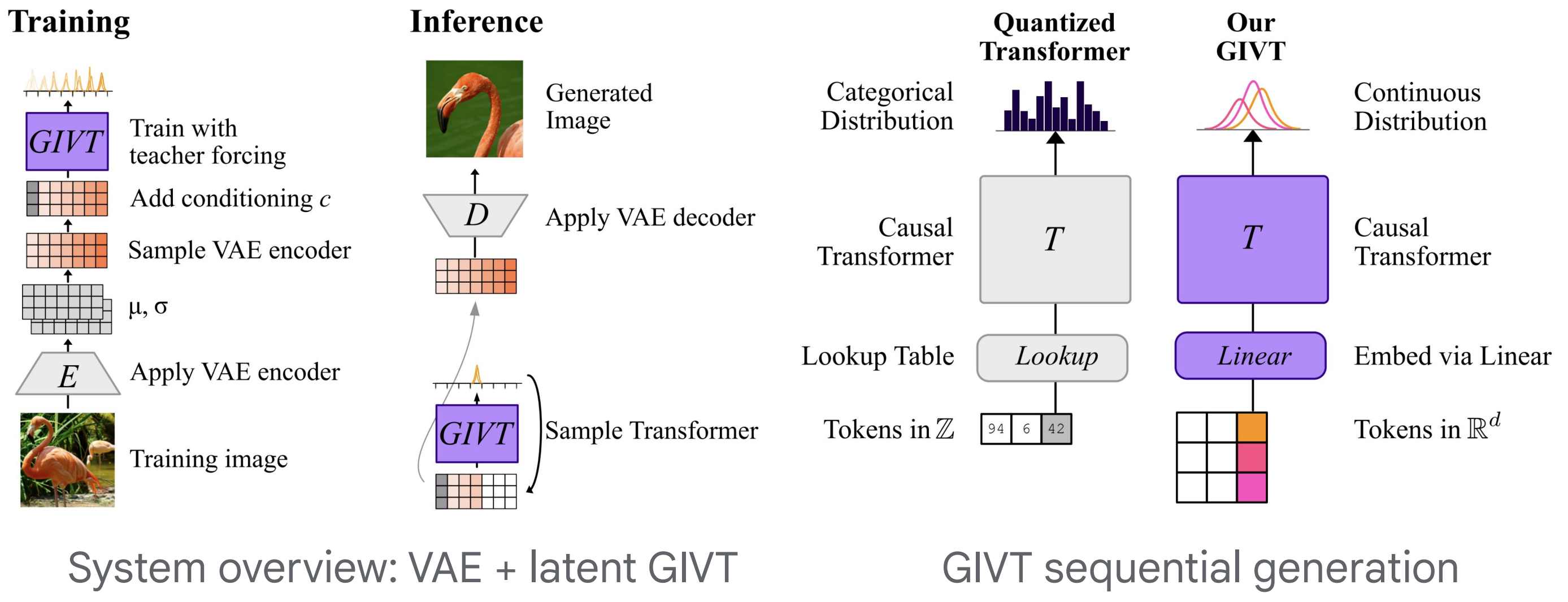

We introduce generative infinite-vocabulary transformers (GIVT) which generate vector sequences with real-valued entries, instead of discrete tokens from a finite vocabulary. To this end, we propose two surprisingly simple modifications to decoder-only transformers: 1) at the input, we replace the finite-vocabulary lookup table with a linear projection of the input vectors; and 2) at the output, we replace the logits prediction (usually mapped to a categorical distribution) with the parameters of a multivariate Gaussian mixture model. Inspired by the image-generation paradigm of VQ-GAN and MaskGIT, where transformers are used to model the discrete latent sequences of a VQ-VAE, we use GIVT to model the unquantized real-valued latent sequences of a β-VAE. In class-conditional image generation, GIVT outperforms VQ-GAN (and improved variants thereof) as well as MaskGIT, and achieves performance competitive with recent latent diffusion models. Finally, we obtain strong results outside of image generation when applying GIVT to panoptic segmentation and depth estimation with a VAE variant of the UViM framework.
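The two modifications can be illustrated with a minimal NumPy sketch. It assumes diagonal covariances for the mixture components, uses random weights in place of trained parameters, and omits the transformer layers entirely. The names `givt_io_sketch` and `gmm_nll` are hypothetical and do not correspond to the big_vision implementation.

```python
import numpy as np


def _logsumexp(a, axis=-1, keepdims=False):
    """Numerically stable log(sum(exp(a))) along `axis`."""
    m = np.max(a, axis=axis, keepdims=True)
    out = m + np.log(np.sum(np.exp(a - m), axis=axis, keepdims=True))
    return out if keepdims else np.squeeze(out, axis=axis)


def givt_io_sketch(z, d_model=64, n_mix=4, seed=0):
    """Map real-valued latents z of shape (seq_len, d) to diagonal-GMM parameters.

    Random weights stand in for trained parameters, and the transformer
    layers between the input and output projections are omitted.
    """
    rng = np.random.default_rng(seed)
    seq_len, d = z.shape
    # 1) Input: a linear projection replaces the vocabulary lookup table.
    w_in = rng.normal(scale=d ** -0.5, size=(d, d_model))
    h = z @ w_in  # (seq_len, d_model)
    # ... decoder-only transformer layers would process h here ...
    # 2) Output: predict mixture logits, means and log-scales instead of
    #    logits over a finite vocabulary.
    n_out = n_mix + 2 * n_mix * d
    w_out = rng.normal(scale=d_model ** -0.5, size=(d_model, n_out))
    out = h @ w_out
    mix_logits = out[:, :n_mix]
    means = out[:, n_mix:n_mix + n_mix * d].reshape(seq_len, n_mix, d)
    log_scales = out[:, n_mix + n_mix * d:].reshape(seq_len, n_mix, d)
    return mix_logits, means, log_scales


def gmm_nll(x, mix_logits, means, log_scales):
    """Negative log-likelihood of x (n, d) under per-row diagonal GMMs."""
    diff = (x[:, None, :] - means) / np.exp(log_scales)  # (n, n_mix, d)
    # Log-density of each diagonal Gaussian component, summed over dimensions.
    comp = -0.5 * np.sum(diff ** 2 + 2 * log_scales + np.log(2 * np.pi), axis=-1)
    log_w = mix_logits - _logsumexp(mix_logits, axis=-1, keepdims=True)
    return -_logsumexp(log_w + comp, axis=-1)


# Usage: position i predicts vector i + 1, as in next-token prediction.
z = np.random.default_rng(1).normal(size=(8, 16))
mix_logits, means, log_scales = givt_io_sketch(z, d_model=32, n_mix=4)
loss = gmm_nll(z[1:], mix_logits[:-1], means[:-1], log_scales[:-1]).mean()
```

In this sketch, training would minimize the mixture negative log-likelihood in place of the usual cross-entropy over a finite vocabulary.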

We provide model checkpoints for a subset of the models from the paper. These are meant as small-scale baselines for researchers interested in exploring GIVT, and are not optimized to provide the best possible visual quality (e.g. scaling the model size can substantially improve visual quality as shown in the paper). See below for instructions to train your own models.

ImageNet 2012 VAEs

| β | 1e-5 | 2.5e-5 | 5e-5 | 1e-4 | 2e-4 |
|---|---|---|---|---|---|
| checkpoint | link | link | link | link | link |
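β weights the KL term of the β-VAE objective: larger values pull the posterior more strongly toward the standard normal prior, typically at the cost of reconstruction quality. The sketch below shows the objective with a plain MSE reconstruction term only; it omits any additional loss terms the actual training recipe may use, and `beta_vae_loss` is a hypothetical name.

```python
import numpy as np


def beta_vae_loss(x, x_rec, mu, logvar, beta):
    """Per-example beta-VAE loss: MSE reconstruction + beta * KL(q(z|x) || N(0, I)).

    x, x_rec: (batch, ...) inputs and reconstructions.
    mu, logvar: (batch, latent_dim) diagonal Gaussian posterior parameters.
    """
    batch = x.shape[0]
    rec = np.mean((x - x_rec).reshape(batch, -1) ** 2, axis=-1)
    # Closed-form KL between N(mu, exp(logvar)) and N(0, 1), summed over latents.
    kl = 0.5 * np.sum(np.exp(logvar) + mu ** 2 - 1.0 - logvar, axis=-1)
    return rec + beta * kl, rec, kl


# Usage with random stand-in data, sweeping the beta values from the table.
rng = np.random.default_rng(0)
x = rng.standard_normal((2, 8, 8, 3))
mu, logvar = rng.standard_normal((2, 16)), np.zeros((2, 16))
for beta in (1e-5, 2.5e-5, 5e-5, 1e-4, 2e-4):
    total, rec, kl = beta_vae_loss(x, 0.9 * x, mu, logvar, beta)
```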

Class-conditional ImageNet 2012 generative models

| model | resolution | β | inference | FID | checkpoint |
|---|---|---|---|---|---|
| GIVT-Causal | 256 x 256 | 5e-5 | t=0.95, DB-CFG=0.4 | 3.35 | link |
| GIVT-MaskGIT | 256 x 256 | 5e-5 | t_C=35, DB-CFG=0.1 | 4.53 | link |
| GIVT-MaskGIT | 512 x 512 | 5e-5 | t_C=140 | 4.86 | link |
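The inference column lists sampling settings. The sketch below assumes that `t` acts as a temperature on the predicted Gaussian mixture by scaling each component's standard deviation; this is one common convention and may not match the paper's exact definition. It does not cover DB-CFG or `t_C`, and `sample_gmm` is a hypothetical helper.

```python
import numpy as np


def sample_gmm(mix_logits, means, scales, temperature=1.0, rng=None):
    """Draw one vector from a diagonal Gaussian mixture.

    mix_logits: (n_mix,); means, scales: (n_mix, d).
    Assumption: `temperature` multiplies every component's standard deviation.
    """
    rng = np.random.default_rng() if rng is None else rng
    weights = np.exp(mix_logits - np.max(mix_logits))
    weights /= weights.sum()
    k = rng.choice(len(weights), p=weights)  # pick a mixture component
    noise = rng.standard_normal(means.shape[1])
    return means[k] + temperature * scales[k] * noise


# Usage: lower temperatures concentrate samples around the component means.
rng = np.random.default_rng(0)
mix_logits = np.array([0.0, 1.0])
means = np.array([[-2.0, 0.0], [2.0, 0.0]])
scales = np.ones((2, 2))
samples = np.stack([sample_gmm(mix_logits, means, scales, 0.95, rng) for _ in range(1000)])
```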

UViM

| task | model | dataset | metric | checkpoint |
|---|---|---|---|---|
| Panoptic segmentation | VAE (stage 1) | COCO (2017) | 71.0 (PQ) | link |
| Panoptic segmentation | GIVT (stage 2) | COCO (2017) | 40.2 (PQ) | link |
| Depth estimation | VAE (stage 1) | NYU Depth v2 | 0.195 (RMSE) | link |
| Depth estimation | GIVT (stage 2) | NYU Depth v2 | 0.474 (RMSE) | link |
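Panoptic segmentation is reported as panoptic quality (PQ, higher is better) and depth estimation as root-mean-square error (RMSE, lower is better). A minimal RMSE sketch follows; the valid-pixel masking rule is an assumption, and evaluation protocols differ in details such as cropping and per-image versus pooled averaging, so this is not necessarily the protocol behind the numbers above.

```python
import numpy as np


def depth_rmse(pred, gt, valid_mask=None):
    """Root-mean-square error between predicted and ground-truth depth (lower is better)."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    if valid_mask is None:
        # Assumption: non-positive ground-truth values mark pixels without depth.
        valid_mask = gt > 0
    diff = (pred - gt)[valid_mask]
    return float(np.sqrt(np.mean(diff ** 2)))


print(depth_rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))  # sqrt(4 / 3) ≈ 1.1547
```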

This directory contains configs to train GIVT models as well as VAEs (for the UViM variants). For training the ImageNet 2012 VAE models, we used a modified version of the MaskGIT code.

The big_vision input pipeline relies on TensorFlow Datasets (TFDS), which supports some datasets out of the box, whereas others require manual download of the data (for example ImageNet and COCO (2017); see the big_vision main README and the UViM README, respectively, for details).

After setting up big_vision as described in the main README, training can be launched locally as follows:

```
python -m big_vision.trainers.proj.givt.generative \
  --config big_vision/configs/proj/givt/givt_imagenet2012.py \
  --workdir gs://$GS_BUCKET_NAME/big_vision/`date '+%m-%d_%H%M'`
```

Add the suffix `:key1=value1,key2=value2,...` to the config path in the launch command to modify the config with predefined arguments (see the config file for details). For example: `--config big_vision/configs/proj/givt/givt_imagenet2012.py:model_size=large`.
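Roughly, with `ml_collections` config flags the text after the colon is handed to the config file's `get_config` function, which interprets it. The following hypothetical helpers (`split_config_flag`, `apply_overrides`) only illustrate the `key=value` format and type casting against defaults; big_vision ships its own parsing logic, and the default values shown are made up for illustration.

```python
def split_config_flag(flag_value):
    """Split 'path/to/config.py:key1=v1,key2=v2' into (path, {key: raw string})."""
    path, _, arg = flag_value.partition(":")
    overrides = {}
    for item in filter(None, arg.split(",")):
        key, _, value = item.partition("=")
        overrides[key.strip()] = value.strip()
    return path, overrides


def apply_overrides(defaults, overrides):
    """Cast each override to its default's type; reject keys without a default."""
    merged = dict(defaults)
    for key, raw in overrides.items():
        if key not in defaults:
            raise KeyError(f"unknown config argument: {key!r}")
        default = defaults[key]
        if isinstance(default, bool):
            # bool("false") is True, so booleans need explicit parsing.
            merged[key] = raw.lower() in ("1", "true", "yes")
        else:
            merged[key] = type(default)(raw)
    return merged


path, overrides = split_config_flag(
    "big_vision/configs/proj/givt/givt_imagenet2012.py:model_size=large")
# Hypothetical defaults for illustration; see the actual config file for its arguments.
config_args = apply_overrides({"model_size": "base", "res": 256}, overrides)
```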

Note that `givt_imagenet2012.py` uses Imagenette so that the config is runnable without manually downloading ImageNet. This is only meant for testing, and the model will overfit immediately. Please download ImageNet to reproduce the paper results.

VAE training runs for the GIVT variant of UViM can be launched as follows:

```
python -m big_vision.trainers.proj.givt.vae \
  --config big_vision/configs/proj/givt/vae_nyu_depth.py \
  --workdir gs://$GS_BUCKET_NAME/big_vision/`date '+%m-%d_%H%M'`
```

Please refer to the main README for details on how to launch training on a (multi-host) TPU setup.

This is not an official Google Product.

```
@article{tschannen2023givt,
  title={GIVT: Generative Infinite-Vocabulary Transformers},
  author={Tschannen, Michael and Eastwood, Cian and Mentzer, Fabian},
  journal={arXiv:2312.02116},
  year={2023}
}
```