[ Paper ] [ Website ] [ Dataset (OpenDataLab) ] [ Dataset (Hugging Face) ]

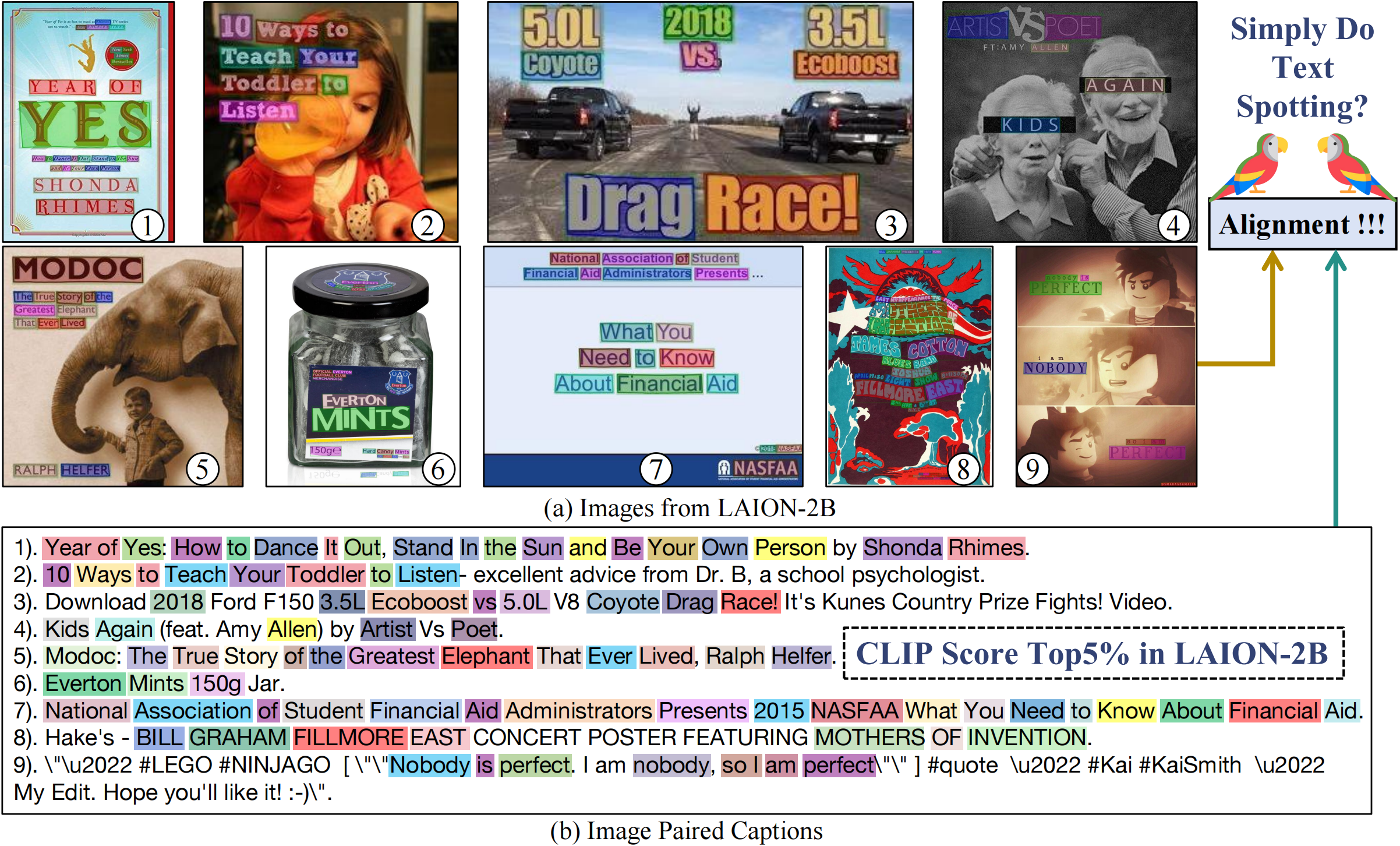

- Captions in LAION-2B are significantly biased towards describing the visual text embedded in the images.

- Released CLIP models show a strong text spotting bias in almost every style of web image, so CLIP-filtered datasets are inherently biased towards visual-text-dominant data.

- CLIP models easily learn text spotting capacity from parrot captions while failing to connect the vision-language semantics, just like a text spotting parrot.

- We provide an alternative solution by releasing a less biased, filtered 100M subset of LAION-2B and CLIP models pre-trained on it.

The K-means model was trained on CLIP ViT-B-32 features of the LAION-400M dataset using faiss. We first use PCA to reduce the feature dimension. The training and inference code is in kmeans.py.

| PCA weights | K-means centroids |
|---|---|
| Download | Download |
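The inference step described above (PCA projection followed by nearest-centroid lookup) can be sketched in plain Python. This is a minimal illustration, not the code in kmeans.py: the function names, the row-wise layout of the PCA matrix, and the toy values are all assumptions made for the example, and the released weights would normally be loaded and applied with faiss rather than with Python lists.

```python
def pca_project(feature, mean, components):
    """Center the feature, then project it onto each PCA component (one per row)."""
    centered = [x - m for x, m in zip(feature, mean)]
    return [sum(c * x for c, x in zip(row, centered)) for row in components]


def nearest_centroid(vector, centroids):
    """Return the index of the centroid with the smallest squared L2 distance."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(centroids)), key=lambda i: sq_dist(vector, centroids[i]))


def assign_cluster(feature, mean, components, centroids):
    """Reduce the feature with PCA, then assign it to its closest K-means centroid."""
    return nearest_centroid(pca_project(feature, mean, components), centroids)


# Toy stand-ins for the downloadable PCA weights and centroids (hypothetical values).
mean = [0.5, 0.5, 0.5]
components = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # projects 3-D features to 2-D
centroids = [[-0.5, -0.5], [0.5, 0.5]]

label = assign_cluster([1.0, 1.0, 0.2], mean, components, centroids)
```

The same two steps apply to real CLIP features; only the dimensions and the number of centroids change.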

We release the generation pipeline for the synthetic images (sys_benchmark.py and Arial.ttf) and the N-gram vocabularies we built from the dataset.

| LAION-2B Caption 1-gram | LAION-2B Caption 2-gram | LAION-2B Co-Emb Text 1-gram |
|---|---|---|
| Download | Download | Download |
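For readers unfamiliar with N-gram vocabularies, the sketch below counts 1-grams and 2-grams over a list of captions. It is only an illustration: lowercasing, whitespace tokenization, and the helper names are assumptions, and the released vocabulary files may have been built with different preprocessing.

```python
from collections import Counter


def tokenize(caption):
    """Lowercase and split on whitespace (an assumed, deliberately simple tokenizer)."""
    return caption.lower().split()


def ngram_counts(captions, n):
    """Count every contiguous n-token sequence across all captions."""
    counts = Counter()
    for caption in captions:
        tokens = tokenize(caption)
        for i in range(len(tokens) - n + 1):
            counts[" ".join(tokens[i:i + n])] += 1
    return counts


# Toy captions (hypothetical, not taken from LAION-2B).
captions = ["Red shoes on sale", "red shoes for women", "sale sign"]
unigrams = ngram_counts(captions, 1)
bigrams = ngram_counts(captions, 2)

# The most frequent entries form the vocabulary.
top_bigrams = bigrams.most_common(1)
```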

- Select all images without any embedded text, as detected by the text spotting model DeepSolo.

- Filter samples with CLIP score > 0.3 and aesthetics score > 4.5.

- Deduplicate using cluster labels based on CLIP feature similarity.

- The result is a LAION-2B subset of 107,166,507 (100M) samples.
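The filtering steps above can be sketched as follows. The thresholds 0.3 and 4.5 come from the list; everything else is an assumption made for illustration: the per-sample field names, the toy data, and in particular the deduplication rule, which here drops a sample when its cosine similarity to an already kept sample in the same cluster exceeds a hypothetical threshold of 0.95.

```python
import math


def passes_filters(sample, clip_threshold=0.3, aesthetic_threshold=4.5):
    """Keep text-free samples whose CLIP and aesthetics scores exceed the thresholds."""
    return (
        sample["num_text_instances"] == 0
        and sample["clip_score"] > clip_threshold
        and sample["aesthetic_score"] > aesthetic_threshold
    )


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def deduplicate(samples, sim_threshold=0.95):
    """Within each cluster, drop samples too similar to one already kept (assumed rule)."""
    kept_by_cluster, kept = {}, []
    for sample in samples:
        group = kept_by_cluster.setdefault(sample["cluster"], [])
        if any(cosine(sample["feature"], other["feature"]) > sim_threshold for other in group):
            continue
        group.append(sample)
        kept.append(sample)
    return kept


def make(sample_id, n_text, clip, aes, cluster, feature):
    """Build one toy sample record (hypothetical schema)."""
    return {"id": sample_id, "num_text_instances": n_text, "clip_score": clip,
            "aesthetic_score": aes, "cluster": cluster, "feature": feature}


samples = [
    make("a", 0, 0.35, 5.0, 0, [1.0, 0.0]),
    make("b", 0, 0.36, 5.2, 0, [1.0, 0.01]),  # near-duplicate of "a"
    make("c", 2, 0.40, 6.0, 1, [0.0, 1.0]),   # contains embedded text
    make("d", 0, 0.25, 5.0, 1, [0.0, 1.0]),   # CLIP score too low
    make("e", 0, 0.32, 4.0, 1, [0.0, 1.0]),   # aesthetics score too low
    make("f", 0, 0.31, 4.6, 1, [0.0, 1.0]),
]

subset = deduplicate([s for s in samples if passes_filters(s)])
kept_ids = [s["id"] for s in subset]
```

Restricting comparisons to samples that share a cluster label keeps the number of pairwise checks manageable at LAION scale, which is one plausible reason to deduplicate by cluster.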

Our training code is based on OpenCLIP, with the following settings:

- batch size 32k

- lr 5e-4

- epochs 32

- local loss

- precision amp
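As a rough guide, the settings above map onto OpenCLIP training flags as sketched below. OpenCLIP's `--batch-size` is a per-GPU value, so the global batch size has to be divided by the number of GPUs. Reading "32k" as 32768 and using 64 GPUs are both assumptions for this example, and the helper `build_openclip_args` is hypothetical; model, data, and distributed-launch arguments are omitted because the list does not specify them.

```python
GLOBAL_BATCH_SIZE = 32 * 1024  # assumption: "32k" read as 32768
NUM_GPUS = 64                  # hypothetical cluster size


def build_openclip_args(global_batch_size, num_gpus):
    """Translate the listed hyperparameters into OpenCLIP command-line flags."""
    if global_batch_size % num_gpus != 0:
        raise ValueError("global batch size must be divisible by the number of GPUs")
    per_gpu_batch = global_batch_size // num_gpus
    return [
        "--batch-size", str(per_gpu_batch),  # per-GPU batch size
        "--lr", "5e-4",
        "--epochs", "32",
        "--local-loss",
        "--precision", "amp",
    ]


args = build_openclip_args(GLOBAL_BATCH_SIZE, NUM_GPUS)
print(" ".join(args))
```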

Note that the OCR model is not perfect, so the images in our filtered subset still contain some text content. Therefore, we also evaluate our trained model on the synthetic image benchmark.

| 100M subset | ViT-B Models |
|---|---|
| Download | Download |

| 1-gram Synthetic Benchmark | Ours (100M) | CLIP (WIT-400M) | OpenCLIP (LAION-2B) | DC medium 128M (DC) | DC large 1.28B (DC) |
|---|---|---|---|---|---|
| Sync. Score (mean) | 0.163 | 0.317 | 0.368 | 0.268 | 0.338 |
| Sync. Score (std) | 0.659 | 0.305 | 0.427 | 0.247 | 0.341 |

| DataComp benchmark | Ours (100M) | CLIP (WIT-400M) | OpenCLIP (LAION-2B) | DC medium 128M (DC) | DC large 1.28B (DC) |
|---|---|---|---|---|---|
| ImageNet | 0.526 | 0.633 | 0.666 | 0.176 | 0.459 |
| ImageNet dist. shifts | 0.404 | 0.485 | 0.522 | 0.152 | 0.378 |
| VTAB | 0.481 | 0.526 | 0.565 | 0.259 | 0.426 |
| Retrieval | 0.421 | 0.501 | 0.560 | 0.219 | 0.419 |
| Average | 0.443 | 0.525 | 0.565 | 0.258 | 0.437 |

Thanks to these great works:

- faiss: A library for efficient similarity search and clustering, used for building the K-means model.

- DeepSolo: A strong transformer-based text spotting model, used for profiling the LAION dataset.

- CLIP: Pre-trained CLIP models on WIT-400M.

- OpenCLIP: An open-source CLIP implementation with training code and models pre-trained on the LAION dataset.

- DataComp: A comprehensive evaluation benchmark for the downstream performance of CLIP models.

- Aesthetic Score Predictor: An aesthetic score predictor (how much people like an image on average) based on a simple neural net that takes CLIP embeddings as input.
