Post-Hoc Methods for Debiasing Neural Networks

Yash Savani, Colin White, Naveen Sundar Govindarajulu.

arXiv:2006.08564.

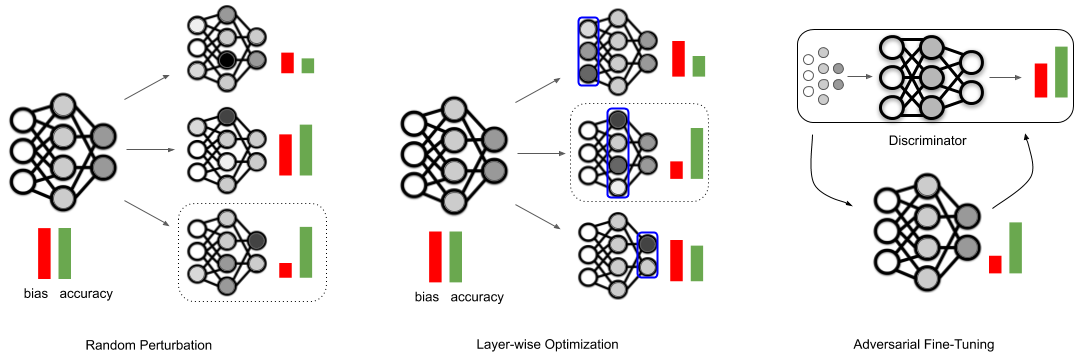

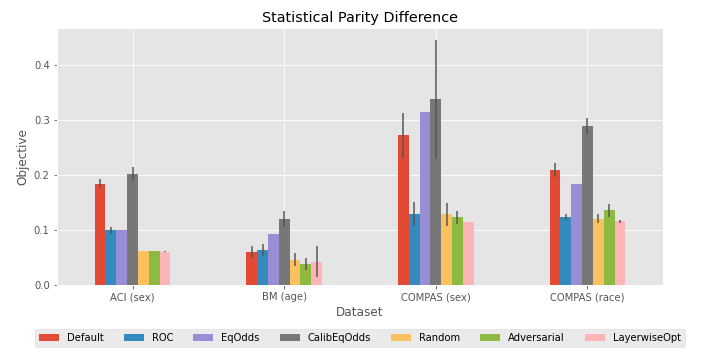

In this work, we introduce three new fine-tuning techniques to reduce bias in pretrained neural networks: random perturbation, layer-wise optimization, and adversarial fine-tuning. All three techniques work for any group fairness constraint. We include code that compares our three proposed methods with three popular post-processing methods, across three datasets provided by aif360, and three popular bias measures.

- pyyaml

- numpy

- torch

- aif360 == 0.3.0rc0

- sklearn

- numba

- jupyter

Install the requirements using

$ pip install -r requirements.txt

Create a config yaml file required to run the experiment by running

$ python create_configs.py <dataset> <bias measure> <protected variable> <number of replications>

For example:

$ python create_configs.py adult spd 1 10

where <dataset> is one of "adult" (ACI), "bank" (BM), or "compas" (COMPAS); <bias measure> is one of "spd" (statistical parity difference), "eod" (equal opportunity difference), or "aod" (average odds difference); <protected variable> is 1 or 2 (described below); and <number of replications> is the number of trials to run, which can be any positive integer. This will create a config directory <dataset>_<bias measure>_<protected variable> (for example adult_spd_1) containing all the corresponding config files for the experiment.
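For readers unfamiliar with the three bias measures, the following is a minimal pure-Python sketch of their standard definitions (unprivileged-group rate minus privileged-group rate). It is illustrative only and is not the code used by the experiments; the function names `group_rates` and `bias_measures`, the 0/1 group encoding, and the toy data are assumptions.

```python
def _rate(flags):
    """Fraction of True values in a list; 0.0 for an empty list."""
    return sum(flags) / len(flags) if flags else 0.0


def group_rates(y_true, y_pred, protected, group):
    """Return (positive rate, TPR, FPR) for rows where protected == group."""
    rows = [(t, p) for t, p, g in zip(y_true, y_pred, protected) if g == group]
    pos_rate = _rate([p == 1 for _, p in rows])
    tpr = _rate([p == 1 for t, p in rows if t == 1])
    fpr = _rate([p == 1 for t, p in rows if t == 0])
    return pos_rate, tpr, fpr


def bias_measures(y_true, y_pred, protected, privileged=1, unprivileged=0):
    """Compute spd, eod, and aod as unprivileged minus privileged differences."""
    pr_u, tpr_u, fpr_u = group_rates(y_true, y_pred, protected, unprivileged)
    pr_p, tpr_p, fpr_p = group_rates(y_true, y_pred, protected, privileged)
    return {
        "spd": pr_u - pr_p,                               # statistical parity difference
        "eod": tpr_u - tpr_p,                             # equal opportunity difference
        "aod": 0.5 * ((fpr_u - fpr_p) + (tpr_u - tpr_p)), # average odds difference
    }


# Toy data (made up): 1 = privileged group, 0 = unprivileged group.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
protected = [1, 1, 1, 1, 0, 0, 0, 0]
measures = bias_measures(y_true, y_pred, protected)
```

A value of 0 for any measure means the two groups are treated identically under that criterion; negative values indicate the unprivileged group receives favorable outcomes less often.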

A table describing the relationship between the protected variable index and dataset is given below.

| dataset | 1 | 2 |

|---|---|---|

| adult | sex | race |

| compas | sex | race |

| bank | age | race |
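The table and the directory naming rule can be captured in a few lines of Python. This is only a convenience sketch; `PROTECTED_VARIABLES` and `describe_experiment` are hypothetical names and are not part of the repository.

```python
# Protected variable index -> attribute name, copied from the table above.
PROTECTED_VARIABLES = {
    "adult": {1: "sex", 2: "race"},
    "compas": {1: "sex", 2: "race"},
    "bank": {1: "age", 2: "race"},
}


def describe_experiment(dataset, bias_measure, index):
    """Return (config directory name, protected attribute) for one experiment."""
    attribute = PROTECTED_VARIABLES[dataset][index]
    # Directory pattern: <dataset>_<bias measure>_<protected variable>
    config_dir = f"{dataset}_{bias_measure}_{index}"
    return config_dir, attribute


example = describe_experiment("adult", "spd", 1)
```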

To automatically create all 12 experiments used in the paper, run

$ bash create_all_configs.sh

Run all the experiments described by the config files in the config directory created above by running

$ python run_experiments.py <config directory>

For example:

$ python run_experiments.py adult_spd_1/

This will run a posthoc.py experiment for each config file in the config directory. All the biased, pretrained neural network models are saved in the models/ directory. All the results from the experiments are saved in the results/ directory in JSON format.
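Outside the notebook, the JSON output can be collected with the standard library. The sketch below assumes only that the results directory contains `.json` files; the schema of those files is not described here, so the demo uses a made-up placeholder file, and `load_results` is a hypothetical helper name.

```python
import json
import tempfile
from pathlib import Path


def load_results(results_dir):
    """Parse every *.json file directly inside results_dir, keyed by file stem."""
    results = {}
    for path in sorted(Path(results_dir).glob("*.json")):
        with path.open() as handle:
            results[path.stem] = json.load(handle)
    return results


# Demo on a throwaway directory with a made-up file; the real schema may differ.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "example_run.json").write_text(json.dumps({"placeholder": 1}))
    demo = load_results(tmp)
```

After running the experiments, `load_results("results/")` would return one parsed object per result file.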

posthoc.py includes benchmark code for the three post-processing debiasing techniques provided by the aif360 framework: reject option classification, equalized odds postprocessing, and calibrated equalized odds postprocessing. It also includes code for the random perturbation and adversarial fine-tuning algorithms. To get results for the layer-wise optimization technique, follow the instructions in the deco directory.
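To give a feel for the random perturbation idea, here is a minimal pure-Python sketch, not the implementation in posthoc.py. It assumes a toy linear scorer in place of a pretrained network, multiplicative Gaussian noise with mean 1 applied to the original weights on each trial, and a hypothetical objective that trades off accuracy against the absolute statistical parity difference. All names, the data, and the values of `lam` and `sigma` are illustrative assumptions.

```python
import random


def predict(weights, rows):
    """Toy linear scorer standing in for a pretrained network (assumption)."""
    return [1 if sum(w * x for w, x in zip(weights, row)) > 0 else 0 for row in rows]


def spd(y_pred, protected):
    """Statistical parity difference: unprivileged (0) minus privileged (1)."""
    unpriv = [p for p, g in zip(y_pred, protected) if g == 0]
    priv = [p for p, g in zip(y_pred, protected) if g == 1]
    return sum(unpriv) / len(unpriv) - sum(priv) / len(priv)


def objective(weights, rows, y_true, protected, lam=0.75):
    """Hypothetical score in [0, 1]; higher means less bias and more accuracy."""
    y_pred = predict(weights, rows)
    acc = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return lam * (1 - abs(spd(y_pred, protected))) + (1 - lam) * acc


def random_perturbation(weights, rows, y_true, protected, n_trials=200, sigma=0.1, seed=0):
    """Keep the best of n_trials multiplicative perturbations of the original weights."""
    rng = random.Random(seed)
    best_weights = list(weights)
    best_score = objective(weights, rows, y_true, protected)
    for _ in range(n_trials):
        # Multiply each original weight by noise drawn from N(1, sigma^2).
        candidate = [w * rng.gauss(1.0, sigma) for w in weights]
        score = objective(candidate, rows, y_true, protected)
        if score > best_score:
            best_weights, best_score = candidate, score
    return best_weights, best_score


# Made-up validation data: two features per row, binary labels and groups.
rows = [[1.0, -0.2], [0.9, -0.8], [0.8, -0.1], [0.7, -0.9],
        [0.2, -0.3], [0.1, -0.3], [0.6, -0.5], [0.2, -0.1]]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
protected = [1, 1, 1, 1, 0, 0, 0, 0]
weights = [1.0, 1.0]

base_score = objective(weights, rows, y_true, protected)
best_weights, best_score = random_perturbation(weights, rows, y_true, protected)
```

Because the unperturbed weights serve as the starting baseline, the returned score can never be worse than the original model's score on the data used for selection.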

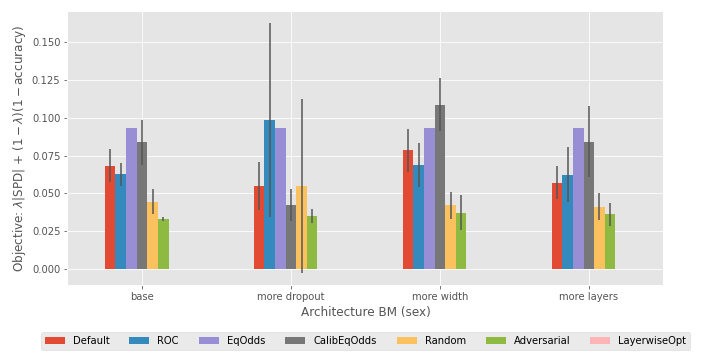

To analyze the results of the experiments and reproduce the plots, run through the analyze_results.ipynb Jupyter notebook.

To clean up the config directories run

$ bash cleanup.sh
