Using SimBA to create and access DeepLabCut tracking models (optional).

SimBA requires tracking data as input (currently in .csv file format), which can be generated by pose-estimation tools such as DeepLabCut. DeepLabCut projects can be created and managed through SimBA. However, if you prefer to build and access DeepLabCut pose-estimation models in the DeepLabCut interface itself, you can generate the tracking data there and skip this SimBA step. The resulting pose-estimation data can then be imported directly, in .csv file format, when you create the behavioral classifier project. Note that at least some versions of DeepLabCut save data in hdf5 format by default. For information on how to output DeepLabCut data in .csv file format, see the DeepLabCut repository.
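As a minimal sketch of what the .csv input looks like, the standard-library function below reads a DeepLabCut-style CSV and flattens its header into one column name per value (e.g. `Nose_1_x`). It assumes the single-animal layout with three header rows (scorer, bodyparts, coords); multi-animal DeepLabCut output adds an `individuals` row. The scorer string and body-part name in the example are made up, and `read_dlc_csv` is a hypothetical helper, not part of SimBA or DeepLabCut.

```python
import csv
import io
from typing import List, TextIO, Tuple


def read_dlc_csv(handle: TextIO) -> Tuple[List[str], List[List[float]]]:
    """Flatten a three-row DeepLabCut CSV header into '<bodypart>_<coord>' names.

    Assumes the single-animal layout: row 1 = scorer, row 2 = bodyparts,
    row 3 = coords (x, y, likelihood), followed by one row per frame whose
    first cell is the frame index.
    """
    reader = csv.reader(handle)
    next(reader)  # scorer row: the model name, not needed for column names
    bodyparts = next(reader)
    coords = next(reader)
    # The first column holds the row labels / frame index, so skip it.
    columns = [f"{bp}_{c}" for bp, c in zip(bodyparts[1:], coords[1:])]
    rows = [[float(v) for v in row[1:]] for row in reader if row]
    return columns, rows


# Tiny in-memory example with one body part and one frame.
example = io.StringIO(
    "scorer,DLC_resnet50,DLC_resnet50,DLC_resnet50\n"
    "bodyparts,Nose_1,Nose_1,Nose_1\n"
    "coords,x,y,likelihood\n"
    "0,101.5,220.0,0.98\n"
)
columns, rows = read_dlc_csv(example)
print(columns)  # ['Nose_1_x', 'Nose_1_y', 'Nose_1_likelihood']
```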

Note: This part of SimBA was written early on, when DeepLabCut was accessed exclusively through the command line and did not yet come with the very nice graphical user interface it has today. We added the DeepLabCut parts of SimBA at that point to make many of the important DeepLabCut functions accessible to all members of the lab, regardless of previous programming experience.

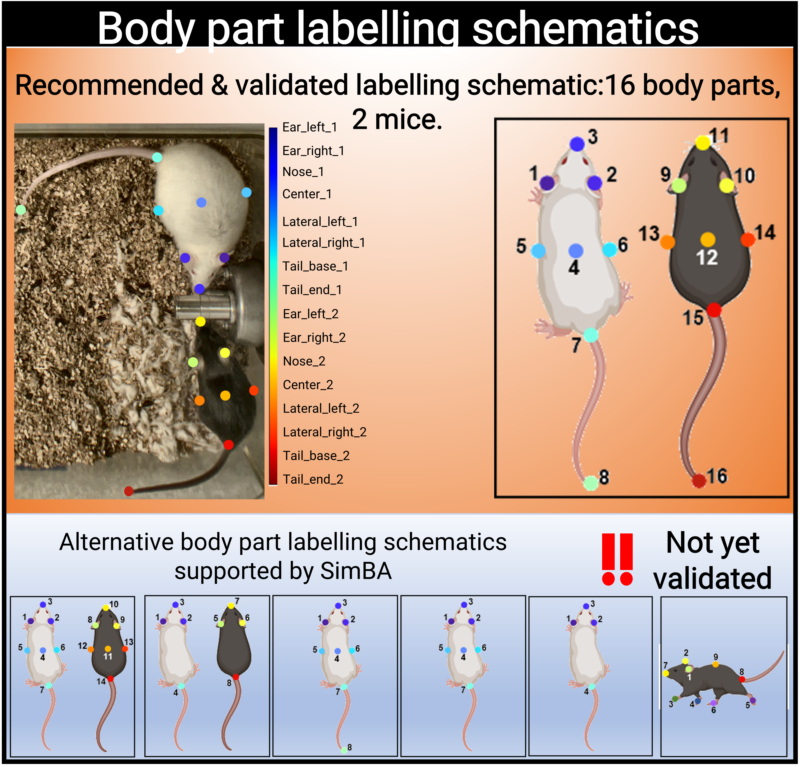

SimBA uses the tracking data to construct a battery of features (see the SimBA feature list for examples and descriptions). The number of labelled body parts, and which body parts are labelled and tracked, determines which features can be calculated. We have validated SimBA by labelling 16 body parts (8 body parts on each of two mice), and we strongly advise users to use the same body part labelling schematic if possible. To set the DeepLabCut config to use the 16 body part schematic, check the `Apply Golden Aggression Config` box (see Step 1, below). Pre-trained models using the 16 body part schematic and two animals can also be downloaded through OSF. However, SimBA also accepts alternative body part labelling schematics (see the figure above). These currently include:

- 7 body parts on each of two mice, recorded from a 90° overhead angle

- 4 body parts on each of two mice, recorded from a 90° overhead angle

- 8 body parts on a single mouse, recorded from a 90° overhead angle

- 7 body parts on a single mouse, recorded from a 90° overhead angle

- 4 body parts on a single mouse, recorded from a 90° overhead angle

- 9 body parts on a single mouse, recorded from a 45° side angle

Important: So far we have only validated machine learning models that use features calculated from 16 labelled body parts on two individual animals.
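Because the total body-part count alone is ambiguous (8 points could mean 4 on each of two mice or 8 on one mouse), a lookup of the supported schematics has to be keyed on the number of animals, the body parts per animal, and the camera view. The sketch below is a hypothetical helper that simply encodes the list above; the `"top"`/`"side"` view labels are our own shorthand, not SimBA settings.

```python
from typing import Optional

# Supported schematics from the list above:
# (animals, body parts per animal, camera view) -> description.
SUPPORTED_SCHEMATICS = {
    (2, 8, "top"): "16 body parts, two mice, 90° overhead (validated)",
    (2, 7, "top"): "14 body parts, two mice, 90° overhead",
    (2, 4, "top"): "8 body parts, two mice, 90° overhead",
    (1, 8, "top"): "8 body parts, one mouse, 90° overhead",
    (1, 7, "top"): "7 body parts, one mouse, 90° overhead",
    (1, 4, "top"): "4 body parts, one mouse, 90° overhead",
    (1, 9, "side"): "9 body parts, one mouse, 45° side angle",
}


def match_schematic(n_animals: int, bodyparts_per_animal: int,
                    view: str) -> Optional[str]:
    """Return the matching schematic description, or None if it is not supported."""
    return SUPPORTED_SCHEMATICS.get((n_animals, bodyparts_per_animal, view))
```

For example, `match_schematic(1, 9, "top")` returns `None`, because the 9-body-part schematic is only listed for the 45° side view.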

The DeepLabCut workflow consists of the following steps. For detailed information on each of them, see the DeepLabCut repository.

- Extract Frames

- Label Frames

- Generate Training Set

- Download Weights and Train Model

- Evaluate Model

- Analyze Videos

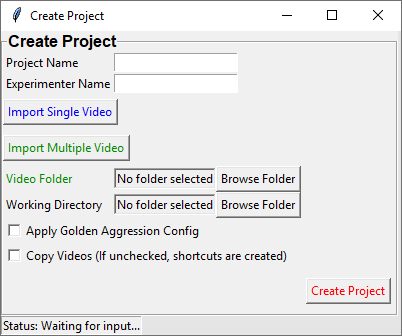

Use this menu to create a new project for your DeepLabCut pose-estimation tracking analysis.

Step 1: Generate a new DeepLabCut project.

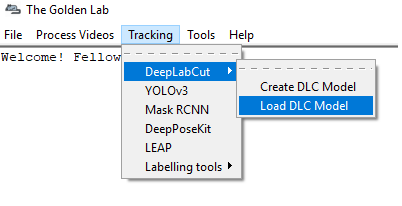

- In the main SimBA window, click `Tracking --> DeepLabCut --> Create DLC Model`. A new window will pop up.

- Enter the name of your project in the `Project name` entry box.

- Enter the name of the experimenter in the `Experimenter name` entry box.

Note: Spaces are not allowed in the project name or the experimenter name.

- Next, import the videos into your project. If you are adding only one video, switch to the single-video option (the input field should change from green to blue), then click `Browse File` to select a video file.

- To import multiple videos into your project, switch to the multiple-video option and the green folder input will appear. Click `Browse Folder` and choose a folder containing the videos to import into your project.

Note: The default setting is to import multiple videos.

- Next, select the main directory that your project will be located in: next to `Project directory`, click `Browse Folder` and choose a directory.

- To use the settings used by the Golden lab (tracking eight body parts on each of two mice), check the `Apply Golden Aggression Config` box. If you wish to generate your own DeepLabCut tracking config, leave this box unticked.

- You can either copy all the videos to your DeepLabCut project folder or create shortcuts to them. If you check the `Copy Videos` checkbox, the videos will be copied to your project folder; if you leave it unticked, shortcuts to your videos will be created.

- Click `Create Project` to create your DeepLabCut project. The project will be located in the chosen working directory.
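For readers who script the same step, a hedged sketch: `create_project` below enforces the no-spaces rule and then calls DeepLabCut's `create_new_project`, which returns the path to the new config.yaml. The wrapper and helper names are hypothetical, and the `dlc` parameter exists only so the function can be exercised with a stub instead of a real DeepLabCut installation.

```python
import re
from typing import Any, List, Optional


def is_valid_name(name: str) -> bool:
    """Project and experimenter names must be non-empty and contain no spaces."""
    return bool(name) and re.search(r"\s", name) is None


def create_project(project: str, experimenter: str, videos: List[str],
                   working_directory: str, copy_videos: bool = False,
                   dlc: Optional[Any] = None) -> str:
    """Create a DeepLabCut project and return the path to its config.yaml."""
    for label, value in (("Project name", project), ("Experimenter name", experimenter)):
        if not is_valid_name(value):
            raise ValueError(f"{label} must be non-empty and contain no spaces: {value!r}")
    if dlc is None:
        import deeplabcut as dlc  # lazy import: validation works without DLC installed
    # copy_videos mirrors the 'Copy Videos' checkbox (False -> links instead of copies).
    return dlc.create_new_project(project, experimenter, videos,
                                  working_directory=working_directory,
                                  copy_videos=copy_videos)
```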

These menus are used to load existing DeepLabCut projects.

- Go to the project folder.

- Open the config.yaml file in a text editor such as Notepad.

- Change the settings if necessary.

- In the main SimBA window, click `Tracking --> DeepLabCut --> Load DLC Model`. A new window will pop up.

- Under the `Load Model` tab, click `Browse File` and load the config.yaml file from your project folder.

- Under the `Add videos into project` tab and the `Single Video` heading, click `Browse File` and select the video you wish to add to the project.

- Click `Add single video`.

- Under the `Add videos into project` tab and the `Multiple Videos` heading, click `Browse Folder` and select the folder containing the videos you wish to add to the project.

- Click `Add multiple videos`.
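The folder-based option above can be sketched in a few lines: collect the video files in a folder, then pass them to DeepLabCut's `add_new_videos`. The extension set and the helper names are assumptions for illustration, not what SimBA does internally; `dlc` can be replaced by a stub for testing.

```python
from pathlib import Path
from typing import Any, List, Optional

# Assumption: the container formats you record in; adjust as needed.
VIDEO_EXTENSIONS = {".mp4", ".avi", ".mov", ".flv"}


def list_videos(folder: str) -> List[str]:
    """Return the video files directly inside `folder`, sorted by path."""
    return sorted(str(p) for p in Path(folder).iterdir()
                  if p.is_file() and p.suffix.lower() in VIDEO_EXTENSIONS)


def add_videos_from_folder(config_path: str, folder: str, copy_videos: bool = False,
                           dlc: Optional[Any] = None) -> List[str]:
    """Add every video in `folder` to an existing DeepLabCut project."""
    videos = list_videos(folder)
    if dlc is None:
        import deeplabcut as dlc  # lazy import so list_videos works without DLC
    dlc.add_new_videos(config_path, videos, copy_videos=copy_videos)
    return videos
```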

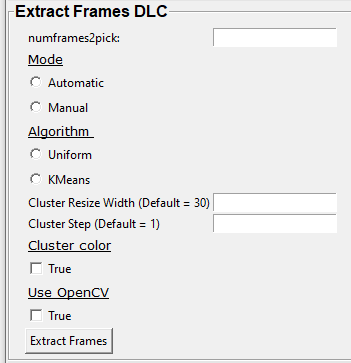

Step 4: Extract frames for labelling body parts using DeepLabCut. For more details, see the DeepLabCut repository.

- Under the `Extract/label frames` tab and the `Extract Frames DLC` heading, enter the number of frames you wish to extract from the videos in the `numframes2pick` entry box.

- Select the `Mode` of extracting the frames:

  - `Automatic` selects the frames to extract automatically.

  - `Manual` allows you to select the frames to extract yourself.

- Select the `Algorithm` used to pick the frames to extract:

  - `Uniform` samples frames uniformly over time.

  - `KMeans` clusters the frames by visual appearance with the k-means algorithm and picks a frame at random from each cluster.

  - `Cluster Resize Width` is a KMeans setting: the width to which frames are downsampled before clustering. The default is 30.

  - `Cluster Step` is a KMeans setting: only every n-th frame is used for clustering. The default is 1 (every frame).

- To use color information for clustering, check the `True` box next to `Cluster Color`.

- If you wish to use OpenCV to extract frames, check the `True` box. If you wish to use ffmpeg, keep this box unchecked.

- Click `Extract Frames` to begin extracting the frames.
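To make the KMeans option less of a black box, here is a simplified, pure-Python sketch of k-means frame selection: cluster the (downsampled, flattened) frames, then draw one frame at random from each cluster, using only every `cluster_step`-th frame as the Cluster Step setting does. DeepLabCut's own implementation differs in detail (it resizes frames to Cluster Resize Width and uses scikit-learn's MiniBatchKMeans), so treat this as an illustration of the idea rather than a replacement. In scripts, the GUI options correspond to the `mode`, `algo`, `cluster_resizewidth`, `cluster_step`, `cluster_color` and `opencv` arguments of `deeplabcut.extract_frames`.

```python
import random
from typing import List, Sequence


def _sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_pick_frames(features: List[Sequence[float]], n_frames: int,
                       cluster_step: int = 1, n_iter: int = 20,
                       seed: int = 0) -> List[int]:
    """Return up to `n_frames` frame indices, one drawn from each k-means cluster.

    `features` holds one downsampled, flattened frame per entry. Only every
    `cluster_step`-th frame is clustered, mimicking the Cluster Step option.
    """
    rng = random.Random(seed)
    candidates = list(range(0, len(features), cluster_step))
    k = min(n_frames, len(candidates))
    if k == 0:
        return []
    # Initialise the centroids from k randomly chosen (distinct) frames.
    centroids = [list(features[i]) for i in rng.sample(candidates, k)]
    labels: List[int] = []
    for _ in range(n_iter):
        # Assignment step: each candidate frame joins its nearest centroid.
        labels = [min(range(k), key=lambda c: _sq_dist(features[i], centroids[c]))
                  for i in candidates]
        # Update step: move each centroid to the mean of its member frames.
        for c in range(k):
            members = [features[i] for i, lab in zip(candidates, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    # Selection step: draw one frame at random from each non-empty cluster.
    picked = []
    for c in range(k):
        members = [i for i, lab in zip(candidates, labels) if lab == c]
        if members:
            picked.append(rng.choice(members))
    return sorted(picked)
```

For example, with ten frames forming two well-separated groups, `kmeans_pick_frames(features, 2)` returns one index from each group, which is why KMeans tends to give more visually diverse training frames than picking by time alone.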

Step 5: Label frames.

- Under the `Label Frames` heading in the `Extract/label frames` tab, click `Label Frames` and the DeepLabCut - Labelling ToolBox will pop up.

- Click `Load Frames`, located in the bottom left-hand corner.

- Navigate to the folder named after the video whose extracted frames you want to label, and click `Select Folder`. These folders are located in `workingdirectory/yourproject/labeled-data/`.

- Now you can start labelling frames. For more details, see the DeepLabCut repository.

- Once all the frames are labelled, move on to Step 6.
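Before opening the Labelling ToolBox, it can help to check which videos actually received frames. The sketch below assumes DeepLabCut's layout of one folder per video under `labeled-data/`, with extracted frames saved as PNG images; `count_extracted_frames` is a hypothetical helper.

```python
from pathlib import Path
from typing import Dict


def count_extracted_frames(project_dir: str) -> Dict[str, int]:
    """Map each per-video folder in labeled-data/ to its number of PNG frames."""
    labeled = Path(project_dir) / "labeled-data"
    return {folder.name: len(list(folder.glob("*.png")))
            for folder in sorted(labeled.iterdir()) if folder.is_dir()}
```

A count of 0 for a video suggests that no frames were extracted from it, so there is nothing to load for that folder.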

Step 6: Check labels.

- Under `Check Labels`, click `Check Labelled Frames` to check the labelled frames.

- Under `Generate Training Set`, click the `Generate training set` button to generate a new training set.

Train the model using the training set.

- In the `iteration` entry box, enter an integer (e.g., 0) representing the model iteration number, then click `Update iteration`. If left blank, the most recently used iteration number is used; for a new project, it defaults to 0.

- In the `init_weight` box, specify the path to the initial weights. If this is left blank, it defaults to ResNet-50. To use other weights, click `Browse File`. Pre-trained weights for mouse and rat resident-intruder protocols using 16 body parts, as well as other pre-trained weights, can be downloaded through OSF. Downloaded weights may consist of three files (.index, .meta, .data); in the `init_weight` box, specify the path to any of the three downloaded files.
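The three downloaded files are parts of one TensorFlow checkpoint and share a common prefix, which is what DeepLabCut's `init_weights` setting refers to. Assuming SimBA reduces whichever file you pick to that prefix, the conversion can be sketched as follows; `checkpoint_prefix` and the snapshot name are illustrative only.

```python
import re

# TensorFlow checkpoint parts share one prefix, e.g. snapshot-1030000.index,
# snapshot-1030000.meta and snapshot-1030000.data-00000-of-00001.
_CHECKPOINT_SUFFIX = re.compile(r"\.(index|meta|data-\d+-of-\d+)$")


def checkpoint_prefix(path: str) -> str:
    """Strip the .index/.meta/.data-* suffix to get the shared checkpoint prefix."""
    prefix, n_replaced = _CHECKPOINT_SUFFIX.subn("", path)
    if n_replaced == 0:
        raise ValueError(f"Not a TensorFlow checkpoint file: {path}")
    return prefix
```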

- Click `Evaluate Network` to evaluate the trained model. For more details, see the DeepLabCut repository.

- Under the `Video Analysis` tab and the `Single Video Analysis` header, click `Browse File` and select one video file.

- Click `Single Video Analysis`.

- Under the `Video Analysis` tab and the `Multiple Videos Analysis` header, click `Browse Folder` and select a folder containing the videos you wish to analyze.

- Enter the video format in the `Video type` entry box (e.g., mp4, avi). Do not include a leading dot: for example, enter mp4, flv, or avi rather than .mp4, .flv, or .avi.

- Click `Multi Videos Analysis`.
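A scripted equivalent of the multi-video analysis, as a sketch under assumptions: `normalize_video_type` enforces the no-dot rule described above, and `analyze_folder` (a hypothetical wrapper) calls DeepLabCut's `analyze_videos` with `save_as_csv=True`, so that a .csv file, the format SimBA imports, is written next to DeepLabCut's default .h5 output.

```python
from typing import Any, Optional


def normalize_video_type(video_type: str) -> str:
    """Turn '.mp4' or ' mp4 ' into 'mp4': no leading dot, no surrounding spaces."""
    return video_type.strip().lstrip(".")


def analyze_folder(config_path: str, folder: str, video_type: str,
                   dlc: Optional[Any] = None) -> None:
    """Analyze every video of one type in `folder` and also write CSV output."""
    ext = normalize_video_type(video_type)
    if dlc is None:
        import deeplabcut as dlc  # lazy import so normalize_video_type works without DLC
    # save_as_csv=True asks DeepLabCut to write a .csv alongside the default .h5 output.
    dlc.analyze_videos(config_path, [folder], videotype=ext, save_as_csv=True)
```

The case of the extension is deliberately left untouched, since lower-casing `MP4` could make the file filter miss videos on case-sensitive file systems.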

- Click `Browse File` to select the video.

- Click `Plot Results`. For more details, see the DeepLabCut documentation.

This step will generate a video with labelled tracking points.

- Click `Browse File` to select the video.

- To save the individual frames with tracking points, and not just the compressed video file, check the `Save Frames` box. Rendering the whole video this way takes longer; if you only want to check how the model performs on a few frames, you can render a few images, terminate the process, and save time.

- Click `Create Video`.
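In scripts, the plotting and video-rendering steps appear to correspond to DeepLabCut's `plot_trajectories` and `create_labeled_video`, whose `save_frames` argument plays the role of the `Save Frames` checkbox. The wrapper below is a hypothetical sketch, not SimBA's code; `dlc` can be a stub for testing.

```python
from typing import Any, List, Optional


def render_results(config_path: str, videos: List[str], save_frames: bool = False,
                   dlc: Optional[Any] = None) -> None:
    """Plot trajectories, then render a video with the tracked points overlaid."""
    if dlc is None:
        import deeplabcut as dlc  # lazy import; pass a stub for testing
    dlc.plot_trajectories(config_path, videos)
    # save_frames mirrors the 'Save Frames' checkbox: slower, but keeps every frame.
    dlc.create_labeled_video(config_path, videos, save_frames=save_frames)
```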

There are two ways to improve DeepLabCut-generated tracking models:

- Extract more frames to train the model.

- Correct the predictions made by the model on new frames.

This step automates the Extract Frames process so that frames are extracted only from selected videos. The function copies the settings from the DeepLabCut config.yaml into a new, temporary yaml file, leaving out the videos listed in config.yaml and adding the new videos that you specify. It then extracts frames only from the videos included in the temporary yaml file.

To add a single video:

- Under `Load Model`, click `Browse File` and select the config.yaml file.

- Under the `[Generate temp yaml]` tab --> `Single video`, click `Browse File` and select the video.

- Click `Add single video`.

- A temp.yaml file will be generated in the same folder as the project folder.

To add multiple videos:

- Under `Load Model`, click `Browse File` and select the config.yaml file.

- Under the `[Generate temp yaml]` tab --> `Multiple videos`, click `Browse Folder` and select the folder containing only the videos you want to add to your project and extract additional frames from.

- Click `Add multiple videos`.

- A temp.yaml file will be generated in the same folder as the project folder.

- Under `Load Model`, click `Browse File` and select the temp.yaml file that you have just created.

- Now you can extract frames from the videos that you have just added.
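The temporary-yaml mechanism can be sketched on a config that has already been loaded into a dictionary (for example with a YAML library): copy everything, then replace `video_sets` with only the new videos. The sketch assumes DeepLabCut's `video_sets` layout, in which each video path maps to a `crop` string of the form `x1, x2, y1, y2`; `make_temp_config` is hypothetical, and writing the result out as temp.yaml is left out.

```python
import copy
from typing import Any, Dict, Tuple


def make_temp_config(cfg: Dict[str, Any],
                     new_videos: Dict[str, Tuple[int, int]]) -> Dict[str, Any]:
    """Copy a loaded config.yaml dict, keeping only `new_videos` in video_sets.

    `new_videos` maps each video path to its (width, height); the crop entry
    covers the full frame in the assumed "x1, x2, y1, y2" string format.
    """
    temp = copy.deepcopy(cfg)  # leave the original project config untouched
    temp["video_sets"] = {
        path: {"crop": f"0, {width}, 0, {height}"}
        for path, (width, height) in new_videos.items()
    }
    return temp
```

Deep-copying keeps every other setting (body parts, iteration, and so on) identical to the project config, so frames extracted via the temporary file are labelled with the same schematic.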

- Go to `Tracking --> DeepLabCut --> Load DLC Model`.

- Under `Load Model`, click `Browse File` and load the config.yaml file from the project folder.

- Under `Extract Outliers`, click `Browse File` to select the videos you want to extract outlier frames from.

- Click `Extract Outliers`.

- Under `Label Outliers`, click `Refine Outliers`. The DeepLabCut - Refinement ToolBox will pop up.

- In the bottom left-hand corner, click `Load labels` and select the machinelabels file to start correcting the tracking points. For more information on how to correct outliers in DeepLabCut, see the DeepLabCut repository.

- Under `Merge Labeled Outliers`, click `Merge Labelled Outliers` to add the labelled outlier frames to your dataset.
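The outlier loop maps onto three DeepLabCut functions: `extract_outlier_frames`, `refine_labels` (which opens the Refinement ToolBox) and `merge_datasets`. Because the merge should only run once the corrections are done, the hypothetical sketch below splits the loop into two calls. `find_machine_labels` assumes the model's predictions for the outlier frames are saved in files whose names start with `machinelabels` inside each video's `labeled-data/` folder.

```python
from pathlib import Path
from typing import Any, List, Optional


def find_machine_labels(project_dir: str) -> List[str]:
    """List files starting with 'machinelabels' in each labeled-data/<video>/ folder."""
    labeled = Path(project_dir) / "labeled-data"
    return sorted(str(p) for p in labeled.glob("*/machinelabels*"))


def start_outlier_round(config_path: str, videos: List[str],
                        dlc: Optional[Any] = None) -> None:
    """Extract outlier frames, then open the Refinement ToolBox to correct them."""
    if dlc is None:
        import deeplabcut as dlc  # lazy import; pass a stub for testing
    dlc.extract_outlier_frames(config_path, videos)  # may ask for confirmation
    dlc.refine_labels(config_path)


def finish_outlier_round(config_path: str, dlc: Optional[Any] = None) -> None:
    """Merge the corrected outlier frames into the training dataset."""
    if dlc is None:
        import deeplabcut as dlc
    dlc.merge_datasets(config_path)
```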