This repo contains code for the paper, "Automatically Neutralizing Subjective Bias in Text".

Concretely this means algorithms for

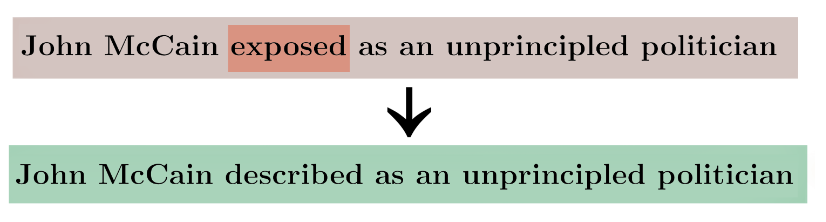

- Identifying biased words in sentences.

- Neutralizing bias in sentences.

This repo was tested with Python 3.7.7.

These commands will download data and a pretrained model before running inference.

$ cd src/

$ python3 -m venv venv

$ source venv/bin/activate

$ pip install -r requirements.txt

$ python

>>> import nltk; nltk.download("punkt")

$ sh download_data_ckpt_and_run_inference.sh

You can also run sh integration_test.sh to verify that everything is installed and working correctly.

Click this link to download the bias dataset (100 MB, expands to 500 MB).

If that link is broken, try this mirror: download link

Click this link to download a MODULAR model checkpoint. We used this command to train it.

harvest/: Code for making the dataset. It works by crawling and filtering Wikipedia for bias-driven edits.

src/: Code for training models and using trained models to run inference. The models implemented here are referred to as MODULAR and CONCURRENT in the paper.

Please see src/README.md for bias neutralization directions.

See harvest/README.md for making a new dataset (as opposed to downloading the one available above).

- Get your data into the same format as the bias dataset. You can do this by making a tsv file with columns [id, src tokenized, tgt tokenized, src raw, tgt raw] and then adding POS tags, etc. with this script.

- Train the pretrained model on your custom dataset, either with the train command used to build that model or by following the instructions in the src/ directory to write your own training commands.
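As a rough illustration of the first step, the sketch below writes sentence pairs into a five-column tsv in the order [id, src tokenized, tgt tokenized, src raw, tgt raw]. Everything named here is an assumption for illustration only: `simple_tokenize` is a hypothetical stand-in for whatever tokenizer the bias dataset actually uses, the ids are simple running integers, and no header row is written. Check a few lines of the downloaded dataset and match its conventions before training. The POS-tagging step is not covered here.

```python
import re
import sys


def simple_tokenize(text):
    """Hypothetical placeholder tokenizer: splits words and punctuation.

    This is NOT necessarily the tokenization used by the bias dataset;
    swap in the tokenizer that matches the downloaded data.
    """
    return " ".join(re.findall(r"\w+|[^\w\s]", text))


def clean(text):
    """Collapse tabs, newlines, and repeated spaces so fields stay tsv-safe."""
    return " ".join(text.split())


def build_rows(pairs):
    """Turn (biased source, neutral target) pairs into five-column rows."""
    rows = []
    for i, (src, tgt) in enumerate(pairs):
        src, tgt = clean(src), clean(tgt)
        # Column order: id, src tokenized, tgt tokenized, src raw, tgt raw.
        rows.append([str(i), simple_tokenize(src), simple_tokenize(tgt), src, tgt])
    return rows


def write_tsv(rows, handle):
    """Write rows as tab-separated lines, without a header row (assumption)."""
    for row in rows:
        handle.write("\t".join(row) + "\n")


if __name__ == "__main__":
    example_pairs = [
        ("Jones is a notorious liar.", "Jones is a liar."),
    ]
    write_tsv(build_rows(example_pairs), sys.stdout)
```

To produce a file rather than printing, pass an open file handle (for example `open("my_data.tsv", "w", encoding="utf-8")`, a hypothetical filename) to `write_tsv`.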