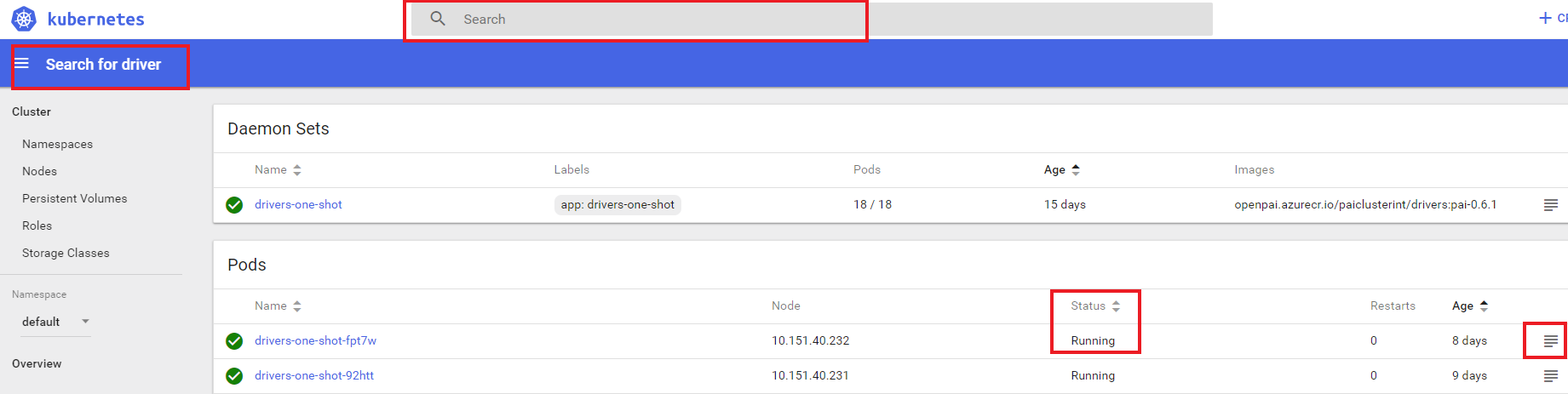

Dashboard:

http://<master>:9090

Search for "driver" and view the driver status.

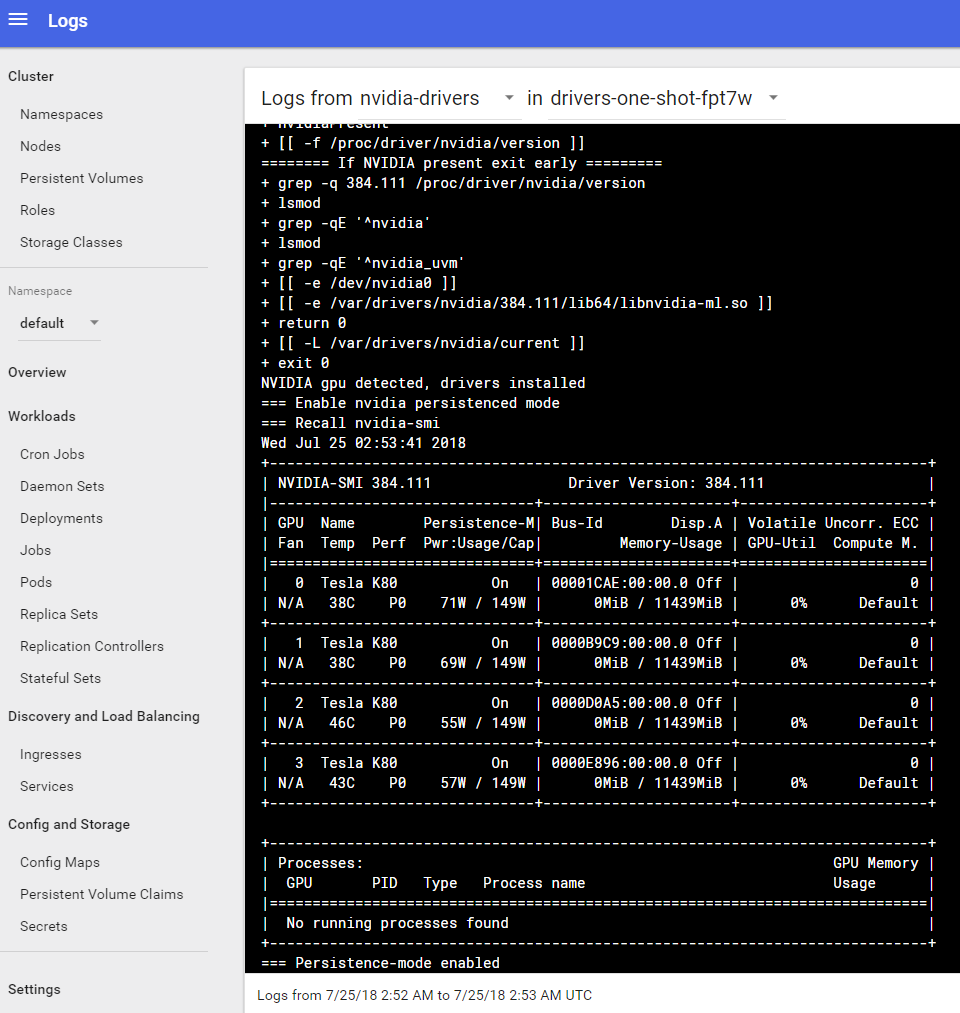

View the driver logs. This log shows the driver in a healthy state.
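You can also check the same status and logs from the command line. This is a minimal sketch. It assumes kubectl on the master is configured for this cluster. It also assumes the driver pods are named with the prefix drivers-one-shot- in the default namespace, which is taken from the container names in the sample output of the next step. driver_pods is a hypothetical helper, not part of OpenPAI.

```shell
# driver_pods: read `kubectl get pods --no-headers` output on stdin and print
# the NAME and STATUS of each pod whose name starts with the given prefix.
# Assumption: the prefix defaults to "drivers-one-shot-", taken from the
# sample container names in this guide.
driver_pods() {
  local prefix="${1:-drivers-one-shot-}"
  awk -v prefix="$prefix" 'index($1, prefix) == 1 { print $1, $3 }'
}

# Usage (on the master, with kubectl pointed at the cluster):
#   kubectl get pods --no-headers | driver_pods
#   kubectl logs drivers-one-shot-d7fr4    # view one driver pod's log
```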

```
# (1) find the driver container on the server
~$ sudo docker ps | grep driver
daeaa9a81d3f aiplatform/drivers "/bin/sh -c ./inst..." 8 days ago Up 8 days k8s_nvidia-drivers_drivers-one-shot-d7fr4_default_9d91059c-9078-11e8-8aea-000d3ab5296b_0
ccf53c260f6f gcr.io/google_containers/pause-amd64:3.0 "/pause" 8 days ago Up 8 days k8s_POD_drivers-one-shot-d7fr4_default_9d91059c-9078-11e8-8aea-000d3ab5296b_0

# (2) log in to the driver container
~$ sudo docker exec -it daeaa9a81d3f /bin/bash

# (3) check the driver version
root@~/drivers# nvidia-smi
Fri Aug 3 01:53:04 2018
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 384.111                 Driver Version: 384.111                   |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla K80           On   | 0000460D:00:00.0 Off |                    0 |
| N/A   31C    P8    31W / 149W |      0MiB / 11439MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```
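You can script steps (1) to (3). Note that grep driver also matches the pod's pause container (k8s_POD_...). The sketch below therefore selects the container whose name starts with k8s_nvidia-drivers_, an assumption based on the sample output above. It then queries only the driver version through nvidia-smi's CSV query mode instead of printing the full table. find_driver_container and driver_version are hypothetical helper names.

```shell
# find_driver_container: read `docker ps` output on stdin and print the ID of
# the driver container, skipping the Kubernetes pause container (k8s_POD_...)
# that `grep driver` also matches.
# Assumption: the driver container's name starts with "k8s_nvidia-drivers_",
# as in the sample output; NAMES is the last column of `docker ps`.
find_driver_container() {
  awk '$NF ~ /^k8s_nvidia-drivers_/ { print $1 }'
}

# driver_version: print the NVIDIA driver version seen inside a container,
# one line per GPU. Assumes nvidia-smi is on the container's PATH.
driver_version() {
  sudo docker exec "$1" nvidia-smi --query-gpu=driver_version --format=csv,noheader
}

# Usage on the server:
#   id=$(sudo docker ps | find_driver_container)
#   driver_version "$id"
```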

The data path is set by cluster.common.data-path in services-configuration.yaml. The default value is /datastorage.

```
# SSH to the master machine
~$ ls /datastorage
hadooptmp hdfs launcherlogs prometheus yarn zoodata
```
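Instead of assuming the default, you can read the data path from the configuration file before listing it. This is a rough sketch, not a YAML parser. The hypothetical pai_data_path helper takes the first data-path: line it finds and falls back to /datastorage when there is none.

```shell
# pai_data_path: print the data path configured in the given services
# configuration file, falling back to the default /datastorage.
# Simplification: uses the first line of the form "data-path: <value>" and
# ignores YAML nesting, so it assumes that key appears only once.
pai_data_path() {
  local conf="$1" value=""
  if [ -f "$conf" ]; then
    value=$(awk -F': *' '$1 ~ /^ *data-path$/ { gsub(/"/, "", $2); print $2; exit }' "$conf")
  fi
  printf '%s\n' "${value:-/datastorage}"
}

# Usage on the master:
#   ls "$(pai_data_path /path/to/configuration/services-configuration.yaml)"
```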

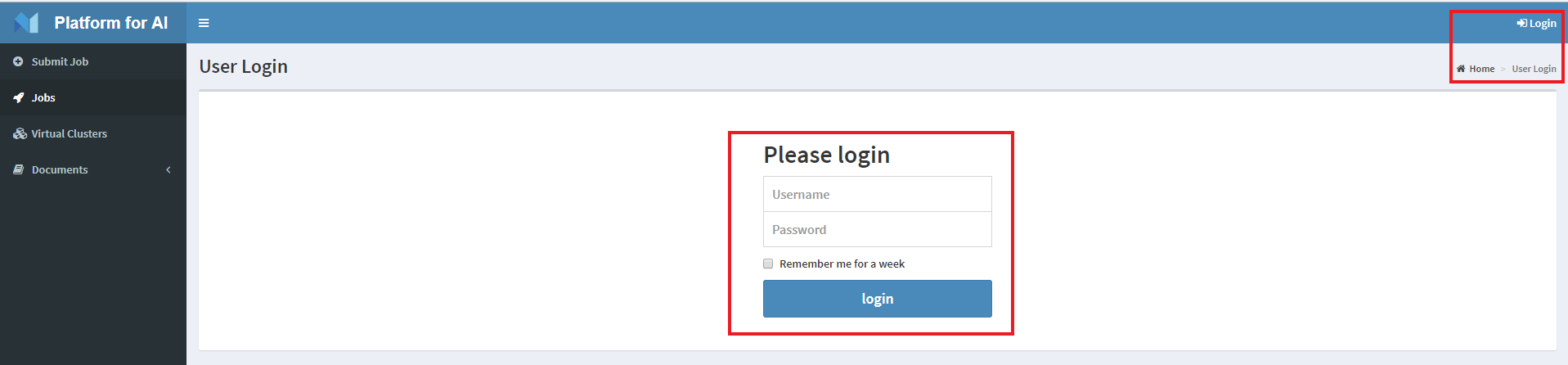

Dashboard:

http://<master>:9286/virtual-clusters.html

Try to log in:

Note: The username and password are configured in the rest-server field of services-configuration.yaml.

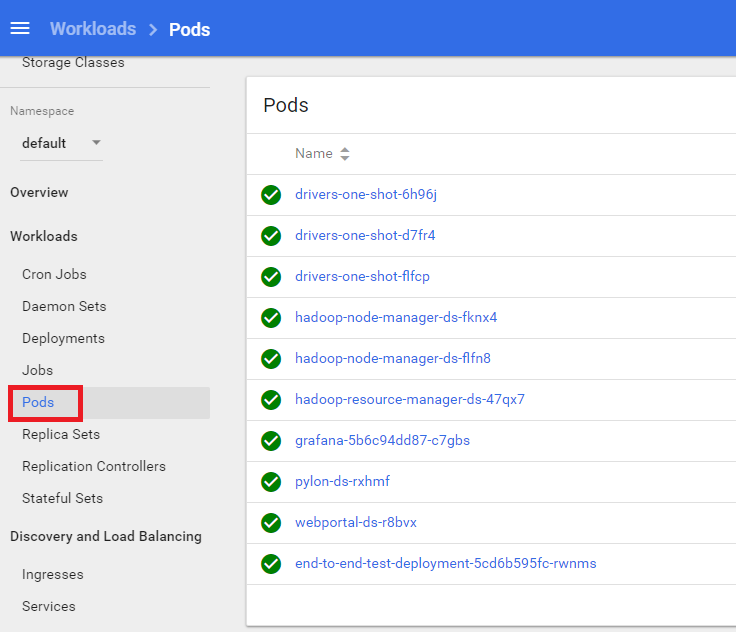

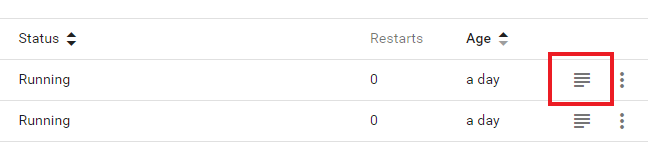
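To check the configured login without scrolling through the whole file, you can print the rest-server section on its own. This is a minimal sketch that assumes rest-server is a top-level key in the configuration file. show_yaml_section is a hypothetical helper, and the field names inside the section depend on your OpenPAI version.

```shell
# show_yaml_section: print a top-level YAML section (the "KEY:" line plus the
# indented lines after it) from the given file.
# Simplification: a section ends at the next line that starts in column 1
# with something other than a space or "#"; this is not a full YAML parser.
show_yaml_section() {
  local conf="$1" key="$2"
  awk -v key="$key" '
    index($0, key ":") == 1 { inside = 1; print; next }
    inside && /^[^ #]/      { exit }
    inside                  { print }
  ' "$conf"
}

# Usage:
#   show_yaml_section /path/to/configuration/services-configuration.yaml rest-server
```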

- Monitor

From the Kubernetes web portal:

Dashboard:

http://<master>:9090

From the OpenPAI watchdog:

- Log

From the Kubernetes web portal:

From each node's container and pod log files:

View container logs under the folder:

```
ls /var/log/containers
```

View pod logs under the folder:

```
ls /var/log/pods
```

- Debug

Because OpenPAI services are deployed on Kubernetes, please refer to the Kubernetes documentation on debugging pods.
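As a starting point for debugging, the sketch below lists pods that are neither Running nor Completed and locates a pod's log files on a node. It assumes kubectl is configured for the cluster. It also assumes the node follows the usual kubelet naming /var/log/containers/<pod>_<namespace>_<container>-<id>.log. unhealthy_pods and pod_log_files are hypothetical helpers.

```shell
# unhealthy_pods: read `kubectl get pods --no-headers` output on stdin and
# print the names of pods whose STATUS (3rd column) is neither Running nor
# Completed, i.e. the pods worth inspecting first.
unhealthy_pods() {
  awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# pod_log_files: list the container log files of a pod on the current node.
# Relies on the kubelet naming <pod>_<namespace>_<container>-<id>.log.
pod_log_files() {
  local pod="$1" dir="${2:-/var/log/containers}" f
  for f in "$dir"/"$pod"_*.log; do
    # Entries are usually symlinks, so accept them even if the target
    # cannot be checked; an unmatched glob stays literal and is skipped.
    if [ -e "$f" ] || [ -L "$f" ]; then
      printf '%s\n' "$f"
    fi
  done
}

# Usage:
#   kubectl get pods --no-headers | unhealthy_pods | xargs -r -n1 kubectl describe pod
#   kubectl logs <pod-name>                          # from the master
#   sudo tail -n 100 $(pod_log_files <pod-name>)     # on the node running the pod
```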

- Update OpenPAI Configuration

Check and refine the following four YAML files:

- layout.yaml

- kubernetes-configuration.yaml

- k8s-role-definition.yaml

- services-configuration.yaml
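Before redeploying, it may help to confirm that all four files are present in the configuration directory. This is a minimal sketch. check_pai_config is a hypothetical helper, and it only checks that the files exist, not that their contents are valid.

```shell
# check_pai_config: report which of the four configuration files are missing
# from a configuration directory; returns non-zero if any is missing.
check_pai_config() {
  local dir="$1" missing=0 f
  for f in layout.yaml kubernetes-configuration.yaml \
           k8s-role-definition.yaml services-configuration.yaml; do
    if [ ! -f "$dir/$f" ]; then
      printf 'missing: %s\n' "$dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_pai_config /path/to/configuration/ && echo "all configuration files present"
```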

- Customize the config for a specific service

If you want to customize a single service, you can find the service's config file and its image Dockerfile under src.

- Update Code & Image

- Customize the image Dockerfile or code

You can find each service's image Dockerfile under src and customize it.

- Rebuild image

You can run the following commands:

Build Docker images:

```
paictl.py image build -p /path/to/configuration/ [ -n image-x ]
```

Push Docker images:

```
paictl.py image push -p /path/to/configuration/ [ -n image-x ]
```

If the -n parameter is specified, only the given image (e.g. rest-server, webportal, watchdog) will be built or pushed.
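When several images need rebuilding, you can run the two commands per image. This sketch assumes it is run from the directory containing paictl.py and that the script is invoked with python, as in the stop command. paictl and rebuild_images are hypothetical wrapper functions. Each image is pushed right after it builds, and the loop stops at the first failure so a broken build is never pushed.

```shell
# paictl: thin wrapper around the OpenPAI control script.
# Assumption: run from the directory that contains paictl.py.
paictl() {
  python paictl.py "$@"
}

# rebuild_images: build and then push each named image, one at a time,
# stopping at the first failure.
# Usage: rebuild_images CONFIG_DIR IMAGE...
rebuild_images() {
  local conf="$1" image
  shift
  for image in "$@"; do
    paictl image build -p "$conf" -n "$image" || return 1
    paictl image push -p "$conf" -n "$image" || return 1
  done
}

# Example:
#   rebuild_images /path/to/configuration/ rest-server webportal
```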

Stop a single service or all services:

```
python paictl.py service stop \
  [ -c /path/to/kubeconfig ] \
  [ -n service-list ]
```

If the -n parameter is specified, only the given services (e.g. rest-server, webportal, watchdog) will be stopped. Otherwise, all PAI services will be stopped.
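A similar wrapper can stop a chosen set of services. The exact format accepted by -n service-list is not described here, so this sketch passes one service per call. An empty first argument omits the optional -c flag, and giving no service names stops all services. paictl and stop_services are hypothetical helpers, and the sketch assumes paictl.py is in the current directory.

```shell
# paictl: thin wrapper around the OpenPAI control script.
# Assumption: run from the directory that contains paictl.py.
paictl() {
  python paictl.py "$@"
}

# stop_services: stop the named services one per call, or all services when
# no name is given. The first argument (kubeconfig path) is required but may
# be empty.
# Usage: stop_services KUBECONFIG [SERVICE...]
stop_services() {
  local kubeconfig="$1" svc
  shift
  # ${kubeconfig:+-c "$kubeconfig"} expands to "-c <path>" only when a
  # kubeconfig was given, and to nothing otherwise.
  if [ "$#" -eq 0 ]; then
    paictl service stop ${kubeconfig:+-c "$kubeconfig"}
    return
  fi
  for svc in "$@"; do
    paictl service stop ${kubeconfig:+-c "$kubeconfig"} -n "$svc" || return 1
  done
}

# Example:
#   stop_services /path/to/kubeconfig rest-server webportal
```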

Boot up a single service or all OpenPAI services.

Please refer to this section for details.

Please refer to the Kubernetes "Troubleshoot Clusters" guide.

- Stack Overflow: If you have questions about OpenPAI, please post them on Stack Overflow under the tag openpai.

- Report an issue: If you have found an issue or bug, or want to request a new feature, please submit it on GitHub.