It's Raw! Audio Generation with State-Space Models

Karan Goel, Albert Gu, Chris Donahue, Christopher Ré

Paper: https://arxiv.org/abs/2202.09729

- Standalone Implementation

- Datasets

- Model Training

- Audio Generation

- Automated Metrics

- Mean Opinion Scores (Amazon MTurk)

Samples of SaShiMi and baseline audio can be found online.

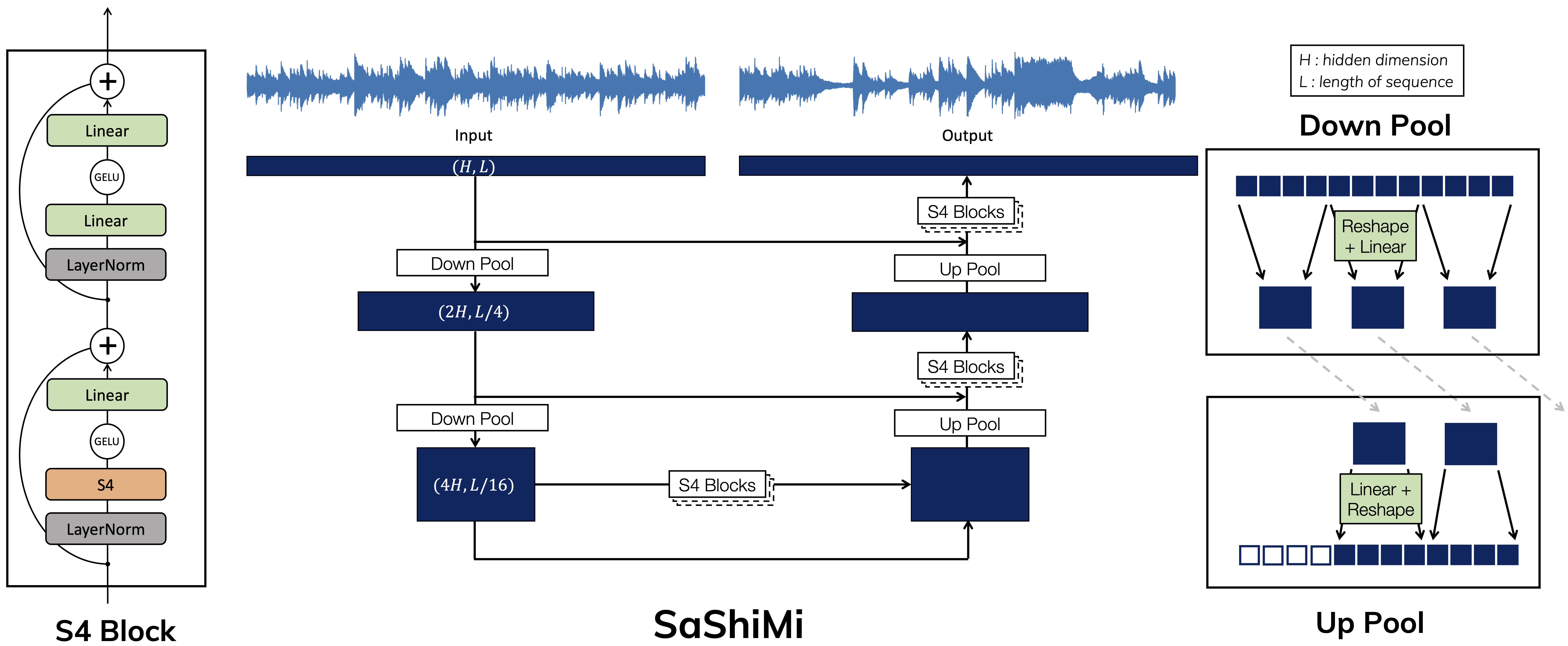

We provide a standalone PyTorch implementation of the SaShiMi architecture backbone in state-spaces/sashimi/sashimi.py, which you can use in your own code. Note that you'll need to also copy over the standalone S4 layer implementation, which can be found at state-spaces/src/models/sequence/ss/standalone/s4.py.

You can treat the SaShiMi module as a sequence-to-sequence map taking (batch, seq, dim) inputs to (batch, seq, dim) outputs i.e.

sashimi = Sashimi().cuda() # refer to docstring for arguments

x = torch.randn(batch_size, seq_len, dim).cuda()

# Run forward

y = sashimi(x) # y.shape == x.shape

If you use SaShiMi for autoregressive generation, you can convert it to a recurrent model at inference time and then step it to generate samples one at a time.

from tqdm import tqdm

with torch.no_grad():
    sashimi.eval()
    sashimi.setup_rnn() # setup S4 layers in recurrent mode
    # alternately, use sashimi.setup_rnn('diagonal') for a speedup

    # Run recurrence
    ys = []
    state = sashimi.default_state(*x.shape[:1], device='cuda')
    for i in tqdm(range(seq_len)):
        y_, state = sashimi.step(x[:, i], state)
        ys.append(y_.detach().cpu())

    ys = torch.stack(ys, dim=1) # ys.shape == x.shape

You can download the Beethoven, YouTubeMix and SC09 datasets from the Huggingface Hub. Details about the datasets can be found in the README files on the respective dataset pages.

For each dataset, you only need to download and unzip the <dataset>.zip file inside the data/ directory at the top-level of the state-spaces repository.

Details about the training-validation-test splits used are also included in the README files on the dataset pages. If you reproduce our results, this splitting will be handled automatically by our training scripts. The specific data processing code that we use can be found at src/dataloaders/audio.py, and dataset definitions for use with our training code are included in src/dataloaders/datasets.py. The dataset configs can be found at configs/datasets/.

SaShiMi models rely on the same training framework as S4 (see the README for details). To reproduce our results or train new SaShiMi models, you can use the following commands:

# Train SaShiMi models on YouTubeMix, Beethoven and SC09

python -m train experiment=sashimi-youtubemix wandb=null

python -m train experiment=sashimi-beethoven wandb=null

python -m train experiment=sashimi-sc09 wandb=null

If you encounter GPU OOM errors on either Beethoven or YouTubeMix, we recommend reducing the sequence length used for training by setting dataset.sample_len to a lower value, e.g. dataset.sample_len=120000. For SC09, we recommend reducing the batch size if GPU memory is an issue, by setting loader.batch_size to a lower value.

We also include implementations of SampleRNN and WaveNet models, which can be trained easily using the following commands:

# Train SampleRNN models on YouTubeMix, Beethoven and SC09

python -m train experiment=samplernn-youtubemix wandb=null

python -m train experiment=samplernn-beethoven wandb=null

python -m train experiment=samplernn-sc09 wandb=null

# Train WaveNet models on YouTubeMix, Beethoven and SC09

python -m train experiment=wavenet-youtubemix wandb=null

python -m train experiment=wavenet-beethoven wandb=null

python -m train experiment=wavenet-sc09 wandb=null

Audio generation models are generally slow to train, e.g. YouTubeMix SaShiMi models take up to a week to train on a single V100 GPU.

Note on model performance: due to limited compute resources, our results involved a best-effort reproduction of the baselines, and relatively limited hyperparameter tuning for SaShiMi. We expect that aggressive hyperparameter tuning should lead to improved results for all models. If you're interested in pushing these models to the limits and have compute $ or GPUs, please reach out to us!

We provide checkpoints for SaShiMi, SampleRNN and WaveNet on YouTubeMix and SC09 on the Huggingface Hub. The checkpoint files are named checkpoints/<model>_<dataset>.pt and are provided for use with our generation script at state-spaces/sashimi/generation.py.

To generate audio, you can use the state-spaces/sashimi/generation.py script. First, put the checkpoints you downloaded at state-spaces/sashimi/checkpoints/.

Then, run the following command to generate audio (see the --help flag for more details):

python -m sashimi.generation --model <model> --dataset <dataset> --sample_len <sample_len_in_steps>

For example, to generate 32 unconditional samples of 1 second 16kHz audio from the SaShiMi model on YouTubeMix, run the following command:

python -m sashimi.generation --model sashimi --dataset youtubemix --n_samples 32 --sample_len 16000

The generated .wav files will be saved to sashimi/samples/. You can generate audio for all models and datasets in a similar way.

Note 1 (log-likelihoods): the saved .wav files will be ordered by their (exact) log-likelihoods, with the first sample having the lowest log-likelihood. This is possible since all models considered here have exact, tractable likelihoods. We found that samples near the bottom (i.e. those with the lowest likelihoods) or close to the top (i.e. those with the highest likelihoods) tend to have worse quality. Concretely, throwing out the bottom 40% and top 5% of samples is a simple heuristic for improving average sample quality and performance on automated generation metrics (see Appendix C.3.1 of our paper for details).
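The heuristic in Note 1 can be sketched in a few lines of Python. This is only an illustration, not the code used by the generation script: the function name `trim_by_likelihood_rank` and its parameters are hypothetical, and it assumes the input list is already ordered by log-likelihood, lowest first.

```python
def trim_by_likelihood_rank(sorted_files, drop_bottom=0.40, drop_top=0.05):
    """Drop the lowest- and highest-likelihood samples from a sorted list.

    `sorted_files` is assumed to be ordered by log-likelihood, lowest first.
    The default fractions mirror the 40% / 5% heuristic described in Note 1.
    """
    n = len(sorted_files)
    start = int(n * drop_bottom)   # skip the lowest-likelihood samples
    end = n - int(n * drop_top)    # skip the highest-likelihood samples
    return sorted_files[start:end]


# Illustrative file names only; any list sorted by log-likelihood works.
files = [f"{i}.wav" for i in range(100)]
kept = trim_by_likelihood_rank(files)
print(len(kept), kept[0], kept[-1])
```

The fractions are exposed as arguments because the right cut-offs may differ between models and datasets.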

Note 2 (runaway noise): samples generated by autoregressive models can often have "runaway noise", where a sample suddenly degenerates into pure noise. Intuitively, this happens when the model finds itself in an unseen state that it struggles to generalize to, which is often the case for extremely long sequence generation. We found that SaShiMi also suffers from this problem when generating long sequences, and fixing this issue for autoregressive generation is an interesting research direction.

You can also generate conditional samples, e.g. to generate 32 samples conditioned on 0.5 seconds of audio from the SaShiMi model on YouTubeMix, run the following command:

python -m sashimi.generation --model sashimi --dataset youtubemix --n_samples 8 --n_reps 4 --sample_len 16000 --prefix 8000 --load_data

The prefix flag specifies the number of steps to condition on. The script selects the first n_samples examples of the specified split (defaults to val) of the dataset. n_reps specifies how many generated samples will condition on a prefix from a single example (i.e. the total number of generated samples is n_samples x n_reps).

Note that it is necessary to pass the load_data flag and you will need to make sure the datasets are available in the data/ directory when running conditional generation.
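As a quick sanity check on the flag values, the arithmetic behind the conditional generation example can be written out as below. The helper name `conditional_generation_summary` is hypothetical, and the 16kHz sample rate is taken from the example above. Whether `--sample_len` counts the prefix steps is not stated here, so check `--help` before relying on the reported duration.

```python
SAMPLE_RATE_HZ = 16_000  # 16kHz audio, as in the examples above


def conditional_generation_summary(n_samples, n_reps, sample_len, prefix):
    """Summarize the quantities implied by the conditional generation flags."""
    return {
        # one generated sample per (dataset example, repetition) pair
        "total_generated": n_samples * n_reps,
        # duration of the conditioning audio, in seconds
        "prefix_seconds": prefix / SAMPLE_RATE_HZ,
        # duration corresponding to --sample_len, in seconds
        "sample_len_seconds": sample_len / SAMPLE_RATE_HZ,
    }


summary = conditional_generation_summary(
    n_samples=8, n_reps=4, sample_len=16000, prefix=8000
)
print(summary)
```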

We provide standalone implementations of automated evaluation metrics for evaluating the quality of generated samples on the SC09 dataset in metrics.py. Following Kong et al. (2021), we implemented the Frechet Inception Distance (FID), Inception Score (IS), Modified Inception Score (mIS), AM Score (AM) and the number of statistically different bins score (NDB). Details about the metrics and the procedure we followed can be found in Appendix C.3 of our paper.

We use a modified version of the training/testing script provided by the pytorch-speech-commands repository, which we include under state-spaces/sashimi/sc09_classifier. Following Kong et al. (2021), we used a ResNeXt model trained on SC09 spectrograms.

This classifier has two purposes:

- To calculate the automated metrics, each SC09 audio clip must be converted into a feature vector.

- Following Donahue et al. (2019), we use classifier confidence as a proxy for the quality and intelligibility of the generated audio. Roughly, we sample a large number of samples from each model, and then select the top samples (as ranked by classifier confidence) per class (as assigned by the classifier). These are then used in MOS experiments.
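The confidence-based selection described in the second point can be sketched as follows. This is a simplified stand-in rather than the logic of our scripts: the function name `top_confident_per_class` is hypothetical, and the classifier outputs are assumed to be available as one list of class probabilities per sample.

```python
from collections import defaultdict


def top_confident_per_class(probs, k):
    """Return {class_index: [sample indices]} with the k most confident samples per class.

    `probs[i]` is assumed to hold the class probabilities for sample i.
    Each sample is assigned to its most probable class, then samples within
    a class are ranked by that probability.
    """
    by_class = defaultdict(list)
    for idx, p in enumerate(probs):
        label = max(range(len(p)), key=p.__getitem__)  # class assigned by the classifier
        by_class[label].append((p[label], idx))

    selected = {}
    for label, scored in by_class.items():
        scored.sort(key=lambda item: item[0], reverse=True)  # most confident first
        selected[label] = [idx for _, idx in scored[:k]]
    return selected


# Toy example with 4 samples and 2 classes.
probs = [
    [0.9, 0.1],
    [0.6, 0.4],
    [0.2, 0.8],
    [0.7, 0.3],
]
selection = top_confident_per_class(probs, k=2)
print(selection)
```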

Requirements are included in the requirements.txt file for reference. We recommend running your torch and torchvision install using whatever best practices you follow before installing other requirements.

pip install -r requirements.txt

This code is provided as-is, so depending on your torch version, you may need to make slight tweaks to the code to get it running. It's been tested with torch version 1.9.0+cu102.

For convenience, we recommend redownloading the Speech Commands dataset for classifier training using the commands below. Downloading and extraction should take a few minutes.

cd sashimi/sc09_classifier/

bash download_speech_commands_dataset.sh

To train the classifier, run the following command:

mkdir checkpoints/

python train_speech_commands.py --batch-size 96 --learning-rate 1e-2

Training is fast and should take only a few hours on a T4 GPU. The best model checkpoint should be saved under state-spaces/sashimi/sc09_classifier/ with a leading timestamp. Note that we provide these instructions for completeness, and you should be able to reuse our classifier checkpoint directly (see Downloads next).

To reproduce our evaluation results on the SC09 dataset, we provide sample directories, a classifier checkpoint and a preprocessed cache of classifier outputs.

- Samples: We provide all samples generated by all models on the Huggingface Hub under samples/. Download and unzip all the sample directories in state-spaces/sashimi/samples/.

- Classifier: You can use our SC09 classifier checkpoint rather than training your own. We provide this for convenience on the Huggingface Hub at sc09_classifier/resnext.pth. This model achieves 98.08% accuracy on the SC09 test set. Download this checkpoint and place it in state-spaces/sashimi/sc09_classifier/.

- Cache: We provide a cache of classifier outputs that is used to speed up automated evaluation, and is also used in MTurk experiments for gathering mean opinion scores. You can find it on the Huggingface Hub at sc09_classifier/cache. Download and place the cache directory under state-spaces/sashimi/sc09_classifier/.

At the end of this your directory structure should look something like this:

state-spaces/

├── sashimi/

│ ├── samples/

│ │ ├── sc09/

│ │ ├── youtubemix/

│ ├── sc09_classifier/

│ │ ├── resnext.pth

│ │ ├── cache/

...

We provide instructions for calculating the automated SC09 metrics next.

To generate the automated metrics for the dataset, run the following command:

python test_speech_commands.py resnext.pth

If the cache folder is not in place under state-spaces/sashimi/sc09_classifier, the first run will be a little slow, as it caches features and predictions (train_probs.npy, test_probs.npy, train_activations.npy, test_activations.npy) for the train and test sets under state-spaces/sashimi/sc09_classifier/cache/. Subsequent runs reuse this cache and are much faster.

For autoregressive models, we follow a threshold tuning procedure that is outlined in Appendix C.3 of our paper. We generated 10240 samples for each model, using 5120 to tune thresholds for rejecting samples with the lowest and highest likelihoods, and evaluating the metrics on the 5120 samples that are held out. This is all taken care of automatically by the test_speech_commands.py script (with the --threshold flag passed in).

# SaShiMi (4.1M parameters)

python test_speech_commands.py --sample-dir ../samples/sc09/10240-sashimi-8-glu/ --threshold resnext.pth

# SampleRNN (35.0M parameters)

python test_speech_commands.py --sample-dir ../samples/sc09/10240-samplernn-3/ --threshold resnext.pth

# WaveNet (4.2M parameters)

python test_speech_commands.py --sample-dir ../samples/sc09/10240-wavenet-1024/ --threshold resnext.pth

Important: the commands above assume that samples inside the sample-dir directory are sorted by log-likelihoods (in increasing order), since the --threshold flag is being passed. Our autoregressive generation script does this automatically, but if you generated samples manually through a separate script, you should sort them by log-likelihoods before running the above commands. If you cannot sort the samples by log-likelihoods, you can simply omit the --threshold flag.
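If you generated samples with your own script, ordering them by log-likelihood could look like the sketch below. The names here are hypothetical, and the renaming scheme is an assumption: how test_speech_commands.py determines sample order within the directory (for example, by file name) is not described here, so verify it against the script before renaming any files.

```python
def order_by_log_likelihood(log_likelihoods):
    """Return sample names sorted by log-likelihood, lowest first.

    `log_likelihoods` maps a sample name to its log-likelihood under the model.
    """
    return sorted(log_likelihoods, key=log_likelihoods.get)


# Toy values; in practice these come from your own generation script.
log_likelihoods = {"a.wav": -3.2, "b.wav": -7.5, "c.wav": -1.1}
ordered = order_by_log_likelihood(log_likelihoods)

# Assumed renaming plan: zero-padded indices, so that lexicographic and
# numeric orderings of the file names agree.
rename_plan = {name: f"{rank:05d}.wav" for rank, name in enumerate(ordered)}
print(rename_plan)
```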

For DiffWave models and WaveGAN (which don't provide exact likelihoods), we simply calculate metrics directly on 2048 samples.

# DiffWave with WaveNet backbone (24.1M parameters), trained for 500K steps

python test_speech_commands.py --sample-dir ../samples/sc09/2048-diffwave-500k/ resnext.pth

# DiffWave with WaveNet backbone (24.1M parameters), trained for 1M steps

python test_speech_commands.py --sample-dir ../samples/sc09/2048-diffwave-1m/ resnext.pth

# Small DiffWave with WaveNet backbone (6.8M parameters), trained for 500K steps

python test_speech_commands.py --sample-dir ../samples/sc09/2048-diffwave-small-500k/ resnext.pth

# DiffWave with bidirectional SaShiMi backbone (23.0M parameters), trained for 500K steps

python test_speech_commands.py --sample-dir ../samples/sc09/2048-sashimi-diffwave-500k/ resnext.pth

# DiffWave with bidirectional SaShiMi backbone (23.0M parameters), trained for 800K steps

python test_speech_commands.py --sample-dir ../samples/sc09/2048-sashimi-diffwave-800k/ resnext.pth

# Small DiffWave with bidirectional SaShiMi backbone (7.5M parameters), trained for 500K steps

python test_speech_commands.py --sample-dir ../samples/sc09/2048-sashimi-diffwave-small-500k/ resnext.pth

# Small DiffWave with unidirectional SaShiMi backbone (7.1M parameters), trained for 500K steps

python test_speech_commands.py --sample-dir ../samples/sc09/2048-sashimi-diffwave-small-uni-500k/ resnext.pth

# WaveGAN model (19.1M parameters)

python test_speech_commands.py --sample-dir ../samples/sc09/2048-wavegan/ resnext.pth

Details and instructions on how we ran our MOS studies on Amazon Mechanical Turk can be found in Appendix C.4 of our paper. We strongly recommend referring to that section while going through the instructions below. We provide both code and samples that you can use to compare against SaShiMi, as well as run your own MOS studies.

We provide templates for generating HTML required for constructing HITs on Amazon MTurk at sashimi/mturk/templates/. Our templates are largely derived and repurposed from the templates provided by Neekhara et al. (2019). The template in template_music.py can be used to generate HITs for evaluating any music generation model, while the template in template_speech.py can be used for evaluating models on SC09 (and could likely be repurposed for evaluating other types of speech generation models). Each HTML template corresponds to what is shown in a single HIT to a crowdworker.

The SaShiMi release page for the Huggingface Hub contains the final set of .wav files that we use in our MOS MTurk experiments at mturk/sc09 and mturk/youtubemix. You should download and unzip these files and place them at state-spaces/sashimi/mturk/sc09/ and state-spaces/sashimi/mturk/youtubemix/ respectively.

If you want to run MOS studies on YouTubeMix (or for any music generation dataset and models), the instructions below should help you get set up using our code. The steps that you will follow are:

- Generating samples from each model that is being compared. We use 16 second samples for our YouTubeMix MOS study.

- Selecting a few samples from each model to use in the MOS study. In our work, we use a simple filtering criterion, described in Appendix C.4 of our paper, to select 30 samples for each model.

- Randomizing and generating the batches of samples that will constitute each HIT in the MOS study using turk_create_batch.py.

- Generating an HTML template using template_music.py.

- Uploading the final batches to public storage.

- Posting the HITs to Amazon MTurk (see Posting HITs).

- Downloading results and calculating the MOS scores using our MTurk YouTubeMix MOS notebook at state-spaces/sashimi/mturk/mos.

For Steps 1 and 2, you can use your own generation scripts or refer to our generation code (see Audio Generation).

For Step 3, we provide the turk_create_batch.py script that takes a directory of samples for a collection of methods and organizes them as a batch of HITs. Note that this script only organizes the data that will be contained in each HIT and does not actually post the HITs to MTurk.

Assuming you have the following directory structure (make sure you followed Download Artifacts above):

state-spaces/

├── sashimi/

│ ├── mturk/

│ │ ├── turk_create_batch.py

│ │ └── youtubemix/

│ │ ├── youtubemix-unconditional-16s-exp-1

│ │ | ├── sashimi-2

│ │ | | ├── 0.wav

│ │ | | ├── 1.wav

│ │ | | ├── ...

│ │ | | ├── 29.wav

│ │ | ├── sashimi-8

│ │ | | ├── 0.wav

│ │ | | ├── 1.wav

│ │ | | ├── ...

│ │ | | ├── 29.wav

│ │ | ├── samplernn-3

│ │ | | ├── 0.wav

│ │ | | ├── 1.wav

│ │ | | ├── ...

│ │ | | ├── 29.wav

│ │ | ├── wavenet-1024

│ │ | | ├── 0.wav

│ │ | | ├── 1.wav

│ │ | | ├── ...

│ │ | | ├── 29.wav

│ │ | └── test

│ │ | | ├── 0.wav

│ │ | | ├── 1.wav

│ │ | | ├── ...

│ │ | | ├── 29.wav

# For your own MTurk music experiment, you should follow this structure

... ├── <condition> # folder for your MTurk music experiment

... | ├── <method-1> # with your method folders

... | | ├── 0.wav # containing samples named 0.wav, 1.wav, ... for each method

... | | ├── 1.wav

... | | ├── ...

... | ├── <method-2>

... | ├── <method-3>

... | ├── ...

You can then run the following command to organize the data for a batch of HITs (run python turk_create_batch.py --help for more details):

# Reproduce our MOS experiment on YouTubeMix exactly

python turk_create_batch.py \

--condition youtubemix-unconditional-16s-exp-1 \

--input_dir youtubemix/youtubemix-unconditional-16s-exp-1/ \

--output_dir final/ \

--methods wavenet-1024 sashimi-2 sashimi-8 samplernn-3 test \

--batch_size 1

# Run your own

python turk_create_batch.py \

--condition <condition> \

--input_dir path/to/<condition>/ \

--output_dir final/ \

--methods <method-1> <method-2> ... <method-k> \

--batch_size 1

This command generates a folder inside final/ with the name of the condition. For example, the resulting directory when reproducing our MOS experiment on YouTubeMix will look like:

final/

├── youtubemix-unconditional-16s-exp-1

│ ├── 0/ # batch, with one wav file per method

│ │ ├── 176ea1b164264cd51ea45cd69371a71f.wav # some uids

│ │ ├── 21e150949efee464da90f534a23d4c9d.wav # ...

│ │ ├── 3405095c8a5006c1ec188efbd080e66e.wav

│ │ ├── 41a93f90dc8215271da3b7e2cad6e514.wav

│ │ ├── e3e70682c2094cac629f6fbed82c07cd.wav

│ ├── 1/

│ ├── 2/

│ ├── ...

│ ├── 29/ # 30 total batches

| ├── batches.txt # mapping from the batch index to the wav file taken from each method

| ├── uids.txt # mapping from (method, wav file) to a unique ID

| ├── urls.csv # list of file URLs for each batch: you'll upload this to MTurk later for creating the HITs

| ├── urls_0.csv

| ├── ...

│ └── urls_29.csv

The urls.csv file will be important for posting the HITs to MTurk.
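To make the layout above more concrete, here is a rough sketch of how samples could be grouped into batches with anonymized names. It is not the logic of turk_create_batch.py: the function `make_batches`, the seeded UUID scheme and the shuffling strategy are all assumptions chosen for illustration.

```python
import random
import uuid


def make_batches(samples_by_method, batch_size=1, seed=0):
    """Group samples into batches with `batch_size` samples per method.

    Returns (batches, uids): `batches` is a list of lists of anonymized file
    names, and `uids` maps (method, file name) to the anonymized name.
    """
    rng = random.Random(seed)
    uids = {}
    per_method = {}
    for method, files in samples_by_method.items():
        shuffled = list(files)
        rng.shuffle(shuffled)  # randomize which samples end up together
        per_method[method] = shuffled
        for name in shuffled:
            # anonymize so raters cannot infer the method from the file name
            uids[(method, name)] = uuid.UUID(int=rng.getrandbits(128)).hex + ".wav"

    n_batches = min(len(files) for files in per_method.values()) // batch_size
    batches = []
    for b in range(n_batches):
        batch = [
            uids[(method, name)]
            for method, files in per_method.items()
            for name in files[b * batch_size:(b + 1) * batch_size]
        ]
        rng.shuffle(batch)  # hide the method order within a batch
        batches.append(batch)
    return batches, uids


methods = ["sashimi-8", "wavenet-1024", "test"]
samples = {m: [f"{i}.wav" for i in range(30)] for m in methods}
batches, uids = make_batches(samples, batch_size=1)
print(len(batches), len(batches[0]))
```

With 30 samples per method and a batch size of 1, this yields 30 batches with one file per method, matching the shape of the directory listing above.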

Note about URLs: You will need to upload the folder generated inside final/ above (e.g. youtubemix-unconditional-16s-exp-1) to a server where it can be accessed publicly through a URL. We used a public Google Cloud Bucket for this purpose. Depending on your upload location, you will need to change the --url_templ argument passed to turk_create_batch.py (see the default argument we use inside that file to get an idea of how to change it). This will then change the corresponding URLs in the urls.csv file.

If you run an MOS study on YouTubeMix in particular and want to compare to SaShiMi, we recommend reusing the samples we provided above, and selecting 30 samples for your method using the process outlined in the Appendix of our paper. In particular, note that five .wav files in test/ are gold standard examples that we use for quality control on the HITs (the code for this filtering is provided in the MTurk YouTubeMix MOS notebook at state-spaces/sashimi/mturk/mos).

For Step 4, the template in template_music.py can be used to generate HITs for evaluating any music generation model. Each HIT will consist of a single sample from each model being compared, along with a single dataset sample that will help calibrate the raters' responses. We follow Dieleman et al. (2019) and collect ratings on the fidelity and musicality of the generated samples.

To generate HTML for a HIT that should contain n_samples samples, run the following command:

python template_music.py <n_samples>

# here `n_samples` should be the number of models being compared + 1 for the dataset

# e.g. we compared two SaShiMi variants to SampleRNN and WaveNet, so we set `n_samples` to 5

Steps 5 and 6 are detailed in Posting HITs.

Finally for Step 7, to calculate final scores, you can use the MTurk YouTubeMix MOS notebook provided in the mos/ folder. Note that you will need to put the results from MTurk (which can be downloaded from MTurk for your batch of HITs and is typically named Batch_<batch_index>_batch_results.csv) in the mos/ folder. Run the notebook to generate final results. Please be sure to double-check the names of the gold-standard files used in the notebook, to make sure that the worker filtering process is carried out correctly.
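For orientation, the kind of computation the notebook performs can be sketched as below. This is not the notebook's code: the worker-filtering rule (dropping workers who rate a gold-standard clip below a threshold), the tuple layout of the responses and the normal-approximation confidence interval are all assumptions made for illustration.

```python
import math
from statistics import mean, stdev


def filter_workers(responses, gold_names, min_gold_rating):
    """Drop all responses from workers who rated any gold-standard clip too low.

    `responses` is a list of (worker_id, clip_name, rating) tuples.
    """
    rejected = {
        worker
        for worker, clip, rating in responses
        if clip in gold_names and rating < min_gold_rating
    }
    return [r for r in responses if r[0] not in rejected]


def mean_opinion_score(ratings):
    """Return (MOS, 95% confidence half-width) using a normal approximation."""
    mos = mean(ratings)
    if len(ratings) < 2:
        return mos, float("nan")
    half_width = 1.96 * stdev(ratings) / math.sqrt(len(ratings))
    return mos, half_width


# Toy responses; real ones come from the MTurk batch results CSV.
responses = [
    ("w1", "gold.wav", 5), ("w1", "model.wav", 4),
    ("w2", "gold.wav", 1), ("w2", "model.wav", 5),
    ("w3", "model.wav", 2),
]
kept = filter_workers(responses, gold_names={"gold.wav"}, min_gold_rating=3)
model_ratings = [rating for _, clip, rating in kept if clip != "gold.wav"]
mos, half_width = mean_opinion_score(model_ratings)
print(mos, half_width)
```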

If you want to run MOS studies on SC09 (or for another speech generation dataset and model), the instructions below should help you get set up using our code. These instructions focus mainly on SC09, and you can pick and choose what you might need if you're working with another speech dataset. The steps that you will follow are:

- Generating samples from each model that is being compared. We generated 2048 samples for each model.

- Training an SC09 classifier. We use a ResNeXt model as discussed in SC09 Classifier Training.

- Generating predictions for all models and saving them using the test_speech_commands.py script.

- Using these predictions, selecting the top-50 (or any other number) most confident samples per class for each model using the prepare_sc09.py script.

- Randomizing and generating the batches of samples that will constitute each HIT in the MOS study using turk_create_batch.py.

- Generating an HTML template using template_speech.py.

- Uploading the final batches to public storage.

- Posting the HITs to Amazon MTurk (see Posting HITs).

- Downloading results and calculating the MOS scores using our MTurk SC09 MOS notebook at state-spaces/sashimi/mturk/mos.

For Step 1, you can use your own generation scripts or refer to our generation code (see Audio Generation).

Step 2 can be completed by referring to the SC09 Classifier Training section or reusing our provided ResNeXt model checkpoint.

For Step 3, we first output predictions for samples generated by each model. Note that we use the 2048- prefix for the sample directories used for MOS studies (you should already have downloaded and unzipped these sample directories from the Huggingface Hub at sashimi/samples/sc09/).

To generate and save predictions for the sample directory corresponding to each model, run the following command using the test_speech_commands.py script from the state-spaces/sashimi/sc09_classifier/ folder:

python test_speech_commands.py --sample-dir ../samples/sc09/2048-<model> --save-probs resnext.pth

# this should save a file named `2048-<model>-resnext-probs.npy` at `state-spaces/sashimi/sc09_classifier/cache/`

You should not need to do this for our results, since we provide predictions for all models in the cache directory for convenience.

In Step 4, given the 2048 samples generated by each model, we select the top-50 most confident samples for each class using the classifier outputs from Step 3 i.e. the 2048-<model>-resnext-probs.npy files generated by test_speech_commands.py. We provide the state-spaces/sashimi/mturk/prepare_sc09.py script for this.

python prepare_sc09.py --methods <method-1> <method-2> ... <method-k>

# To reproduce our MOS experiment on SC09, we use the following command:

python prepare_sc09.py --methods diffwave-500k diffwave-1m diffwave-small-500k samplernn-3 sashimi-8-glu sashimi-diffwave-small-500k sashimi-diffwave-500k sashimi-diffwave-800k sashimi-diffwave-small-uni-500k test wavenet-1024 wavegan

The prepare_sc09.py script takes additional arguments for cache_dir, sample_dir and target_dir to customize paths. By default, it will look for the 2048-<model>-resnext-probs.npy files in state-spaces/sashimi/sc09_classifier/cache/ (cache_dir), the samples in state-spaces/sashimi/samples/sc09/ (sample_dir) and the output directory state-spaces/sashimi/mturk/sc09/sc09-unconditional-exp-confident-repro/ (target_dir).

As a convenience, we provide the output of this step on the Huggingface Hub under the mturk/sc09 folder (called sc09-unconditional-exp-confident). This corresponds to the directory of samples we used for SC09 MOS evaluation. Either download and place this inside state-spaces/sashimi/mturk/sc09/ before proceeding to the commands below, OR make sure you've followed the previous steps to generate samples for each method using the prepare_sc09.py script.

For Step 5, we then provide the turk_create_batch.py script that takes a directory of samples for a collection of methods and organizes them as a batch of HITs. Note that this script only organizes the data that will be contained in each HIT and does not actually post the HITs to MTurk.

You can run the following command to organize the data for a batch of HITs for each method:

# Reproduce our MOS experiment on SC09 exactly

python turk_create_batch.py \

--condition sc09-unconditional-exp-confident-test \

--input_dir sc09/sc09-unconditional-exp-confident/ \

--output_dir final/ \

--methods test \

--batch_size 10

python turk_create_batch.py \

--condition sc09-unconditional-exp-confident-wavenet-1024 \

--input_dir sc09/sc09-unconditional-exp-confident/ \

--output_dir final/ \

--methods wavenet-1024 \

--batch_size 10

# Do this for all methods under sc09/sc09-unconditional-exp-confident/

python turk_create_batch.py \

--condition sc09-unconditional-exp-confident-<method-name> \

--input_dir sc09/sc09-unconditional-exp-confident/ \

--output_dir final/ \

--methods <method-name> \

--batch_size 10

Each command generates a folder inside final/ with the name of the condition. You can run this command on your own methods in the same way.

In Step 6, the template in template_speech.py can be used to generate HITs for evaluating models on SC09. Each HIT will consist of multiple samples from only a single model. We chose to not mix samples from different models into a single HIT to reduce the complexity of running the study, as we evaluated a large number of models.

To generate HTML for a HIT that will contain n_samples samples, run the following command:

python template_speech.py <n_samples>

# we set `n_samples` to be 10

Steps 7 and 8 are detailed in Posting HITs.

Finally for Step 9, to calculate final scores, you can use the MTurk SC09 MOS notebook provided in the mos/ folder. Note that you will need to put the results from MTurk (which can be downloaded from MTurk for your batch of HITs and is typically named Batch_<batch_index>_batch_results.csv) in the mos/ folder. Run the notebook to generate final results. Please be sure to double-check the paths and details in the notebook to ensure correctness.

To post HITs, the steps are:

- Upload the final/ samples for each model to a public cloud bucket.

- Log in to your MTurk account and create a project. We recommend doing this on the Requester Sandbox first.

- Go to New Project > Audio Naturalness > Create Project.

- On the Edit Project page, add a suitable title, description, and keywords. Refer to Appendix C.4 of our paper for details on the payment and qualifications we used.

- On the Design Layout page, paste in the HTML that you generated using the template_<music/speech>.py scripts.

- Check the Preview to make sure everything looks good.

- Your Project should now show up on the "Create Batch with an Existing Project" page.

- Click "Publish Batch" on the project you just created. Upload the urls.csv file generated as a result of running the turk_create_batch.py script. Please make sure that you can access the audio files at the URLs in the urls.csv file (MTurk Sandbox testing should surface any issues).

- Once you're ready, post the HITs.
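One way to check that the audio files are reachable before publishing is a small script like the one below. It is a sketch under assumptions: the column layout of urls.csv is not described here, so the code simply collects every cell that looks like an http(s) URL, and the helper names are hypothetical. Some storage providers reject HEAD requests, in which case a GET request may be needed instead.

```python
import csv
import io
import urllib.request


def extract_urls(csv_text):
    """Collect every cell that looks like an http(s) URL from CSV text."""
    urls = []
    for row in csv.reader(io.StringIO(csv_text)):
        for cell in row:
            cell = cell.strip()
            if cell.startswith(("http://", "https://")):
                urls.append(cell)
    return urls


def is_reachable(url, timeout=10):
    """Return True if a HEAD request to `url` succeeds with a 2xx status."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:  # URLError and HTTPError are subclasses of OSError
        return False


def find_unreachable(csv_text, check=is_reachable):
    """Return the URLs in `csv_text` for which `check` returns False."""
    return [url for url in extract_urls(csv_text) if not check(url)]


# Hypothetical CSV contents; the header names are made up for this example.
example_csv = (
    "audio_url_1,audio_url_2\n"
    "https://example.com/a.wav,https://example.com/b.wav\n"
)
print(extract_urls(example_csv))
```

To check a real file, read it with `open("urls.csv").read()` and pass the text to `find_unreachable`; the `check` argument makes it easy to substitute a different reachability test.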

To access results, you can go to the "Manage" page and you should see "Batches in progress" populated with the HITs you posted. You should be able to download a CSV file of the results for each batch. You can plug this into the Jupyter Notebooks we include under state-spaces/sashimi/mturk/mos/ to get final results.

Note: for SC09 HITs, we strongly recommend posting HITs for all models at the same time to reduce the possibility of scoring discrepancies due to different populations of workers.