CALVIN - A benchmark for Language-Conditioned Policy Learning for Long-Horizon Robot Manipulation Tasks

Oier Mees, Lukas Hermann, Erick Rosete, Wolfram Burgard

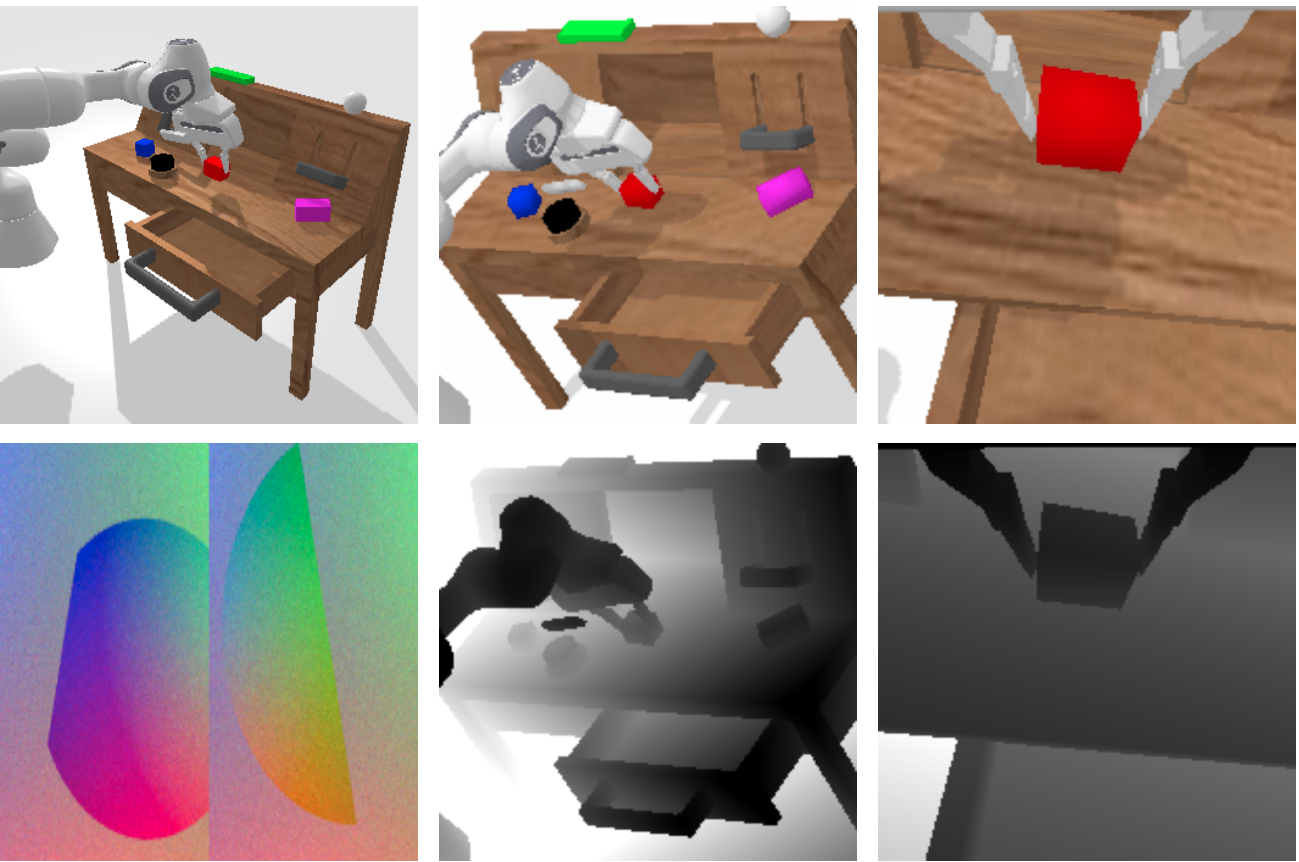

We present CALVIN (Composing Actions from Language and Vision), an open-source simulated benchmark for learning long-horizon language-conditioned tasks. Our aim is to make it possible to develop agents that can solve many robotic manipulation tasks over a long horizon, from onboard sensors, and specified only via human language. CALVIN tasks are more complex in terms of sequence length, action space, and language than those in existing vision-and-language task datasets, and CALVIN supports flexible specification of sensor suites.

To begin, clone this repository locally:

$ git clone https://github.com/mees/calvin.git

$ export CALVIN_ROOT=$(pwd)/calvin

Install requirements:

$ cd $CALVIN_ROOT

$ virtualenv -p $(which python3) --system-site-packages calvin_env # or use conda

$ source calvin_env/bin/activate

$ pip install --upgrade pip

$ pip install -r requirements.txt

Download dataset:

$ cd $CALVIN_ROOT/data

$ sh download_data.sh

Train baseline models:

$ cd $CALVIN_ROOT

$ python train.py

CALVIN supports a range of sensors commonly utilized for visuomotor control:

- Static camera RGB images - with shape 200x200x3.

- Static camera Depth maps - with shape 200x200x1.

- Gripper camera RGB images - with shape 200x200x3.

- Gripper camera Depth maps - with shape 200x200x1.

- Tactile image - with shape 120x160x2x3.

- Proprioceptive state - EE position (3), EE orientation in Euler angles (3), gripper width (1), joint positions (7), gripper action (1).
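To make the sensor list concrete, here is a minimal sketch of one observation with the shapes above, plus a helper that slices the flat 15-dimensional proprioceptive state (3 + 3 + 1 + 7 + 1) into named parts. The dictionary keys (`rgb_static`, `robot_obs`, `ee_pos`, ...) and the all-zeros dtype are assumptions made for illustration; check the dataset files for the actual key names and storage types.

```python
from __future__ import annotations

import numpy as np

# Shapes from the sensor list above. Key names are hypothetical.
OBS_SHAPES = {
    "rgb_static": (200, 200, 3),
    "depth_static": (200, 200, 1),
    "rgb_gripper": (200, 200, 3),
    "depth_gripper": (200, 200, 1),
    "rgb_tactile": (120, 160, 2, 3),
}

# Proprioceptive state layout, in the order listed above (names hypothetical).
PROPRIO_LAYOUT = [
    ("ee_pos", 3),          # end-effector position
    ("ee_euler", 3),        # end-effector orientation (Euler angles)
    ("gripper_width", 1),
    ("joint_pos", 7),
    ("gripper_action", 1),
]
PROPRIO_DIM = sum(size for _, size in PROPRIO_LAYOUT)  # 3 + 3 + 1 + 7 + 1


def split_proprio(state: np.ndarray) -> dict[str, np.ndarray]:
    """Slice a flat proprioceptive state vector into its named components."""
    state = np.asarray(state)
    if state.shape != (PROPRIO_DIM,):
        raise ValueError(f"expected shape ({PROPRIO_DIM},), got {state.shape}")
    parts: dict[str, np.ndarray] = {}
    start = 0
    for name, size in PROPRIO_LAYOUT:
        parts[name] = state[start:start + size]
        start += size
    return parts


def dummy_observation() -> dict[str, np.ndarray]:
    """Build an all-zeros observation with the shapes listed above."""
    obs = {key: np.zeros(shape, dtype=np.float32) for key, shape in OBS_SHAPES.items()}
    obs["robot_obs"] = np.zeros(PROPRIO_DIM, dtype=np.float32)
    return obs


if __name__ == "__main__":
    obs = dummy_observation()
    print({key: value.shape for key, value in obs.items()})
    print(split_proprio(np.arange(PROPRIO_DIM, dtype=np.float32)))
```

Keeping the layout as an ordered list of `(name, size)` pairs means the slicing offsets are derived rather than hard-coded, so a different sensor suite only requires editing the table.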

In CALVIN, the agent must perform closed-loop continuous control to follow unconstrained language instructions describing complex robot manipulation tasks, sending continuous actions to the robot at 30 Hz. To give researchers and practitioners the freedom to experiment with different action spaces, CALVIN supports the following action spaces:

- Absolute Cartesian pose - EE position (3), EE orientation in Euler angles (3), gripper action (1).

- Relative Cartesian displacement - EE position (3), EE orientation in Euler angles (3), gripper action (1).

- Joint action - Joint positions (7), gripper action (1).
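The action spaces differ mainly in dimensionality and in what the first components mean. The sketch below checks an action vector against its space, derives the 33.3 ms control period from the 30 Hz rate, and turns the translation part of a relative action into an absolute target. The space names are hypothetical labels, and the sketch assumes the relative position delta is expressed in the same (unscaled) units as the EE position; the orientation delta is deliberately not composed, because doing that correctly depends on the Euler-angle convention, which is not specified here.

```python
from __future__ import annotations

import numpy as np

CONTROL_HZ = 30                      # actions are sent to the robot at 30 Hz
CONTROL_PERIOD_S = 1.0 / CONTROL_HZ  # time budget per control step

# Dimensionality of each action space above (names are hypothetical labels).
ACTION_DIMS = {
    "absolute_cartesian": 7,  # EE position (3) + Euler angles (3) + gripper (1)
    "relative_cartesian": 7,  # position delta (3) + Euler delta (3) + gripper (1)
    "joint": 8,               # joint positions (7) + gripper (1)
}


def check_action(action, space: str) -> np.ndarray:
    """Return the action as a float array after validating its length."""
    action = np.asarray(action, dtype=np.float64)
    expected = ACTION_DIMS[space]
    if action.shape != (expected,):
        raise ValueError(f"{space} action must have shape ({expected},), got {action.shape}")
    return action


def relative_to_absolute_position(ee_pos, rel_action) -> np.ndarray:
    """Add the translation part of a relative action to the current EE position.

    Assumes an unscaled delta; the orientation and gripper parts are ignored.
    """
    ee_pos = np.asarray(ee_pos, dtype=np.float64)
    if ee_pos.shape != (3,):
        raise ValueError(f"ee_pos must have shape (3,), got {ee_pos.shape}")
    rel_action = check_action(rel_action, "relative_cartesian")
    return ee_pos + rel_action[:3]


if __name__ == "__main__":
    print(f"control period: {CONTROL_PERIOD_S * 1000:.1f} ms")
    target = relative_to_absolute_position([0.1, 0.2, 0.3], [0.01, 0.0, -0.02, 0.0, 0.0, 0.0, 1.0])
    print("absolute target position:", target)
```

A relative action keeps the policy output small and centered around zero regardless of where the arm is, while an absolute pose lets the policy command a target directly; the joint space bypasses inverse kinematics entirely.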

The aim of the CALVIN benchmark is to evaluate the learning of long-horizon language-conditioned continuous control policies. In this setting, a single agent must solve complex manipulation tasks by understanding a series of unconstrained language expressions in a row, e.g., “open the drawer… pick up the blue block… now push the block into the drawer… now open the sliding door”. We provide an evaluation protocol with evaluation modes of varying difficulty, obtained by choosing different combinations of sensor suites and numbers of training environments.
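Solving instructions "in a row" suggests scoring a rollout by how many consecutive subtasks succeed. The following is a hypothetical sketch of such a metric, not the official CALVIN evaluation code: `attempt` stands in for running the policy until one subtask ends, the chain is assumed to stop at the first failure (later instructions depend on earlier ones), and the summary reports the fraction of chains that completed at least k subtasks.

```python
from __future__ import annotations

from typing import Callable, Sequence

# Hypothetical interface: run the policy on one instruction, return True on success.
Attempt = Callable[[str], bool]


def run_chain(instructions: Sequence[str], attempt: Attempt) -> int:
    """Execute instructions in order and return how many succeeded in a row."""
    completed = 0
    for instruction in instructions:
        if not attempt(instruction):
            break  # assumed: a failed subtask ends the whole chain
        completed += 1
    return completed


def success_rates(chain_lengths: Sequence[int], horizon: int) -> list[float]:
    """Fraction of chains that completed at least k subtasks, for k = 1..horizon."""
    if not chain_lengths:
        raise ValueError("need at least one evaluated chain")
    n = len(chain_lengths)
    return [sum(c >= k for c in chain_lengths) / n for k in range(1, horizon + 1)]


if __name__ == "__main__":
    chain = [
        "open the drawer",
        "pick up the blue block",
        "now push the block into the drawer",
        "now open the sliding door",
    ]
    # A scripted stand-in for a policy that fails whenever it has to push.
    scripted = lambda instruction: "push" not in instruction
    print("completed in a row:", run_chain(chain, scripted))
    print("success rates:", success_rates([2, 4, 0], horizon=len(chain)))
```

Reporting the whole curve over k, rather than a single average, makes it visible whether a policy fails early or degrades gradually as the horizon grows.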

If you find the dataset or code useful, please cite:

@article{calvin21,
  author = {Oier Mees and Lukas Hermann and Erick Rosete and Wolfram Burgard},
  title = {CALVIN - A benchmark for Language-Conditioned Policy Learning for Long-Horizon Robot Manipulation Tasks},
  journal = {arXiv preprint arXiv:foo},
  year = {2021},
}

MIT License