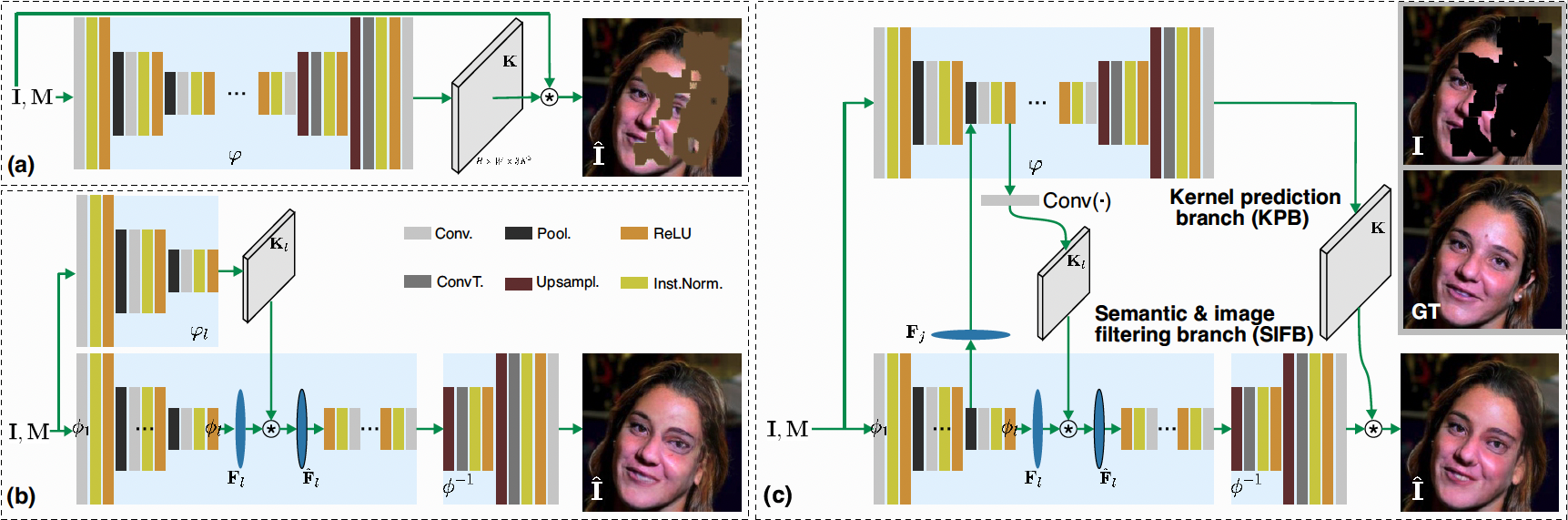

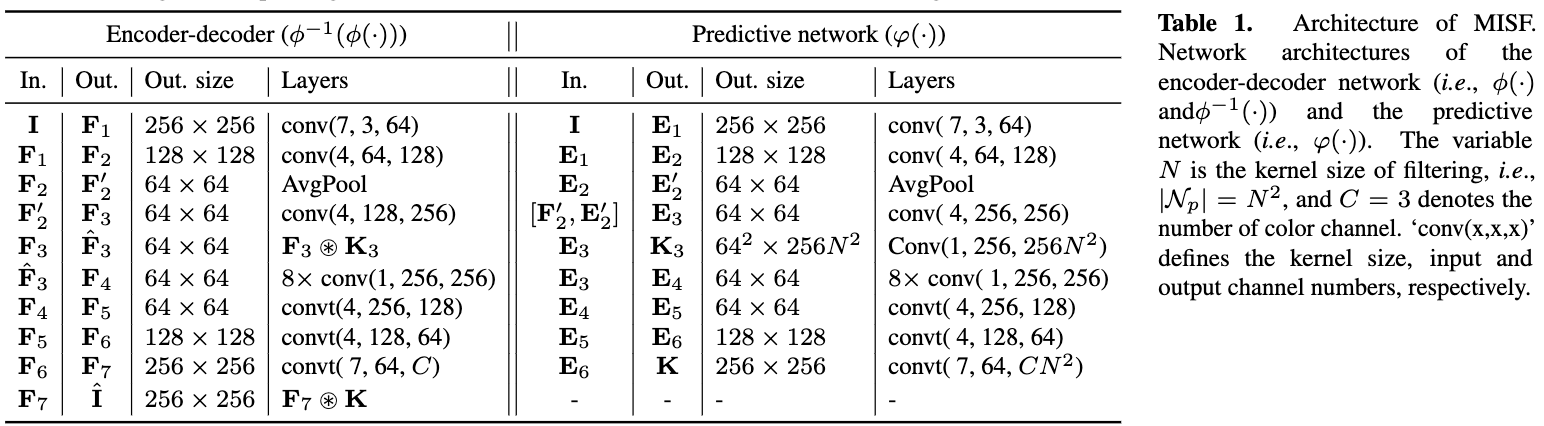

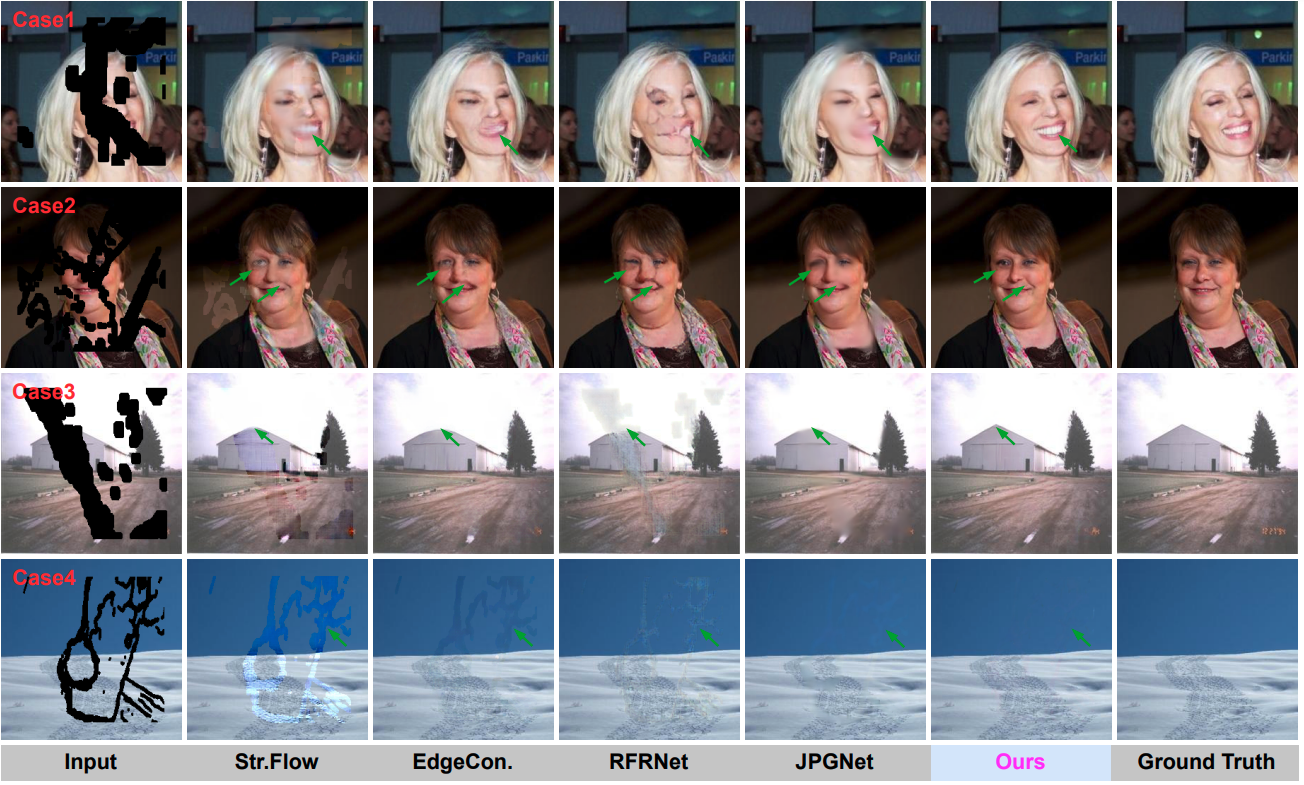

We propose a novel approach for high-fidelity image inpainting. Specifically, we use a single predictive network to perform predictive filtering at both the image level and the deep-feature level simultaneously. Image-level filtering recovers details, while deep-feature-level filtering completes semantic information; together they yield high-fidelity inpainting results. Our method outperforms state-of-the-art methods on three public datasets (Places2, CelebA, and Dunhuang).
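Predictive filtering, as described above, roughly replaces a fixed convolution kernel with a kernel that a network predicts separately for every pixel. The pure-Python sketch below illustrates only that filtering step, assuming the per-pixel kernels are already given. It is not the MISF network, and the names `predictive_filter` and `identity_kernels` are hypothetical. The same operation can be applied to an image channel (image-level filtering) or to each channel of a deep feature map (feature-level filtering).

```python
from typing import List

Grid = List[List[float]]
Kernels = List[List[List[float]]]  # kernels[i][j] is a flattened k*k kernel for pixel (i, j)


def predictive_filter(x: Grid, kernels: Kernels, k: int = 3) -> Grid:
    """Filter a single-channel map with a different k x k kernel at every pixel.

    Out-of-bounds neighbors are treated as zero (zero padding).
    """
    h, w = len(x), len(x[0])
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(k):
                for b in range(k):
                    ii, jj = i + a - r, j + b - r
                    if 0 <= ii < h and 0 <= jj < w:
                        # Weight the neighbor by the kernel predicted for pixel (i, j).
                        acc += kernels[i][j][a * k + b] * x[ii][jj]
            out[i][j] = acc
    return out


def identity_kernels(h: int, w: int, k: int = 3) -> Kernels:
    """Hypothetical helper: per-pixel kernels that copy the center pixel unchanged."""
    center = (k // 2) * k + k // 2
    return [[[1.0 if t == center else 0.0 for t in range(k * k)] for _ in range(w)]
            for _ in range(h)]


if __name__ == "__main__":
    image = [[1.0, 2.0], [3.0, 4.0]]
    print(predictive_filter(image, identity_kernels(2, 2)))
```

In a PyTorch implementation the neighborhood gathering is typically vectorized, for example with `torch.nn.functional.unfold`, rather than written as Python loops; the loops here only make the per-pixel weighting explicit.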

[ArXiv]

- Python 3.7

- PyTorch >= 1.0 (tested on PyTorch 1.0 and PyTorch 1.7.0)

- Places2 (Places365-Standard data)

- CelebA

- [Dunhuang]

- Mask

- For the data folder (CelebA), organize the files as follows:
  - CelebA
    - train
      - 1-1.png
    - valid
      - 1-1.png
    - test
      - 1-1.png
    - mask-train
      - 1-1.png
    - mask-valid
      - 1-1.png
    - mask-test
      - 0%-20%
        - 1-1.png
      - 20%-40%
        - 1-1.png
      - 40%-60%
        - 1-1.png

- Run `./data/data_list.py` to generate the data list.
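The `mask-test` subfolder names suggest that test masks are grouped by the fraction of the image they cover. The sketch below shows one way to compute that fraction and pick the matching folder. It assumes that nonzero mask pixels mark the missing region and that bin edges are inclusive at the lower end; the helper names `hole_ratio` and `ratio_bin` are hypothetical and not part of this repository.

```python
from typing import List, Optional

# Folder names taken from the mask-test layout above; edge handling is an assumption.
BINS = [(0.0, 0.2, "0%-20%"), (0.2, 0.4, "20%-40%"), (0.4, 0.6, "40%-60%")]


def hole_ratio(mask: List[List[int]]) -> float:
    """Fraction of pixels marked as holes (assumed: nonzero = missing)."""
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("empty mask")
    holes = sum(1 for row in mask for v in row if v != 0)
    return holes / total


def ratio_bin(ratio: float) -> Optional[str]:
    """Return the mask-test subfolder for a hole ratio, or None if no listed bin fits."""
    for lo, hi, name in BINS:
        if lo <= ratio < hi:
            return name
    return None


if __name__ == "__main__":
    mask = [[0, 0, 0, 0, 0],
            [1, 1, 1, 0, 0]]
    r = hole_ratio(mask)
    print(r, ratio_bin(r))
```

For real PNG masks, one option is to load each file as a grayscale array (for example with Pillow's `Image.open(path).convert("L")`) before computing the ratio.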

- Run `python train.py` to train the model. Training parameters are set in `checkpoints/config.yml`.

To test, for example on the face dataset (CelebA), follow these steps:

- Make sure you have downloaded `celebA_InpaintingModel_dis.pth` and `celebA_InpaintingModel_gen.pth` and placed them in the `checkpoints` folder.

- Set `MODEL_LOAD: celebA_InpaintingModel` in `checkpoints/config.yml`.

- Run `python test.py` (test parameters are also set in `checkpoints/config.yml`).
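Before running the test script, it can help to confirm that the weights matching `MODEL_LOAD` are in place. The sketch below assumes the naming pattern seen in the steps above (`<MODEL_LOAD>_gen.pth` and `<MODEL_LOAD>_dis.pth` inside `checkpoints`); the helpers `expected_checkpoints` and `missing_checkpoints` are hypothetical and not part of this repository.

```python
import os
from typing import List


def expected_checkpoints(model_load: str, ckpt_dir: str = "checkpoints") -> List[str]:
    """Hypothetical helper: generator and discriminator weight paths for a MODEL_LOAD prefix.

    Assumes the pattern <MODEL_LOAD>_gen.pth / <MODEL_LOAD>_dis.pth in ckpt_dir.
    """
    return [os.path.join(ckpt_dir, f"{model_load}_{part}.pth") for part in ("gen", "dis")]


def missing_checkpoints(model_load: str, ckpt_dir: str = "checkpoints") -> List[str]:
    """Return the expected checkpoint paths that do not exist as files."""
    return [p for p in expected_checkpoints(model_load, ckpt_dir) if not os.path.isfile(p)]


if __name__ == "__main__":
    missing = missing_checkpoints("celebA_InpaintingModel")
    if missing:
        print("Missing checkpoint files:", *missing, sep="\n  ")
    else:
        print("All checkpoint files found; run: python test.py")
```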

@article{li2022misf,
  title={MISF: Multi-level Interactive Siamese Filtering for High-Fidelity Image Inpainting},
  author={Li, Xiaoguang and Guo, Qing and Lin, Di and Li, Ping and Feng, Wei and Wang, Song},
  journal={CVPR},
  year={2022}
}

Parts of this code were derived from:

- https://github.com/tsingqguo/efficientderain
- https://github.com/knazeri/edge-connect