
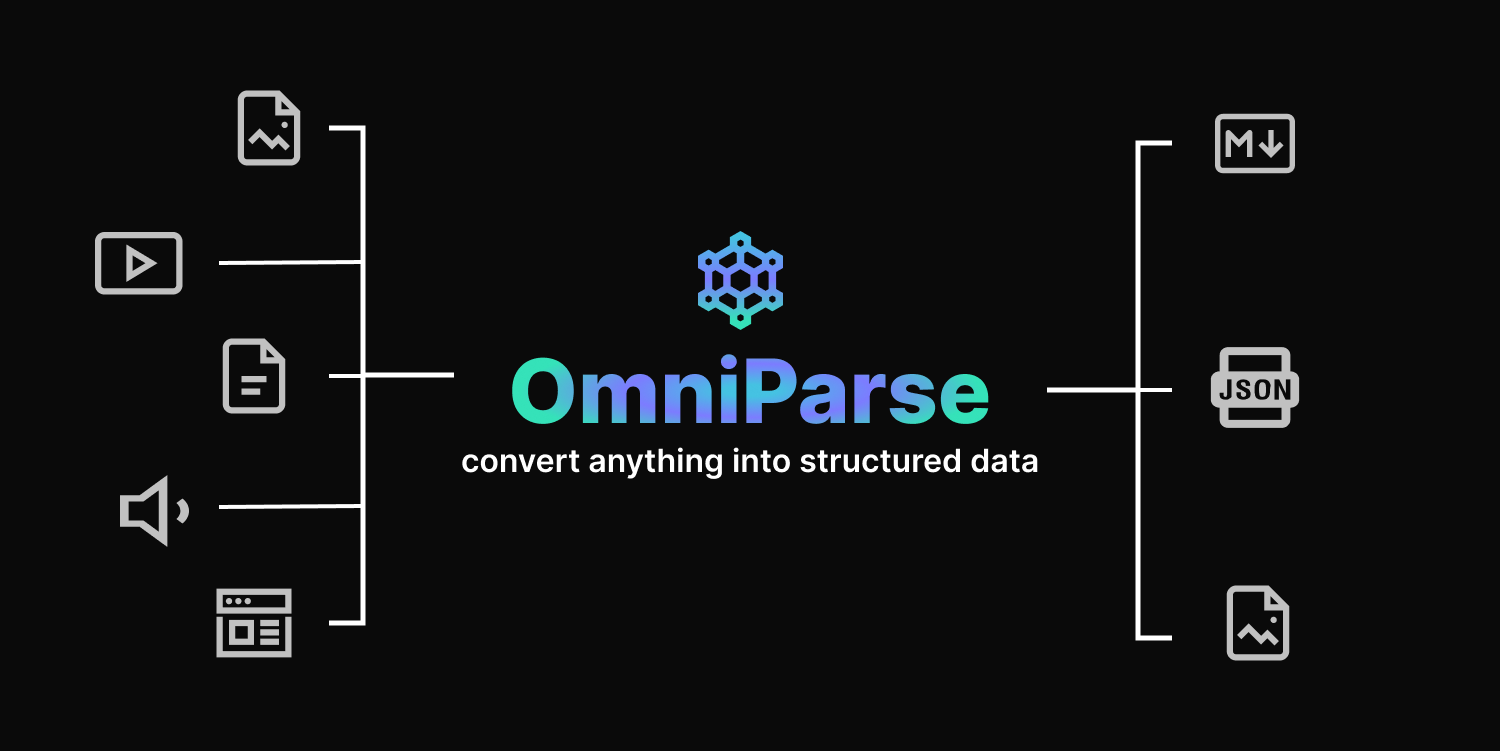

OmniParse is a platform that ingests and parses any unstructured data into structured, actionable data optimized for GenAI (LLM) applications. Whether you are working with documents, tables, images, videos, audio files, or web pages, OmniParse ensures your data is clean and ready for GenAI applications such as RAG and fine-tuning.

✅ Completely local, no external APIs

✅ Fits in a T4 GPU

✅ Supports 10+ file types

✅ Convert documents, multimedia, and web pages to high-quality structured markdown

✅ Table extraction, image extraction/captioning, audio/video transcription, web page crawling

✅ Easily deployable using Docker and Skypilot

✅ Colab friendly

It's challenging to process data as it comes in different shapes and sizes. OmniParse aims to be an ingestion/parsing platform where you can ingest any type of data, such as documents, images, audio, video, and web content, and get the most structured and actionable output that is GenAI (LLM) friendly.

| Type | Supported Extensions |
|---|---|
| Documents | .doc, .docx, .odt, .pdf, .ppt, .pptx |
| Images | .png, .jpg, .jpeg, .tiff, .bmp, .heic |
| Video | .mp4, .mkv, .avi, .mov |
| Audio | .mp3, .wav, .aac |
| Web | dynamic webpages, http://.com |
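As a quick illustration of how the table above could be used on the client side, here is a minimal sketch that classifies a local file by its extension before sending it anywhere. The `SUPPORTED_EXTENSIONS` dictionary and the `classify_file` helper are hypothetical names introduced for this example; they are not part of OmniParse.

```python
from pathlib import Path

# Extensions copied from the table above. The category keys are illustrative
# names chosen for this sketch, not identifiers used by OmniParse itself.
SUPPORTED_EXTENSIONS = {
    "documents": {".doc", ".docx", ".odt", ".pdf", ".ppt", ".pptx"},
    "images": {".png", ".jpg", ".jpeg", ".tiff", ".bmp", ".heic"},
    "video": {".mp4", ".mkv", ".avi", ".mov"},
    "audio": {".mp3", ".wav", ".aac"},
}


def classify_file(path):
    """Return the category for a file path, or None if its extension is not listed."""
    suffix = Path(path).suffix.lower()  # compare case-insensitively
    for category, extensions in SUPPORTED_EXTENSIONS.items():
        if suffix in extensions:
            return category
    return None


for name in ["report.PDF", "talk.mp4", "notes.txt"]:
    print(name, "->", classify_file(name))
```

Checking the extension locally avoids uploading a file that the server is not documented to handle.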

To install OmniParse, clone the repository and install it from source:

git clone https://github.com/adithya-s-k/omniparse

cd omniparse

Create a Virtual Environment:

conda create --name omniparse-venv python=3.10

conda activate omniparse-venv

Install Dependencies:

poetry install

# or

pip install -e .

Run the Server:

python server.py --host 0.0.0.0 --port 8000 --documents --media --web

--documents: Load all the models that help you parse and ingest documents (Surya OCR series of models and Florence-2).

--media: Load the Whisper model to transcribe audio and video files.

--web: Set up the Selenium crawler.
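Because each flag loads its own set of models, it can be handy to enable only what a given workload needs. The sketch below assembles the launch command from Python; `build_server_command` is a hypothetical helper written for this example, and it only uses the flags described above.

```python
import sys


def build_server_command(host="0.0.0.0", port=8000,
                         documents=False, media=False, web=False):
    """Build the argument list for launching server.py with the selected flags."""
    command = [sys.executable, "server.py", "--host", host, "--port", str(port)]
    if documents:
        command.append("--documents")  # document models (Surya OCR, Florence-2)
    if media:
        command.append("--media")      # Whisper for audio and video transcription
    if web:
        command.append("--web")        # Selenium crawler
    return command


# Example: a documents-only server. To actually start it from the repository
# root, pass the list to subprocess.run(command, check=True).
command = build_server_command(documents=True)
print(" ".join(command))
```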


To start the API server, run the following command:

python server.py --host 0.0.0.0 --port 8000

Arguments:

--host: Host IP address (default: 0.0.0.0)

--port: Port number (default: 8000)

> [!IMPORTANT]
> A client library compatible with LangChain, LlamaIndex, and Haystack integrations is coming soon.

Endpoint: /parse_document

Method: POST

Parses PDF, PowerPoint, or Word documents.

Curl command:

curl -X POST -F "file=@/path/to/document" http://localhost:8000/parse_document

Endpoint: /parse_document/pdf

Method: POST

Parses PDF documents.

Curl command:

curl -X POST -F "file=@/path/to/document.pdf" http://localhost:8000/parse_document/pdf

Endpoint: /parse_document/ppt

Method: POST

Parses PowerPoint presentations.

Curl command:

curl -X POST -F "file=@/path/to/presentation.ppt" http://localhost:8000/parse_document/ppt

Endpoint: /parse_document/docs

Method: POST

Parses Word documents.

Curl command:

curl -X POST -F "file=@/path/to/document.docx" http://localhost:8000/parse_document/docs
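The document endpoints above differ only in their path, so a client can pick one from the file extension. The following is a minimal sketch under stated assumptions: the server runs locally on the default port, `.pptx` files go to the same endpoint as `.ppt`, and `.doc` files go to the same endpoint as `.docx`. The `DOCUMENT_ENDPOINTS` mapping and the `document_endpoint` helper are hypothetical names, not part of OmniParse.

```python
from pathlib import Path

BASE_URL = "http://localhost:8000"  # assumption: local server, default port

# Assumed mapping from extension to the document endpoints listed above.
DOCUMENT_ENDPOINTS = {
    ".pdf": "/parse_document/pdf",
    ".ppt": "/parse_document/ppt",
    ".pptx": "/parse_document/ppt",
    ".doc": "/parse_document/docs",
    ".docx": "/parse_document/docs",
}


def document_endpoint(path, base_url=BASE_URL):
    """Return the URL to POST a document to, falling back to the generic endpoint."""
    suffix = Path(path).suffix.lower()
    return base_url + DOCUMENT_ENDPOINTS.get(suffix, "/parse_document")


print(document_endpoint("slides.pptx"))
print(document_endpoint("paper.pdf"))
```

Falling back to `/parse_document` leaves the format decision to the server for any extension the mapping does not cover.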

Endpoint: /parse_media

Method: POST

Parses images, videos, or audio files.

Curl command:

curl -X POST -F "file=@/path/to/media_file" http://localhost:8000/parse_media

Endpoint: /parse_media/image

Method: POST

Parses image files (PNG, JPEG, JPG, TIFF, WEBP).

Curl command:

curl -X POST -F "file=@/path/to/image.jpg" http://localhost:8000/parse_media/image

Endpoint: /parse_media/process_image

Method: POST

Processes an image with a specific task.

Curl command:

curl -X POST -F "image=@/path/to/image.jpg" -F "task=Caption" -F "prompt=Optional prompt" http://localhost:8000/parse_media/process_image

Arguments:

image: The image file

task: The processing task (e.g., Caption, Object Detection)

prompt: Optional prompt for certain tasks
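For readers who prefer Python over curl, the request above can be sketched with the standard library alone. The example builds, but does not send, a `multipart/form-data` POST carrying the `image`, `task`, and `prompt` fields. `build_process_image_request` is a hypothetical helper, the URL assumes a local server on the default port, and the response format is not covered because it is not described here.

```python
import io
import mimetypes
import urllib.request
import uuid


def build_process_image_request(
        image_name, image_bytes, task, prompt=None,
        url="http://localhost:8000/parse_media/process_image"):
    """Build a multipart/form-data POST equivalent to the curl command above."""
    boundary = uuid.uuid4().hex  # random separator between form parts
    fields = {"task": task}
    if prompt is not None:
        fields["prompt"] = prompt

    body = io.BytesIO()
    # Plain text form fields (task, and prompt when given).
    for name, value in fields.items():
        body.write(f"--{boundary}\r\n".encode())
        body.write(f'Content-Disposition: form-data; name="{name}"\r\n\r\n'.encode())
        body.write(value.encode("utf-8") + b"\r\n")

    # The image file part, sent under the field name "image".
    content_type = mimetypes.guess_type(image_name)[0] or "application/octet-stream"
    body.write(f"--{boundary}\r\n".encode())
    body.write(
        f'Content-Disposition: form-data; name="image"; filename="{image_name}"\r\n'.encode()
    )
    body.write(f"Content-Type: {content_type}\r\n\r\n".encode())
    body.write(image_bytes + b"\r\n")
    body.write(f"--{boundary}--\r\n".encode())  # closing boundary

    return urllib.request.Request(
        url,
        data=body.getvalue(),
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )
```

With a server running, the request could be sent with `urllib.request.urlopen(request)`, where `request` is built from the bytes of a real image file.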

Endpoint: /parse_media/video

Method: POST

Parses video files (MP4, AVI, MOV, MKV).

Curl command:

curl -X POST -F "file=@/path/to/video.mp4" http://localhost:8000/parse_media/video

Endpoint: /parse_media/audio

Method: POST

Parses audio files (MP3, WAV, FLAC).

Curl command:

curl -X POST -F "file=@/path/to/audio.mp3" http://localhost:8000/parse_media/audio

Endpoint: /parse_website

Method: POST

Parses a website given its URL.

Curl command:

curl -X POST -H "Content-Type: application/json" -d '{"url": "https://example.com"}' http://localhost:8000/parse_website

Arguments:

url: The URL of the website to parse
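Unlike the file endpoints, this one takes a JSON body rather than a form upload. A minimal standard-library sketch of the same call is shown below; `build_parse_website_request` is a hypothetical helper, and the endpoint URL assumes a local server on the default port.

```python
import json
import urllib.request


def build_parse_website_request(
        target_url, endpoint="http://localhost:8000/parse_website"):
    """Build a JSON POST equivalent to the curl command above (not sent here)."""
    payload = json.dumps({"url": target_url}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_parse_website_request("https://example.com")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

With a server running, `urllib.request.urlopen(request)` would submit the request.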

🦙 LlamaIndex | Langchain | Haystack integrations coming soon

📚 Batch processing data

⭐ Dynamic chunking and structured data extraction based on specified Schema

🛠️ One magic API: just feed in your file, prompt what you want, and we will take care of the rest

🔧 Dynamic model selection and support for external APIs

📄 Batch processing for handling multiple files at once

📦 New open-source model to replace Surya OCR and Marker

Final goal: replace all the different models currently being used with a single multimodal model that can parse any type of data and return the data you need.

OmniParse is licensed under the Apache License. See LICENSE for more information.

This project is built on top of the remarkable Marker project created by Vik Paruchuri. We express our gratitude for the inspiration and foundation provided by this project. Special thanks to Surya-OCR and Texify for the OCR models extensively used in this project, and to Crawl4AI for their contributions.

For any inquiries, please contact us at [email protected]