- 20/April/2023: We released our project on GitHub and Hugging Face!

Done

- Hugging Face Space

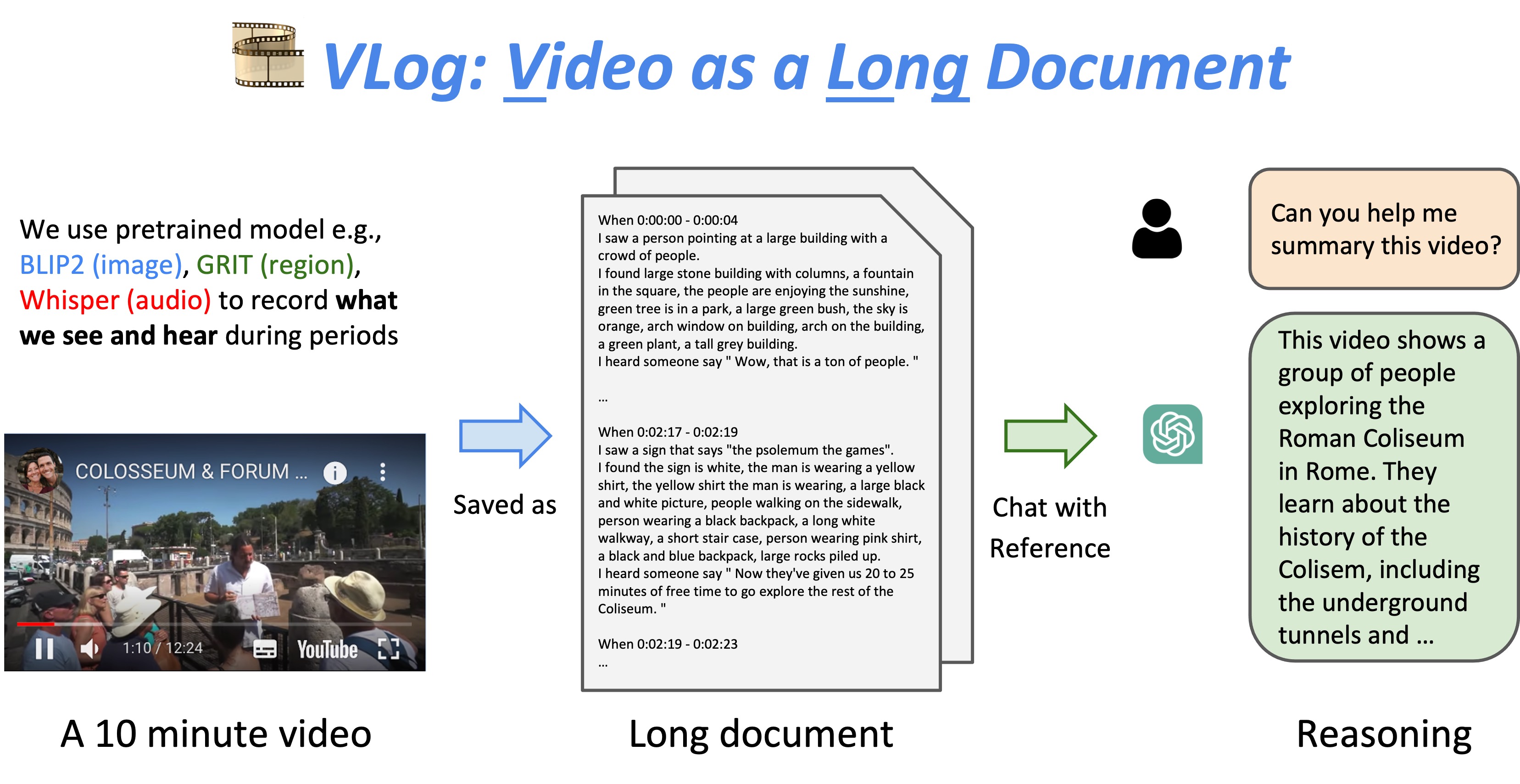

- LLM Reasoner: ChatGPT (multilingual) + LangChain

- Vision Captioner: BLIP2 + GRIT

- ASR Translator: Whisper (multilingual)

- Video Segmenter: KTS
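KTS (Kernel Temporal Segmentation) splits a video into segments. It places change points so that the frame features inside each segment vary as little as possible in a kernel feature space, and it adds a penalty on the number of segments. The sketch below is a simplified pure-Python illustration of that idea, not the KTS code this project uses. It assumes per-frame feature vectors have already been extracted, uses a linear kernel, and replaces the original KTS penalty with a hypothetical linear `penalty * m` term. `kts_segment` and its parameters are hypothetical names.

```python
from typing import List, Sequence


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Linear kernel: inner product of two feature vectors."""
    return sum(x * y for x, y in zip(a, b))


def kts_segment(
    features: List[Sequence[float]], max_change_points: int, penalty: float
) -> List[int]:
    """Return sorted change-point indices; each index starts a new segment.

    Hypothetical, simplified KTS-style segmentation: linear kernel, dynamic
    programming over change points, and a linear penalty on their count.
    """
    n = len(features)
    if n == 0:
        return []
    # Gram matrix and its 2D prefix sums, so any square block sum costs O(1).
    gram = [[_dot(features[i], features[j]) for j in range(n)] for i in range(n)]
    block = [[0.0] * (n + 1) for _ in range(n + 1)]
    for i in range(n):
        for j in range(n):
            block[i + 1][j + 1] = gram[i][j] + block[i][j + 1] + block[i + 1][j] - block[i][j]
    diag = [0.0] * (n + 1)
    for i in range(n):
        diag[i + 1] = diag[i] + gram[i][i]

    def cost(a: int, b: int) -> float:
        # Within-segment scatter of frames a..b-1 in kernel feature space.
        inner = block[b][b] - block[a][b] - block[b][a] + block[a][a]
        return (diag[b] - diag[a]) - inner / (b - a)

    inf = float("inf")
    m_max = max(0, min(max_change_points, n - 1))
    # dp[m][t]: minimal cost of splitting frames 0..t-1 into m + 1 segments.
    dp = [[inf] * (n + 1) for _ in range(m_max + 1)]
    back = [[0] * (n + 1) for _ in range(m_max + 1)]
    for t in range(1, n + 1):
        dp[0][t] = cost(0, t)
    for m in range(1, m_max + 1):
        for t in range(m + 1, n + 1):
            for s in range(m, t):  # s = start index of the last segment
                c = dp[m - 1][s] + cost(s, t)
                if c < dp[m][t]:
                    dp[m][t] = c
                    back[m][t] = s

    # Trade segmentation cost against the number of change points.
    best_m = min(range(m_max + 1), key=lambda m: dp[m][n] + penalty * m)
    change_points = []
    t = n
    for m in range(best_m, 0, -1):
        t = back[m][t]
        change_points.append(t)
    return sorted(change_points)
```

For example, features that switch between three constant values after frames 4 and 8 yield change points `[4, 8]`. Raising `penalty` favors fewer, longer segments. The cubic-time dynamic program is only meant for illustration on short feature sequences.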

Doing

There is still a lot of room for improvement, and we are working on it:

- Improve Vision Models: MiniGPT-4, LLaVA, the Segment Anything family

- Replace ChatGPT with our own trained LLM

- Improve ASR Translator

Please find installation instructions in install.md.

To generate a vlog from the command line:

```bash
python main.py --video_path "examples/demo.mp4"
```

The generated vlog is saved in `examples/demo.log`.
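The exact layout of the generated log is not described here. As a hypothetical illustration only, the sketch below shows one way the per-segment outputs of the components above (a time span from the video segmenter, a caption from the vision captioner, and a transcript from the ASR translator) could be merged into a plain-text document that an LLM reasoner can read. `Segment`, `build_vlog`, `save_vlog`, and the `Visual:`/`Speech:` layout are assumptions, not the project's actual types or format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    """One video segment (hypothetical container, not the project's own type)."""
    start_sec: float  # segment start, e.g. from the video segmenter
    end_sec: float    # segment end
    caption: str      # visual description, e.g. from the vision captioner
    transcript: str   # speech text, e.g. from the ASR translator ("" if silent)


def format_timestamp(sec: float) -> str:
    """Format seconds as MM:SS, truncating fractional seconds."""
    total = int(sec)
    return f"{total // 60:02d}:{total % 60:02d}"


def build_vlog(segments: List[Segment]) -> str:
    """Merge per-segment outputs into one plain-text document (assumed layout)."""
    lines: List[str] = []
    for seg in segments:
        lines.append(f"[{format_timestamp(seg.start_sec)} - {format_timestamp(seg.end_sec)}]")
        lines.append(f"Visual: {seg.caption}")
        if seg.transcript:  # skip the speech line for silent segments
            lines.append(f"Speech: {seg.transcript}")
        lines.append("")  # blank line between segments
    return "\n".join(lines)


def save_vlog(segments: List[Segment], path: str) -> None:
    """Write the document to a text file at the given path."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(build_vlog(segments))
```

For example, `build_vlog([Segment(0.0, 65.5, "a man walks a dog in a park", "what a nice day")])` starts with the line `[00:00 - 01:05]`. Keeping timestamps on every segment lets a downstream LLM answer "when" questions by quoting them back.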

To launch the Gradio interface:

```bash
python main_gradio.py
```

The project is still in progress, so stay tuned 🔥

If you have suggestions or features you would like to see implemented in this codebase, feel free to email us at kevin.qh.lin@gmail, [email protected], or open an issue.

This work is based on ChatGPT, BLIP2, GRIT, KTS, Whisper, LangChain, Image2Paragraph.

See other wonderful Video + LLM projects: Ask-anything, Socratic Models, Vid2Seq, LaViLa.