This is a simple version of a GPT model in about 300 lines of code.

The project is adapted from The Annotated Transformer.

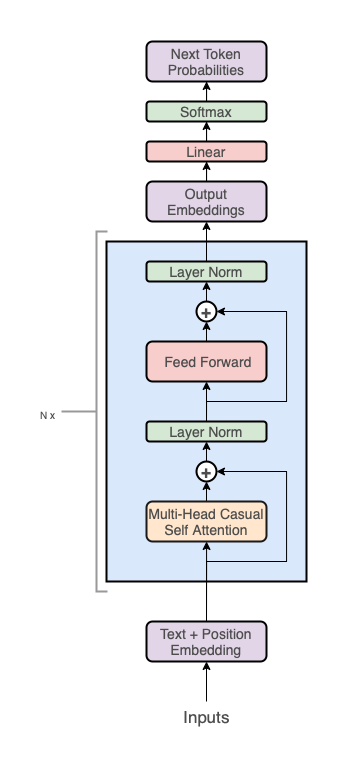

The Transformer is an encoder-decoder architecture, whereas GPT is a decoder-only architecture. This is the main difference between the two projects. Other than that, most of the code is the same as in The Annotated Transformer.
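
To make the decoder-only idea concrete, here is a minimal sketch in plain Python. It is not taken from this project's code. The names `causal_mask`, `ENCODER_DECODER_LAYER`, and `DECODER_ONLY_LAYER` are hypothetical and chosen only for illustration. It shows the two things that characterize a decoder-only layer: there is no cross-attention over an encoder output, and self-attention is restricted by a causal mask so that each position can only attend to itself and earlier positions.

```python
# Illustrative sketch only: names here are hypothetical, not this project's API.

# Sub-layers of a decoder layer in an encoder-decoder Transformer.
ENCODER_DECODER_LAYER = [
    "masked self-attention",
    "cross-attention over encoder output",
    "feed-forward",
]

# A decoder-only (GPT-style) layer has no encoder to attend to,
# so the cross-attention sub-layer is dropped.
DECODER_ONLY_LAYER = [
    name for name in ENCODER_DECODER_LAYER
    if not name.startswith("cross-attention")
]


def causal_mask(size):
    """Return a size x size mask as nested lists of booleans.

    mask[row][col] is True when position `row` may attend to position `col`,
    i.e. when col <= row (the current token or an earlier one).
    """
    return [[col <= row for col in range(size)] for row in range(size)]


if __name__ == "__main__":
    print(DECODER_ONLY_LAYER)
    for row in causal_mask(4):
        # Print 1 for allowed positions and 0 for masked (future) positions.
        print(" ".join("1" if allowed else "0" for allowed in row))
```

In a tensor library the same lower-triangular mask would typically be applied to the attention scores before the softmax, so that masked positions receive zero attention weight.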