- Non-autoregressive Expressive TTS: This project aims to provide a cornerstone for future research on, and applications of, non-autoregressive expressive TTS, including Emotional TTS and Conversational TTS. For datasets, the AIHub Multimodal Video AI datasets and the IEMOCAP database are used for Korean and English, respectively. Note: If you are interested in a GST-Tacotron- or VAE-Tacotron-like expressive stylistic TTS model with non-autoregressive decoding, you may also be interested in STYLER [demo, code].

- Annotated Data Processing: This project sheds light on how to handle a new dataset, even one in a different language, to successfully train a non-autoregressive emotional TTS model.

- English and Korean TTS: In addition to English, this project gives a broad view of handling Korean in non-autoregressive TTS, where additional data processing must account for language-specific features (e.g., training the Montreal Forced Aligner on your own language and dataset). Please look closely into `text/`.
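As one illustration of a Korean-specific step, text is often decomposed from precomposed Hangul syllables into conjoining jamo so that the symbol inventory for the aligner and the model stays small. Whether `text/` does exactly this is an assumption here; the sketch below only uses the standard Unicode Hangul composition arithmetic, and the helper name `decompose_hangul` is hypothetical.

```python
from typing import List

# Unicode constants for precomposed Hangul syllables (U+AC00..U+D7A3).
SBASE = 0xAC00
LBASE, VBASE, TBASE = 0x1100, 0x1161, 0x11A7  # conjoining jamo bases
VCOUNT, TCOUNT = 21, 28
NCOUNT = VCOUNT * TCOUNT  # 588 syllables per leading consonant
SCOUNT = 19 * NCOUNT      # 11172 precomposed syllables


def decompose_hangul(text: str) -> List[str]:
    """Split Hangul syllables into leading consonant, vowel, and optional
    trailing consonant jamo (hypothetical helper, not the repo's API).

    Non-Hangul characters (spaces, punctuation, Latin letters) pass through.
    """
    out: List[str] = []
    for ch in text:
        s_index = ord(ch) - SBASE
        if 0 <= s_index < SCOUNT:
            out.append(chr(LBASE + s_index // NCOUNT))
            out.append(chr(VBASE + (s_index % NCOUNT) // TCOUNT))
            t_index = s_index % TCOUNT
            if t_index:  # index 0 means "no trailing consonant"
                out.append(chr(TBASE + t_index))
        else:
            out.append(ch)
    return out
```

For example, "한" decomposes into the three jamo U+1112, U+1161, U+11AB, while "가" yields only two because it has no trailing consonant.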

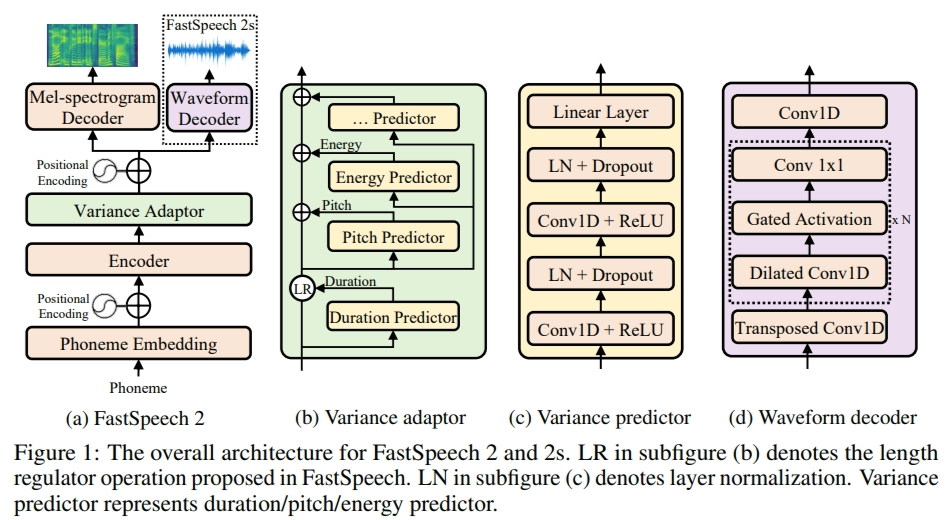

In this project, FastSpeech2 is adopted as the base non-autoregressive multi-speaker TTS framework, so it would be helpful to read its paper and code first (see also the FastSpeech2 branch).
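What makes FastSpeech2 non-autoregressive is that mel frames are not generated one at a time: each phoneme's hidden vector is repeated according to its (predicted or ground-truth) duration by a length regulator, and the decoder then processes all frames in parallel. A minimal pure-Python sketch of that expansion follows; the function name `length_regulate` and the `scale` parameter are illustrative assumptions, not this repository's code.

```python
from typing import List, Sequence


def length_regulate(hidden: Sequence[Sequence[float]],
                    durations: Sequence[int],
                    scale: float = 1.0) -> List[List[float]]:
    """Expand phoneme-level vectors to frame level (hypothetical helper).

    Each vector in `hidden` is repeated round(durations[i] * scale) times,
    never fewer than zero; `scale` > 1 yields slower, longer speech.
    """
    if len(hidden) != len(durations):
        raise ValueError("expected one duration per phoneme")
    frames: List[List[float]] = []
    for vec, dur in zip(hidden, durations):
        repeats = max(0, round(dur * scale))
        frames.extend(list(vec) for _ in range(repeats))
    return frames
```

With two phonemes and durations `[2, 3]`, the output has five frames; doubling `scale` doubles the frame count, which is the kind of duration-based speed control FastSpeech-style models allow.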

- Emotional TTS: The following branches implement the basic paradigm introduced by Emotional End-to-End Neural Speech Synthesizer.
  - categorical branch: conditions only on categorical emotional descriptors (such as happy, sad, etc.)
  - continuous branch: conditions on continuous emotional descriptors (such as arousal, valence, etc.) in addition to categorical emotional descriptors

- Conversational TTS: The following branch implements Conversational End-to-End TTS for Voice Agent.
  - conversational branch: conditions on chat history
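Conceptually, the three branches differ in which extra utterance-level vector is combined with the phoneme encoder output before duration and pitch/energy prediction. The sketch below assumes a simple additive design: a learned embedding per categorical emotion, a linear projection of continuous (arousal, valence) values, and a mean-pooled summary of previous-turn embeddings for chat history. All names, the additive combination, and the mean pooling are hypothetical simplifications, not taken from the branches themselves; a learned (e.g., recurrent) context encoder is the more typical choice for chat history.

```python
from typing import Dict, List, Optional, Sequence

Vector = List[float]


def _add(a: Sequence[float], b: Sequence[float]) -> Vector:
    """Element-wise sum of two equally sized vectors."""
    return [x + y for x, y in zip(a, b)]


def condition_encoder_output(
    encoder_out: Sequence[Sequence[float]],
    emotion: str,
    emotion_table: Dict[str, Vector],
    arousal_valence: Optional[Sequence[float]] = None,
    av_weights: Optional[Sequence[Sequence[float]]] = None,
    history: Optional[Sequence[Sequence[float]]] = None,
) -> List[Vector]:
    """Add one utterance-level style vector to every phoneme state (hypothetical)."""
    # categorical branch: look up a (learned) embedding for the emotion label
    style = list(emotion_table[emotion])
    if arousal_valence is not None and av_weights is not None:
        # continuous branch: project (arousal, valence) to the hidden size;
        # av_weights has one row of 2 weights per hidden dimension
        proj = [sum(w * x for w, x in zip(row, arousal_valence)) for row in av_weights]
        style = _add(style, proj)
    if history:
        # conversational branch: average previous-turn embeddings (simplification)
        ctx = [sum(col) / len(history) for col in zip(*history)]
        style = _add(style, ctx)
    return [_add(h, style) for h in encoder_out]
```

Because a single vector is broadcast over all phonemes, the sequence length is unchanged, so duration prediction and length regulation proceed exactly as in the speaker-conditioned FastSpeech2 base.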

If you would like to use or refer to this implementation, please cite the repo.

@misc{lee2021expressive_fastspeech2,
  author = {Lee, Keon},
  title = {Expressive-FastSpeech2},
  year = {2021},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/keonlee9420/Expressive-FastSpeech2}}
}