Logarithmic Memory Networks (LMNs) offer a novel approach to long-range sequence modeling, addressing challenges in computational and memory efficiency faced by traditional architectures such as Transformers and Recurrent Neural Networks (RNNs). This repository provides the implementation of LMNs and demonstrates their performance on sequence modeling tasks.

LMNs leverage a hierarchical logarithmic tree structure to efficiently store and retrieve historical information. This architecture employs:

- Parallel execution mode during training for efficient processing.

- Sequential execution mode during inference for reduced memory usage.

LMNs also eliminate the need for explicit positional encoding. Positional information is encoded implicitly by each token's place in the memory tree. This yields a robust, scalable architecture for sequence modeling tasks at lower computational cost.

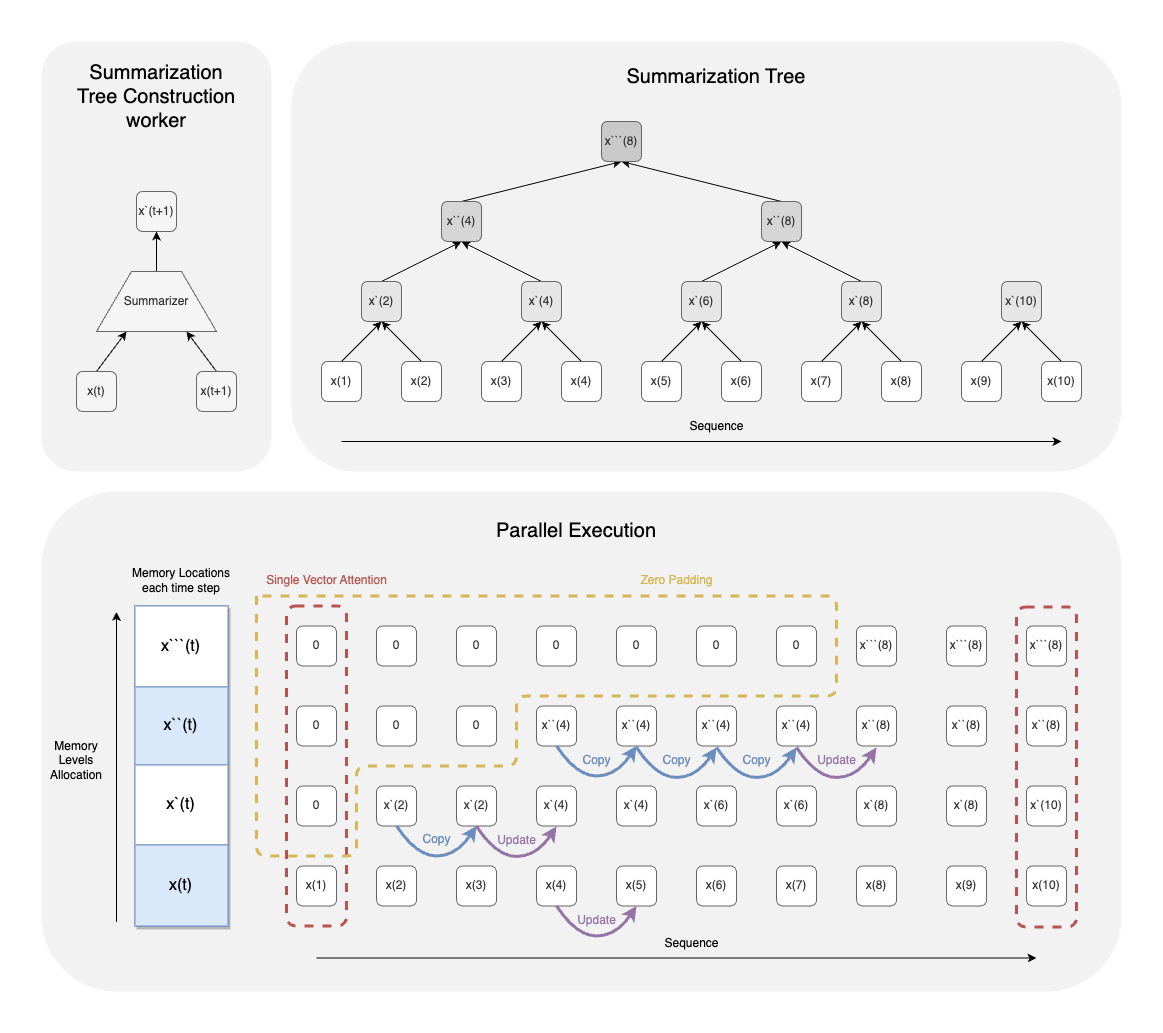

The LMN architecture introduces a summarization layer that builds a logarithmic tree to manage memory effectively. This enables efficient access to long-term dependencies with reduced complexity. The model also employs a single-vector targeted attention mechanism for precise retrieval of stored information.

During training (parallel mode), the summarization layer builds the logarithmic memory tree efficiently. This parallel execution speeds up processing and ensures that the hierarchical structure is properly optimized.
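The level-by-level construction described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the repository's implementation. `mean_summarize` is a hypothetical stand-in for the learned summarization layer, and the sequence length is assumed to be a power of two.

```python
from typing import Callable, List

Vector = List[float]


def mean_summarize(a: Vector, b: Vector) -> Vector:
    """Hypothetical stand-in for the learned summarization layer:
    merges two child vectors by element-wise averaging."""
    return [(x + y) / 2.0 for x, y in zip(a, b)]


def build_tree_parallel(
    tokens: List[Vector],
    summarize: Callable[[Vector, Vector], Vector] = mean_summarize,
) -> List[List[Vector]]:
    """Build a binary summary tree bottom-up, one level at a time.

    Merges within a level do not depend on each other, so a whole level
    could be computed in one batched call; only log2(n) level steps run
    in sequence. Assumes len(tokens) is a power of two (sketch only).
    """
    n = len(tokens)
    if n == 0 or n & (n - 1):
        raise ValueError("sketch assumes a power-of-two sequence length")
    levels = [tokens]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        # Pair adjacent nodes (0,1), (2,3), ... and summarize each pair.
        levels.append([summarize(prev[i], prev[i + 1]) for i in range(0, len(prev), 2)])
    return levels


tokens = [[float(i)] for i in range(8)]
levels = build_tree_parallel(tokens)
print(len(levels) - 1)  # 3 merge levels for 8 tokens
print(levels[-1])       # [[3.5]], the root summary
```

A framework implementation would turn each level into a single tensor operation. The Python loop here only keeps the sketch readable.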

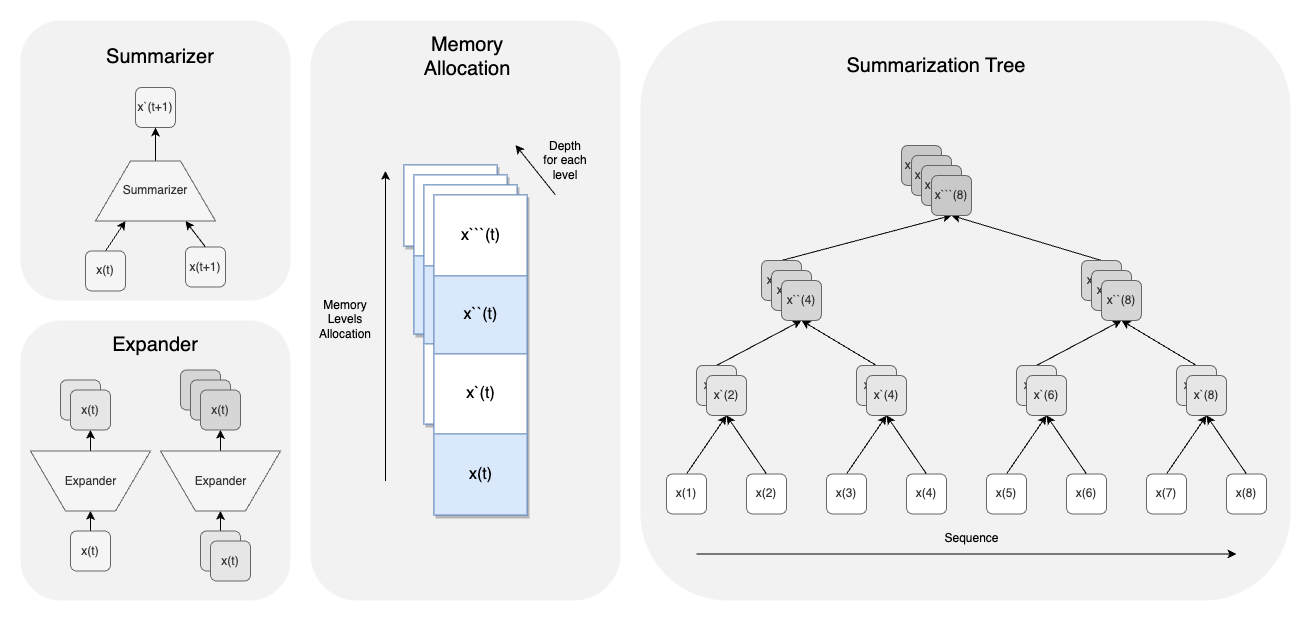

In inference (sequential mode), LMNs dynamically summarize memory, reducing the memory footprint significantly. This mode processes sequences step-by-step, making it ideal for memory-constrained devices.
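Step-by-step summarization can be pictured as a binary counter over tree levels, which keeps memory logarithmic in the number of tokens seen. The sketch below assumes that scheme. `LogMemory` and `mean_summarize` are hypothetical names, and averaging stands in for the learned summarizer.

```python
from typing import Callable, List, Optional

Vector = List[float]


def mean_summarize(a: Vector, b: Vector) -> Vector:
    """Hypothetical placeholder for the learned summarization layer."""
    return [(x + y) / 2.0 for x, y in zip(a, b)]


class LogMemory:
    """Streaming memory holding at most one summary per tree level.

    Pushing a token works like incrementing a binary counter. Two
    summaries of the same level are merged and carried upward, so after
    n tokens at most floor(log2(n)) + 1 vectors are stored.
    """

    def __init__(self, summarize: Callable[[Vector, Vector], Vector] = mean_summarize):
        self.summarize = summarize
        self.slots: List[Optional[Vector]] = []  # slots[k] summarizes 2**k tokens

    def push(self, token: Vector) -> None:
        carry = token
        level = 0
        while True:
            if level == len(self.slots):
                self.slots.append(carry)
                return
            if self.slots[level] is None:
                self.slots[level] = carry
                return
            # Slot occupied: merge older summary (left) with the carry (right).
            carry = self.summarize(self.slots[level], carry)
            self.slots[level] = None
            level += 1

    def memory(self) -> List[Vector]:
        """Stored summaries, oldest (highest level) first."""
        return [s for s in reversed(self.slots) if s is not None]


mem = LogMemory()
for i in range(13):
    mem.push([float(i)])
print(len(mem.memory()))  # 3
print(mem.memory())       # [[3.5], [9.5], [12.0]]
```

For 13 tokens (binary `1101`), only three summaries are kept: one covers tokens 0 to 7, one covers tokens 8 to 11, and the last holds token 12.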

The LMN employs a single-vector attention mechanism, which efficiently retrieves relevant information from memory without the need for computationally expensive multi-vector attention operations.
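The following is a minimal sketch of attention with one query vector. It assumes standard scaled dot-product scoring over the stored memory summaries. The LMN's exact scoring and projections may differ, and `single_query_attention` is a hypothetical name. With m memory slots of width d, one step costs O(m·d). Under a logarithmic memory, m stays around log2(n) instead of growing with every past token.

```python
import math
from typing import List

Vector = List[float]


def single_query_attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for ONE query vector over m memory slots."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    # Numerically stable softmax over the memory slots.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of the value vectors.
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


memory = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # e.g. summaries from the log tree
out = single_query_attention([0.0, 0.0], memory, memory)
print(out)  # zero query gives uniform weights, i.e. the mean of the values
```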

The hierarchical tree implicitly encodes each token’s relative position as its path through the tree (equivalently, a binary representation) during both parallel and sequential summarization.
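A tree path and a binary index carry the same information. Consider a complete binary tree over 2^depth leaves. A token's root-to-leaf path, read as a sequence of left/right turns, is its position written in binary. This illustrates the idea under an assumed encoding and is not the repository's code. `tree_path` is a hypothetical helper.

```python
def tree_path(position: int, depth: int) -> str:
    """Root-to-leaf path of a leaf in a complete binary tree of the given depth.

    'L' means left child and 'R' means right child. The path is the binary
    representation of the position, most significant bit first.
    """
    if depth < 0 or not 0 <= position < 2 ** depth:
        raise ValueError("position out of range for this depth")
    if depth == 0:
        return ""  # a single-leaf tree has an empty path
    bits = format(position, f"0{depth}b")
    return "".join("R" if b == "1" else "L" for b in bits)


for pos in range(4):
    print(pos, tree_path(pos, 2))  # 0 LL, 1 LR, 2 RL, 3 RR
```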

The Expander Summarizer Architecture uses hierarchical memory and an expander layer with 1D transposed convolution to improve long-sequence processing. It achieves scalability with a complexity of $O(\frac{k}{2} \cdot \log^2 n)$ while retaining critical information efficiently.
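The text above does not spell out the expander layer's configuration. The sketch below only shows what a single-channel 1D transposed convolution does: it upsamples a short sequence, such as a few memory summaries, into a longer one. The kernel values and stride are arbitrary illustrative choices, and `conv_transpose_1d` is a hypothetical helper.

```python
from typing import List


def conv_transpose_1d(x: List[float], kernel: List[float], stride: int) -> List[float]:
    """Single-channel 1D transposed convolution (no padding, no bias).

    Each input element scatters a scaled copy of the kernel into the
    output starting at index i * stride. The output length is therefore
    (len(x) - 1) * stride + len(kernel).
    """
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, xi in enumerate(x):
        for j, kj in enumerate(kernel):
            out[i * stride + j] += xi * kj
    return out


# Expand 3 summary values to 6 positions with kernel size 2 and stride 2.
expanded = conv_transpose_1d([1.0, 2.0, 3.0], kernel=[0.5, 1.0], stride=2)
print(expanded)  # [0.5, 1.0, 1.0, 2.0, 1.5, 3.0]
```

When the kernel is longer than the stride, neighbouring contributions overlap and are summed. This is how a transposed convolution blends adjacent summaries while expanding them.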

- `./Source`: Contains the core implementation of the LMN architecture.

- `./Notebook`: A Jupyter notebook that demonstrates the use of LMNs on sequence modeling tasks.

- `./Figures`: Visualizations used in this repository and the accompanying paper.


Clone this repository:

```bash
git clone https://github.com/AhmedBoin/LogarithmicMemory.git
cd LogarithmicMemory
```

Explore the test_notebook.ipynb notebook to test and visualize LMN performance.

📜 License

This repository is licensed under the MIT License. See the LICENSE file for details.

✍️ Citation

If you use this repository in your research or find it helpful, please cite our work as:

```bibtex
@article{Taha2025LogMem,
  author  = {Mohamed A. Taha},
  title   = {Logarithmic Memory Networks (LMNs): Efficient Long-Range Sequence Modeling for Resource-Constrained Environments},
  journal = {arXiv preprint},
  volume  = {arXiv:2501.07905},
  year    = {2025},
  note    = {Under consideration at Springer: Artificial Intelligence Review},
  url     = {https://arxiv.org/abs/2501.07905}
}
```

📬 Contact

For questions, collaborations, or feedback, feel free to reach out:

• 📧 Gmail: [[email protected]]

• 💼 LinkedIn: [https://www.linkedin.com/in/ahmed-boin/]

• 🐦 Twitter: [https://x.com/AhmedBoin]

I would like to express my deepest gratitude to Radwa A. Rakha for her invaluable support, encouragement, and guidance throughout the development and presentation of this research. Her insightful feedback and unwavering enthusiasm played a pivotal role in refining this work. I am truly grateful for her contributions and dedication, which have greatly enriched the quality of this study.

Happy coding! 😊