TODO

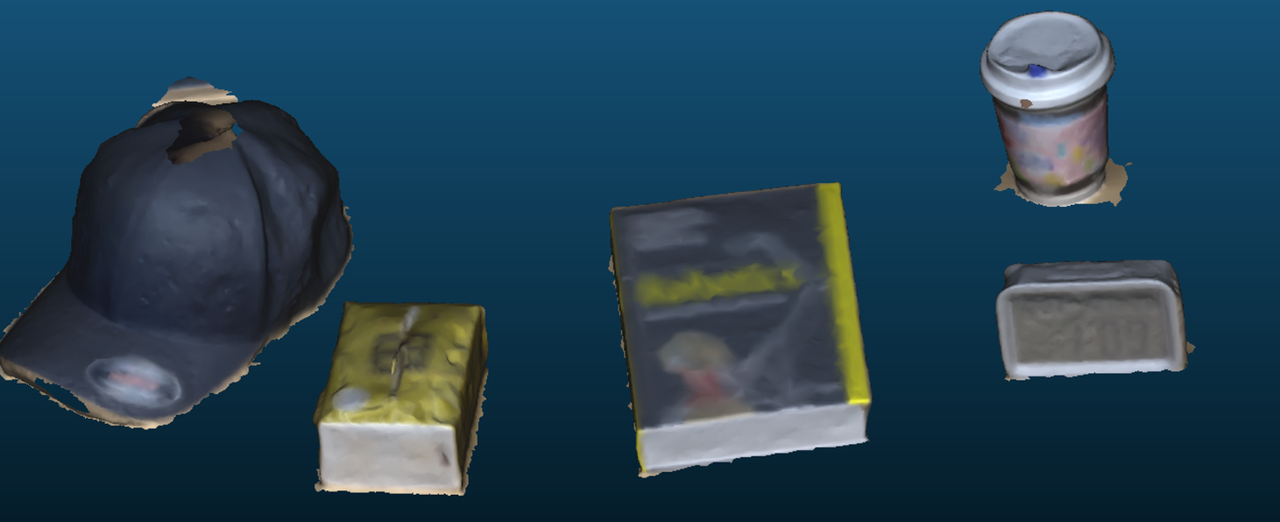

- Pose Estimation, 2D Segmentation

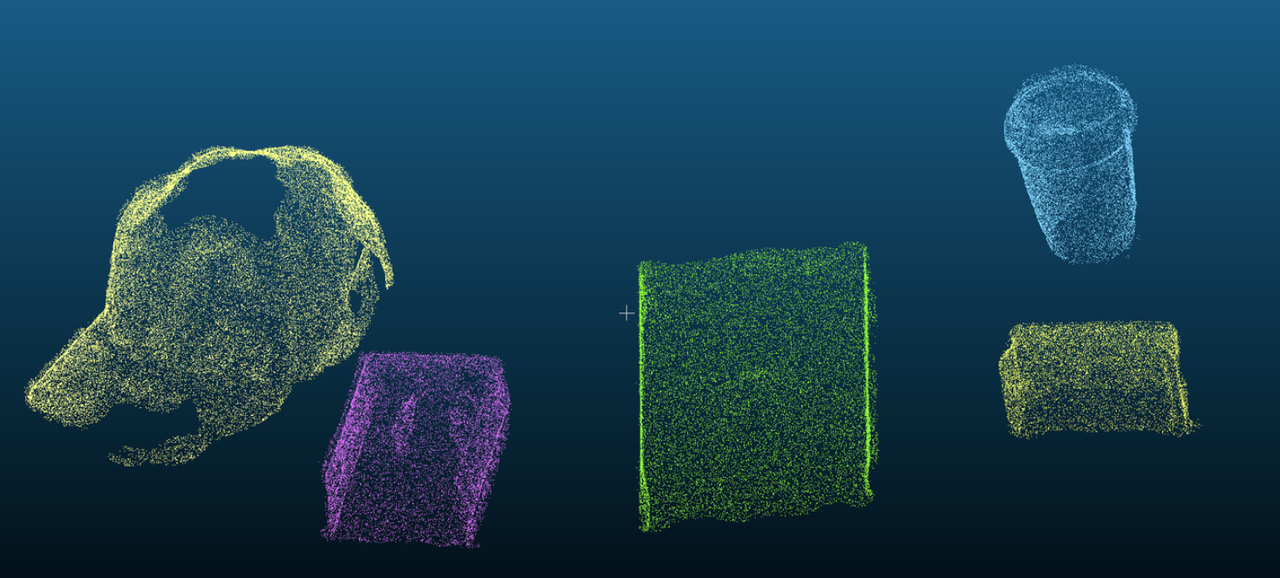

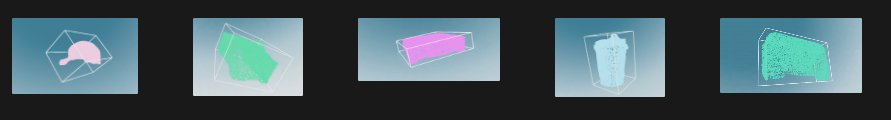

- Dense Reconstruction, 3D Segmentation

- Assist-GS Reconstruction, Scene Decomposition

- LLM Label Annotations

- Simulation

## clone our code

git clone --recursive https://github.com/BigCiLeng/R2G.git

## create the env

conda create -n r2g python=3.10

## install the dependency

pip install -r ./requirements.txt

Please install assist-gs following the guidance here for decomposition GS modeling.

Please install SAMpro3D following the guidance here for zero-shot 3D segmentation.

Please install Cutie following the guidance here for video segmentation.

Please install OpenMVS-2.1.0 following the guidance here for dense initialization of your datasets.

If your images carry GPS information in their EXIF data, install COLMAP to use `spatial_matching`, which utilizes the GPS info.

You can use Metashape Professional 2.1.0 in place of Hloc and OpenMVS; both dense and sparse point cloud exports from Metashape are supported.

TODO

Example Dataset Format

    <location>
    ├── align_matrix.txt
    ├── sparse_pc.ply
    ├── dense_pc.ply
    ├── transform_matrix.json
    ├── transforms.json
    ├── depth
    │   ├── frame_00001.tif
    │   └── frame_xxxxx.tif
    ├── images
    │   ├── frame_00001.png
    │   └── frame_xxxxx.png
    └── sparse
        └── 0
            ├── cameras.bin
            ├── images.bin
            ├── points3D.bin
            └── points3D.ply

Use the demo EfficientSAM implemented by EfficientSAM: Leveraged Masked Image Pretraining for Efficient Segment Anything.

Example Result:

    <location>
    └── initial_mask
        ├── frame_00001.png
        └── frame_xxxxx.png

TODO

    <location>
    └── instance
        ├── frame_00001.png
        └── frame_xxxxx.png

TODO

    <location>
    ├── align_matrix.txt
    ├── bboxs_aabb.npy
    └── models
        ├── point_cloud
        │   ├── obj_00001.ply
        │   └── obj_xxxxx.ply
        └── mesh
            ├── obj_00001.ply
            └── obj_xxxxx.ply

TODO

    <location>
    └── models
        └── gaussians
            ├── obj_00001.ply
            └── obj_xxxxx.ply

gpt4_method_json:

    // template.json must follow the example shown below
    {
      "instance_id": "",
      "label": {
        "name": "",
        "color": "",
        "description": "a red apple"
      },
      "physics": {
        "mass": "200",
        "collider": "convexHull",
        "rigid_body": "cube"
      }
    }
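As a rough aid for checking generated annotations against this template, the sketch below reports which template fields are missing from an annotation. It assumes that `instance_id`, the three `label` fields, and the `mass` and `collider` fields of `physics` are required, and it treats `rigid_body` as optional. `REQUIRED_FIELDS` and `missing_fields` are hypothetical names and are not part of R2G.

```python
import json

# Field names are copied from the template.json example.
# Treating them as mandatory is an assumption; "rigid_body" is treated as optional.
REQUIRED_FIELDS = {
    "instance_id": None,
    "label": ("name", "color", "description"),
    "physics": ("mass", "collider"),
}


def missing_fields(annotation: dict) -> list[str]:
    """Return dotted paths of template fields that are absent from an annotation."""
    missing = []
    for key, children in REQUIRED_FIELDS.items():
        if key not in annotation:
            missing.append(key)
            continue
        if children is None:
            continue
        section = annotation[key]
        if not isinstance(section, dict):
            # A nested section must be a JSON object to hold its child fields.
            missing.append(key)
            continue
        for child in children:
            if child not in section:
                missing.append(f"{key}.{child}")
    return missing


# Hypothetical annotation built from the template's own placeholder values.
example = json.loads("""
{
  "instance_id": "1",
  "label": {"name": "apple", "color": "red", "description": "a red apple"},
  "physics": {"mass": "200", "collider": "convexHull"}
}
""")
print(missing_fields(example))  # prints []
```

An empty list means every assumed field is present; any other result names the fields to fill in before the annotation is used downstream.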

    // instance_color_map.json must follow the example shown below
    {
      "0": [0, 0, 0],
      "1": [255, 0, 0],
      "2": [0, 255, 0],
      "3": [0, 0, 255],
      "4": [255, 255, 0],
      "5": [255, 0, 255]
    }

Example Result:

    // SINGLE Mask
    {
      "instance_id": "1",
      "label": {
        "name": "baseball cap",
        "color": "black",
        "description": "a black baseball cap with a curved brim and an adjustable strap in the back"
      },
      "physics": {
        "mass": "0.1",
        "collider": "mesh"
      }
    }

    // SCENE Mask
    {
      {
        "instance_id": "1",
        "label": {
          "name": "book",
          "color": "red",
          "description": "a thick book titled Robotics with a multicolor cover"
        },
        "physics": {
          "mass": "1.5",
          "collider": "box"
        }
      },
      //...
    }

    <location>
    └── models
        └── label
            ├── obj_00001.json
            └── obj_xxxxx.json

TODO

Example Result:

Media1.mp4

Media2.mp4

TODO

Please first put the images you want to use in a directory <location>/images.
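A minimal sketch of this staging step, assuming the inputs are already PNG files and that the `frame_00001.png` naming shown in the directory listings is the one you want. `stage_images` is a hypothetical helper and is not part of R2G.

```python
import shutil
from pathlib import Path


def stage_images(source_dir: str, location: str) -> list[Path]:
    """Copy PNG files into <location>/images as frame_00001.png, frame_00002.png, ..."""
    images_dir = Path(location) / "images"
    images_dir.mkdir(parents=True, exist_ok=True)

    staged = []
    # Sort by file name so the frame numbering is reproducible.
    sources = sorted(Path(source_dir).glob("*.png"))
    for index, src in enumerate(sources, start=1):
        dst = images_dir / f"frame_{index:05d}.png"
        shutil.copy2(src, dst)  # copy rather than move, leaving the originals untouched
        staged.append(dst)
    return staged
```

Calling `stage_images("raw_photos", "my_scene")` would fill `my_scene/images`; both paths are placeholders. Images in other formats would need to be converted first, which this sketch does not attempt.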

The final data format:

    <location>
    ├── align_matrix.txt
    ├── bboxs_aabb.npy
    ├── sparse_pc.ply
    ├── transform_matrix.json
    ├── transforms.json
    ├── depth
    │   ├── frame_00001.tif
    │   └── frame_xxxxx.tif
    ├── images
    │   ├── frame_00001.png
    │   └── frame_xxxxx.png
    ├── initial_mask
    │   ├── frame_00001.png
    │   └── frame_xxxxx.png
    ├── instance
    │   ├── frame_00001.png
    │   └── frame_xxxxx.png
    ├── models
    │   ├── gaussians
    │   │   ├── obj_00001.ply
    │   │   └── obj_xxxxx.ply
    │   ├── point_cloud
    │   │   ├── obj_00001.ply
    │   │   └── obj_xxxxx.ply
    │   ├── label
    │   │   ├── obj_00001.json
    │   │   └── obj_xxxxx.json
    │   └── mesh
    │       ├── obj_00001.ply
    │       └── obj_xxxxx.ply
    └── sparse
        └── 0
            ├── cameras.bin
            ├── images.bin
            ├── points3D.bin
            └── points3D.ply
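
The layout above can be checked mechanically. The following sketch lists which of the files and directories named in the tree are absent under a given `<location>`. Whether every entry is strictly required by each stage is an assumption, and `EXPECTED_FILES`, `EXPECTED_DIRS`, and `missing_entries` are hypothetical names that are not part of R2G.

```python
from pathlib import Path

# Entries copied from the final data format tree.
# Treating all of them as mandatory is an assumption.
EXPECTED_FILES = [
    "align_matrix.txt",
    "bboxs_aabb.npy",
    "sparse_pc.ply",
    "transform_matrix.json",
    "transforms.json",
    "sparse/0/cameras.bin",
    "sparse/0/images.bin",
    "sparse/0/points3D.bin",
    "sparse/0/points3D.ply",
]
EXPECTED_DIRS = [
    "depth",
    "images",
    "initial_mask",
    "instance",
    "models/gaussians",
    "models/point_cloud",
    "models/label",
    "models/mesh",
]


def missing_entries(location: str) -> list[str]:
    """Return expected files and directories that do not exist under location."""
    root = Path(location)
    missing = [name for name in EXPECTED_FILES if not (root / name).is_file()]
    # Directories are reported with a trailing slash to tell them apart from files.
    missing += [name + "/" for name in EXPECTED_DIRS if not (root / name).is_dir()]
    return missing
```

Running `missing_entries` on a scene directory after each stage gives a quick view of which outputs have been produced so far; an empty list means the full layout is in place.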