FitDiT is designed for high-fidelity virtual try-on using Diffusion Transformers (DiT).

2025/1/16: We provide a ComfyUI version of FitDiT, so you can now use FitDiT in ComfyUI.

Download or clone the FitDiT-ComfyUI branch of the repo and place it in the ComfyUI/custom_nodes/ directory. The steps are:

- Go to the ComfyUI/custom_nodes directory in a terminal (cmd).

- Run git clone https://github.com/BoyuanJiang/FitDiT.git -b FitDiT-ComfyUI FitDiT

- Restart ComfyUI.
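The clone step can also be scripted. The following is a minimal sketch, assuming `git` is on your PATH; the helper names `build_clone_command` and `install_fitdit_node` are hypothetical and not part of FitDiT.

```python
import subprocess
from pathlib import Path

REPO_URL = "https://github.com/BoyuanJiang/FitDiT.git"
BRANCH = "FitDiT-ComfyUI"


def build_clone_command(target_name: str = "FitDiT") -> list:
    """Return the git clone command from the steps above as an argument list."""
    return ["git", "clone", REPO_URL, "-b", BRANCH, target_name]


def install_fitdit_node(comfyui_root: str) -> Path:
    """Clone the FitDiT-ComfyUI branch into <comfyui_root>/custom_nodes."""
    custom_nodes = Path(comfyui_root) / "custom_nodes"
    if not custom_nodes.is_dir():
        raise FileNotFoundError(f"{custom_nodes} not found; check the ComfyUI path")
    # Same effect as running the git command from inside custom_nodes.
    subprocess.run(build_clone_command(), cwd=custom_nodes, check=True)
    return custom_nodes / "FitDiT"
```

Calling `install_fitdit_node("/path/to/ComfyUI")` performs the clone; ComfyUI still needs to be restarted afterwards.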

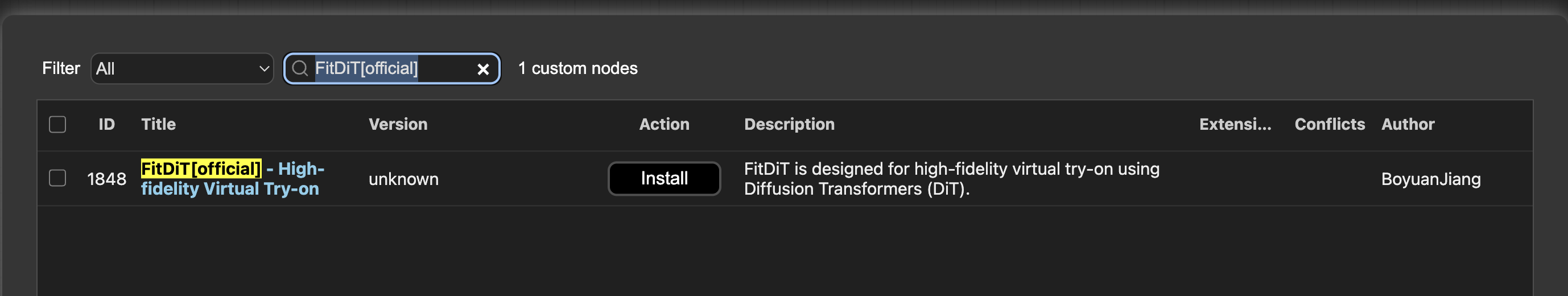

You can also install FitDiT through ComfyUI-Manager by searching for FitDiT[official].

FitDiT was tested in the following environment, but other versions should also work. You can try your existing environment first.

- torch==2.4.0

- torchvision==0.19.0

- accelerate==0.31.0

- diffusers==0.31.0

- transformers==4.39.3

- numpy==1.23.0

- scikit-image==0.24.0

- huggingface_hub==0.26.5

- onnxruntime==1.20.1

- opencv-python

- matplotlib==3.8.3

- einops==0.7.0
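To see how an existing environment compares with the tested one, a small check like the one below may help. It is only a sketch: the function name `find_version_differences` is hypothetical, and a difference does not mean FitDiT will fail, since other versions should also work.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions from the tested environment above (opencv-python is listed unpinned).
TESTED_VERSIONS = {
    "torch": "2.4.0",
    "torchvision": "0.19.0",
    "accelerate": "0.31.0",
    "diffusers": "0.31.0",
    "transformers": "4.39.3",
    "numpy": "1.23.0",
    "scikit-image": "0.24.0",
    "huggingface_hub": "0.26.5",
    "onnxruntime": "1.20.1",
    "matplotlib": "3.8.3",
    "einops": "0.7.0",
}


def find_version_differences(get_version=version) -> dict:
    """Map package name to (tested, installed) for each package that differs.

    installed is None when the package is not installed.
    """
    differences = {}
    for name, tested in TESTED_VERSIONS.items():
        try:
            installed = get_version(name)
        except PackageNotFoundError:
            installed = None
        # Ignore local build tags such as "+cu121" when comparing.
        base = installed.split("+")[0] if installed is not None else None
        if base != tested:
            differences[name] = (tested, installed)
    return differences


for pkg, (tested, installed) in find_version_differences().items():
    print(f"{pkg}: tested with {tested}, found {installed}")
```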

Download the FitDiT model and place it in the ComfyUI/models/FitDiT_models directory. Download clip-vit-large-patch14 and CLIP-ViT-bigG-14 and place them in the ComfyUI/models/clip directory.

You can download the models with the following commands:

pip install -U huggingface_hub

python download_model.py --dir /path/to/ComfyUI/
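After the download finishes, it can be useful to confirm that the folders landed where ComfyUI expects them. The sketch below checks the layout described above. The two CLIP sub-folder names are an assumption taken from the model names, and `missing_model_dirs` is a hypothetical helper, so adjust both to match what the download actually produced.

```python
from pathlib import Path

# Locations relative to the ComfyUI root, as described above.
# ASSUMPTION: each CLIP model sits in a sub-folder named after the model.
EXPECTED_DIRS = [
    Path("models") / "FitDiT_models",
    Path("models") / "clip" / "clip-vit-large-patch14",
    Path("models") / "clip" / "CLIP-ViT-bigG-14",
]


def missing_model_dirs(comfyui_root: str) -> list:
    """Return the expected model folders that do not exist under comfyui_root."""
    root = Path(comfyui_root)
    return [rel.as_posix() for rel in EXPECTED_DIRS if not (root / rel).is_dir()]
```

For example, `missing_model_dirs("/path/to/ComfyUI")` returns an empty list when all three folders are present.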

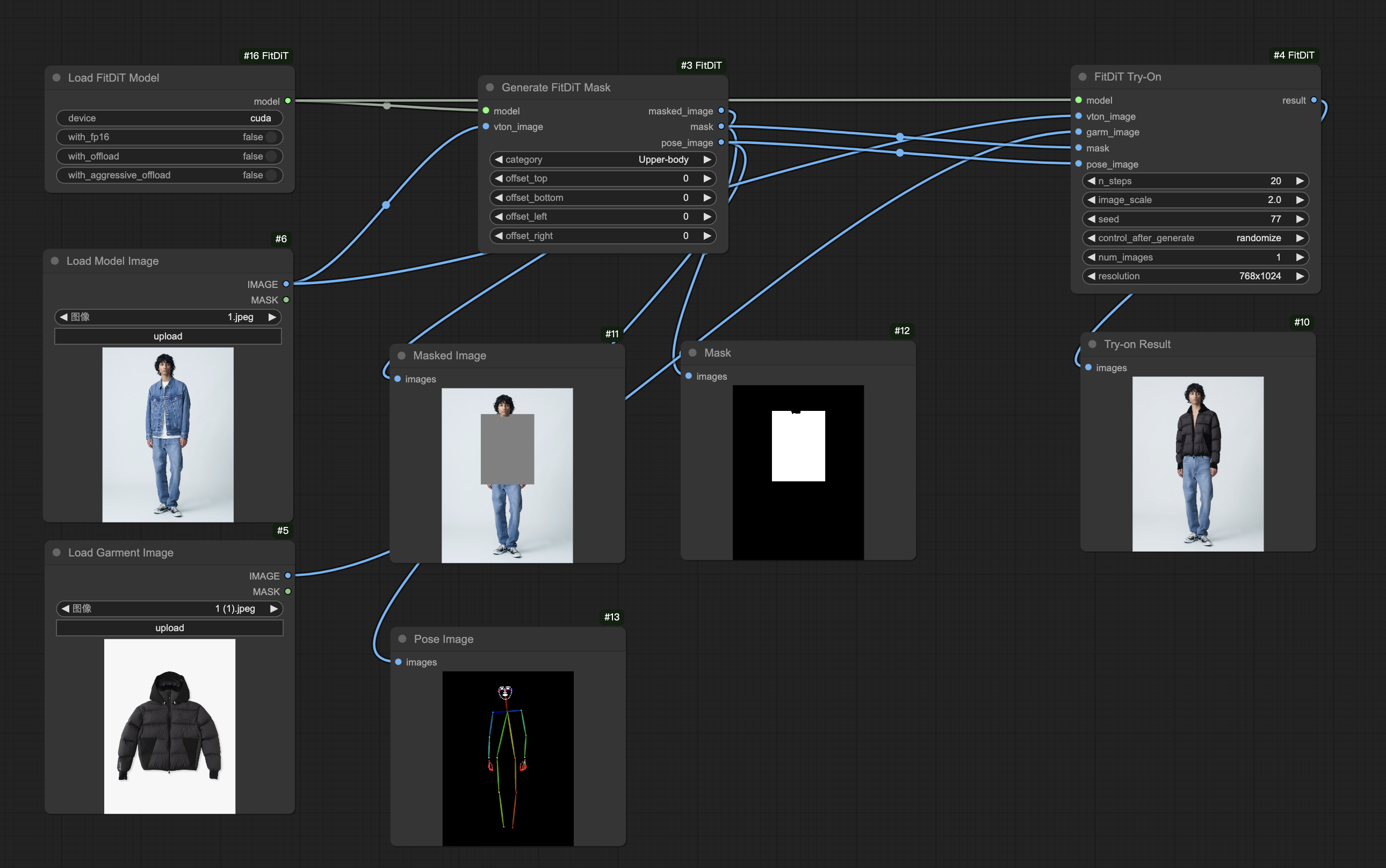

fitdit_workflow.json is an example FitDiT workflow for ComfyUI. If GPU memory is limited, set with_offload or with_aggressive_offload to True. Setting with_offload to True gives moderate GPU memory use and moderate inference time. Setting with_aggressive_offload to True gives the lowest GPU memory use and the longest inference time.
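The trade-off between the two options can be summarized as a small lookup. This is an illustrative sketch only: the profile names and the `offload_settings` helper are hypothetical, and it assumes both options default to False and that only one is enabled at a time.

```python
# Hypothetical profile names mapped to the two node options described above.
OFFLOAD_PROFILES = {
    # No offloading (assumed default).
    "no_offload": {"with_offload": False, "with_aggressive_offload": False},
    # Moderate GPU memory, moderate inference time.
    "moderate_memory": {"with_offload": True, "with_aggressive_offload": False},
    # Lowest GPU memory, longest inference time.
    "lowest_memory": {"with_offload": False, "with_aggressive_offload": True},
}


def offload_settings(profile: str) -> dict:
    """Return the option values to enter in the FitDiT node for a profile."""
    if profile not in OFFLOAD_PROFILES:
        raise ValueError(f"unknown profile {profile!r}; choose from {sorted(OFFLOAD_PROFILES)}")
    return dict(OFFLOAD_PROFILES[profile])


print(offload_settings("moderate_memory"))
```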

This model is for non-commercial use only. For commercial use, please visit Tencent Cloud for support.

If you find our work helpful for your research, please consider citing our work.

@misc{jiang2024fitditadvancingauthenticgarment,
  title={FitDiT: Advancing the Authentic Garment Details for High-fidelity Virtual Try-on},
  author={Boyuan Jiang and Xiaobin Hu and Donghao Luo and Qingdong He and Chengming Xu and Jinlong Peng and Jiangning Zhang and Chengjie Wang and Yunsheng Wu and Yanwei Fu},
  year={2024},
  eprint={2411.10499},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2411.10499},
}