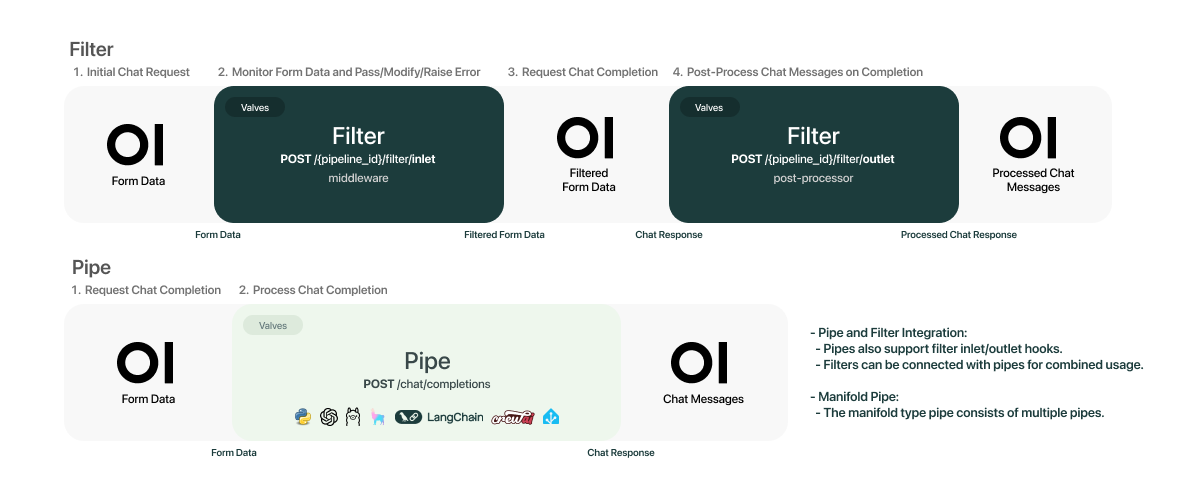

Welcome to Pipelines, an Open WebUI initiative. Pipelines bring modular, customizable workflows to any UI client supporting OpenAI API specs – and much more! Easily extend functionalities, integrate unique logic, and create dynamic workflows with just a few lines of code.

- Start pipeline container

git clone https://github.com/CYH4157/cyh_pipelines.git

cd cyh_pipelines

docker build -t cyh-pipelines:latest .

docker run -d -p 9099:9099 --add-host=host.docker.internal:host-gateway -v $(pwd):/app --name pipelines --network hydra_llm_network --restart always cyh-pipelines

- Use the hydra docker-compose to start the qdrant container, then put your qdrant embedding data in place

cd

mkdir -p storage_qdrant/collections

mv your_embedding_data ./storage_qdrant/collections/your_embedding_data

- Download the Ollama models

docker exec -it ollama ollama pull llama3.1:8b-instruct-fp16

docker exec -it ollama ollama pull chatfire/bge-m3:q8_0

- Configure the Open WebUI admin panel: open Open WebUI and connect it to the pipeline container

http://your_open_webui_ip:3000/

Default password:

0p3n-w3bu!

- Upload your code or provide a git URL

- Find your models

- Limitless Possibilities: Easily add custom logic and integrate Python libraries, from AI agents to home automation APIs.

- Seamless Integration: Compatible with any UI/client supporting OpenAI API specs. (Only pipe-type pipelines are supported; filter types require clients with Pipelines support.)

- Custom Hooks: Build and integrate custom pipelines.

- Function Calling Pipeline: Easily handle function calls and enhance your applications with custom logic.

- Custom RAG Pipeline: Implement sophisticated Retrieval-Augmented Generation pipelines tailored to your needs.

- Message Monitoring Using Langfuse: Monitor and analyze message interactions in real-time using Langfuse.

- Rate Limit Filter: Control the flow of requests to prevent exceeding rate limits.

- Real-Time Translation Filter with LibreTranslate: Seamlessly integrate real-time translations into your LLM interactions.

- Toxic Message Filter: Implement filters to detect and handle toxic messages effectively.

- And Much More!: The sky is the limit for what you can accomplish with Pipelines and Python. Check out our scaffolds to get a head start on your projects and see how you can streamline your development process!
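As a rough illustration of the "custom logic" idea in the list above, here is a minimal sketch of a pipe-type pipeline. It assumes a pipeline module exposes a class named `Pipeline` with a `pipe` method that receives the user message and returns a string; the exact method signature and the `name` attribute are assumptions here, so check the scaffolds in the examples directory before relying on them.

```python
from typing import Any, Dict, List


class Pipeline:
    """Hypothetical pipe-type pipeline that reverses the user's message."""

    def __init__(self) -> None:
        # Display name for the pipeline (attribute name is an assumption).
        self.name = "Reverse Text Pipeline"

    def pipe(
        self,
        user_message: str,
        model_id: str,
        messages: List[Dict[str, Any]],
        body: Dict[str, Any],
    ) -> str:
        # Any Python logic can run here: call a library, query a database,
        # or forward the request to another model. This sketch just
        # reverses the text so the behavior is easy to verify.
        return user_message[::-1]


if __name__ == "__main__":
    pipeline = Pipeline()
    print(pipeline.pipe("hello", "demo-model", [], {}))
```

The toy transformation stands in for whatever logic a real pipeline would run; the point is only that the body of `pipe` is ordinary Python.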

Integrating Pipelines with any OpenAI API-compatible UI client is simple. Launch your Pipelines instance and set the OpenAI URL on your client to the Pipelines URL. That's it! You're ready to leverage any Python library for your needs.
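To make "set the OpenAI URL to the Pipelines URL" concrete, the sketch below builds an OpenAI-style chat request aimed at a Pipelines instance using only the standard library. The `/v1/chat/completions` path follows the OpenAI API convention and is an assumption about the server's routes; the model id `my_pipeline` is hypothetical, and the default URL and key are the ones used in the Docker instructions in this README.

```python
import json
import urllib.request

# Defaults taken from the Docker quick start; adjust for your deployment.
PIPELINES_URL = "http://localhost:9099"
API_KEY = "0p3n-w3bu!"


def build_chat_request(
    model: str,
    prompt: str,
    base_url: str = PIPELINES_URL,
    api_key: str = API_KEY,
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # id of the pipeline to call (hypothetical here)
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",  # assumed OpenAI-style route
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (needs a live server)."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a Pipelines server running, `send(build_chat_request("my_pipeline", "Hello"))` would perform the call; any existing OpenAI client library pointed at the same base URL should behave equivalently.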

Warning

Pipelines are a plugin system with arbitrary code execution — don't fetch random pipelines from sources you don't trust.

For a streamlined setup using Docker:

- Run the Pipelines container:

docker run -d -p 9099:9099 --add-host=host.docker.internal:host-gateway -v pipelines:/app/pipelines --name pipelines --restart always ghcr.io/open-webui/pipelines:main

- Connect to Open WebUI:

  - Navigate to the Settings > Connections > OpenAI API section in Open WebUI.

  - Set the API URL to http://localhost:9099 and the API key to 0p3n-w3bu!. Your pipelines should now be active.

Note

If your Open WebUI is running in a Docker container, replace localhost with host.docker.internal in the API URL.

- Manage Configurations:

  - In the admin panel, go to Admin Settings > Pipelines tab.

  - Select your desired pipeline and modify the valve values directly from the WebUI.
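Valves are the per-pipeline settings edited in the step above. The following sketch shows one way a pipeline could read such settings at request time, using a simple rate limiter as the example. It is an illustration under assumptions: the valve names `max_requests` and `window_seconds` and the `allow` method are hypothetical, and a standard-library dataclass stands in for whatever model class the framework actually uses for valves.

```python
import time
from dataclasses import dataclass
from typing import List, Optional


class Pipeline:
    @dataclass
    class Valves:
        # Hypothetical valve: maximum requests allowed per window.
        max_requests: int = 3
        # Hypothetical valve: sliding window length in seconds.
        window_seconds: float = 60.0

    def __init__(self) -> None:
        self.valves = self.Valves()
        self._timestamps: List[float] = []

    def allow(self, now: Optional[float] = None) -> bool:
        """Return True if a request at time `now` fits within the limit."""
        now = time.time() if now is None else now
        cutoff = now - self.valves.window_seconds
        # Keep only the requests that are still inside the window.
        self._timestamps = [t for t in self._timestamps if t > cutoff]
        if len(self._timestamps) >= self.valves.max_requests:
            return False
        self._timestamps.append(now)
        return True
```

Because `allow` reads `self.valves` on every call, changing a valve value takes effect on the next request without restarting anything, which is the behavior the admin panel workflow relies on.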

Tip

If you are unable to connect, it is most likely a Docker networking issue. We encourage you to troubleshoot on your own and share your methods and solutions in the discussions forum.
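A quick way to start troubleshooting is to check whether the Pipelines port is reachable from where the client runs. The sketch below attempts a plain TCP connection; the helper name `can_connect` is hypothetical, and port 9099 is the one published in the `docker run` command above.

```python
import socket


def can_connect(host: str, port: int = 9099, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hostnames.
        return False


if __name__ == "__main__":
    # Try both hostnames mentioned in this README.
    for host in ("localhost", "host.docker.internal"):
        status = "reachable" if can_connect(host) else "not reachable"
        print(f"{host}:9099 is {status}")
```

Run it from the same environment as the client (for example, inside the Open WebUI container) so the result reflects the network path the client actually uses.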

If you need to install a custom pipeline with additional dependencies:

- Run the following command:

docker run -d -p 9099:9099 --add-host=host.docker.internal:host-gateway -e PIPELINES_URLS="https://github.com/open-webui/pipelines/blob/main/examples/filters/detoxify_filter_pipeline.py" -v pipelines:/app/pipelines --name pipelines --restart always ghcr.io/open-webui/pipelines:main

Alternatively, you can directly install pipelines from the admin settings by copying and pasting the pipeline URL, provided it doesn't have additional dependencies.

That's it! You're now ready to build customizable AI integrations effortlessly with Pipelines. Enjoy!

Get started with Pipelines in a few easy steps:

- Ensure Python 3.11 is installed.

- Clone the Pipelines repository:

git clone https://github.com/open-webui/pipelines.git

cd pipelines

- Install the required dependencies:

pip install -r requirements.txt

- Start the Pipelines server:

sh ./start.sh

Once the server is running, set the OpenAI URL on your client to the Pipelines URL. This unlocks the full capabilities of Pipelines, integrating any Python library and creating custom workflows tailored to your needs.

The /pipelines directory is the core of your setup. Add new modules, customize existing ones, and manage your workflows here. All the pipelines in the /pipelines directory will be automatically loaded when the server launches.

You can change this directory from /pipelines to another location using the PIPELINES_DIR env variable.
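The directory-based loading described above can be pictured with the following sketch. It is not the server's actual loader, only an illustration of the idea: read `PIPELINES_DIR` from the environment, fall back to a default, and import every Python file that defines a `Pipeline` class. The function name `load_pipeline_modules` and the `./pipelines` default path are assumptions.

```python
import importlib.util
import os
from pathlib import Path
from types import ModuleType
from typing import Dict


def load_pipeline_modules(default_dir: str = "./pipelines") -> Dict[str, ModuleType]:
    """Import every *.py file in the pipelines directory that defines Pipeline."""
    # PIPELINES_DIR overrides the default location when it is set.
    directory = Path(os.environ.get("PIPELINES_DIR", default_dir))
    modules: Dict[str, ModuleType] = {}
    if not directory.is_dir():
        return modules
    for path in sorted(directory.glob("*.py")):
        spec = importlib.util.spec_from_file_location(path.stem, path)
        if spec is None or spec.loader is None:
            continue
        module = importlib.util.module_from_spec(spec)
        spec.loader.exec_module(module)  # runs the file's top-level code
        # Skip helper modules that do not define a Pipeline class.
        if hasattr(module, "Pipeline"):
            modules[path.stem] = module
    return modules


if __name__ == "__main__":
    for name in load_pipeline_modules():
        print(f"loaded pipeline module: {name}")
```

Note that importing a file executes its top-level code, which is why the earlier warning about untrusted pipelines matters.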

Find various integration examples in the /examples directory. These examples show how to integrate different functionalities, providing a foundation for building your own custom pipelines.

We’re continuously evolving! We'd love to hear your feedback and understand which hooks and features would best suit your use case. Feel free to reach out and become a part of our Open WebUI community!

Our vision is to push Pipelines to become the ultimate plugin framework for our AI interface, Open WebUI. Imagine Open WebUI as the WordPress of AI interfaces, with Pipelines being its diverse range of plugins. Join us on this exciting journey! 🌍