[WIP] The all-in-one inference optimization solution for ComfyUI: universal, flexible, and fast.

- Dynamic Caching (First Block Cache)

- Enhanced torch.compile

More to come...

- Multi-GPU Inference (ComfyUI version of ParaAttention's Context Parallelism)

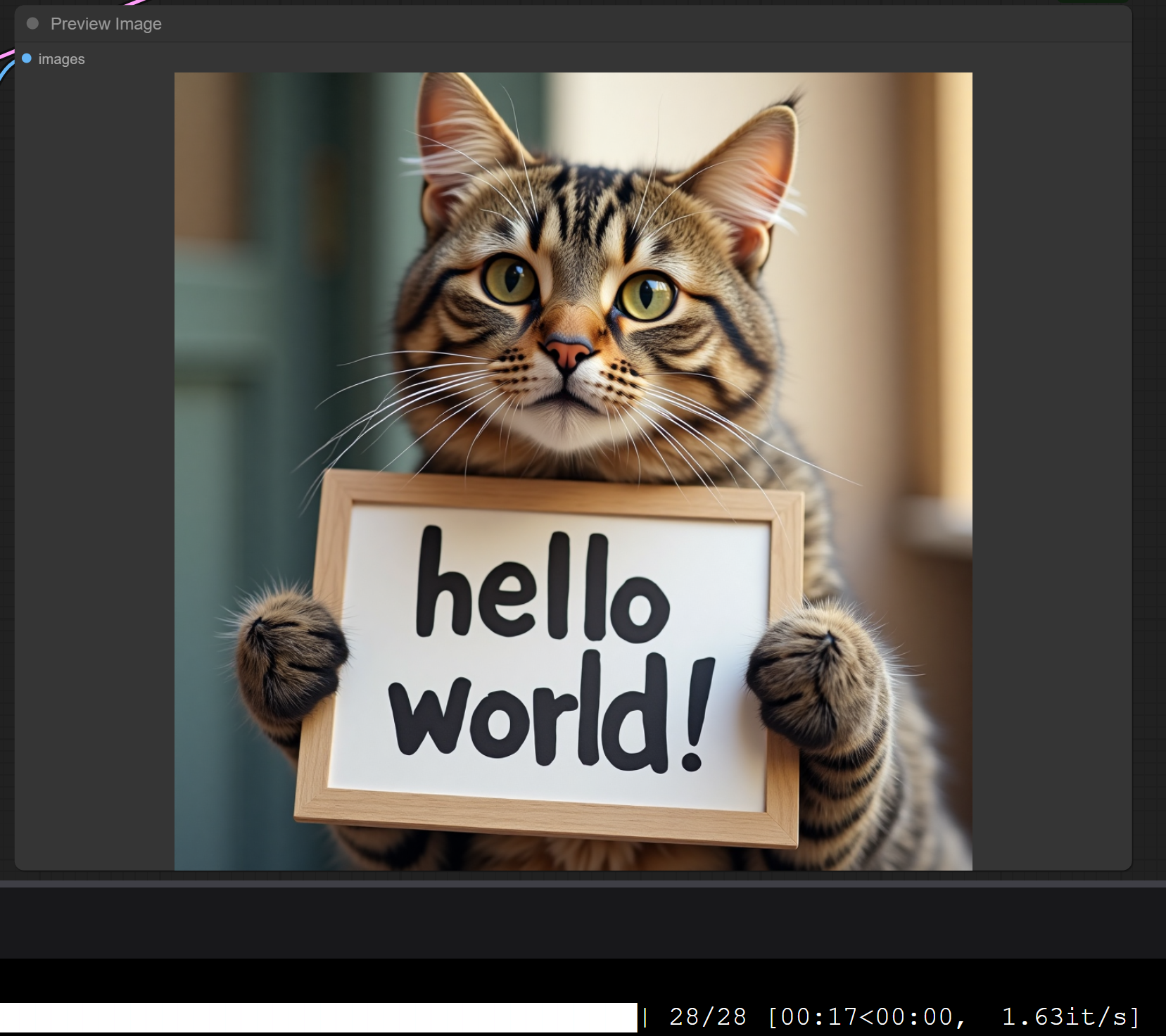

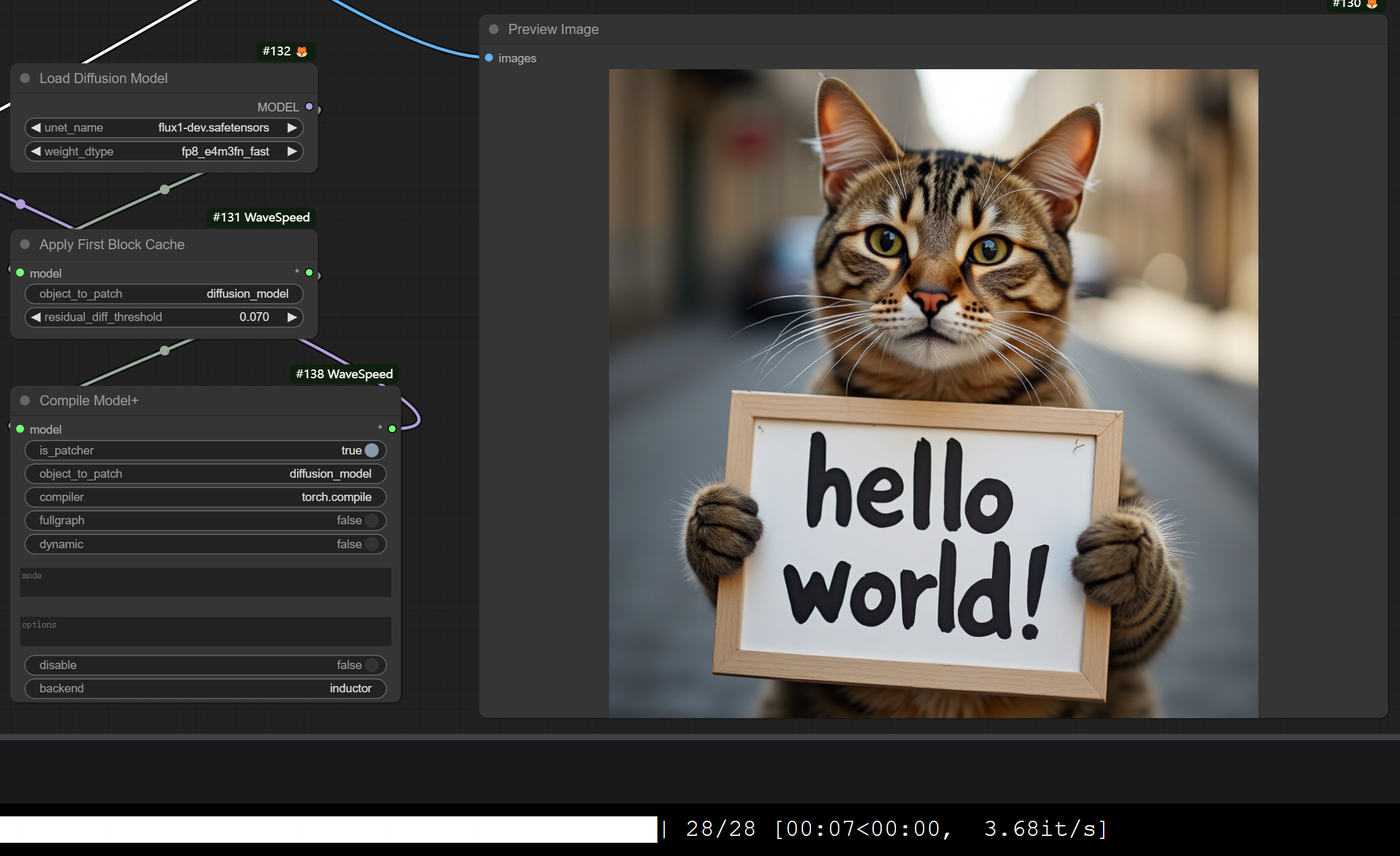

| FLUX.1-dev Original | FLUX.1-dev with First Block Cache and Compilation |
|---|---|
| | |

This project has just launched and is under active development, so please stay tuned. For any request or question, please join the Discord server.

    cd custom_nodes
    git clone https://github.com/chengzeyi/Comfy-WaveSpeed.git

You can find demo workflows in the workflows folder.

Dynamic Caching (First Block Cache)

Inspired by TeaCache and other denoising caching algorithms, we introduce First Block Cache (FBCache) to use the residual output of the first transformer block as the cache indicator. If the difference between the current and the previous residual output of the first transformer block is small enough, we can reuse the previous final residual output and skip the computation of all the following transformer blocks. This can significantly reduce the computation cost of the model, achieving a speedup of up to 2x while maintaining high accuracy.
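The decision rule described above can be illustrated with a minimal, framework-free sketch. This is not the project's implementation: the class name `FirstBlockCacheSketch`, the `relative_diff` metric, and the choice to refresh the reference residual only on a cache miss are all assumptions made for illustration, and plain Python lists stand in for tensors.

```python
from typing import Callable, List, Optional, Sequence

Vector = List[float]
Block = Callable[[Vector], Vector]


def relative_diff(current: Vector, previous: Vector) -> float:
    """Sum of absolute differences, normalised by the magnitude of `previous`."""
    num = sum(abs(c - p) for c, p in zip(current, previous))
    den = sum(abs(p) for p in previous)
    return num / den if den > 0 else float("inf")


class FirstBlockCacheSketch:
    """Hypothetical toy wrapper; `blocks` stand in for transformer blocks."""

    def __init__(self, blocks: Sequence[Block], threshold: float) -> None:
        self.blocks = list(blocks)
        self.threshold = threshold
        self.ref_first_residual: Optional[Vector] = None
        self.cached_tail_residual: Optional[Vector] = None
        self.skipped_steps = 0

    def __call__(self, x: Vector) -> Vector:
        # Always run the first block; its residual is the cache indicator.
        first_out = self.blocks[0](x)
        first_residual = [o - i for o, i in zip(first_out, x)]

        if (
            self.ref_first_residual is not None
            and self.cached_tail_residual is not None
            and relative_diff(first_residual, self.ref_first_residual) < self.threshold
        ):
            # Cache hit: skip the remaining blocks and reuse their stored residual.
            self.skipped_steps += 1
            return [f + r for f, r in zip(first_out, self.cached_tail_residual)]

        # Cache miss: run the remaining blocks and refresh both cached values
        # (assumption: the reference residual is only updated on a miss).
        out = first_out
        for block in self.blocks[1:]:
            out = block(out)
        self.ref_first_residual = first_residual
        self.cached_tail_residual = [o - f for o, f in zip(out, first_out)]
        return out
```

Calling the wrapper once per denoising step means the first step always runs every block, and later steps skip the tail whenever the first-block residual has barely changed. A threshold of 0 never reuses the cache in this sketch, because the comparison is strict.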

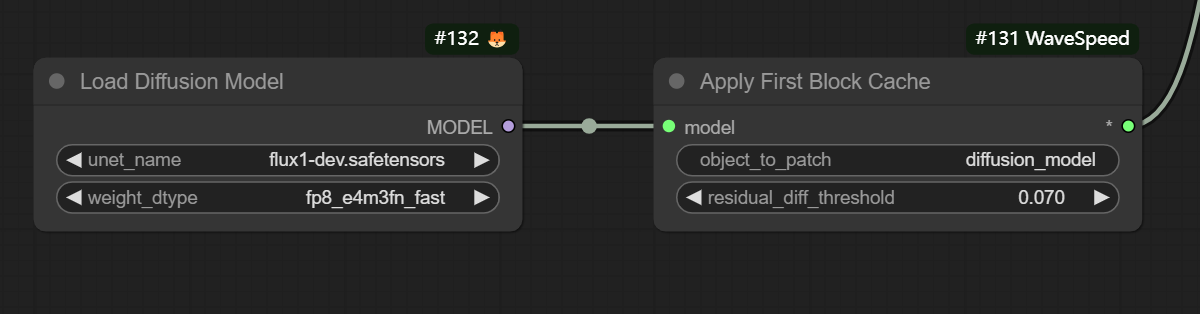

To use First Block Cache, add the wavespeed->Apply First Block Cache node to your workflow after your Load Diffusion Model node and set residual_diff_threshold to a value suitable for your model, for example 0.07 for flux-dev.safetensors with fp8_e4m3fn_fast and 28 steps.

You can expect a speedup of 1.5x to 3.0x with acceptable accuracy loss.
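As a rough way to reason about where such a speedup comes from, the sketch below estimates an idealised upper bound. It assumes every transformer block costs the same and that a cached step runs only the first block; real models violate both assumptions, and overhead outside the transformer blocks is ignored. The function name `estimated_speedup` and the block count used in the example are hypothetical.

```python
def estimated_speedup(total_steps: int, cached_steps: int, num_blocks: int) -> float:
    """Idealised speedup when cached steps run only 1 of `num_blocks` blocks."""
    if num_blocks < 1:
        raise ValueError("num_blocks must be at least 1")
    if not 0 <= cached_steps < total_steps:
        raise ValueError("cached_steps must be in [0, total_steps)")
    full_steps = total_steps - cached_steps
    # Cost is measured in units of one full step (all blocks executed).
    cost = full_steps + cached_steps / num_blocks
    return total_steps / cost


if __name__ == "__main__":
    # Hypothetical example: 28 steps, half of them served from the cache,
    # and a model with 20 equally expensive blocks.
    print(round(estimated_speedup(28, 14, 20), 2))
```

Under this model, the speedup is driven almost entirely by the fraction of steps that hit the cache, which in turn depends on the threshold you choose.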

It supports many models, including FLUX, LTXV, and HunyuanVideo (native). Feel free to try it out and let us know if you run into any issues!

See Apply First Block Cache on FLUX.1-dev for more information and a detailed comparison of quality and speed.

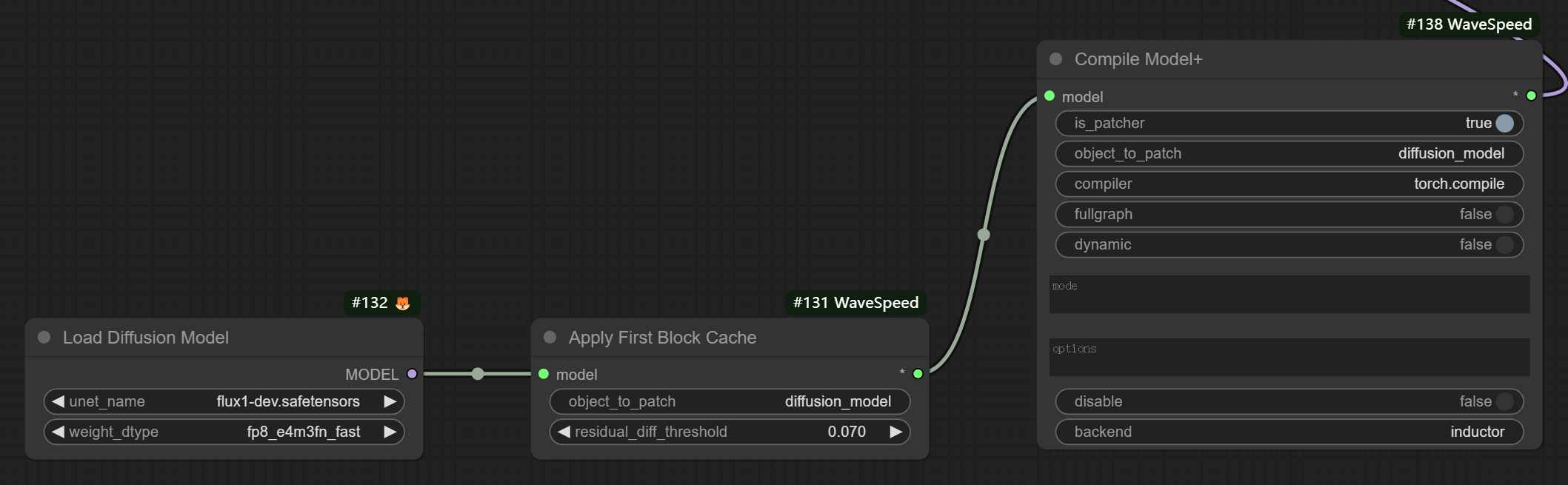

To use the Enhanced torch.compile, simply add the wavespeed->Compile Model+ node to your workflow after your Load Diffusion Model node or Apply First Block Cache node.

Compilation happens the first time you run the workflow and can take quite a long time, but the result is cached for future runs.

You can pass different mode values to make it run faster, for example max-autotune or max-autotune-no-cudagraphs.

NOTE: torch.compile might not work well with model offloading. If you run into problems, try passing --gpu-only when launching ComfyUI to disable model offloading.

Please refer to ParaAttention for more information.