VideoRefer can understand any object you're interested in within a video.

- [2025.1.1] We release the code of VideoRefer and VideoRefer-Bench.

videorefer-demo.mp4

- HD video can be viewed on YouTube.

VideoRefer Suite is designed to enhance the fine-grained spatial-temporal understanding capabilities of Video Large Language Models (Video LLMs). It consists of three primary components:

- Model (VideoRefer)

VideoRefer is an effective Video LLM that enables fine-grained perception, reasoning, and retrieval for user-defined regions at any specified timestamp, supporting both single-frame and multi-frame region inputs.

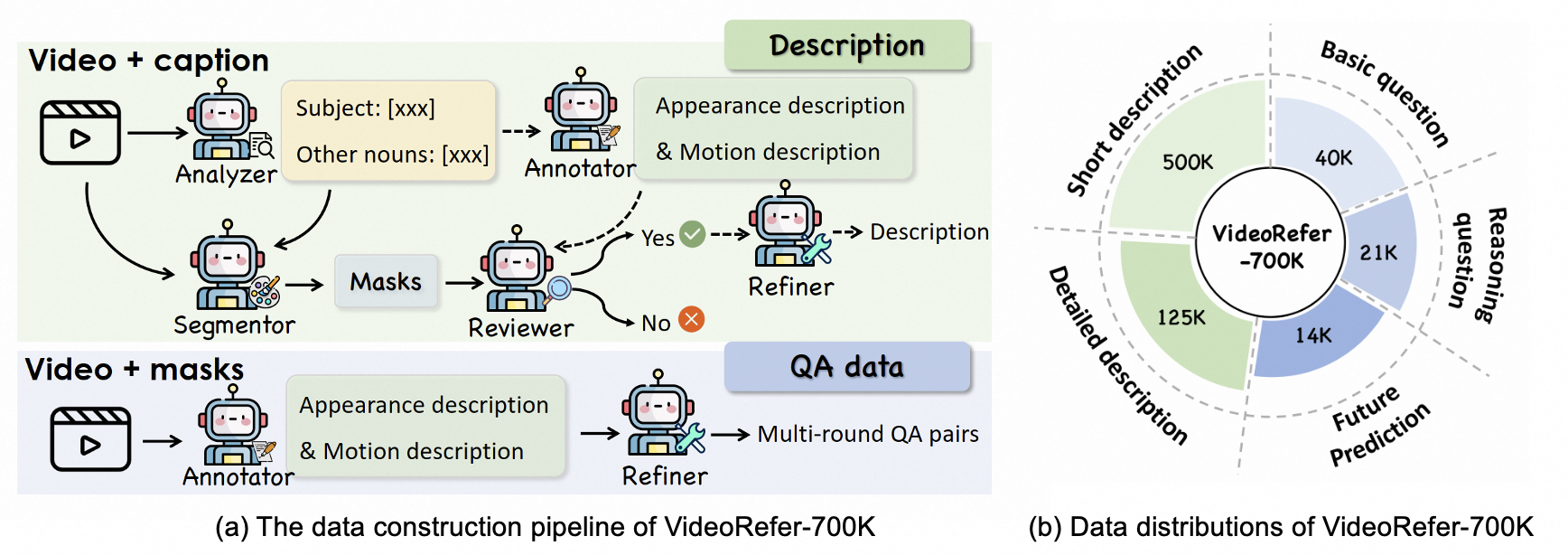
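One way to picture the difference between single-frame and multi-frame region inputs is as a mapping from frame index to a region mask. The sketch below is illustrative only: `RegionPrompt`, its fields, and the nested-list mask format are hypothetical names chosen for this example, not part of the VideoRefer code. The actual input format is shown in infer.ipynb.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# A binary mask written as rows of 0/1; a stand-in for an image-sized array.
Mask = List[List[int]]


@dataclass
class RegionPrompt:
    """Hypothetical container: one object's mask on each annotated frame."""

    masks: Dict[int, Mask] = field(default_factory=dict)

    @property
    def mode(self) -> str:
        """'single-frame' when one frame is annotated, otherwise 'multi-frame'."""
        if not self.masks:
            raise ValueError("at least one frame mask is required")
        return "single-frame" if len(self.masks) == 1 else "multi-frame"

    def frames(self) -> List[int]:
        """Annotated frame indices in temporal order."""
        return sorted(self.masks)


if __name__ == "__main__":
    toy_mask = [[0, 1], [1, 1]]
    print(RegionPrompt({3: toy_mask}).mode)
    print(RegionPrompt({0: toy_mask, 8: toy_mask}).mode)
```

In this picture, a single-frame prompt marks the object once, while a multi-frame prompt marks the same object at several timestamps.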

- Dataset (VideoRefer-700K)

VideoRefer-700K is a large-scale, high-quality object-level video instruction dataset. It is curated using a sophisticated multi-agent data engine to fill the gap in high-quality object-level video instruction data.

- Benchmark (VideoRefer-Bench)

VideoRefer-Bench is a comprehensive benchmark for evaluating a model's object-level video understanding capabilities. It consists of two sub-benchmarks: VideoRefer-Bench-D and VideoRefer-Bench-Q.

Basic Dependencies:

- Python >= 3.8

- PyTorch >= 2.2.0

- CUDA Version >= 11.8

- transformers == 4.40.0 (for reproducing paper results)

- tokenizers == 0.19.1
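Before installing, it can help to check an existing environment against the list above. The following is a minimal sketch, not part of the repository: the helper names are hypothetical, it assumes PyTorch is installed under the distribution name `torch`, and it does not check the CUDA version.

```python
import re
import sys
from importlib import metadata

# Minimums and pins copied from the dependency list above.
MINIMUMS = {"torch": "2.2.0"}
PINS = {"transformers": "4.40.0", "tokenizers": "0.19.1"}


def parse_version(text):
    """Turn '2.2.0+cu118' into (2, 2, 0), ignoring any trailing suffix."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed, minimum):
    """Return True when `installed` is at least `minimum`."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so that '2.2' and '2.2.0' compare as equal.
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_environment():
    """Collect readable problems; an empty list means every check passed."""
    problems = []
    if sys.version_info < (3, 8):
        problems.append("Python is older than 3.8")
    for name, minimum in MINIMUMS.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name} is not installed")
            continue
        if not meets_minimum(found, minimum):
            problems.append(f"{name} {found} is older than {minimum}")
    for name, pinned in PINS.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name} is not installed")
            continue
        if parse_version(found) != parse_version(pinned):
            problems.append(f"{name} {found} differs from pinned {pinned}")
    return problems


if __name__ == "__main__":
    for line in check_environment() or ["environment looks compatible"]:
        print(line)
```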

Install required packages:

git clone https://github.com/DAMO-NLP-SG/VideoRefer

cd VideoRefer

pip install -r requirements.txt

pip install flash-attn==2.5.8 --no-build-isolation

Please refer to the examples in infer.ipynb for detailed instructions on how to use our model for single video inference, which supports both single-frame and multi-frame modes.

For better usage, the demo integrates with SAM2. To get started, please install SAM2 first:

git clone https://github.com/facebookresearch/sam2.git && cd sam2

SAM2_BUILD_CUDA=0 pip install -e ".[notebooks]"

Then, download sam2.1_hiera_large.pt to checkpoints.
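A quick way to confirm the checkpoint landed where expected is sketched below. It assumes `checkpoints` is resolved relative to a root directory you pass in; which directory the demo actually expects is not stated here, so treat the default as a guess. `find_checkpoint` is a hypothetical helper, not part of SAM2 or VideoRefer.

```python
from pathlib import Path

# File name taken from the download step above.
CHECKPOINT_NAME = "sam2.1_hiera_large.pt"


def find_checkpoint(root="."):
    """Return the checkpoint path under <root>/checkpoints, or None if absent."""
    candidate = Path(root) / "checkpoints" / CHECKPOINT_NAME
    return candidate if candidate.is_file() else None


if __name__ == "__main__":
    found = find_checkpoint()
    if found is None:
        print(f"{CHECKPOINT_NAME} not found under ./checkpoints")
    else:
        print(f"using {found}")
```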

The training data and data structure can be found in Dataset preparation.

The training pipeline of our model is structured into four distinct stages.

- Stage1: Image-Text Alignment Pre-training
  - We use the same data as in VideoLLaMA2.1.
  - The pretrained projector weights can be found in VideoLLaMA2.1-7B-16F-Base.

- Stage2: Region-Text Alignment Pre-training
  - Prepare datasets used for stage2.
  - Run `bash scripts/train/stage2.sh`.

- Stage2.5: High-Quality Knowledge Learning
  - Prepare datasets used for stage2.5.
  - Run `bash scripts/train/stage2.5.sh`.

- Stage3: Visual Instruction Tuning
  - Prepare datasets used for stage3.
  - Run `bash scripts/train/stage3.sh`.
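The stages above could be chained with a small driver such as the one sketched here. The script paths come from the list above; the driver itself (`build_commands`, `run_stages`) is hypothetical and not shipped with the repository. Whether each script automatically picks up the previous stage's checkpoint is not stated here, so check the paths inside each script before running the stages back to back.

```python
import subprocess

# Script paths as listed in the stages above. Stage1 has no entry because
# the pretrained projector weights are reused rather than retrained.
STAGE_SCRIPTS = [
    ("stage2", "scripts/train/stage2.sh"),
    ("stage2.5", "scripts/train/stage2.5.sh"),
    ("stage3", "scripts/train/stage3.sh"),
]


def build_commands(start_from="stage2"):
    """Return the bash commands for `start_from` and every later stage."""
    names = [name for name, _ in STAGE_SCRIPTS]
    if start_from not in names:
        raise ValueError(f"unknown stage {start_from!r}; expected one of {names}")
    first = names.index(start_from)
    return [["bash", script] for _, script in STAGE_SCRIPTS[first:]]


def run_stages(start_from="stage2", dry_run=True):
    """Print each command and, unless dry_run is set, execute it in order."""
    for command in build_commands(start_from):
        print(" ".join(command))
        if not dry_run:
            # check=True stops the sequence as soon as a stage fails.
            subprocess.run(command, check=True)


if __name__ == "__main__":
    run_stages(dry_run=True)
```

Running with `dry_run=True` only prints the commands, which is a safe way to confirm the order before launching long training jobs from the repository root.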

For model evaluation, please refer to eval.

| Model Name | Visual Encoder | Language Decoder | # Training Frames |
|---|---|---|---|
| VideoRefer-7B | siglip-so400m-patch14-384 | Qwen2-7B-Instruct | 16 |
| VideoRefer-7B-stage2 | siglip-so400m-patch14-384 | Qwen2-7B-Instruct | 16 |
| VideoRefer-7B-stage2.5 | siglip-so400m-patch14-384 | Qwen2-7B-Instruct | 16 |

VideoRefer-Bench assesses models in two key areas: Description Generation, corresponding to VideoRefer-Bench-D, and Multiple-choice Question-Answer, corresponding to VideoRefer-Bench-Q.

videorefer-bench.mp4

- The annotations of the benchmark can be found in 🤗benchmark.

- The usage of VideoRefer-Bench is detailed in doc.
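For the multiple-choice sub-benchmark, the natural summary metric is accuracy over option letters. The sketch below only illustrates that idea: the list-of-letters input format and the function name are assumptions made for this example, and the official evaluation procedure is the one described in the benchmark doc.

```python
def multiple_choice_accuracy(predictions, answers):
    """Fraction of predicted option letters that match the reference letters.

    Both arguments are sequences of strings such as "A" or "b"; comparison
    ignores case and surrounding whitespace.
    """
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        raise ValueError("at least one question is required")
    correct = sum(
        pred.strip().upper() == ref.strip().upper()
        for pred, ref in zip(predictions, answers)
    )
    return correct / len(answers)


if __name__ == "__main__":
    # Three of the four toy predictions match their references.
    print(multiple_choice_accuracy(["A", "b", "C", "D"], ["A", "B", "D", "D"]))
```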

If you find VideoRefer Suite useful for your research and applications, please cite using this BibTeX:

@article{yuan2025videorefersuite,
  title = {VideoRefer Suite: Advancing Spatial-Temporal Object Understanding with Video LLM},
  author = {Yuqian Yuan and Hang Zhang and Wentong Li and Zesen Cheng and Boqiang Zhang and Long Li and Xin Li and Deli Zhao and Wenqiao Zhang and Yueting Zhuang and Jianke Zhu and Lidong Bing},
  journal = {arXiv},
  year = {2025},
  url = {http://arxiv.org/abs/2501.00599}
}

💡 Some other multimodal-LLM projects from our team may interest you ✨.

Video-LLaMA: An Instruction-tuned Audio-Visual Language Model for Video Understanding

Hang Zhang, Xin Li, Lidong Bing

VideoLLaMA 2: Advancing Spatial-Temporal Modeling and Audio Understanding in Video-LLMs

Zesen Cheng, Sicong Leng, Hang Zhang, Yifei Xin, Xin Li, Guanzheng Chen, Yongxin Zhu, Wenqi Zhang, Ziyang Luo, Deli Zhao, Lidong Bing

Osprey: Pixel Understanding with Visual Instruction Tuning

Yuqian Yuan, Wentong Li, Jian Liu, Dongqi Tang, Xinjie Luo, Chi Qin, Lei Zhang, Jianke Zhu

The codebase of VideoRefer is adapted from VideoLLaMA 2. The visual encoder and language decoder used in VideoRefer are SigLIP and Qwen2, respectively.