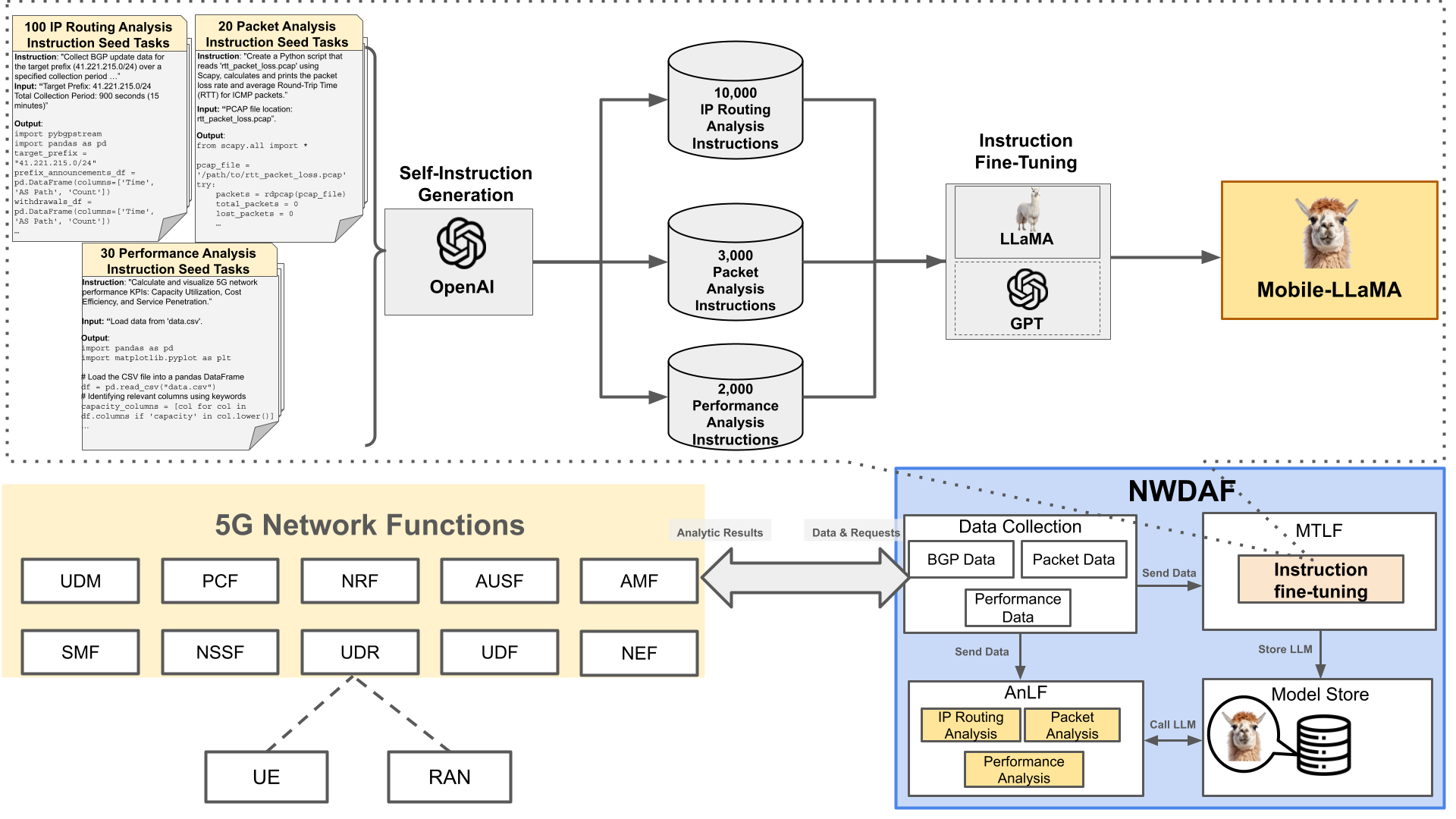

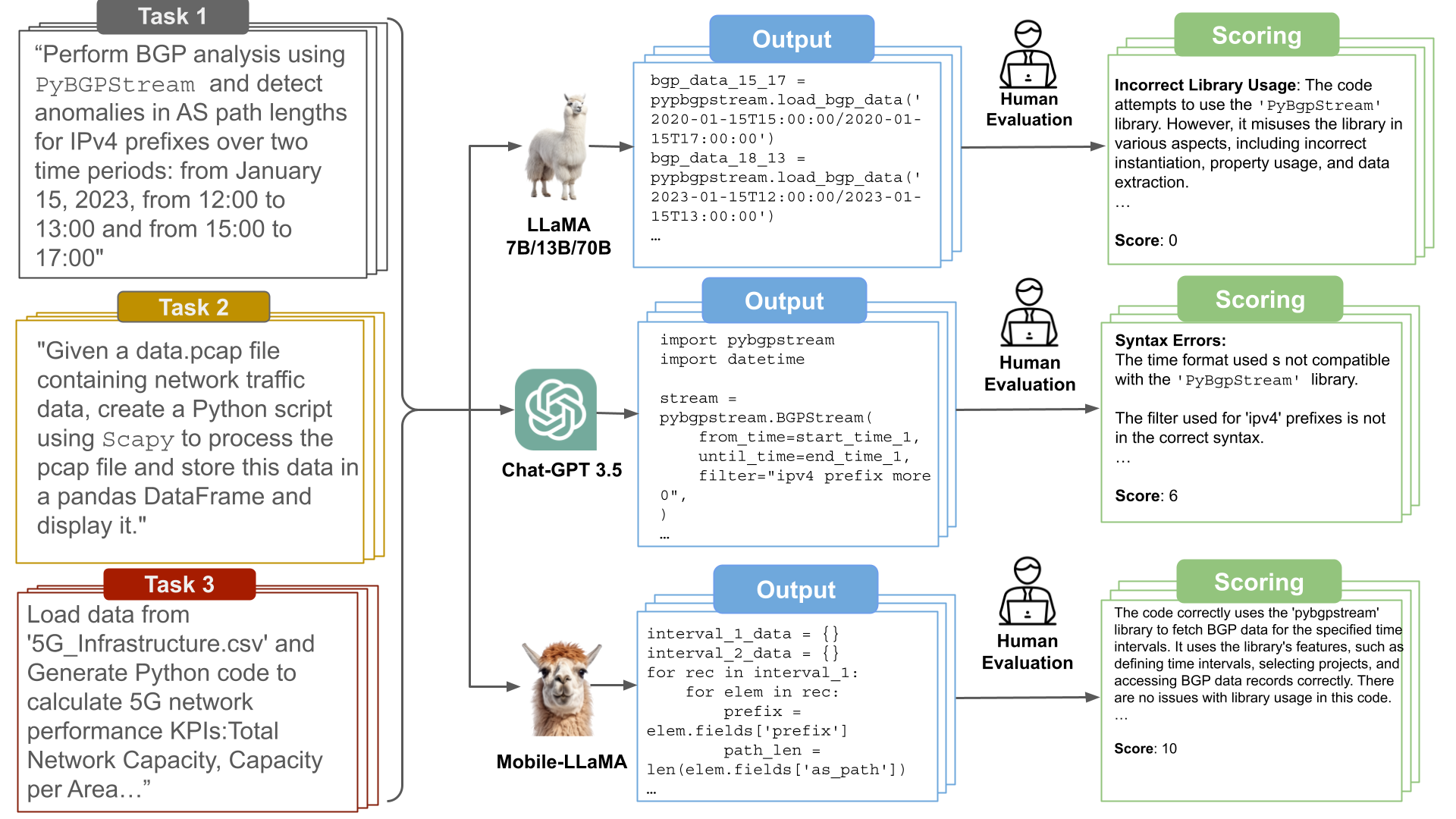

Abstract: In the evolving landscape of 5G networks, the Network Data Analytics Function (NWDAF) has emerged as a key component that interacts with core network elements to enhance data collection, model training, and analytical outcomes. Large Language Models (LLMs), with their state-of-the-art capabilities in natural language processing, have been successful in numerous fields. In particular, LLMs enhanced through instruction fine-tuning, which uses sets of instructions to precisely tailor a model's responses and behavior, have proven effective. However, instruction fine-tuning requires collecting a large pool of high-quality training data that captures precise domain knowledge together with the corresponding code. In this paper, we present Mobile-LLaMA, an open-source LLM specialized for mobile networks and built as an instruction-fine-tuned variant of LLaMA-13B. We built Mobile-LLaMA by instruction fine-tuning LLaMA on our own network analysis data, collected from publicly available, real-world 5G network datasets, and expanded its capabilities through a self-instruct framework that uses OpenAI's pretrained models (PMs). Mobile-LLaMA has three main functions: packet analysis, IP routing analysis, and performance analysis. Together these let it perform network analysis and contribute to the automation and artificial intelligence (AI) required for 5G network management and data analysis. In our evaluation of network analysis code generation, Mobile-LLaMA scored 247 out of 300, surpassing GPT-3.5's score of 209.
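The self-instruct step grows a small set of hand-written instructions into a much larger pool using model-generated candidates. The original Self-Instruct recipe filters out candidates that overlap too heavily (by ROUGE-L) with instructions already in the pool; whether Mobile-LLaMA applies the same filter is not stated here. The sketch below shows only that filtering idea, under stated assumptions: `difflib.SequenceMatcher` over word tokens is swapped in for ROUGE-L, the 0.7 cutoff is assumed, and `is_novel` / `expand_pool` are hypothetical helpers. The generated candidates are hard-coded rather than requested from OpenAI's models.

```python
from difflib import SequenceMatcher


def is_novel(candidate: str, pool: list[str], threshold: float = 0.7) -> bool:
    """Return True if `candidate` is not too similar to any instruction in `pool`.

    SequenceMatcher.ratio() over word tokens stands in for ROUGE-L here,
    and the 0.7 cutoff is an assumed value.
    """
    cand_tokens = candidate.lower().split()
    for existing in pool:
        score = SequenceMatcher(None, cand_tokens, existing.lower().split()).ratio()
        if score >= threshold:
            return False
    return True


def expand_pool(seed: list[str], generated: list[str], threshold: float = 0.7) -> list[str]:
    """Return a copy of `seed` extended with the generated instructions that pass the novelty filter."""
    pool = list(seed)
    for instruction in generated:
        # Compare against everything accepted so far, including earlier generated items.
        if is_novel(instruction, pool, threshold):
            pool.append(instruction)
    return pool


seed = ["Calculate the RTT of TCP packets in a pcap file using Scapy."]
generated = [
    "Calculate the RTT of TCP packets in a pcap file with Scapy.",  # near-duplicate
    "Detect BGP path changes for a prefix using PyBGPStream.",      # novel
]
pool = expand_pool(seed, generated)
print(len(pool))  # 2
```

The near-duplicate shares 11 of its 12 tokens with the seed and is dropped, while the BGP instruction is kept. Checking each candidate against the growing pool, not just the seed set, also keeps generated instructions from duplicating one another.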

- `self_instruct_data`: Three subdirectories, one for each of Mobile-LLaMA's main functions (Packet Analysis, IP Routing Analysis, and Performance Analysis), each containing the instructions generated for that function via the self-instruct framework.
- `training_data`: The main training data for Mobile-LLaMA, combining all instructions used in training (15,111 instruction sets in total).
- `evaluation`: Three JSON files, one per function, containing the instructions used for performance evaluation.
- `finetuning`: Python scripts and Jupyter notebooks for instruction fine-tuning LLaMA-13B.
- `Mobile-LLaMA_demo.ipynb`: A Jupyter notebook that loads Mobile-LLaMA from Hugging Face for demonstration and evaluation. Use it to generate and evaluate code for various network analysis tasks.
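The files in `training_data` hold the instruction sets used for fine-tuning. The exact record layout is not documented here, so the sketch below assumes an Alpaca-style JSON array whose records have `instruction`, `input`, and `output` string fields, and renders each record with an assumed `### Instruction / ### Input / ### Response` template. Both the schema and the helpers `load_instructions` and `to_prompt` are hypothetical; check the actual files before reusing this.

```python
import json

# Hypothetical Alpaca-style schema; the real files in training_data/ may use different keys.
REQUIRED_KEYS = ("instruction", "input", "output")


def load_instructions(text: str) -> list[dict]:
    """Parse a JSON array of instruction records, keeping only records with all required string fields."""
    records = json.loads(text)
    return [r for r in records if all(isinstance(r.get(k), str) for k in REQUIRED_KEYS)]


def to_prompt(record: dict) -> str:
    """Render one record as a single training string (the section headers are an assumed template)."""
    prompt = f"### Instruction:\n{record['instruction']}\n\n"
    if record["input"]:
        prompt += f"### Input:\n{record['input']}\n\n"
    return prompt + f"### Response:\n{record['output']}"


sample = json.dumps([
    {
        "instruction": "Count the packets in capture.pcap using Scapy.",
        "input": "",
        "output": "from scapy.all import rdpcap\nprint(len(rdpcap('capture.pcap')))",
    },
    {"instruction": "A malformed record with no input or output."},
])
records = load_instructions(sample)
print(len(records))  # 1
print(to_prompt(records[0]))
```

Dropping malformed records before formatting keeps a single bad entry from breaking a fine-tuning run. The `### Input:` section is omitted when the input is empty, which is common for code-generation instructions.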

Clone this repository and navigate to its folder:

git clone https://github.com/DNLab2024/Mobile-LLaMA.git

cd Mobile-LLaMA

Install the required packages (Python >= 3.9):

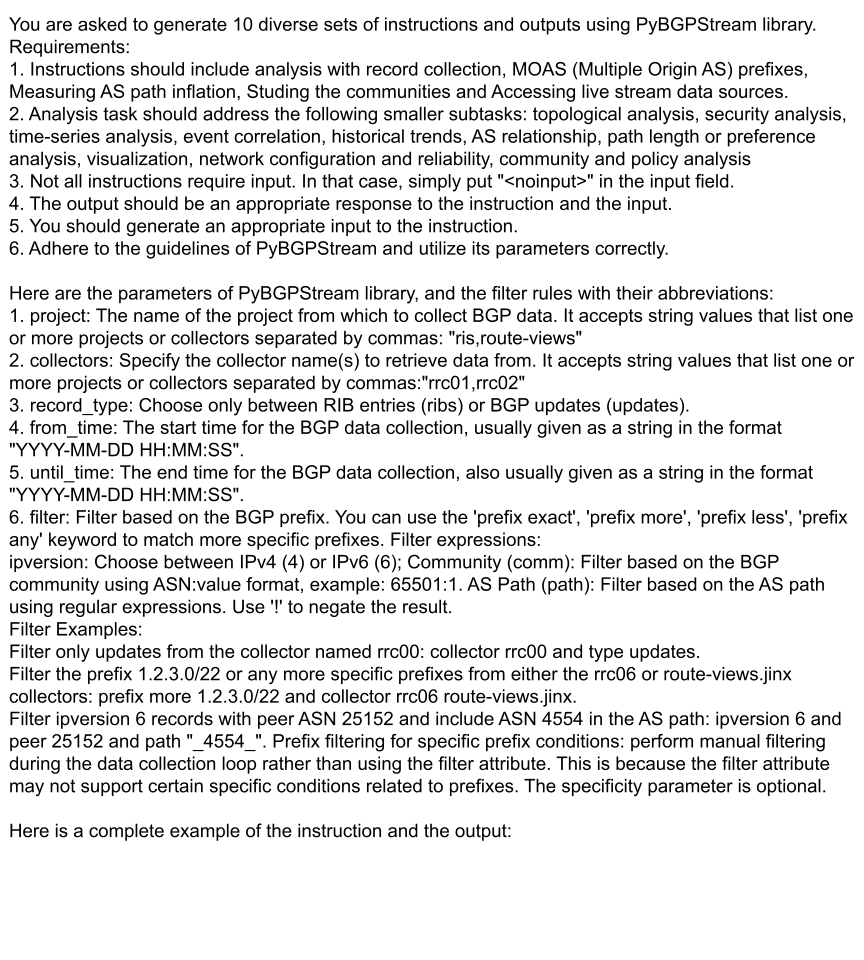

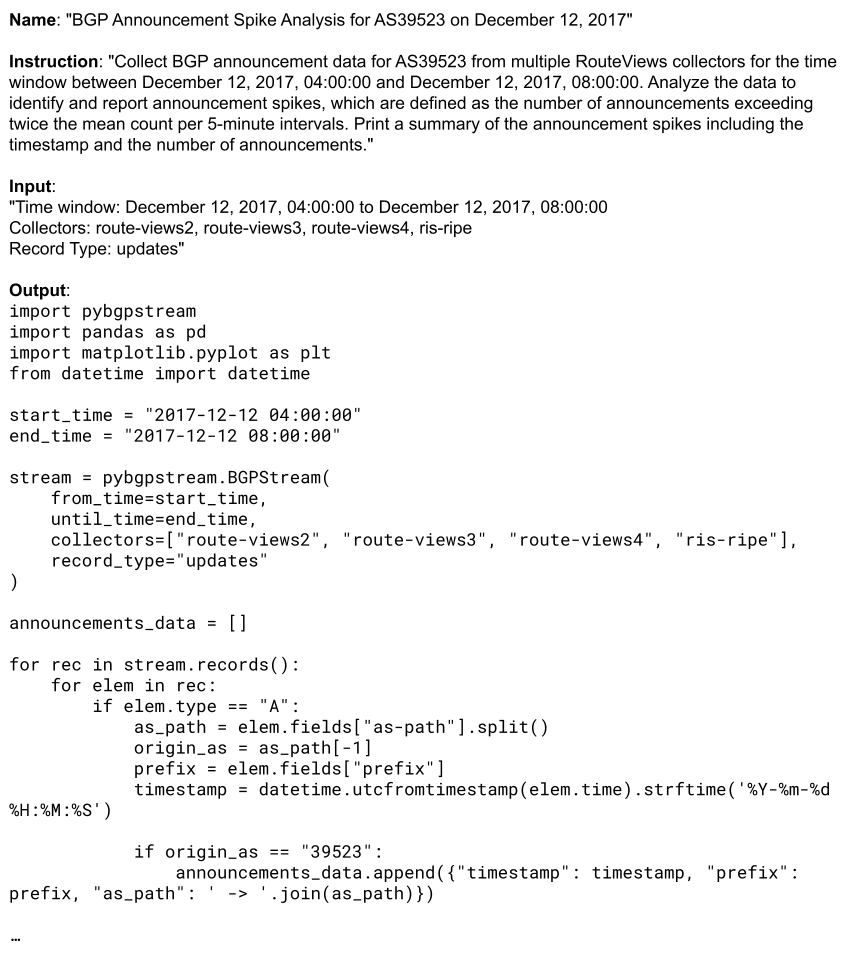

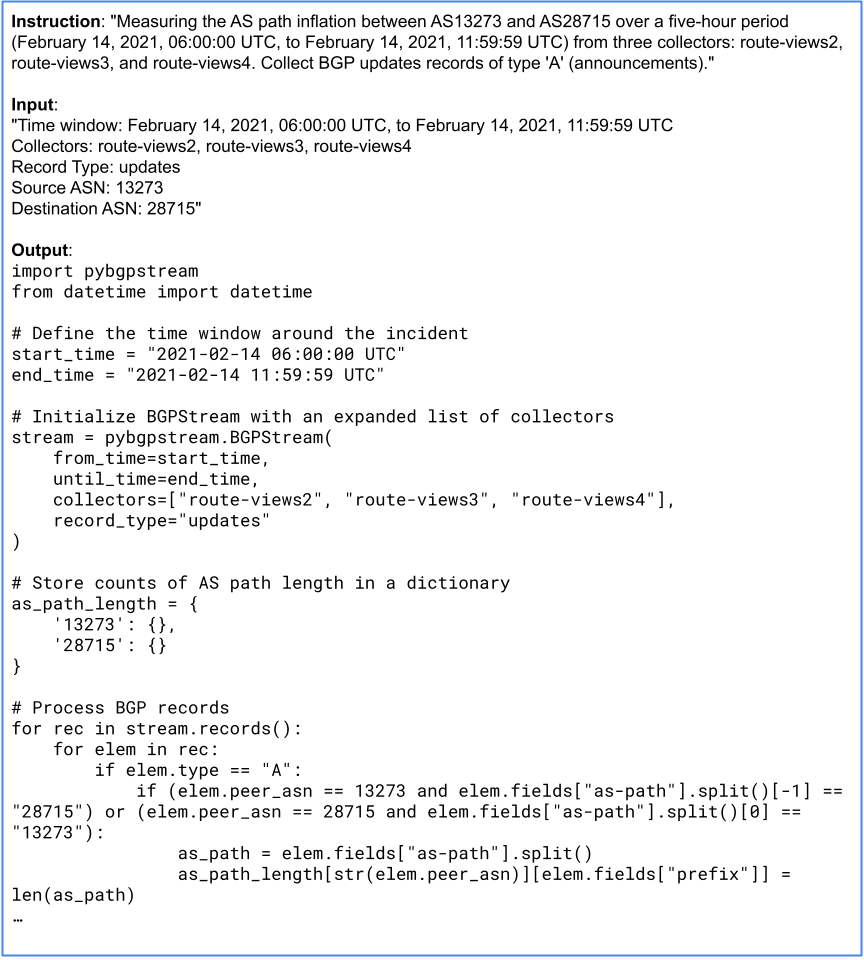

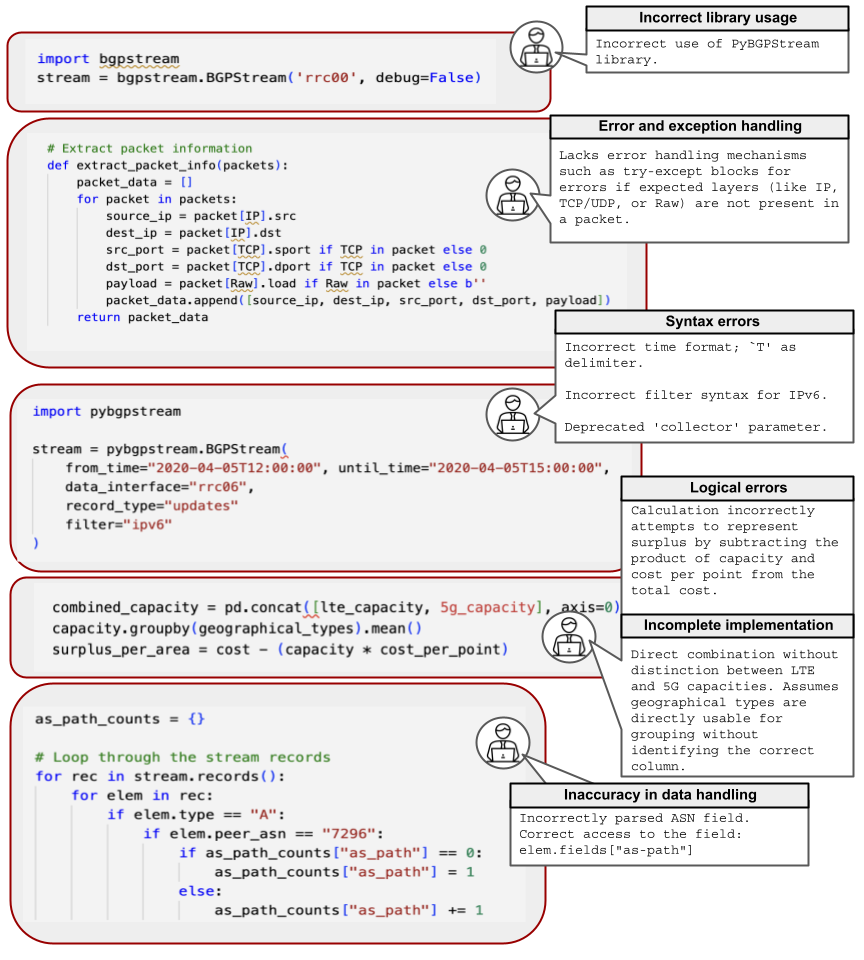

pip install -r requirements.txt

| Function of Mobile-LLaMA | Analysis Tasks | Library | Manual Instruction Tasks | Self-Instruct Generated Instructions |
|---|---|---|---|---|
| Packet analysis | Parsing IP packets, data structuring, RTT calculation, packet size examination, performance assessment, QoS assessment | Scapy | 20 | 2000 |
| IP routing analysis | BGP route anomalies, BGP path changes | PyBGPStream | 100 | 10000 |
| Performance analysis | Per-user capacity enhancement, 5G investment cost analysis, network quality evaluation, UE traffic anomaly detection, jitter & CQI benchmarking | Pandas, Matplotlib | 30 | 3000 |
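RTT calculation is one of the packet analysis tasks, which Mobile-LLaMA targets with Scapy. To stay self-contained, the sketch below does not import Scapy. Instead, it uses a hypothetical `Pkt` tuple holding the fields such code would read from each packet, roughly `pkt.time`, `pkt[IP].src`/`dst`, `pkt[TCP].sport`/`dport`, and `pkt[TCP].flags`. RTT is estimated as the delay between a client's SYN and the matching SYN-ACK. This is one common approach, assumed here rather than taken from Mobile-LLaMA's instructions.

```python
from typing import NamedTuple


class Pkt(NamedTuple):
    """Hypothetical stand-in for the fields read from a Scapy TCP packet."""
    time: float   # capture timestamp in seconds
    src: str
    dst: str
    sport: int
    dport: int
    flags: str    # "S" for SYN, "SA" for SYN-ACK


def handshake_rtts(packets: list[Pkt]) -> dict[tuple, float]:
    """Map each connection (client IP, client port, server IP, server port) to its SYN -> SYN-ACK delay."""
    syn_times: dict[tuple, float] = {}
    rtts: dict[tuple, float] = {}
    for p in sorted(packets, key=lambda pkt: pkt.time):
        if p.flags == "S":
            # A retransmitted SYN overwrites the earlier timestamp.
            syn_times[(p.src, p.sport, p.dst, p.dport)] = p.time
        elif p.flags == "SA":
            key = (p.dst, p.dport, p.src, p.sport)  # reverse the SYN-ACK direction
            if key in syn_times and key not in rtts:  # ignore duplicate SYN-ACKs
                rtts[key] = p.time - syn_times[key]
    return rtts


capture = [
    Pkt(0.000, "10.0.0.1", "10.0.0.2", 40000, 80, "S"),
    Pkt(0.025, "10.0.0.2", "10.0.0.1", 80, 40000, "SA"),
    Pkt(0.030, "10.0.0.1", "10.0.0.3", 40001, 443, "S"),  # no reply captured
]
print(handshake_rtts(capture))  # {('10.0.0.1', 40000, '10.0.0.2', 80): 0.025}
```

Connections without a captured SYN-ACK are simply absent from the result rather than reported with a placeholder value.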
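For IP routing analysis, BGP path changes can be detected by remembering the last AS path each peer announced for each prefix. In PyBGPStream, these values would typically come from announcement elements (a timestamp, the peer ASN, and the prefix and AS path fields). The sketch below assumes they have already been extracted into plain `(timestamp, peer_asn, prefix, as_path)` tuples. The helpers `path_changes` and `origin_asn`, and the choice to flag origin-AS changes as possible anomalies, are illustrative assumptions.

```python
def origin_asn(as_path: str) -> str:
    """Last ASN in a space-separated AS path (the origin), or "" for an empty path."""
    hops = as_path.split()
    return hops[-1] if hops else ""


def path_changes(updates: list[tuple[int, int, str, str]]) -> list[dict]:
    """Report every change in the AS path a peer announces for a prefix.

    Each update is (timestamp, peer_asn, prefix, as_path). A change of origin AS
    is flagged separately, since it can indicate a hijack or misconfiguration.
    """
    last_path: dict[tuple[int, str], str] = {}
    changes = []
    for ts, peer, prefix, as_path in sorted(updates):
        key = (peer, prefix)
        old = last_path.get(key)
        if old is not None and old != as_path:
            changes.append({
                "time": ts,
                "peer": peer,
                "prefix": prefix,
                "old_path": old,
                "new_path": as_path,
                "origin_changed": origin_asn(old) != origin_asn(as_path),
            })
        last_path[key] = as_path
    return changes


updates = [
    (100, 65001, "203.0.113.0/24", "65001 64500 64510"),
    (130, 65002, "198.51.100.0/24", "65002 64502"),
    (160, 65001, "203.0.113.0/24", "65001 64501 64510"),  # transit change, same origin
    (220, 65001, "203.0.113.0/24", "65001 64511"),        # origin change
]
for change in path_changes(updates):
    print(change["time"], change["origin_changed"])
```

This prints `160 False` and then `220 True`. Keying the state on `(peer, prefix)` avoids reporting a change just because two different peers see different paths to the same prefix.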
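Jitter benchmarking appears among the performance analysis tasks. One standard definition is the RTP interarrival jitter from RFC 3550: for consecutive packets with send times $S$ and receive times $R$, $D = (R_i - R_{i-1}) - (S_i - S_{i-1})$, and the estimate is updated as $J \leftarrow J + (|D| - J)/16$. The sketch below implements that formula on plain lists instead of the Pandas data the project uses. Whether Mobile-LLaMA's benchmarking tasks use this exact definition is an assumption, and `interarrival_jitter` is a hypothetical helper.

```python
def interarrival_jitter(send_times: list[float], recv_times: list[float]) -> float:
    """RFC 3550 interarrival jitter estimate, in the same units as the timestamps.

    D = (R_i - R_{i-1}) - (S_i - S_{i-1}) is the change in one-way transit time
    between consecutive packets; J is smoothed with gain 1/16 as in RFC 3550.
    """
    if len(send_times) != len(recv_times):
        raise ValueError("send_times and recv_times must have the same length")
    jitter = 0.0
    for i in range(1, len(send_times)):
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter


# Packets sent every 20 ms whose transit time alternates between 100 ms and 105 ms.
sent = [0.0, 20.0, 40.0, 60.0]
received = [100.0, 125.0, 140.0, 165.0]
print(interarrival_jitter(sent, received))  # 0.880126953125
```

The 1/16 gain makes the estimate a running, noise-damped average. With only three transit-time deltas of 5 ms it is still far below 5 ms, so short captures understate jitter under this definition.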