- Simplified GPT2 training scripts (based on Grover, supporting TPUs)

- Ported BERT tokenizer, compatible with multilingual corpora

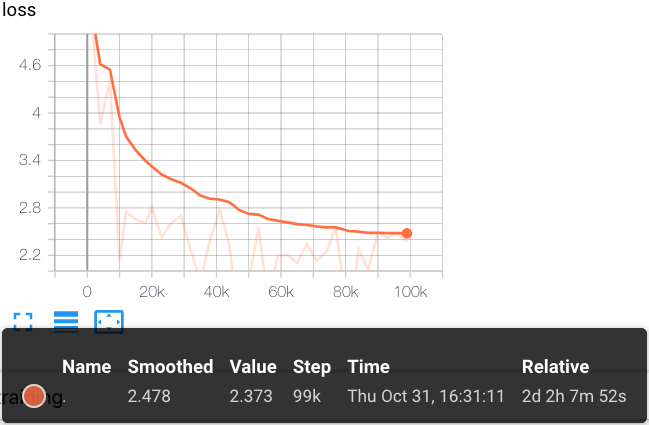

- 1.5B GPT2 pretrained Chinese model (~15G corpus, 100k steps)

- Batteries-included Colab demo

- 1.5B GPT2 pretrained Chinese model (~30G corpus, 220k steps)

| Size | Language | Corpus | Vocab | Link | SHA256 |
|---|---|---|---|---|---|
| 1.5B parameters | Chinese | ~30G | CLUE (8021 tokens) | Google Drive | e698cc97a7f5f706f84f58bb469d614e51d3c0ce5f9ab9bf77e01e3fcb41d482 |
| 1.5B parameters | Chinese | ~15G | BERT (21128 tokens) | Google Drive | 4a6e5124df8db7ac2bdd902e6191b807a6983a7f5d09fb10ce011f9a073b183e |
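A downloaded model can be checked against the SHA256 column of the table (each digest is 64 hex characters, even if it is displayed in two halves). A minimal sketch, assuming GNU coreutils' `sha256sum` is available (on macOS, `shasum -a 256` produces the same digest). `verify_sha256` is a hypothetical helper, and the table does not say which downloaded file each digest covers, so the file name in the usage line is a placeholder.

```bash
#!/usr/bin/env bash
# verify_sha256 <file> <expected-hex-digest>
# Hypothetical helper: compares a file's SHA256 digest with an expected value.
verify_sha256() {
  local file="$1" expected="$2" actual
  # sha256sum prints "<digest>  <file>"; keep only the digest field.
  actual="$(sha256sum "$file" | awk '{print $1}')"
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file (got $actual)" >&2
    return 1
  fi
}
```

For example: `verify_sha256 <DOWNLOADED-FILE> e698cc97a7f5f706f84f58bb469d614e51d3c0ce5f9ab9bf77e01e3fcb41d482`.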

The corpus is from THUCNews and nlp_chinese_corpus.

Trained for 220k steps on a Cloud TPU Pod v3-256.

With just two clicks (not including the Colab authentication process), the 1.5B pretrained Chinese model demo is ready to go.

The following commands will incur significant costs on Google Cloud!

Set the zone to europe-west4-a (the only zone with big TPUs):

```bash
gcloud config set compute/zone europe-west4-a
```

Create a Storage Bucket:

```bash
gsutil mb -p <PROJECT-ID> -c standard gs://<BUCKET-NAME>
```
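Since the `<PROJECT-ID>` and `<BUCKET-NAME>` placeholders recur in every later command, it can help to set them once as shell variables and sanity-check the bucket name before running `gsutil mb`. A minimal sketch: the variable names `PROJECT_ID` and `BUCKET_NAME` and the helper `check_bucket_name` are hypothetical, and the check covers only the basic Cloud Storage naming rules (3-63 characters; lowercase letters, digits, dashes, underscores and dots; starting and ending with a letter or digit). It skips others, such as the longer limit for dotted names and the ban on names starting with "goog".

```bash
#!/usr/bin/env bash
# Hypothetical placeholders: replace with your own values.
PROJECT_ID="my-project-id"
BUCKET_NAME="my-gpt2-bucket"

# Simplified check of basic bucket naming rules (not the full rule set).
check_bucket_name() {
  local re='^[[:lower:][:digit:]][[:lower:][:digit:]._-]{1,61}[[:lower:][:digit:]]$'
  [[ "$1" =~ $re ]]
}

# Print the command instead of running it, so nothing is billed by accident.
if check_bucket_name "$BUCKET_NAME"; then
  echo "gsutil mb -p $PROJECT_ID -c standard gs://$BUCKET_NAME"
else
  echo "invalid bucket name: $BUCKET_NAME" >&2
fi
```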

Upload the dataset to the bucket by running the following command on your local computer:

```bash
gsutil cp -r ./dataset gs://<BUCKET-NAME>/
```

Create a TPU and a VM. Here we use the small v2-8 TPU as a safeguard; you will need bigger TPUs to train the models.

```bash
ctpu up --tf-version=2.1 --project=<PROJECT-ID> --tpu-size=v2-8 --preemptible
```

Once logged in to the new VM, install TensorFlow 1.15.2:

```bash
pip3 install tensorflow==1.15.2
```

Clone the gpt2-ml repository from DeepESP:

```bash
git clone https://github.com/DeepESP/gpt2-ml.git
```

Edit the training script and set OUTPUT_DIR to gs://<BUCKET-NAME>/output and input_file to gs://<BUCKET-NAME>/dataset:

```bash
cd gpt2-ml/train/
nano train_tpu_adafactor.sh
```
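As an alternative to editing the script interactively in nano, the two settings can be rewritten with `sed`. A minimal sketch, assuming each setting appears in `train_tpu_adafactor.sh` as a `NAME=value` assignment at the start of a line (the exact layout of the script is an assumption here, so check it first) and GNU sed, as on the Cloud VM. `set_script_var` and `BUCKET_NAME` are hypothetical.

```bash
#!/usr/bin/env bash
# set_script_var <script> <name> <value>
# Hypothetical helper: rewrites a line "NAME=anything" to "NAME=value", in place.
# Assumes the assignment starts at the beginning of a line.
set_script_var() {
  local script="$1" name="$2" value="$3"
  # '|' is the sed delimiter because the values contain '/' (gs:// paths).
  sed -i "s|^${name}=.*|${name}=${value}|" "$script"
}

BUCKET_NAME="my-gpt2-bucket"  # hypothetical; use your own bucket
if [ -f train_tpu_adafactor.sh ]; then
  set_script_var train_tpu_adafactor.sh OUTPUT_DIR "gs://${BUCKET_NAME}/output"
  set_script_var train_tpu_adafactor.sh input_file "gs://${BUCKET_NAME}/dataset"
fi
```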

Run the training script:

```bash
bash train_tpu_adafactor.sh
```

Monitor training with TensorBoard from your local computer:

```bash
tensorboard --logdir gs://<BUCKET-NAME>/output --reload_interval 60
```

Note to self: track loss, accuracy, and perplexity. Accuracy should get close to 40%.
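Perplexity is the exponential of the mean per-token cross-entropy loss, so the loss and perplexity curves carry the same information. A minimal sketch, assuming the logged loss is the mean cross-entropy in nats (natural log) per token; `loss_to_ppl` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# loss_to_ppl <loss>
# Hypothetical helper: perplexity = exp(loss), assuming loss is mean cross-entropy in nats/token.
loss_to_ppl() {
  awk -v loss="$1" 'BEGIN { printf "%.2f\n", exp(loss) }'
}

loss_to_ppl 3.0
```

Under that assumption, a loss of 3.0 corresponds to a perplexity of about 20.09.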

Trick for faster fine-tuning: keep the same embedding vectors for tokens shared between the Spanish and English vocabularies.
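The first step of this trick is finding which tokens appear in both vocabularies, and at which positions, so their embedding rows can be copied from the old model into the new one. A minimal sketch, assuming BERT-style vocabulary files with one token per line, where a token's 0-based line number is its id. `shared_tokens` is a hypothetical helper, and the actual copying of embedding rows is not shown.

```bash
#!/usr/bin/env bash
# shared_tokens <old_vocab> <new_vocab>
# Hypothetical helper: prints "token old_id new_id" for every token in both files.
# Ids are 0-based line numbers (BERT-style vocab.txt layout).
shared_tokens() {
  awk '
    NR == FNR { old[$0] = FNR - 1; next }        # first file: remember old ids
    ($0 in old) { print $0, old[$0], FNR - 1 }   # second file: emit shared tokens
  ' "$1" "$2"
}
```

Each output line says which row of the old embedding matrix can initialize which row of the new one; tokens absent from the old vocabulary keep their fresh initialization.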

To download the checkpoints, run the following command on your local computer:

```bash
gsutil cp -r gs://<BUCKET-NAME>/output ./
```

This downloads all checkpoints; you might want to download only the last one.
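To fetch only the most recent checkpoint, first find the highest step among the saved files. A minimal sketch, assuming TensorFlow's default `model.ckpt-<step>.*` file naming in the output directory; `latest_step` is a hypothetical helper that reads object names (for example, the output of `gsutil ls gs://<BUCKET-NAME>/output/`) on standard input.

```bash
#!/usr/bin/env bash
# latest_step
# Hypothetical helper: reads checkpoint object names on stdin and prints the
# highest <step> found in names of the form .../model.ckpt-<step>.<suffix>.
latest_step() {
  grep -o 'model\.ckpt-[0-9][0-9]*\.' \
    | sed 's/model\.ckpt-\([0-9]*\)\./\1/' \
    | sort -n \
    | tail -n 1
}
```

With the step known, `gsutil cp "gs://<BUCKET-NAME>/output/model.ckpt-${step}.*" ./` copies just that checkpoint's files (quoting lets gsutil, not the local shell, expand the wildcard). The small `checkpoint` file in the same directory may also be needed for TensorFlow to locate the latest checkpoint automatically.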

The contents of this repository are for academic research purposes, and we do not provide any conclusive remarks.

```
@misc{GPT2-ML,
  author = {Zhibo Zhang},
  title = {GPT2-ML: GPT-2 for Multiple Languages},
  year = {2019},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/DeepESP/gpt2-ml}},
}
```

https://github.com/google-research/bert

https://github.com/rowanz/grover

Research supported with Cloud TPUs from Google's TensorFlow Research Cloud (TFRC)