This repository contains the environments in our paper:

SECANT: Self-Expert Cloning for Zero-Shot Generalization of Visual Policies

ICML 2021

Linxi "Jim" Fan, Guanzhi Wang, De-An Huang, Zhiding Yu, Li Fei-Fei, Yuke Zhu, Animashree Anandkumar

Quick Links: [Project Website] [Arxiv] [Demo Video] [ICML Page]

Generalization has been a long-standing challenge for reinforcement learning (RL). Visual RL, in particular, can be easily distracted by irrelevant factors in high-dimensional observation space.

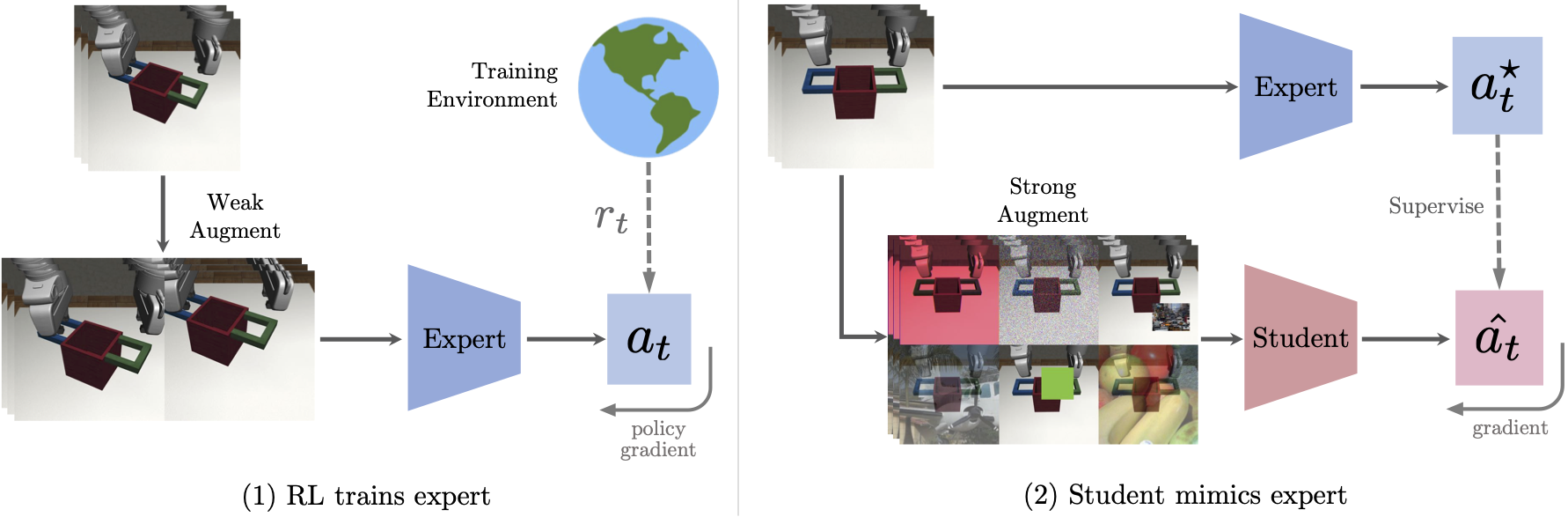

In this work, we consider robust policy learning which targets zero-shot generalization to unseen visual environments with large distributional shift. We propose SECANT, a novel self-expert cloning technique that leverages image augmentation in two stages to decouple robust representation learning from policy optimization.
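To make the idea of image augmentation more concrete, here is a minimal NumPy sketch of one mild and one aggressive transform that are common in visual RL. The first is a random shift: pad the image, then crop it back at a random offset. The second is a random convolution: a randomly initialized 3x3 filter that mixes color channels. These particular transforms, the 4-pixel padding, and the names `random_shift` and `random_conv` are illustrative assumptions. They are not necessarily the augmentations SECANT uses.

```python
import numpy as np

def random_shift(img, pad=4, rng=None):
    """Mild augmentation (assumed): replicate-pad an (H, W, C) image, then crop back at a random offset."""
    if rng is None:
        rng = np.random.default_rng()
    h, w, _ = img.shape
    padded = np.pad(img, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    top, left = rng.integers(0, 2 * pad + 1, size=2)
    return padded[top:top + h, left:left + w]

def random_conv(img, rng=None):
    """Aggressive augmentation (assumed): filter an (H, W, C) image with a random 3x3 kernel mixing channels."""
    if rng is None:
        rng = np.random.default_rng()
    h, w, c = img.shape
    # Random kernel of shape (3, 3, C_in, C_out), scaled to roughly preserve variance.
    kernel = rng.normal(0.0, 1.0 / np.sqrt(9 * c), size=(3, 3, c, c))
    padded = np.pad(img.astype(np.float64), ((1, 1), (1, 1), (0, 0)), mode="edge")
    out = np.zeros((h, w, c))
    for dy in range(3):
        for dx in range(3):
            # Add the contribution of kernel tap (dy, dx): (H, W, C_in) @ (C_in, C_out).
            out += padded[dy:dy + h, dx:dx + w] @ kernel[dy, dx]
    return out
```

Applied to the same observation, `random_shift` only moves content by a few pixels. In contrast, `random_conv` can change colors and textures drastically.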

Specifically, an expert policy is first trained by RL from scratch with weak augmentations. A student network then learns to mimic the expert policy by supervised learning with strong augmentations, making its representation more robust against visual variations compared to the expert.
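The two-stage recipe can be illustrated with a toy NumPy sketch. It is not the SECANT implementation. The sketch makes several simplifying assumptions:

- Policies are linear, and observations are feature vectors rather than pixels.
- The last four features stand in for a background that never changes during training.
- The augmentations and the mean-squared-error cloning loss are hypothetical choices made for illustration.
- The "expert" is simply drawn at random and treated as if it were already trained by RL.

```python
import numpy as np

rng = np.random.default_rng(0)
OBS_DIM, ACT_DIM, TASK_DIM = 8, 2, 4  # features [0, TASK_DIM) are task-relevant

def sample_train_obs(n):
    # Training environment: the distractor features (a stand-in for the
    # background) are always zero, so the expert never sees them vary.
    obs = np.zeros((n, OBS_DIM))
    obs[:, :TASK_DIM] = rng.standard_normal((n, TASK_DIM))
    return obs

def weak_augment(obs):
    # Hypothetical weak augmentation: small additive noise on every feature.
    return obs + 0.01 * rng.standard_normal(obs.shape)

def strong_augment(obs):
    # Hypothetical strong augmentation: large random values added to the
    # distractor features, mimicking aggressive visual perturbations.
    out = obs.copy()
    out[:, TASK_DIM:] += 3.0 * rng.standard_normal((len(obs), OBS_DIM - TASK_DIM))
    return out

# Stage 1 (stand-in): a linear expert assumed to be already trained.
# Its weights on the distractor features are arbitrary, because those
# features were constant during its training.
W_expert = rng.standard_normal((OBS_DIM, ACT_DIM))

def expert_act(obs):
    return obs @ W_expert

# Stage 2: supervised cloning. The expert labels weakly augmented views and
# the student regresses onto those labels from strongly augmented views.
W_student = np.zeros((OBS_DIM, ACT_DIM))
lr = 0.05
for _ in range(2000):
    obs = sample_train_obs(64)
    target = expert_act(weak_augment(obs))
    x = strong_augment(obs)
    residual = x @ W_student - target
    # Gradient step on 0.5 * squared error, averaged over the batch.
    W_student -= lr * x.T @ residual / len(obs)

# Zero-shot test: distractor features now vary, as in an unseen environment.
test_obs = sample_train_obs(1000)
test_obs[:, TASK_DIM:] = 3.0 * rng.standard_normal((1000, OBS_DIM - TASK_DIM))
ideal = test_obs[:, :TASK_DIM] @ W_expert[:TASK_DIM]  # action with distractors ignored
expert_err = np.mean((expert_act(test_obs) - ideal) ** 2)
student_err = np.mean((test_obs @ W_student - ideal) ** 2)
print(f"expert MSE: {expert_err:.3f}  student MSE: {student_err:.3f}")
```

The student is asked to reproduce the expert's action no matter what values the distractor features take. Regression therefore drives its weights on those features toward zero, and its error on the shifted test inputs ends up far below the expert's.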

Extensive experiments demonstrate that SECANT significantly advances the state of the art in zero-shot generalization across 4 challenging domains. Our average reward improvements over prior SOTAs are: DeepMind Control (+26.5%), robotic manipulation (+337.8%), vision-based autonomous driving (+47.7%), and indoor object navigation (+15.8%).

Please refer to dm_control and mujoco-py for how to download and set up MuJoCo for the DeepMind Control Suite and RoboSuite, respectively. Make sure the environment variables `LD_LIBRARY_PATH` and `MJLIB_PATH` are set correctly.

Please refer to the CARLA documentation for installation instructions.

```bash
# Create your virtual environment
conda create --name secant python=3.7
conda activate secant

# Install dm_control
pip install dm_control

# Install robosuite adapted for SECANT
pip install git+https://github.com/wangguanzhi/robosuite.git

# Clone this repo
git clone https://github.com/LinxiFan/SECANT
cd SECANT

# Install SECANT
pip install -e .
```

SECANT provides the following environments:

- DeepMind Control Suite (`dm_control`): simple vision-based robotic control.
- CARLA: autonomous driving simulator.
- RoboSuite: dexterous robotic manipulation tasks.
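After installation, you can confirm that Python can find each backend by looking up its module spec, which does not import the module. This is a minimal sketch. The module names `dm_control`, `robosuite`, and `secant` match the packages installed above. The name `carla` is an assumption for the CARLA Python API module, which is only needed for the driving environment.

```python
import importlib.util

def missing_packages(names):
    """Return the top-level module names that Python cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]

if __name__ == "__main__":
    for name in missing_packages(["dm_control", "robosuite", "secant", "carla"]):
        print(f"not importable: {name}")
```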

SECANT follows the Gym API.

Basic usage:

```python
from secant.envs.dm_control import make_dmc

env = make_dmc(task="walker_walk")
env.reset()
done = False
while not done:
    action = env.action_space.sample()
    obs, reward, done, info = env.step(action)
```

You can try other environments easily:

```python
from secant.envs.robosuite import make_robosuite
from secant.envs.carla import make_carla
```

Please see the examples and docs on how to use each environment.
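To evaluate a policy rather than random actions, you can wrap the loop from the basic usage example in a function that accumulates reward. The sketch below assumes only the classic Gym interface used there: `reset()` returns an observation, and `step()` returns `obs, reward, done, info`. The name `rollout` and its `max_steps` cap are hypothetical, not part of SECANT.

```python
from typing import Any, Callable

def rollout(env, policy: Callable[[Any], Any], max_steps: int = 1000) -> float:
    """Run one episode with the classic Gym API and return the undiscounted return."""
    obs = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(obs)
        obs, reward, done, info = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward

# Example (requires SECANT and MuJoCo):
#   from secant.envs.dm_control import make_dmc
#   env = make_dmc(task="walker_walk")
#   print(rollout(env, lambda obs: env.action_space.sample()))
```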

| Environment | `make_env` | Training | Test |
|---|---|---|---|
| dm_control | `secant.envs.dm_control.make_dmc` | `background="original"` | `background="color_easy"`<br>`background="color_hard"`<br>`background="video[0-9]"` |
| carla | `secant.envs.carla.make_carla` | `weather="clear_noon"` | `weather="wet_sunset"`<br>`weather="wet_cloudy_noon"`<br>`weather="soft_rain_sunset"`<br>`weather="mid_rain_sunset"`<br>`weather="hard_rain_noon"` |
| robosuite | `secant.envs.robosuite.make_robosuite` | `mode="train"`<br>`scene_id=0` | `mode="eval-easy"`<br>`mode="eval-hard"`<br>`mode="eval-extreme"`<br>`scene_id=[0-9]` |
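For zero-shot evaluation, it can be convenient to enumerate the test settings from the table programmatically. This sketch rests on two assumptions. First, `video[0-9]` and `scene_id=[0-9]` are read as the ranges 0 through 9. Second, every RoboSuite evaluation mode is paired with every scene ID. The helper `eval_kwargs` is hypothetical. Passing its dictionaries as keyword arguments to the `make_*` functions relies only on the parameter names shown in the table.

```python
from itertools import product

def eval_kwargs(env_name):
    """Return keyword-argument dicts for the test settings of one environment."""
    if env_name == "dm_control":
        # Assumption: "video[0-9]" expands to "video0" ... "video9".
        backgrounds = ["color_easy", "color_hard"] + [f"video{i}" for i in range(10)]
        return [{"background": b} for b in backgrounds]
    if env_name == "carla":
        weathers = ["wet_sunset", "wet_cloudy_noon", "soft_rain_sunset",
                    "mid_rain_sunset", "hard_rain_noon"]
        return [{"weather": w} for w in weathers]
    if env_name == "robosuite":
        modes = ["eval-easy", "eval-hard", "eval-extreme"]
        # Assumption: every eval mode is combined with every scene_id in 0..9.
        return [{"mode": m, "scene_id": s} for m, s in product(modes, range(10))]
    raise ValueError(f"unknown environment: {env_name}")

# Example (requires SECANT and MuJoCo):
#   from secant.envs.dm_control import make_dmc
#   for kwargs in eval_kwargs("dm_control"):
#       env = make_dmc(task="walker_walk", **kwargs)
```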

Thank you so much for your interest in our work! For your convenience, we provide the BibTeX code to cite our ICML 2021 paper:

```bibtex
@InProceedings{pmlr-v139-fan21c,
  title     = {SECANT: Self-Expert Cloning for Zero-Shot Generalization of Visual Policies},
  author    = {Fan, Linxi and Wang, Guanzhi and Huang, De-An and Yu, Zhiding and Fei-Fei, Li and Zhu, Yuke and Anandkumar, Animashree},
  booktitle = {Proceedings of the 38th International Conference on Machine Learning},
  pages     = {3088--3099},
  year      = {2021},
  editor    = {Meila, Marina and Zhang, Tong},
  volume    = {139},
  series    = {Proceedings of Machine Learning Research},
  month     = {18--24 Jul},
  publisher = {PMLR},
  pdf       = {http://proceedings.mlr.press/v139/fan21c/fan21c.pdf},
  url       = {https://proceedings.mlr.press/v139/fan21c.html},
}
```