-

(SIGGRAPH 2017) Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion [Paper][Demo]

The network learns a mapping from input waveforms to the 3D vertex coordinates of a face model, and simultaneously discovers a latent code that disambiguates the variations in facial expression that cannot be explained by the audio alone.

-

(SIGGRAPH 2017) A Deep Learning Approach for Generalized Speech Animation [Paper][Demo]

The approach uses a sliding window predictor that learns the mapping from phonemes to mouth movements. It is speaker-independent and the generated animation can be retargeted to any animation rig.
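
As a rough illustration of the sliding-window idea, the sketch below runs a per-window predictor over a padded phoneme sequence and averages the overlapping outputs into one value per frame. It is not the paper's implementation: `sliding_window_predict`, `toy_predictor`, the `OPENNESS` table, the window size, and the `"sil"` padding symbol are all hypothetical, and the toy lookup stands in for the learned network.

```python
from typing import Callable, List, Sequence

def sliding_window_predict(
    phonemes: Sequence[str],
    predict_window: Callable[[Sequence[str]], List[float]],
    window: int = 5,
    pad: str = "sil",
) -> List[float]:
    """Return one mouth parameter per frame by averaging overlapping windows."""
    if not phonemes:
        return []
    half = window // 2
    # Pad both ends so every real frame is covered by several windows.
    padded = [pad] * half + list(phonemes) + [pad] * half
    sums = [0.0] * len(padded)
    counts = [0] * len(padded)
    for start in range(len(padded) - window + 1):
        # The predictor emits one value for each frame inside the window.
        out = predict_window(padded[start:start + window])
        for offset, value in enumerate(out):
            sums[start + offset] += value
            counts[start + offset] += 1
    averaged = [s / c for s, c in zip(sums, counts)]
    # Drop the padding frames again.
    return averaged[half:len(padded) - half]

# Hypothetical stand-in for the learned predictor: mouth openness per phoneme.
OPENNESS = {"sil": 0.0, "m": 0.0, "aa": 1.0, "iy": 0.4}

def toy_predictor(win: Sequence[str]) -> List[float]:
    return [OPENNESS.get(p, 0.5) for p in win]

frames = ["sil", "m", "aa", "aa", "iy", "sil"]
curve = sliding_window_predict(frames, toy_predictor, window=3)
```

Because the toy predictor ignores context, averaging leaves its values unchanged; a learned predictor would give slightly different estimates per window, and the averaging step would smooth them.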

-

(SIGGRAPH 2018) VisemeNet: Audio-Driven Animator-Centric Speech Animation [Paper][Demo]

The architecture processes an audio signal to predict JALI-based viseme representations.

-

(SIGGRAPH 2016) JALI: An Animator-Centric Viseme Model for Expressive Lip Synchronization [Paper][Demo]

Given an audio track and a speech transcript, the system generates expressive lip-synchronized facial animation. Drawing on psycholinguistics, it captures variation in speech articulation using two visually distinct anatomical actions: Jaw and Lip. A template JALI 3D facial rig is constructed.

-

(CVPR 2019) Capture, Learning, and Synthesis of 3D Speaking Styles [Paper][Demo]

Built on the FLAME head model, the network learns to map audio features to 3D vertex displacements. It generalizes well across various speech sources, languages, and 3D face templates.

-

(CVPR Workshop 2017) Speech-driven 3D Facial Animation with Implicit Emotional Awareness: A Deep Learning Approach [Paper]

The framework learns to map speech to head rotations and expression weights of a 3D blendshape model (FaceWarehouse). A 3D face tracker extracts these 3D animation parameters (head rotations and AU intensities) from videos to serve as the ground truth.
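
For readers unfamiliar with blendshape weights, here is a minimal sketch of the common delta formulation, in which each weight scales the offset between a target shape and the neutral mesh. The function name `blend` and the tiny two-vertex meshes are hypothetical, and FaceWarehouse's actual parameterization may differ from this simplified form.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]

def blend(
    neutral: Sequence[Vertex],
    targets: Sequence[Sequence[Vertex]],
    weights: Sequence[float],
) -> List[Vertex]:
    """Compute neutral + sum_i w_i * (target_i - neutral) for every vertex."""
    if len(targets) != len(weights):
        raise ValueError("need exactly one weight per blendshape target")
    result: List[Vertex] = []
    for i, base in enumerate(neutral):
        v = list(base)
        for target, w in zip(targets, weights):
            for axis in range(3):
                v[axis] += w * (target[i][axis] - base[axis])
        result.append((v[0], v[1], v[2]))
    return result

# Toy two-vertex "mesh" with two expression targets (illustrative values only).
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
jaw_open = [(0.0, -1.0, 0.0), (1.0, -1.0, 0.0)]
smile = [(0.0, 0.0, 0.0), (1.5, 0.5, 0.0)]

mesh = blend(neutral, [jaw_open, smile], [0.5, 1.0])
# mesh == [(0.0, -0.5, 0.0), (1.5, 0.0, 0.0)]
```

A speech-driven model of this kind only has to predict the short weight vector per frame; the mesh itself follows from the fixed targets.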

-

(SIGGRAPH 2006) Expressive Speech-Driven Facial Animation [Paper][Demo]

They derive a generative model of expressive facial motion that incorporates emotion control while maintaining accurate lip-synching.

-

(SIGGRAPH Asia 2015) Video-Audio Driven Real-Time Facial Animation [Paper][Demo]

A DNN acoustic model extracts phoneme state posterior probabilities (PSPP) from the audio. A lip motion regressor then refines the 3D mouth shape based on both the PSPP and the expression weights of the 3D mouth shapes. Finally, the refined 3D mouth shape is combined with the other parts of the 3D face to generate the final result.

-

(ICMI 2020) Modality Dropout for Improved Performance-driven Talking Faces [Paper]

Speech-related facial movements (mouth and jaw) are generated using audio-visual information, and non-speech facial movements (the rest of the face and the head pose) are generated using only visual information.
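
The modality dropout named in the title can be sketched generically as randomly silencing one input stream during training, so that the model cannot rely on a single modality. The version below is an assumption-laden illustration, not the paper's scheme: the function `modality_dropout`, the drop probabilities, and the choice to zero out features (rather than mask them some other way) are all hypothetical.

```python
import random
from typing import List, Optional

def modality_dropout(
    audio: List[float],
    video: List[float],
    p_drop_audio: float = 0.25,
    p_drop_video: float = 0.25,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Return the concatenated features with at most one modality zeroed."""
    source = random if rng is None else rng
    r = source.random()
    audio_out = list(audio)
    video_out = list(video)
    if r < p_drop_audio:
        # Drop audio: the model must rely on video for this sample.
        audio_out = [0.0] * len(audio)
    elif r < p_drop_audio + p_drop_video:
        # Drop video: the model must rely on audio for this sample.
        video_out = [0.0] * len(video)
    # Otherwise both modalities pass through unchanged.
    return audio_out + video_out

# Seeded generator so that a training run is reproducible.
features = modality_dropout([0.2, 0.7], [0.9], rng=random.Random(0))
```

Both streams are never dropped together here, which keeps every training sample informative; that is a design choice of this sketch.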

-

(SIGGRAPH 2017) Synthesizing Obama: Learning Lip Sync from Audio [Paper][Demo]

It synthesizes only the region around the mouth and borrows the rest of Obama from a target video. The system first converts audio to a sparse mouth shape, then generates photo-realistic mouth texture, and finally composites it into the mouth region of a target video.
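
The final compositing step can be pictured as alpha-blending a synthesized mouth patch into a target frame. The sketch below shows only that generic idea on grayscale pixel grids; the function `composite`, the mask values, and the patch placement are hypothetical, and the paper's actual compositing pipeline is more involved than a plain alpha blend.

```python
from typing import List

Image = List[List[float]]

def composite(target: Image, patch: Image, mask: Image, top: int, left: int) -> Image:
    """Alpha-blend `patch` into a copy of `target` at (top, left).

    `mask` holds per-pixel alpha in [0, 1]: 1 keeps the patch, 0 keeps the target.
    """
    out = [row[:] for row in target]  # leave the input frame untouched
    for y, (patch_row, mask_row) in enumerate(zip(patch, mask)):
        for x, (p, a) in enumerate(zip(patch_row, mask_row)):
            ty, tx = top + y, left + x
            out[ty][tx] = a * p + (1.0 - a) * out[ty][tx]
    return out

frame = [[0.0] * 3 for _ in range(3)]   # dark 3x3 target frame
mouth = [[1.0, 1.0]]                    # bright 1x2 synthesized patch
alpha = [[1.0, 0.5]]                    # soft edge on the right pixel
blended = composite(frame, mouth, alpha, top=1, left=1)
# blended[1] == [0.0, 1.0, 0.5]
```

A soft mask like this avoids a visible seam where the synthesized region meets the borrowed footage.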

-

(WACV 2020) Animating Face using Disentangled Audio Representations [Paper]

The authors claim that this is the first attempt to explicitly learn emotion- and content-aware disentangled audio representations for facial animation. The framework learns to disentangle audio sequences into phonetic content, emotional tone, and other factors.

-

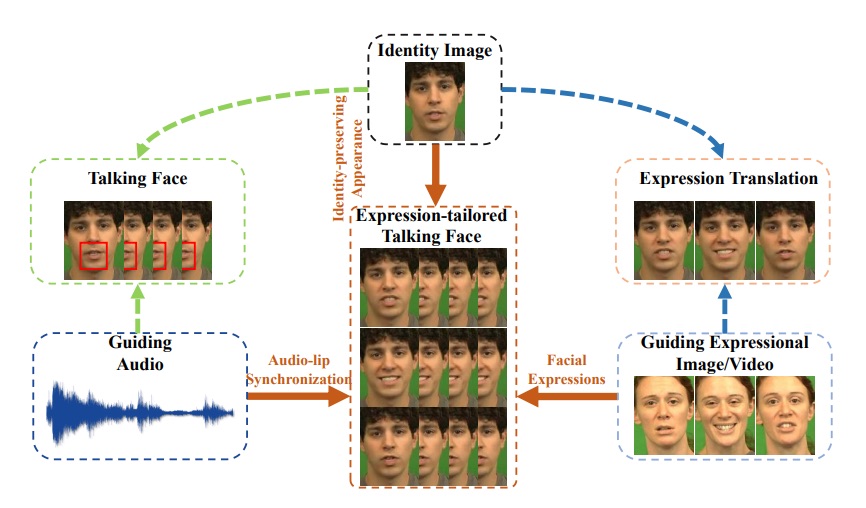

(ACM MM 2020) Talking Face Generation with Expression-Tailored Generative Adversarial Network [Paper]

It uses an expression encoder to disentangle the emotion information from expressive video clips, generating high-quality, expression-tailored face videos that go beyond audio-lip synchronization.

-

(SIGGRAPH Asia 2020) MakeItTalk: Speaker-Aware Talking-Head Animation [Paper][Demo][Project Webpage]

The audio content robustly controls the motion of the lips and nearby facial regions, while the speaker information determines the specifics of facial expressions and the rest of the talking-head dynamics. The method predicts facial landmarks that reflect these speaker-aware dynamics.

-

(BMVC 2017) You Said That? Synthesising Talking Faces from Audio [Paper][Demo]

The overall Speech2Vid model is a combination of two encoders (an identity encoder and an audio encoder), and a decoder that generates images corresponding to the audio.

-

(AAAI 2019) Talking Face Generation by Adversarially Disentangled Audio-Visual Representation [Paper][Demo]

The proposed method disentangles the subject-related information and the speech-related information through an adversarial training process.

-

(IEEE Transactions on Affective Computing 2019) Speech-Driven Expressive Talking Lips with Conditional Sequential Generative Adversarial Networks [Paper]

-

(INTERSPEECH 2016) Expressive Speech Driven Talking Avatar Synthesis with DBLSTM using Limited Amount of Emotional Bimodal Data [Paper]