This repository contains the code for the paper "(Predictable) Performance Bias in Unsupervised Anomaly Detection" by Felix Meissen, Svenja Breuer, Moritz Knolle, Alena Buyx, Ruth Müller, Georgios Kaissis, Benedikt Wiestler, and Daniel Rückert.

Download this repository by running

git clone https://github.com/FeliMe/unsupervised_fairness

Create and activate the Anaconda environment:

conda env create -f environment.yml

conda activate ad_fairness

Additionally, you need to install the repository as a package:

python3 -m pip install --editable .

To be able to use Weights & Biases for logging, follow the instructions at https://docs.wandb.ai/quickstart.

Download the datasets from the respective sources, specify the MIMIC_CXR_DIR, CXR14_DIR, and CHEXPERT_DIR environment variables, and run the src/data/mimic_cxr.py, src/data/cxr14.py, and src/data/chexpert.py scripts to prepare the datasets.
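Before running the preparation scripts, it can help to confirm that the three environment variables are set and point to existing directories. The following is a minimal sketch of such a check, not part of the repository: the helper name `find_dataset_dir_problems` is hypothetical, and only the variable names are taken from this README.

```python
import os
from pathlib import Path

# Variable names as given in this README.
DATASET_ENV_VARS = ("MIMIC_CXR_DIR", "CXR14_DIR", "CHEXPERT_DIR")


def find_dataset_dir_problems(env=None):
    """Map each problematic variable name to a short description.

    Hypothetical helper (not part of the repository). An empty dict means
    every variable is set and points to an existing directory.
    """
    env = os.environ if env is None else env
    problems = {}
    for name in DATASET_ENV_VARS:
        value = env.get(name)
        if not value:
            problems[name] = "not set"
        elif not Path(value).is_dir():
            problems[name] = f"not a directory: {value}"
    return problems


if __name__ == "__main__":
    # Print one line per variable that still needs attention.
    for name, problem in find_dataset_dir_problems().items():
        print(f"{name}: {problem}")
```

If the check prints nothing, all three variables resolve to directories and the preparation scripts can be started.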

Download sources:

To reproduce the results of the manuscript, make sure the environment is activated and run the ./run_experiments.sh script.

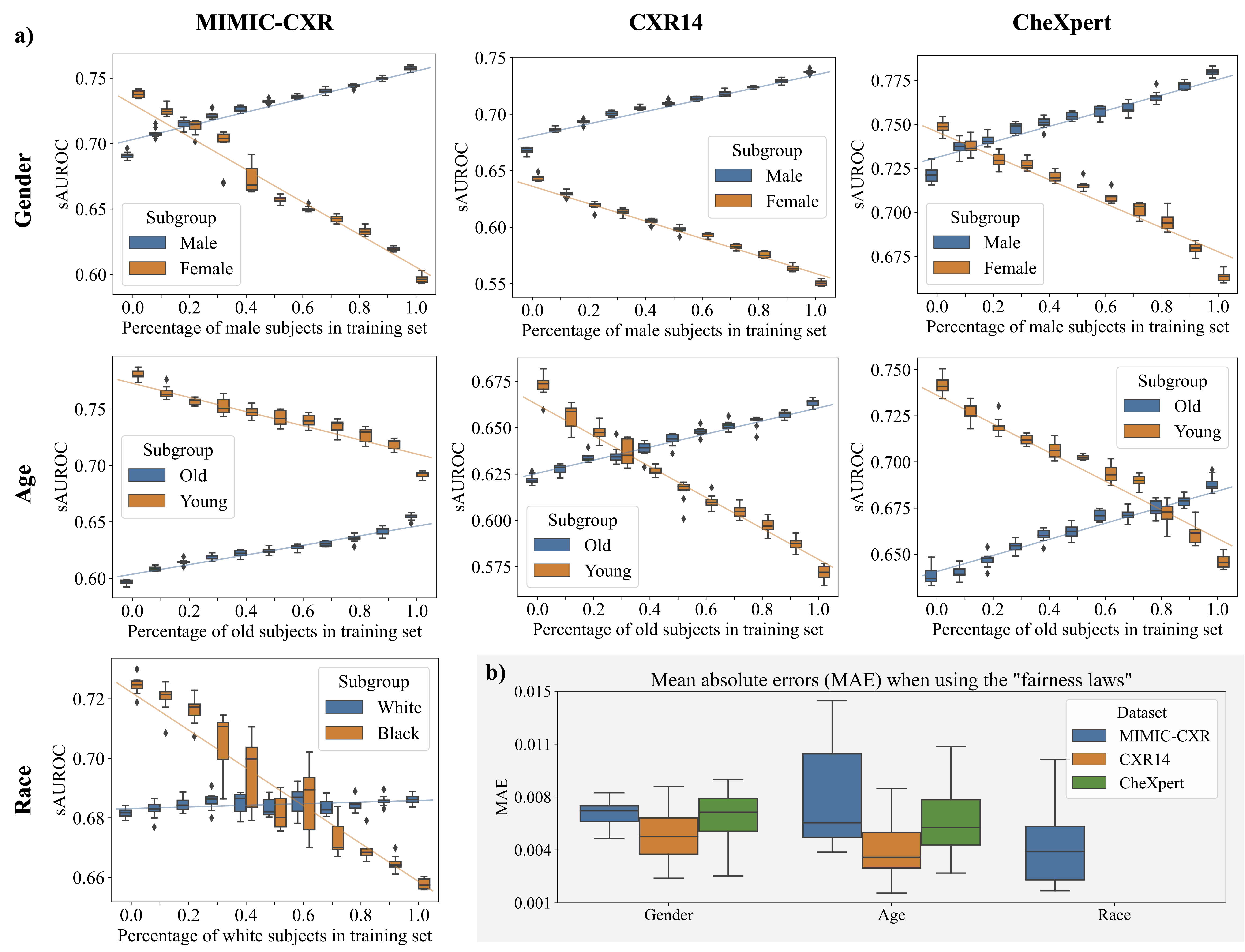

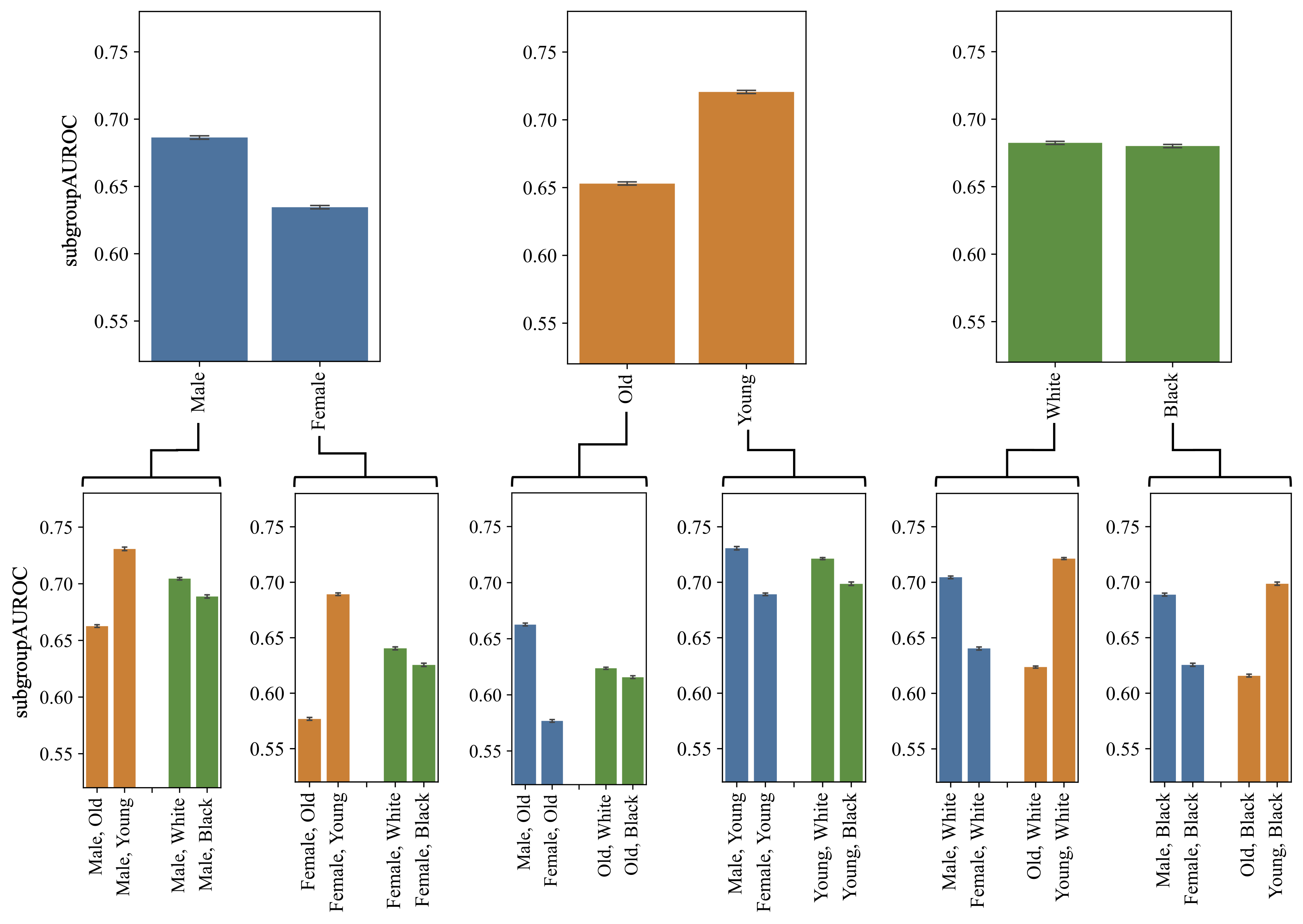

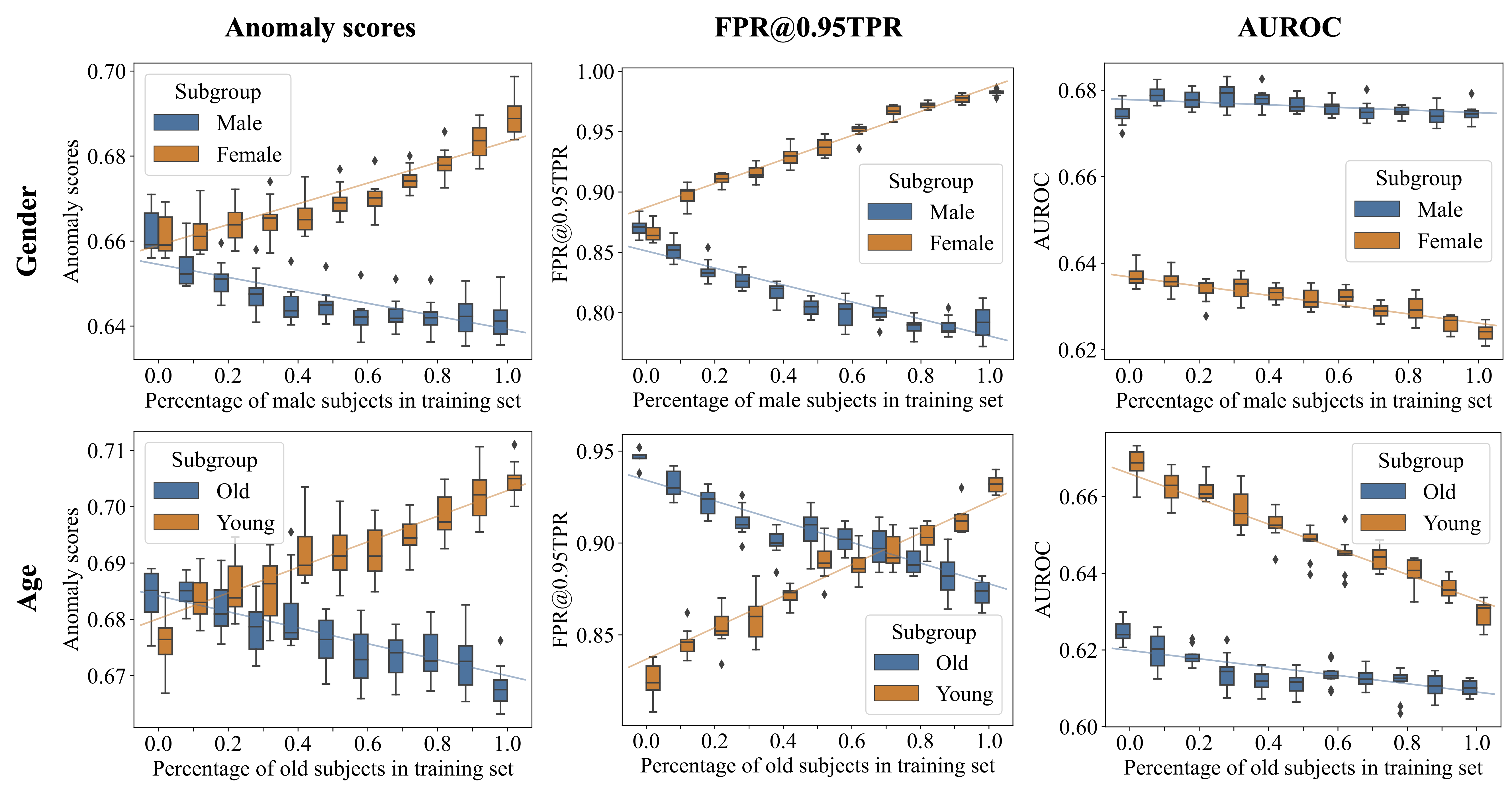

After the experiments are finished, run the src/analysis/plot_main.py script to generate the plots necessary to assemble Figures 3a), 4, and 5. For Figure 3b), src/analysis/mae_plot.py additionally needs to be run.
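The two plotting steps can also be chained from Python. This is a minimal sketch under two assumptions that this README does not confirm: that both scripts run without command-line arguments, and that they are started from the repository root. The helper names `build_commands` and `run_all` are hypothetical.

```python
import subprocess
import sys

# Script paths as named in this README, in the order they are mentioned.
ANALYSIS_SCRIPTS = ("src/analysis/plot_main.py", "src/analysis/mae_plot.py")


def build_commands(python=sys.executable, scripts=ANALYSIS_SCRIPTS):
    """Return one argument list per script, ready for subprocess.run.

    Assumption: the scripts need no command-line arguments.
    """
    return [[python, script] for script in scripts]


def run_all(commands):
    """Run each command in turn; check=True raises on the first failure."""
    for command in commands:
        subprocess.run(command, check=True)
```

Calling `run_all(build_commands())` with the environment activated runs both scripts with the current interpreter and stops if either one exits with an error.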

This work was supported by the DAAD programme Konrad Zuse Schools of Excellence in Artificial Intelligence, sponsored by the Federal Ministry of Education and Research. Daniel Rueckert has been supported by ERC grant Deep4MI (884622). Svenja Breuer, Ruth Müller and Alena Buyx have been supported via the project MedAIcine by the Center for Responsible AI Technologies of the Technical University of Munich, the University of Augsburg, and the Munich School of Philosophy.