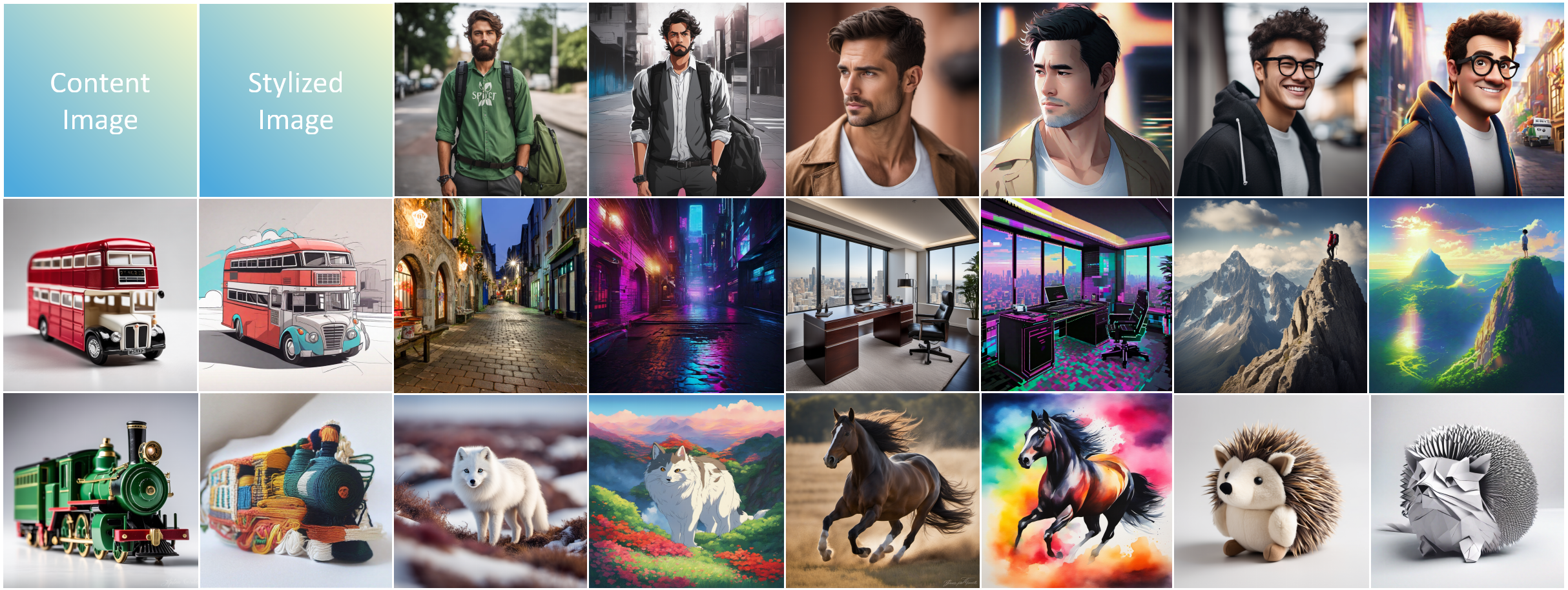

The rapid development of generative diffusion models has significantly advanced the field of style transfer. However, most current diffusion-based style transfer methods involve a slow iterative optimization process, e.g., model fine-tuning or textual inversion of the style concept. In this paper, we introduce FreeStyle, an innovative style transfer method built upon a pre-trained large diffusion model that requires no further optimization. Moreover, our method performs style transfer using only a text description of the desired style, eliminating the need for style images. Specifically, we propose a dual-stream encoder and single-stream decoder architecture that replaces the conventional U-Net in diffusion models. In the dual-stream encoder, two distinct branches take the content image and the style text prompt as inputs, decoupling content and style. In the decoder, we further modulate the features from the two streams based on the given content image and the corresponding style text prompt to achieve precise style transfer.

For details, see the project page and the paper.

```bash
conda create -n stylefree python==3.8.18
conda activate stylefree
```

Install the dependencies:

```bash
cd diffusers
pip install -e .
pip install torchsde -i https://pypi.tuna.tsinghua.edu.cn/simple
cd ../diffusers_test
pip install transformers
pip install accelerate
```

Download SDXL and put it into `./diffusers_test/stable-diffusion-xl-base-1.0`.
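Before running inference, it can help to confirm that the download is complete. The sketch below is not part of the repository. It assumes SDXL was saved in the standard diffusers folder layout (the layout of the `stabilityai/stable-diffusion-xl-base-1.0` repository on Hugging Face, which `huggingface_hub.snapshot_download(repo_id=..., local_dir=...)` is one way to fetch) and reports which expected entries are missing. The list of expected entries is an assumption; adjust it if your copy differs.

```python
from pathlib import Path
from typing import List

# Sub-folders a diffusers-format SDXL base checkpoint normally contains.
# Assumption: the checkpoint was saved in the standard diffusers layout.
EXPECTED_SUBFOLDERS = [
    "unet", "vae", "text_encoder", "text_encoder_2",
    "tokenizer", "tokenizer_2", "scheduler",
]


def missing_sdxl_parts(model_dir: str) -> List[str]:
    """Return the expected sub-folders/files that are absent from model_dir."""
    root = Path(model_dir)
    missing = [name for name in EXPECTED_SUBFOLDERS if not (root / name).is_dir()]
    # model_index.json tells diffusers which component lives in which folder.
    if not (root / "model_index.json").is_file():
        missing.append("model_index.json")
    return missing


if __name__ == "__main__":
    import sys

    target = sys.argv[1] if len(sys.argv) > 1 else "./stable-diffusion-xl-base-1.0"
    gaps = missing_sdxl_parts(target)
    print("Missing:", ", ".join(gaps) if gaps else "nothing; layout looks complete")
```

Run it from `./diffusers_test`, for example saved under the hypothetical name `check_sdxl.py`, as `python check_sdxl.py ./stable-diffusion-xl-base-1.0`. The `unet` entry matters most here, since `--unet_name` points directly at that folder.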

Some examples and their specific parameter settings are provided in `./diffusers_test/ContentImages/imgs_and_hyperparameters/`; you can reproduce them by setting up your own tasks with those settings. To quickly run a demo, use the following commands:

```bash
cd ./diffusers_test
python stable_diffusion_xl_test.py --refimgpath ./ContentImages/imgs0 --model_name "./stable-diffusion-xl-base-1.0" --unet_name ./stable-diffusion-xl-base-1.0/unet/ --prompt_json ./style_prompt0.json --num_images_per_prompt 4 --output_dir ./output0 --sampler "DDIM" --step 30 --cfg 5 --height 1024 --width 1024 --seed 123456789 --n 160 --b 1.8 --s 1
python stable_diffusion_xl_test.py --refimgpath ./ContentImages/imgs1 --model_name "./stable-diffusion-xl-base-1.0" --unet_name ./stable-diffusion-xl-base-1.0/unet/ --prompt_json ./style_prompt1.json --num_images_per_prompt 4 --output_dir ./output1 --sampler "DDIM" --step 30 --cfg 5 --height 1024 --width 1024 --seed 123456789 --n 160 --b 2.5 --s 1
python stable_diffusion_xl_test.py --refimgpath ./ContentImages/imgs2 --model_name "./stable-diffusion-xl-base-1.0" --unet_name ./stable-diffusion-xl-base-1.0/unet/ --prompt_json ./style_prompt2.json --num_images_per_prompt 4 --output_dir ./output2 --sampler "DDIM" --step 30 --cfg 5 --height 1024 --width 1024 --seed 123456789 --n 160 --b 2.5 --s 1
python stable_diffusion_xl_test.py --refimgpath ./ContentImages/imgs3 --model_name "./stable-diffusion-xl-base-1.0" --unet_name ./stable-diffusion-xl-base-1.0/unet/ --prompt_json ./style_prompt3.json --num_images_per_prompt 4 --output_dir ./output3 --sampler "DDIM" --step 30 --cfg 5 --height 1024 --width 1024 --seed 123456789 --n 160 --b 2.8 --s 1
python stable_diffusion_xl_test.py --refimgpath ./ContentImages/imgs4 --model_name "./stable-diffusion-xl-base-1.0" --unet_name ./stable-diffusion-xl-base-1.0/unet/ --prompt_json ./style_prompt4.json --num_images_per_prompt 4 --output_dir ./output4 --sampler "DDIM" --step 30 --cfg 5 --height 1024 --width 1024 --seed 123456789 --n 160 --b 2.8 --s 1
python stable_diffusion_xl_test.py --refimgpath ./ContentImages/imgs5 --model_name "./stable-diffusion-xl-base-1.0" --unet_name ./stable-diffusion-xl-base-1.0/unet/ --prompt_json ./style_prompt5.json --num_images_per_prompt 4 --output_dir ./output5 --sampler "DDIM" --step 30 --cfg 5 --height 1024 --width 1024 --seed 123456789 --n 160 --b 1.8 --s 1
```

Run style transfer inference as follows:

```bash
cd ./diffusers_test
python stable_diffusion_xl_test.py --refimgpath ./ContentImages/imgs0 --model_name "./stable-diffusion-xl-base-1.0" --unet_name ./stable-diffusion-xl-base-1.0/unet/ --prompt_json ./style_prompt0.json --num_images_per_prompt 4 --output_dir ./output1 --sampler "DDIM" --step 30 --cfg 5 --height 1024 --width 1024 --seed 123456789 --n 640 --b 1.5 --s 2
```

- `--refimgpath`: path to the content images
- `--model_name`: path where SDXL is saved
- `--unet_name`: path to the `unet` folder inside the SDXL directory
- `--prompt_json`: JSON file containing the style prompts
- `--num_images_per_prompt`: number of images generated for each content image and style
- `--output_dir`: path where the stylized images are saved
- `--n`: hyperparameter n ∈ {160, 320, 640, 1280}
- `--b`: hyperparameter b ∈ (1, 3)
- `--s`: hyperparameter s ∈ [1, 2]

Higher-quality content images yield better stylization results.

We recommend the following settings: n=160, b=2.5, s=1.
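The documented ranges and the recommended defaults can be captured in a small check to run before launching a long job. This is a hypothetical helper (`hyperparameter_problems` is not in the repository), and we don't know whether `stable_diffusion_xl_test.py` enforces these ranges itself. One assumption: s is treated as the closed range [1, 2], since the recommended s=1 and the example s=2 lie on its endpoints.

```python
from typing import List

# Ranges as documented for stable_diffusion_xl_test.py.
ALLOWED_N = (160, 320, 640, 1280)  # n is chosen from this set
B_RANGE = (1.0, 3.0)  # b lies strictly between 1 and 3
# s between 1 and 2, treated as inclusive here (assumption) because the
# recommended s=1 and the example s=2 sit on the endpoints.
S_RANGE = (1.0, 2.0)


def hyperparameter_problems(n: int, b: float, s: float) -> List[str]:
    """Return human-readable problems; an empty list means the values look valid."""
    problems = []
    if n not in ALLOWED_N:
        problems.append(f"n={n} is not one of {ALLOWED_N}")
    if not B_RANGE[0] < b < B_RANGE[1]:
        problems.append(f"b={b} is outside the open interval {B_RANGE}")
    if not S_RANGE[0] <= s <= S_RANGE[1]:
        problems.append(f"s={s} is outside [{S_RANGE[0]}, {S_RANGE[1]}]")
    return problems


# The recommended defaults pass; an off-grid n is reported.
print(hyperparameter_problems(160, 2.5, 1))  # []
print(hyperparameter_problems(200, 2.5, 1))
```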

If the style is not expressed clearly enough, decrease b (or increase s).

If the content is not expressed clearly enough, increase b (or decrease s).

If the stylized image contains noise, try a different value of n.

You can crop images to a 1:1 aspect ratio with `diffusers_test/centercrop.py`.
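If you want to see the idea behind a 1:1 crop (keeping the largest centered square), it is easy to sketch with Pillow. This is an illustration, not the repository's `centercrop.py`: `center_square_box` and `center_crop_square` are hypothetical names, and the final resize to 1024x1024 is an assumption chosen to match `--height 1024 --width 1024` in the example commands.

```python
from typing import Tuple


def center_square_box(width: int, height: int) -> Tuple[int, int, int, int]:
    """Largest centered square as a (left, upper, right, lower) box, as PIL expects."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def center_crop_square(src_path: str, dst_path: str, size: int = 1024) -> None:
    """Crop an image to 1:1 around its center, then resize it to size x size."""
    from PIL import Image  # Pillow is assumed to be installed

    with Image.open(src_path) as img:
        square = img.convert("RGB").crop(center_square_box(*img.size))
        square.resize((size, size), Image.LANCZOS).save(dst_path)
```

For a 1920x1080 photo, the box is `(420, 0, 1500, 1080)`: the full height is kept and 420 pixels are trimmed from each side.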

For most images, tuning the hyperparameters usually yields satisfactory results. If the output is still suboptimal, try several different hyperparameter settings.
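Following the tips above, a small grid search over n, b and s is a simple way to compare settings. The sketch prints one command per (n, b, s) combination, each writing to its own output folder so the results can be compared side by side. It is hypothetical (the `sweep_commands` helper and the `./sweep` folder are not part of the repository), reuses only flags that appear in the example command, and assumes it is run from `./diffusers_test`.

```python
import shlex
from itertools import product
from typing import Dict, List

# Fixed flags copied from the inference example; edit the paths to match your setup.
BASE_ARGS: Dict[str, str] = {
    "--refimgpath": "./ContentImages/imgs0",
    "--model_name": "./stable-diffusion-xl-base-1.0",
    "--unet_name": "./stable-diffusion-xl-base-1.0/unet/",
    "--prompt_json": "./style_prompt0.json",
    "--num_images_per_prompt": "4",
    "--sampler": "DDIM",
    "--step": "30",
    "--cfg": "5",
    "--height": "1024",
    "--width": "1024",
    "--seed": "123456789",
}


def sweep_commands(ns: List[int], bs: List[float], ss: List[float],
                   out_root: str = "./sweep") -> List[str]:
    """One shell command per (n, b, s) combination, each with its own output folder."""
    commands = []
    for n, b, s in product(ns, bs, ss):
        args = ["python", "stable_diffusion_xl_test.py"]
        for flag, value in BASE_ARGS.items():
            args += [flag, value]
        args += ["--output_dir", f"{out_root}/n{n}_b{b}_s{s}",
                 "--n", str(n), "--b", str(b), "--s", str(s)]
        commands.append(shlex.join(args))  # shlex.join needs Python 3.8+
    return commands


if __name__ == "__main__":
    # Vary b around the recommended 2.5: per the tips, lower b favours style,
    # higher b favours content.
    for cmd in sweep_commands([160], [1.8, 2.5, 2.8], [1]):
        print(cmd)
```

Saved under a hypothetical name such as `sweep.py`, the commands can be reviewed with `python sweep.py` and then executed with `python sweep.py | bash`.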

```bibtex
@misc{he2024freestyle,
  title={FreeStyle: Free Lunch for Text-guided Style Transfer using Diffusion Models},
  author={Feihong He and Gang Li and Mengyuan Zhang and Leilei Yan and Lingyu Si and Fanzhang Li},
  year={2024},
  eprint={2401.15636},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```

Please feel free to open an issue or contact us personally if you have questions, need help, or need explanations. Write to one of the following email addresses: