This is a cleaner version of Diffsinger, which provides:

- fewer code: scripts unused in the DiffSinger are marked *isolated*;

- better readability: many important functions are annotated (however, we assume the reader already knows how the neural networks work);

- abstract classes: the bass classes are filtered out into the "basics/" folder and are annotated. Other classes inherent from the base classes.

- better file structre: tts-related files are filtered out into the "tts/" folder, as they are not used in DiffSinger.

- (new) Much condensed version of the preprocessing, training, and inference pipeline. The preprocessing pipeline is at 'preprocessing/opencpop.py', the training pipeline is at 'training/diffsinger.py', the inference pipeline is at 'inference/ds_cascade.py' or 'inference/ds_e2e.py'.

# Install PyTorch manually (1.8.2 LTS recommended)

# See instructions at https://pytorch.org/get-started/locally/

# Below is an example for CUDA 11.1

pip3 install torch==1.8.2 torchvision==0.9.2 torchaudio==0.8.2 --extra-index-url https://download.pytorch.org/whl/lts/1.8/cu111

# Install other requirements

pip install -r requirements.txtexport PYTHONPATH=.

CUDA_VISIBLE_DEVICES=0 python data_gen/binarize.py --config configs/acoustic/nomidi.yamlCUDA_VISIBLE_DEVICES=0 python run.py --config configs/acoustic/nomidi.yaml --exp_name $MY_DS_EXP_NAME --reset CUDA_VISIBLE_DEVICES=0 python run.py --exp_name $MY_DS_EXP_NAME --inferEasy inference with Google Colab:

This repository is the official PyTorch implementation of our AAAI-2022 paper, in which we propose DiffSinger (for Singing-Voice-Synthesis) and DiffSpeech (for Text-to-Speech).

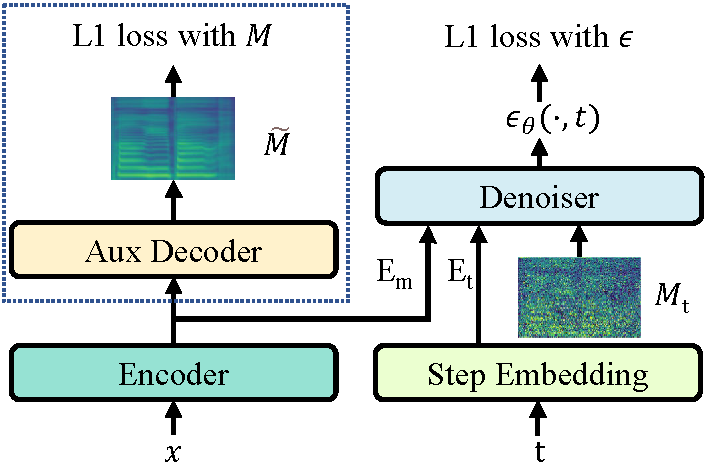

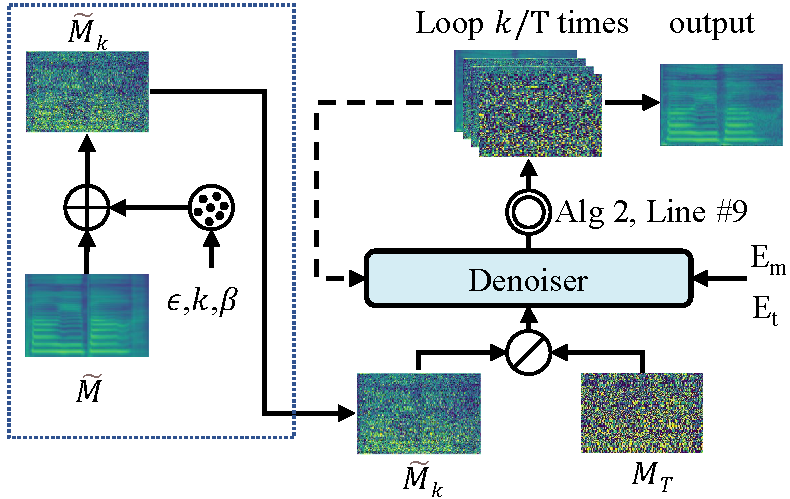

| DiffSinger/DiffSpeech at training | DiffSinger/DiffSpeech at inference |

|---|---|

|

|

🎉 🎉 🎉 Updates:

- Sep.11, 2022: 🔌 DiffSinger-PN. Add plug-in PNDM, ICLR 2022 in our laboratory, to accelerate DiffSinger freely.

- Jul.27, 2022: Update documents for SVS. Add easy inference A & B; Add Interactive SVS running on HuggingFace🤗 SVS.

- Mar.2, 2022: MIDI-B-version.

- Mar.1, 2022: NeuralSVB, for singing voice beautifying, has been released.

- Feb.13, 2022: NATSpeech, the improved code framework, which contains the implementations of DiffSpeech and our NeurIPS-2021 work PortaSpeech has been released.

- Jan.29, 2022: support MIDI-A-version SVS.

- Jan.13, 2022: support SVS, release PopCS dataset.

- Dec.19, 2021: support TTS. HuggingFace🤗 TTS

🚀 News:

- Feb.24, 2022: Our new work, NeuralSVB was accepted by ACL-2022

. Demo Page.

- Dec.01, 2021: DiffSinger was accepted by AAAI-2022.

- Sep.29, 2021: Our recent work

PortaSpeech: Portable and High-Quality Generative Text-to-Speechwas accepted by NeurIPS-2021.

- May.06, 2021: We submitted DiffSinger to Arxiv

.

conda create -n your_env_name python=3.8

source activate your_env_name

pip install -r requirements_2080.txt (GPU 2080Ti, CUDA 10.2)

or pip install -r requirements_3090.txt (GPU 3090, CUDA 11.4)tensorboard --logdir_spec exp_name |

Old audio samples can be found in our demo page. Audio samples generated by this repository are listed here:

Speech samples (test set of LJSpeech) can be found in demos_1213.

Singing samples (test set of PopCS) can be found in demos_0112.

@article{liu2021diffsinger,

title={Diffsinger: Singing voice synthesis via shallow diffusion mechanism},

author={Liu, Jinglin and Li, Chengxi and Ren, Yi and Chen, Feiyang and Liu, Peng and Zhao, Zhou},

journal={arXiv preprint arXiv:2105.02446},

volume={2},

year={2021}}

Our codes are based on the following repos:

Also thanks Keon Lee for fast implementation of our work.