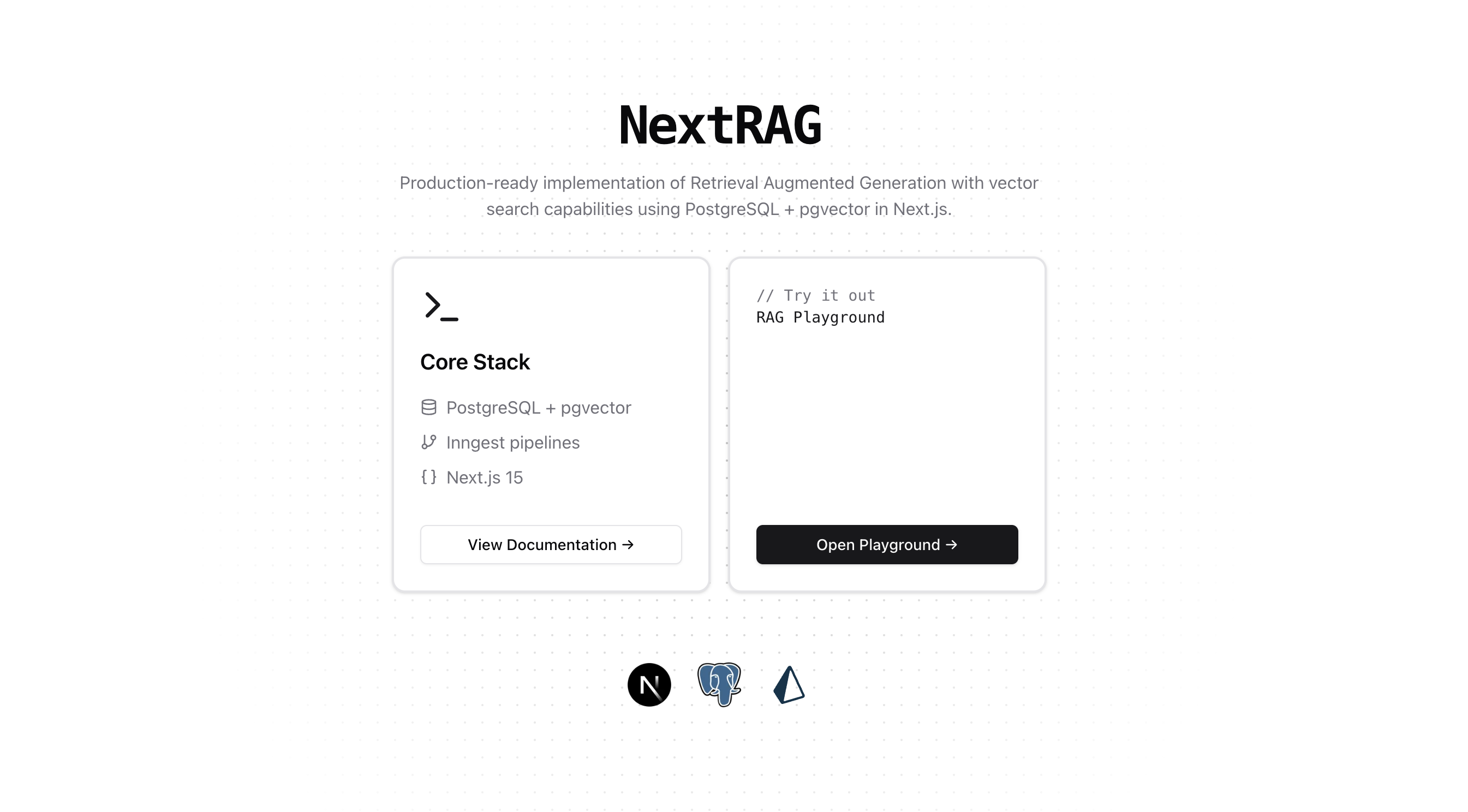

A production-ready implementation of Retrieval Augmented Generation (RAG) using Next.js, PostgreSQL + pgvector (with node-pg), Prisma, and Vercel AI SDK.

This project demonstrates how to implement RAG (Retrieval Augmented Generation) using PostgreSQL's vector similarity search capabilities. It's designed as a reference implementation that you can adapt for your specific use case.

- Vector Embeddings: Text is converted into high-dimensional vectors that capture semantic meaning using OpenAI's embedding models.

- Similarity Search: pgvector enables efficient similarity search between these vectors, helping find relevant content.

- Chunking Strategies: Documents are broken down into manageable pieces using different strategies (sentence, paragraph, or fixed-size) to optimize retrieval.

- Metadata Tracking: Each chunk maintains metadata about its source, creation time, and relationship to other chunks.

- Background Processing: Long-running tasks like document ingestion are handled asynchronously using Inngest.
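To make the embedding and similarity-search ideas above concrete, here is a minimal in-memory sketch. It is not part of this codebase: the `Chunk` type, `cosineSimilarity`, and `topK` are hypothetical names, and the three-dimensional vectors are toy values standing in for real embeddings. In the actual project the ranking is done inside PostgreSQL by pgvector.

```typescript
// Toy illustration: rank stored chunks by cosine similarity to a query vector.
// Real embeddings have far more dimensions than the 3 used here.

type Chunk = { content: string; embedding: number[] };

// Cosine similarity: dot(a, b) / (|a| * |b|), in the range [-1, 1].
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error('Vectors must have the same number of dimensions');
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // A zero vector has no direction; treat it as unrelated to everything.
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k chunks most similar to the query, best match first.
function topK(query: number[], chunks: Chunk[], k: number): Array<Chunk & { score: number }> {
  return chunks
    .map((chunk) => ({ ...chunk, score: cosineSimilarity(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

// Made-up vectors for illustration only.
const chunks: Chunk[] = [
  { content: 'How to install pgvector', embedding: [0.9, 0.1, 0.0] },
  { content: 'Styling with Tailwind CSS', embedding: [0.0, 0.2, 0.9] },
  { content: 'Creating an HNSW index', embedding: [0.8, 0.3, 0.1] },
];

const results = topK([1, 0, 0], chunks, 2);
console.log(results.map((r) => r.content));
// → [ 'How to install pgvector', 'Creating an HNSW index' ]
```

A linear scan like this is only practical for small collections; an index such as HNSW exists to avoid comparing the query against every stored vector.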

This codebase provides:

- A flexible VectorDB class for vector operations

- Multiple chunking strategies

- Configurable similarity metrics

- Type-safe database operations

- RAG-powered chat interface
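The sentence, paragraph, and fixed-size chunking strategies mentioned above could be sketched roughly as follows. This is a stand-alone illustration under simple assumptions (punctuation-based sentence splitting, blank-line paragraph breaks, whole-word packing), not the VectorDB class's actual implementation. The `chunkText` function and `ChunkingMethod` type here are hypothetical.

```typescript
// Hypothetical stand-alone chunker; the project's own logic may differ.
type ChunkingMethod = 'sentence' | 'paragraph' | 'fixed';

function chunkText(text: string, method: ChunkingMethod, fixedSize = 500): string[] {
  // Trim each piece and drop empty ones.
  const clean = (parts: string[]) => parts.map((p) => p.trim()).filter((p) => p.length > 0);

  switch (method) {
    case 'sentence':
      // A sentence is a run of text ending in ., ! or ? (or the trailing remainder).
      return clean(text.match(/[^.!?]+[.!?]+|[^.!?]+$/g) ?? []);
    case 'paragraph':
      // Paragraphs are separated by one or more blank lines.
      return clean(text.split(/\n\s*\n/));
    case 'fixed': {
      // Pack whole words into chunks of at most `fixedSize` characters.
      // A single word longer than `fixedSize` becomes its own oversized chunk.
      const chunks: string[] = [];
      let current = '';
      for (const word of text.split(/\s+/).filter(Boolean)) {
        const candidate = current ? `${current} ${word}` : word;
        if (candidate.length > fixedSize && current) {
          chunks.push(current);
          current = word;
        } else {
          current = candidate;
        }
      }
      if (current) chunks.push(current);
      return chunks;
    }
  }
}

const sample = 'First sentence. Second one!\n\nNew paragraph here?';
console.log(chunkText(sample, 'sentence'));
console.log(chunkText(sample, 'paragraph'));
console.log(chunkText('aaaa bbbb cccc', 'fixed', 10));
```

Smaller chunks tend to give more precise matches, while larger ones keep more surrounding context, so the best method depends on the documents being indexed.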

- 🔍 Semantic search with pgvector

- 🤖 RAG-powered chat interface

- 📝 Multiple text chunking strategies

- 🔄 Background processing with Inngest

- 🎯 Flexible similarity metrics

- 📊 Rich metadata support

- 🔒 Type-safe database operations

- Next.js 15 - React framework

- PostgreSQL + pgvector - Vector similarity search

- Vercel AI SDK - AI/LLM utilities

- Prisma - Type-safe database schema

- node-pg - SQL query sanitization

- Inngest - Background job processing

- OpenAI - Embeddings and chat completion

- Tailwind CSS - Styling

- TypeScript - Type safety

git clone https://github.com/hamedmp/nextrag

cd nextrag

pnpm install

You need environment variables for the LLM of your choice and the database.

cp .env.example .env

Required environment variables:

# Database (Vercel Postgres or Neon)

POSTGRES_URL="postgres://..."

# OpenAI

OPENAI_API_KEY="sk-..."

# node-pg

PGUSER=...

PGPASSWORD=...

PGHOST=...

PGPORT=...

PGDATABASE=...

# Inngest (optional, for background jobs)

INNGEST_EVENT_KEY="..."

INNGEST_SIGNING_KEY="..."

Enable the pgvector extension in your PostgreSQL database:

CREATE EXTENSION IF NOT EXISTS vector;

Alternatively, you can do it with the generated Prisma migrations:

Prisma doesn't natively support pgvector's vector type, but we can use the Unsupported scalar:

generator client {

provider = "prisma-client-js"

}

datasource db {

provider = "postgresql"

url = env("POSTGRES_URL")

}

model documents {

id BigInt @id @default(autoincrement())

content String?

embedding Unsupported("vector")?

metadata Json? @default("{}")

createdAt DateTime @default(now())

updatedAt DateTime @updatedAt

}

- Create a migration:

pnpm prisma migrate dev --name add_vector_support

- In the generated migration file, add pgvector setup:

-- Enable pgvector extension

CREATE EXTENSION IF NOT EXISTS vector;

-- CreateTable

CREATE TABLE "documents" (

"id" BIGSERIAL NOT NULL,

"content" TEXT,

"embedding" vector(1536),

"metadata" JSONB DEFAULT '{}',

"createdAt" TIMESTAMP(3) NOT NULL DEFAULT CURRENT_TIMESTAMP,

"updatedAt" TIMESTAMP(3) NOT NULL,

CONSTRAINT "documents_pkey" PRIMARY KEY ("id")

);

-- Create HNSW index for faster similarity search

CREATE INDEX ON documents USING hnsw (embedding vector_cosine_ops);

- Apply the migration:

pnpm prisma migrate deploy

In a separate terminal, run the Inngest server:

pnpm run inngest

The server will start on http://127.0.0.1:8288.

In another separate terminal, run the Next.js development server:

pnpm dev

Visit http://localhost:3000 to see the app.
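Because the embedding column is declared with Prisma's Unsupported scalar, reads and writes against it generally go through raw SQL rather than the generated client. As a rough sketch of what such a query can look like against the documents table from the migration, the hypothetical helper below builds a parameterized query in the `{ text, values }` shape that node-pg's `client.query()` accepts. The names `buildSimilarityQuery`, `toVectorLiteral`, and `DISTANCE_OPERATORS` are assumptions for illustration and do not describe the VectorDB class's internals. The operators themselves are pgvector's.

```typescript
// Sketch only: builds a query object, it does not connect to a database.
type Distance = 'cosine' | 'euclidean' | 'inner_product';

// pgvector distance operators for each metric.
const DISTANCE_OPERATORS: Record<Distance, string> = {
  cosine: '<=>', // cosine distance
  euclidean: '<->', // L2 distance
  inner_product: '<#>', // negative inner product
};

// pgvector accepts vectors in the text form '[0.1,0.2,0.3]'.
function toVectorLiteral(embedding: number[]): string {
  if (embedding.length === 0 || embedding.some((v) => !Number.isFinite(v))) {
    throw new Error('Embedding must be a non-empty array of finite numbers');
  }
  return `[${embedding.join(',')}]`;
}

function buildSimilarityQuery(
  embedding: number[],
  distance: Distance = 'cosine',
  limit = 5,
): { text: string; values: Array<string | number> } {
  // The operator comes from a fixed lookup table, never from user input,
  // so interpolating it into the SQL text is safe. The vector and the
  // limit are passed as bound parameters.
  const op = DISTANCE_OPERATORS[distance];
  return {
    text:
      `SELECT id, content, metadata, embedding ${op} $1::vector AS distance ` +
      `FROM documents ORDER BY embedding ${op} $1::vector LIMIT $2`,
    values: [toVectorLiteral(embedding), limit],
  };
}

const query = buildSimilarityQuery([0.1, 0.2, 0.3], 'cosine', 10);
console.log(query.text);
console.log(query.values);
```

With a connected node-pg client, the result could be passed along as `client.query(query.text, query.values)`. Ordering by the operator expression in ascending order is the query shape that pgvector's HNSW indexes are designed to accelerate.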

The VectorDB class provides a flexible interface for vector operations:

// Initialize with table configuration

const vectorDB = new VectorDB(

{

tableName: 'documents',

columns: {

id: 'id',

vector: 'embedding',

content: 'content',

metadata: 'metadata',

createdAt: 'createdAt',

},

},

{

// Optional custom configuration

embedding: {

model: 'text-embedding-3-small',

dimensions: 1536,

distance: 'cosine',

},

chunking: {

method: 'paragraph',

fixedSize: 500,

},

search: {

defaultLimit: 5,

},

}

);

// Add text with chunking and metadata

await vectorDB.addText('Your content here', {

chunkingMethod: 'paragraph',

metadata: {

source: 'documentation',

category: 'setup',

},

});

// Search with filters and custom options

const results = await vectorDB.searchSimilar('your query', {

limit: 10,

distance: 'cosine',

filter: { category: 'setup' },

select: ['content', 'metadata->category as category'],

});

Three text chunking strategies are available:

// 1. Sentence-based chunking

const sentenceChunks = vectorDB.chunkText(text, 'sentence');

// 2. Paragraph-based chunking (split by double newline)

const paragraphChunks = vectorDB.chunkText(text, 'paragraph');

// 3. Fixed-size chunking (with word boundaries)

const fixedChunks = vectorDB.chunkText(text, 'fixed');

pgvector supports multiple similarity metrics:

// Cosine similarity (normalized, recommended for OpenAI embeddings)

await vectorDB.searchSimilar(query, { distance: 'cosine' });

// Euclidean distance

await vectorDB.searchSimilar(query, { distance: 'euclidean' });

// Inner product

await vectorDB.searchSimilar(query, { distance: 'inner_product' });

src/

├── app/ # Next.js App Router pages

│ ├── (chat)/ # Playground

│ ├── api/ # API routes

│ └── docs/ # Documentation pages

├── components/ # UI components

├── lib/

│ ├── db/ # Database utilities

│ │ ├── vector.ts # VectorDB class

│ │ └── config.ts # Configuration

│ └── actions/ # Server actions

└── inngest/ # Background jobs

- Node.js 18+

- PostgreSQL 15+ with pgvector extension

- OpenAI API key

- Vercel account (for deployment)

For better search performance, create appropriate indexes:

-- For cosine similarity (recommended)

CREATE INDEX ON documents USING hnsw (embedding vector_cosine_ops);

-- For L2 distance

CREATE INDEX ON documents USING hnsw (embedding vector_l2_ops);

- Fork the repository

- Create your feature branch (git checkout -b feature/amazing-feature)

- Commit your changes (git commit -m 'Add amazing feature')

- Push to the branch (git push origin feature/amazing-feature)

- Open a Pull Request

MIT License - see LICENSE file for details.

Built with ❤️ using Next.js and pgvector