This repository is the official implementation of Magic Clothing.

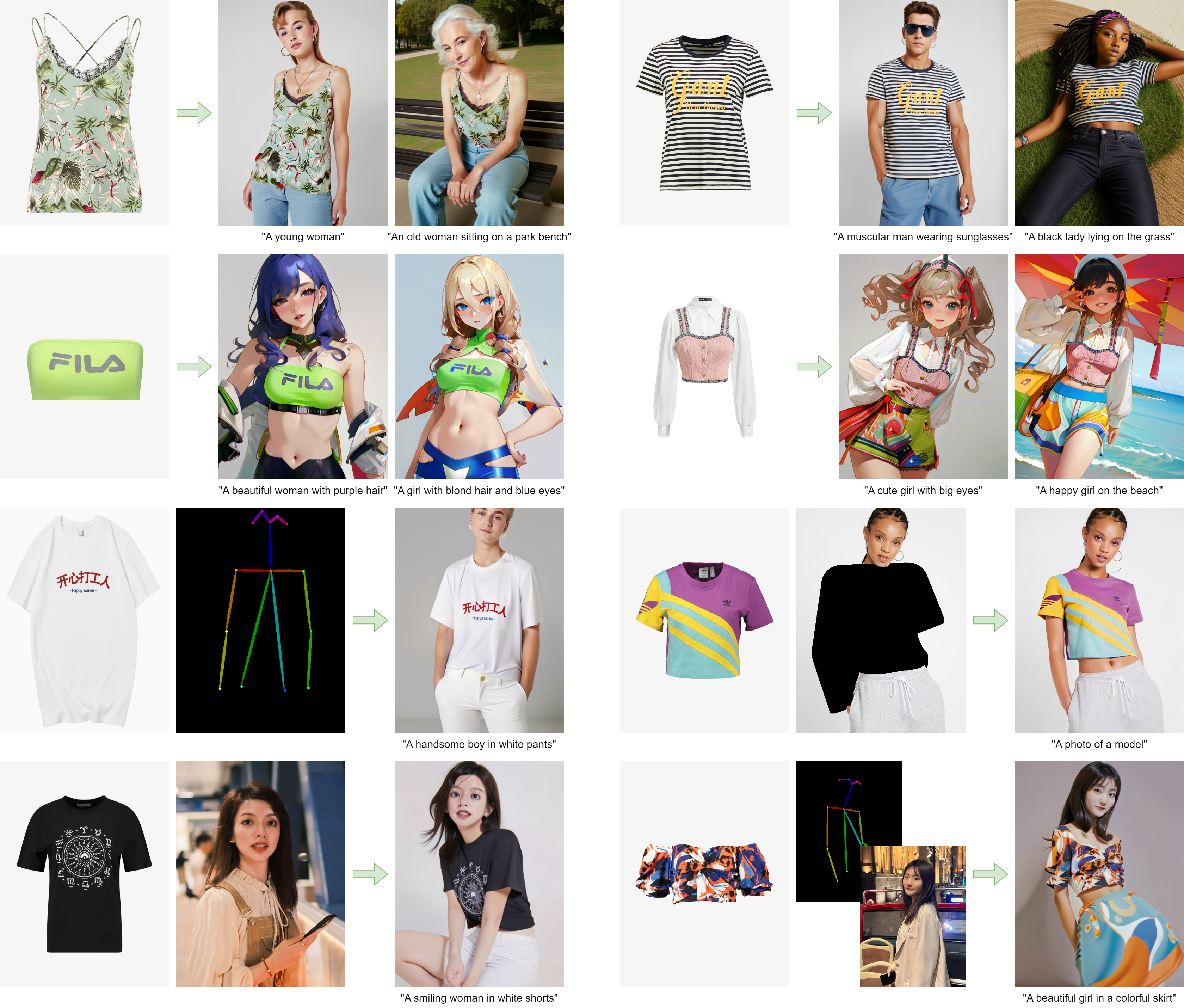

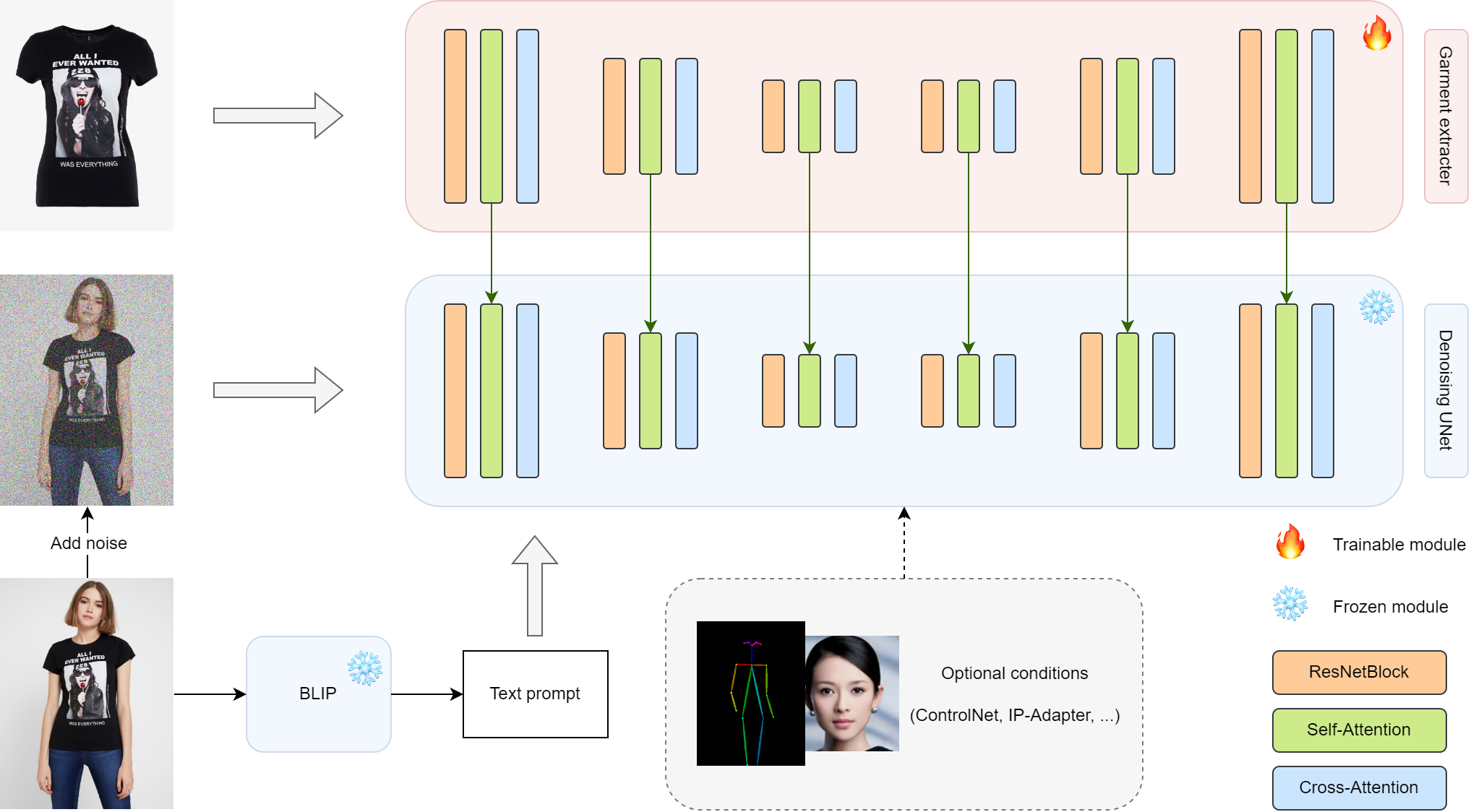

Magic Clothing is a branch of OOTDiffusion that focuses on controllable garment-driven image synthesis.

Please refer to our previous paper for more details.

Magic Clothing: Controllable Garment-Driven Image Synthesis (coming soon)

Weifeng Chen*, Tao Gu*, Yuhao Xu, Chengcai Chen

* Equal contribution

Xiao-i Research

🔥 [2024/3/8] We released the model weights trained at 768 resolution. The strength of the clothing and text prompts can be adjusted independently.

🔥 [2024/2/28] We support IP-Adapter-FaceID with ControlNet-Openpose! A portrait and a reference pose image can be used as additional conditions.

Have fun with gradio_ipadapter_openpose.py

🔥 [2024/2/23] We support IP-Adapter-FaceID now! A portrait image can be used as an additional condition.

Have fun with gradio_ipadapter_faceid.py

- Clone the repository

git clone https://github.com/ShineChen1024/MagicClothing.git

- Create a conda environment and install the required packages

conda create -n magicloth python==3.10

conda activate magicloth

pip install torch==2.0.1 torchvision==0.15.2 numpy==1.25.1 diffusers==0.25.1 opencv-python==4.9.0.80 transformers==4.31.0 gradio==4.16.0 safetensors==0.3.1 controlnet-aux==0.0.6 accelerate==0.21.0

- Python demo

512 weights

python inference.py --cloth_path [your cloth path] --model_path [your model path]

768 weights

python inference.py --cloth_path [your cloth path] --model_path [your model path] --enable_cloth_guidance

- Gradio demo

512 weights

python gradio_generate.py --model_path [your model path]

768 weights

python gradio_generate.py --model_path [your model path] --enable_cloth_guidance

- Paper

- Gradio demo

- Inference code

- Model weights

- Training code
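
In the demo commands above, the 512 and 768 weights differ only in the `--enable_cloth_guidance` flag, which is passed for the 768 weights. As a minimal sketch, the helper below assembles either command line from a resolution value. `build_demo_command` is a hypothetical name and not part of this repository, and the paths in the usage example are placeholders; only the script names and flags come from the commands listed above.

```python
import shlex


def build_demo_command(script, model_path, resolution, cloth_path=None):
    """Assemble a demo command line (hypothetical helper, not part of the repo).

    script:      "inference.py" or "gradio_generate.py"
    model_path:  path to the downloaded weights (placeholder in the example)
    resolution:  512 or 768, matching the weights being used
    cloth_path:  garment image path; only the Python demo takes this argument
    """
    if resolution not in (512, 768):
        raise ValueError("resolution must be 512 or 768")

    args = ["python", script]
    if cloth_path is not None:
        args += ["--cloth_path", cloth_path]
    args += ["--model_path", model_path]

    # Per the commands above, the 768 weights are run with cloth guidance enabled.
    if resolution == 768:
        args.append("--enable_cloth_guidance")
    return args


if __name__ == "__main__":
    # Placeholder paths; substitute your own files.
    cmd = build_demo_command(
        "inference.py", "path/to/model", 768, cloth_path="path/to/cloth.jpg"
    )
    print(shlex.join(cmd))
```

The returned list can be passed directly to `subprocess.run`, which avoids shell-quoting problems when a path contains spaces.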