Arxiv | Project Page | Saved Weights | Data

@article{viswanath2024reduced,
  title={Reduced-Order Neural Operators: Learning Lagrangian Dynamics on Highly Sparse Graphs},
  author={Viswanath, Hrishikesh and Chang, Yue and Berner, Julius and Chen, Peter Yichen and Bera, Aniket},
  journal={arXiv preprint arXiv:2407.03925},
  year={2024}
}

| Neural Operator Inference | Discretization-Agnostic Neural Field | Final Rendered Sim |
|---|---|---|

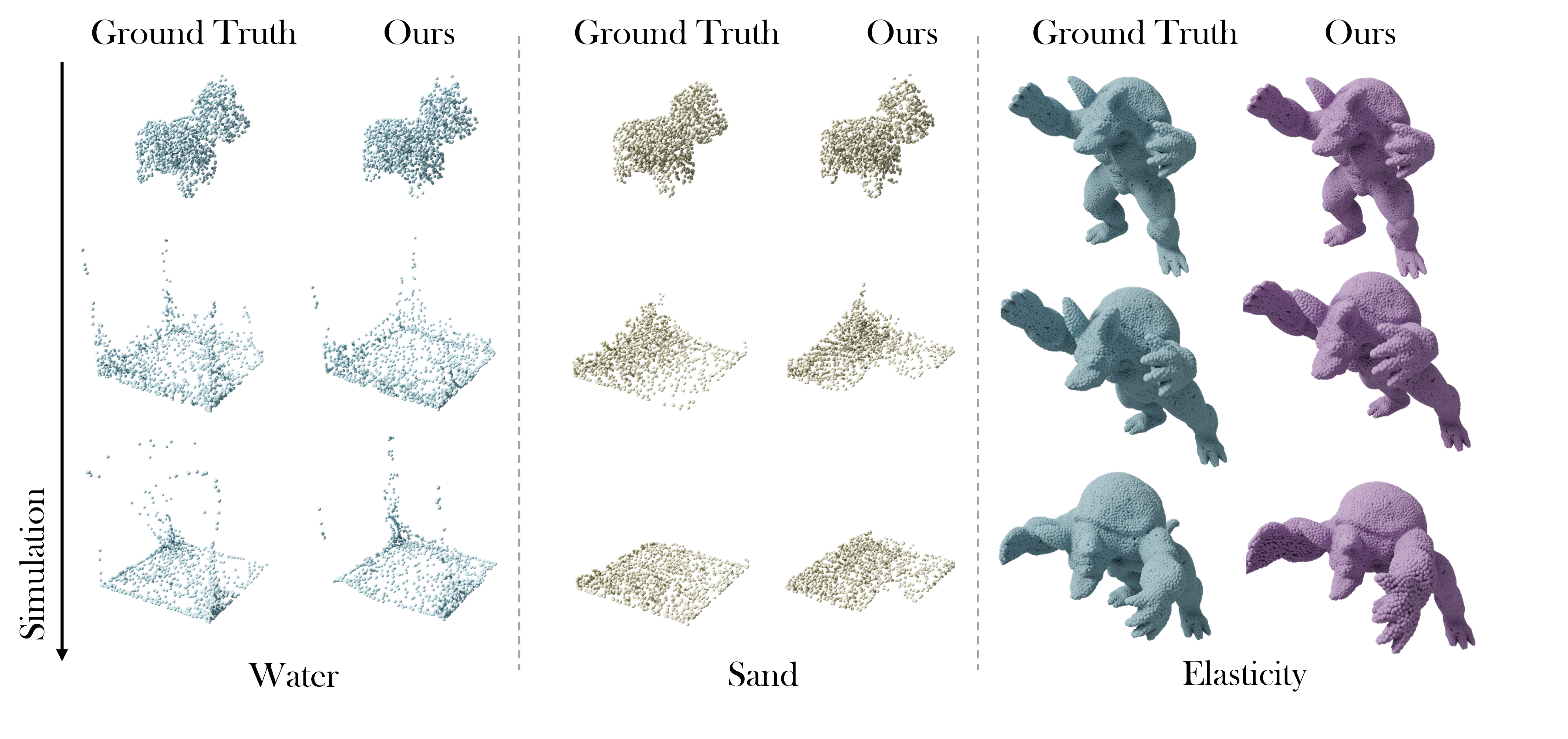

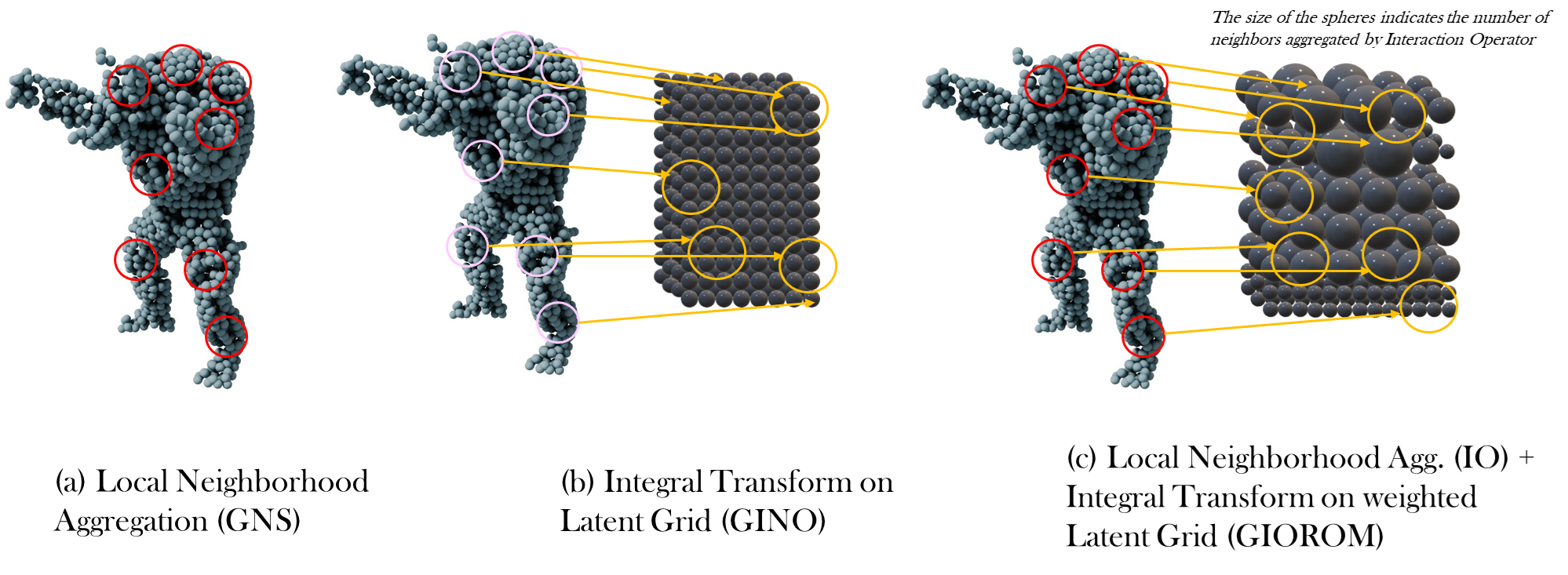

We present a neural operator architecture to simulate Lagrangian dynamics, such as fluid flows, granular flows, and elastoplasticity. Traditional numerical methods, such as the finite element method (FEM), suffer from long run times and large memory consumption. Approaches based on graph neural networks are faster, but they still suffer from long computation times on dense graphs, which are often required for high-fidelity simulations. Our model, GIOROM (Graph Interaction Operator for Reduced-Order Modeling), learns temporal dynamics within a reduced-order setting, capturing spatial features from a highly sparse graph representation of the input and generalizing to arbitrary spatial locations during inference. The model is geometry-aware and discretization-agnostic, and it can generalize to different initial conditions, velocities, and geometries after training. We show that point clouds on the order of 100,000 points can be inferred from sparse graphs with $\sim$1000 points, with negligible change in computation time. We empirically evaluate our model on elastic solids, Newtonian fluids, non-Newtonian fluids, Drucker-Prager granular flows, and von Mises elastoplasticity. On these benchmarks, our approach achieves a 25$\times$ speedup compared to other neural network-based physics simulators while delivering high-fidelity predictions of complex physical systems and showing better performance on most benchmarks. The code and the demos are available at https://github.com/HrishikeshVish/GIOROM.

conda create --name <env> --file requirements.txt

mkdir giorom_datasets

mkdir <datasetname>

We provide code to process the datasets released by GNS [1] and NCLAW [2].

- [1] Sanchez-Gonzalez et al., Learning to Simulate Complex Physics with Graph Neural Networks

- [2] Ma et al., Learning Neural Constitutive Laws from Motion Observations for Generalizable PDE Dynamics

cd Dataset\ Parsers/

python parseData.py --data_config nclaw_Sand

python parseData.py --data_config WaterDrop2D

python train.py --train_config train_configs_nclaw_Water

python train.py --batch_size 2 --epoch 100 --lr 0.0001 --noise 0.0003 --eval_interval 1500 --rollout_interval 1500 --sampling true --sampling_strategy fps --graph_type radius --connectivity_radius 0.032 --model giorom2d_large --dataset WaterDrop2D --load_checkpoint true --ckpt_name giorom2d_large_WaterDrop2D --dataset_rootdir giorom_datasets/
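The flags `--sampling_strategy fps`, `--graph_type radius`, and `--connectivity_radius 0.032` in the command above appear to describe how the sparse input graph is built. Assuming `fps` stands for farthest point sampling and `radius` means that nodes closer than the connectivity radius are connected, the idea can be sketched in plain Python as follows. This is an illustration only, not the repository's implementation; the function names `farthest_point_sampling` and `radius_graph` are hypothetical.

```python
import math


def farthest_point_sampling(points, k, start=0):
    """Greedily pick k point indices so that each new point is as far as
    possible from the points already selected (hypothetical helper)."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    selected = [start]
    # Distance from every point to its nearest already-selected point.
    min_dist = [math.dist(p, points[start]) for p in points]
    while len(selected) < k:
        nxt = max(range(len(points)), key=min_dist.__getitem__)
        selected.append(nxt)
        for i, p in enumerate(points):
            d = math.dist(p, points[nxt])
            if d < min_dist[i]:
                min_dist[i] = d
    return selected


def radius_graph(points, radius):
    """Return directed edges (i, j), i != j, for all pairs of points whose
    Euclidean distance is at most `radius` (hypothetical helper)."""
    edges = []
    for i, p in enumerate(points):
        for j, q in enumerate(points):
            if i != j and math.dist(p, q) <= radius:
                edges.append((i, j))
    return edges


# Toy 2D point cloud on a regular grid with spacing 0.01.
cloud = [(0.01 * x, 0.01 * y) for x in range(10) for y in range(10)]
keep = farthest_point_sampling(cloud, k=16)
sparse = [cloud[i] for i in keep]
edges = radius_graph(sparse, radius=0.032)
print(len(sparse), "nodes,", len(edges), "directed edges")
```

Both helpers are quadratic in the number of points, which is fine for a toy example; a real pipeline would typically rely on a vectorized or tree-based neighbor search.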

python eval.py --eval_config train_configs_Water2D

The evaluation script does not save the rollout output as a PyTorch tensor. A snippet that does this can be found in eval_3d.ipynb.

To render the results with Polyscope or Blender, use the following commands. The scripts need to be modified first: set the appropriate input and output paths in the code.

cd Viz

python createObj.py

python blender_rendering.py
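The contents of createObj.py are not reproduced here. As a rough illustration of what a per-frame export of particle positions can look like, the sketch below writes each frame of a rollout as a Wavefront OBJ file containing only vertex lines (`v x y z`). The function names, the `frame_0000.obj` naming scheme, and the output directory are hypothetical and are not taken from the repository; the rollout is assumed to be a nested list of positions (for example, a saved tensor converted with `.tolist()`).

```python
import os


def write_point_cloud_obj(positions, path):
    """Write 2D or 3D particle positions as OBJ vertex lines.
    2D points are placed in the z = 0 plane."""
    with open(path, "w") as f:
        for p in positions:
            x, y = p[0], p[1]
            z = p[2] if len(p) > 2 else 0.0
            f.write(f"v {x:.6f} {y:.6f} {z:.6f}\n")


def write_rollout(frames, out_dir, prefix="frame"):
    """Write one OBJ file per time step and return the list of file paths."""
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for t, positions in enumerate(frames):
        path = os.path.join(out_dir, f"{prefix}_{t:04d}.obj")
        write_point_cloud_obj(positions, path)
        paths.append(path)
    return paths
```

A call such as `write_rollout(rollout, "obj_frames")` would then produce one file per time step, which is where the paths mentioned above would need to point.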

Neural Fields Inference: We follow the code and training strategy provided by LiCROM.

We support the existing datasets provided by GNS and have curated a new dataset with the NCLAW framework. We will upload the new dataset soon.

Currently tested datasets from Learning to Simulate Complex Physics with Graph Neural Networks (GNS):

{DATASET_NAME} is one of the datasets following the naming used in the paper:

- WaterDrop
- Water
- Sand
- Goop
- MultiMaterial
- Water-3D
- Sand-3D
- Goop-3D

Datasets are available to download via:

- Metadata file with dataset information (sequence length, dimensionality, box bounds, default connectivity radius, statistics for normalization, ...): https://storage.googleapis.com/learning-to-simulate-complex-physics/Datasets/{DATASET_NAME}/metadata.json

- TFRecords containing data for all trajectories (particle types, positions, global context, ...): https://storage.googleapis.com/learning-to-simulate-complex-physics/Datasets/{DATASET_NAME}/{DATASET_SPLIT}.tfrecord

Where:

- {DATASET_SPLIT} is one of: train, valid, test
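The URL templates above can be expanded programmatically. The following is a minimal sketch that builds the four download URLs for one dataset and optionally fetches them with the Python standard library. The helper names and the target layout `giorom_datasets/<dataset_name>/` are assumptions made for illustration; check the dataset parser configuration for the directory structure the code actually expects.

```python
from pathlib import Path
from urllib.request import urlretrieve

BASE_URL = "https://storage.googleapis.com/learning-to-simulate-complex-physics/Datasets"
SPLITS = ("train", "valid", "test")


def dataset_urls(dataset_name):
    """Map each file name to its download URL for the given dataset."""
    urls = {"metadata.json": f"{BASE_URL}/{dataset_name}/metadata.json"}
    for split in SPLITS:
        urls[f"{split}.tfrecord"] = f"{BASE_URL}/{dataset_name}/{split}.tfrecord"
    return urls


def download_dataset(dataset_name, root="giorom_datasets"):
    """Download metadata and all splits into root/dataset_name (assumed layout)."""
    target = Path(root) / dataset_name
    target.mkdir(parents=True, exist_ok=True)
    for filename, url in dataset_urls(dataset_name).items():
        urlretrieve(url, str(target / filename))
    return target
```

For example, `download_dataset("WaterDrop")` would fetch `metadata.json` together with the `train`, `valid`, and `test` TFRecords for the WaterDrop dataset.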