This is the official repo for the paper: CogVideo: Large-scale Pretraining for Text-to-Video Generation via Transformers.

News! The demo for CogVideo is available!

It's also integrated into Hugging Face Spaces 🤗 using Gradio. Try out the Web Demo.

News! The code and model for text-to-video generation are now available! Currently we only support simplified Chinese input.


- Read our paper CogVideo: Large-scale Pretraining for Text-to-Video Generation via Transformers on arXiv for a formal introduction.

- Try our demo at https://models.aminer.cn/cogvideo/

- Run our pretrained models for text-to-video generation. Please use an A100 GPU.

- Cite our paper if you find our work helpful:

@article{hong2022cogvideo,
  title={CogVideo: Large-scale Pretraining for Text-to-Video Generation via Transformers},
  author={Hong, Wenyi and Ding, Ming and Zheng, Wendi and Liu, Xinghan and Tang, Jie},
  journal={arXiv preprint arXiv:2205.15868},
  year={2022}
}

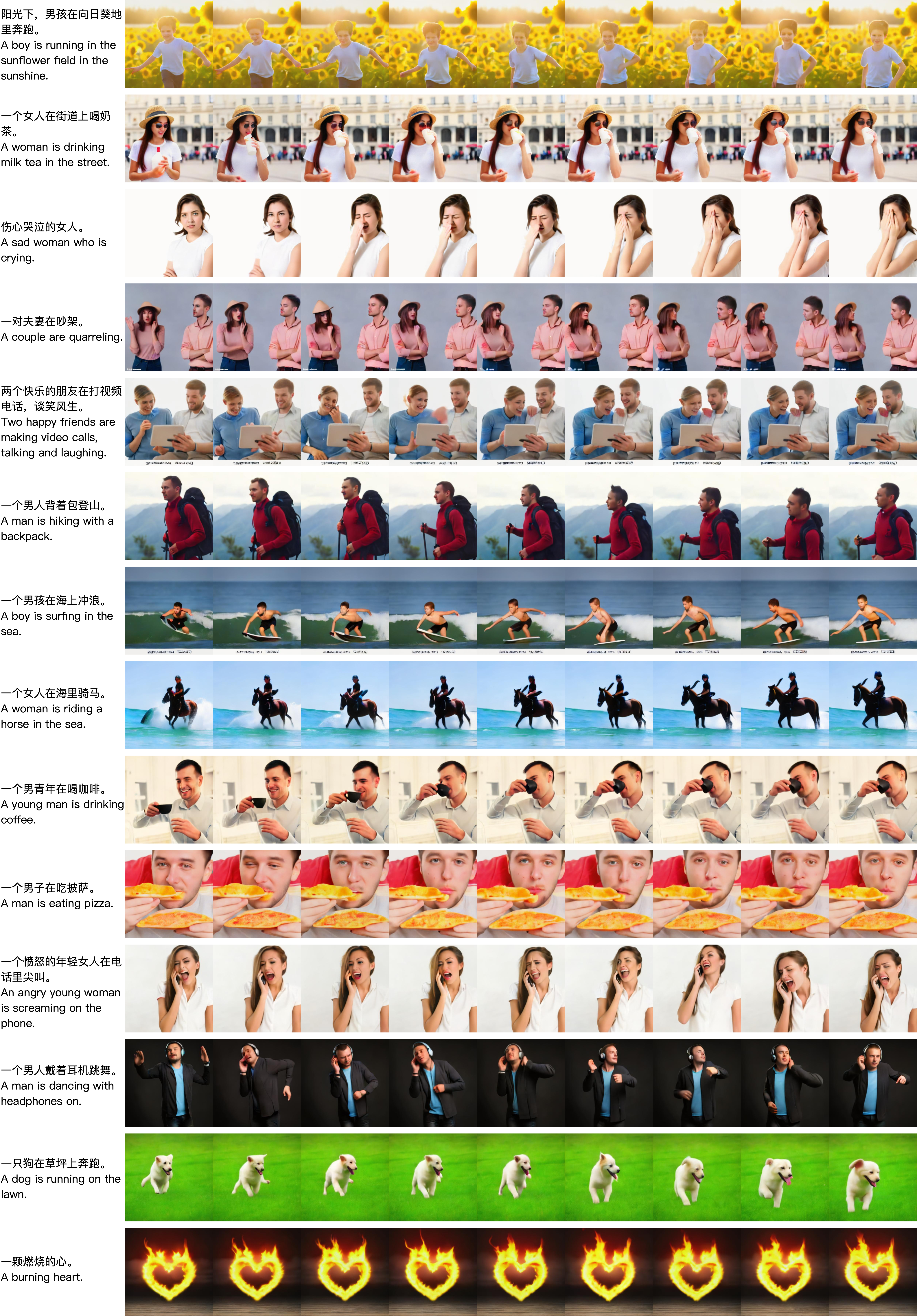

The demo for CogVideo is at https://models.aminer.cn/cogvideo/, where you can get hands-on practice with text-to-video generation. The original input is in Chinese.

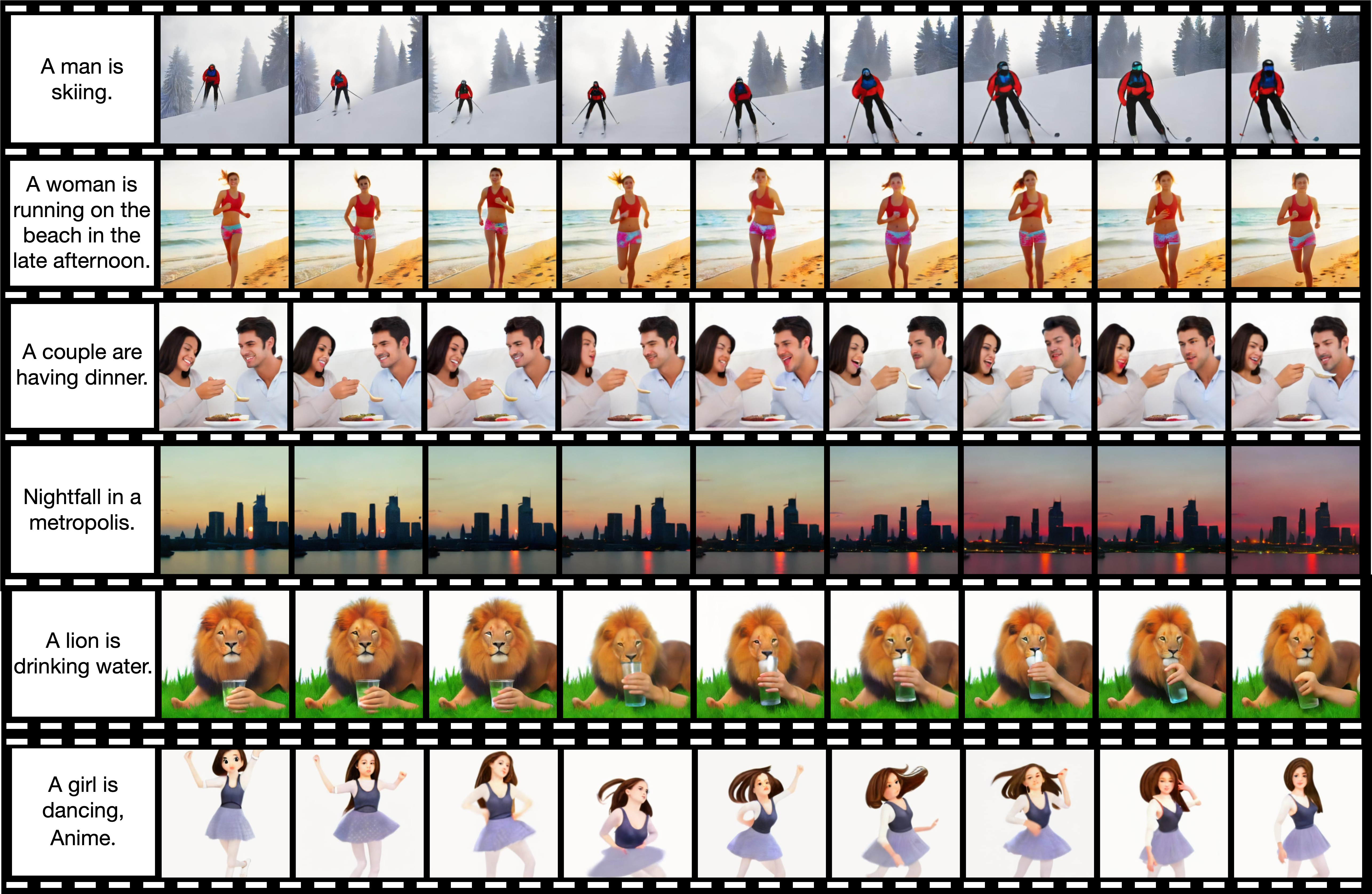

Video samples generated by CogVideo. The actual text inputs are in Chinese. Each sample is a 4-second clip of 32 frames, and here we sample 9 frames uniformly for display purposes.

CogVideo is able to generate relatively high-frame-rate videos. A 4-second clip of 32 frames is shown below.
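The numbers above imply a frame rate of 32 frames / 4 s = 8 fps. The short Python sketch below shows that arithmetic and one way to pick 9 display frames "uniformly" from a 32-frame clip. The README does not say exactly which indices were used for the figure, so evenly spaced indices rounded to the nearest frame are an assumption here, and the helper names are hypothetical.

```python
# Sketch: frame-rate arithmetic and uniform frame sampling for display.
# Assumption: "uniform" means evenly spaced indices (first and last frame
# included), rounded half up; the exact indices used in the figure are unknown.

def frame_rate(num_frames: int, duration_s: float) -> float:
    """Frames per second for a clip of num_frames lasting duration_s seconds."""
    return num_frames / duration_s


def uniform_indices(num_frames: int, num_samples: int) -> list:
    """Pick num_samples evenly spaced frame indices out of num_frames."""
    if num_samples < 2 or num_samples > num_frames:
        raise ValueError("need 2 <= num_samples <= num_frames")
    step = (num_frames - 1) / (num_samples - 1)
    # Round half up so the result does not depend on Python's banker's rounding.
    return [int(i * step + 0.5) for i in range(num_samples)]


if __name__ == "__main__":
    print(frame_rate(32, 4.0))     # 8.0
    print(uniform_indices(32, 9))  # [0, 4, 8, 12, 16, 19, 23, 27, 31]
```

Including both endpoints means the display strip always starts at the first frame and ends at the last one of the clip.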

- Hardware: Linux servers with NVIDIA A100s are recommended, but it is also okay to run the pretrained models with smaller `--max-inference-batch-size` and `--batch-size` values, or to train smaller models, on less powerful GPUs.
- Environment: install dependencies via `pip install -r requirements.txt`.
- LocalAttention: make sure you have CUDA installed, then compile the local attention kernel with `pip install git+https://github.com/Sleepychord/Image-Local-Attention`.

Alternatively, you can use Docker to handle all dependencies:

- Run `./build_image.sh`
- Run `./run_image.sh`
- Run `./install_image_local_attention`

Optionally, you can then commit the container to a new image so that you do not have to install Image-Local-Attention again.

Our code will automatically detect the models in, or download them into, the path defined by the environment variable SAT_HOME. You can also manually download CogVideo-Stage1, CogVideo-Stage2 and CogView2-dsr and place them under SAT_HOME (in folders named cogvideo-stage1, cogvideo-stage2 and cogview2-dsr).
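If you download the checkpoints by hand, a quick check of the SAT_HOME layout can save a failed run. The Python sketch below uses only the folder names given in this README; the helper `missing_model_folders` is hypothetical and not part of the CogVideo code base, and it only checks that the folders exist, not that their contents are complete.

```python
# Sketch: report which expected model folders are missing under SAT_HOME.
# Folder names come from the README; this helper is hypothetical and only
# checks that each folder exists, not that its checkpoint files are complete.
import os
from pathlib import Path
from typing import Optional

EXPECTED_FOLDERS = ("cogvideo-stage1", "cogvideo-stage2", "cogview2-dsr")


def missing_model_folders(sat_home: Optional[str] = None) -> list:
    """Return the expected model folders that do not exist under SAT_HOME."""
    root = sat_home if sat_home is not None else os.environ.get("SAT_HOME")
    if not root:
        raise RuntimeError("SAT_HOME is not set")
    base = Path(root)
    return [name for name in EXPECTED_FOLDERS if not (base / name).is_dir()]


if __name__ == "__main__":
    missing = missing_model_folders()
    if missing:
        print("Not found under SAT_HOME:", ", ".join(missing))
    else:
        print("All model folders found.")
```

Any folder reported as missing would, per the README, be downloaded automatically on first use.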

To generate videos, run `./script/inference_cogvideo_pipeline.sh`.

The main arguments for inference are:

- `--input-source [path or "interactive"]`: the path of the input file, with one query per line. A CLI is launched when using "interactive".
- `--output-path [path]`: the folder containing the results.
- `--batch-size [int]`: the number of samples generated per query.
- `--max-inference-batch-size [int]`: maximum batch size per forward pass. Reduce it if you run out of GPU memory (OOM).
- `--stage1-max-inference-batch-size [int]`: maximum batch size per forward pass in Stage 1. Reduce it if OOM.
- `--both-stages`: run Stage 1 and Stage 2 sequentially.
- `--use-guidance-stage1`: use classifier-free guidance in Stage 1, which is strongly suggested for better results.
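The Python sketch below prepares a query file in the one-query-per-line format expected by `--input-source` and assembles the flags listed above. The helpers are hypothetical. How the flags reach the pipeline (editing them in the shell script, or the script forwarding extra arguments) is an assumption to verify against the script itself. `forward_passes` likewise assumes that `--batch-size` samples are split into chunks of at most `--max-inference-batch-size`.

```python
# Sketch: build an --input-source file and the inference flag list.
# Flag names come from the README; the helpers and the chunking assumption
# in forward_passes are hypothetical, not taken from the CogVideo code.
import math
from pathlib import Path


def write_queries(path: str, queries: list) -> None:
    """Write one non-empty query per line (Chinese input only, per the README)."""
    cleaned = [q.strip() for q in queries if q.strip()]
    Path(path).write_text("\n".join(cleaned) + "\n", encoding="utf-8")


def build_inference_args(input_source: str, output_path: str, batch_size: int,
                         max_inference_batch_size: int,
                         stage1_max_inference_batch_size: int,
                         both_stages: bool = True,
                         use_guidance_stage1: bool = True) -> list:
    """Assemble the command-line flags described in the README."""
    args = [
        "--input-source", input_source,
        "--output-path", output_path,
        "--batch-size", str(batch_size),
        "--max-inference-batch-size", str(max_inference_batch_size),
        "--stage1-max-inference-batch-size", str(stage1_max_inference_batch_size),
    ]
    if both_stages:
        args.append("--both-stages")
    if use_guidance_stage1:
        args.append("--use-guidance-stage1")  # strongly suggested in the README
    return args


def forward_passes(batch_size: int, max_inference_batch_size: int) -> int:
    """Forward passes per query, assuming samples are chunked by the cap."""
    return math.ceil(batch_size / max_inference_batch_size)


if __name__ == "__main__":
    # "一只狮子在喝水" means "A lion is drinking water."
    write_queries("queries.txt", ["一只狮子在喝水"])
    print(" ".join(build_inference_args("queries.txt", "output", 16, 4, 2)))
    print(forward_passes(16, 4))  # 4
```

Under that chunking assumption, lowering `--max-inference-batch-size` to avoid OOM trades memory for more forward passes per query.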

We recommend setting the environment variable SAT_HOME to the path where the downloaded models should be stored.

Currently only Chinese input is supported.