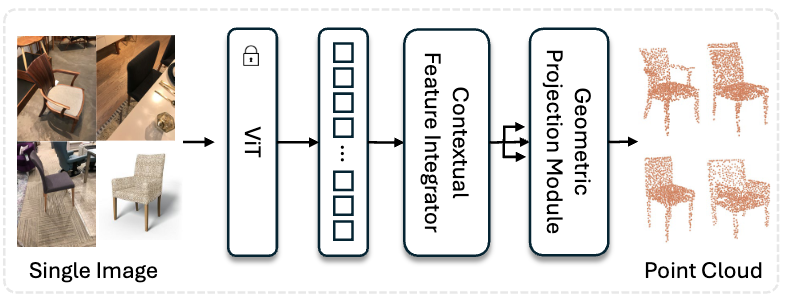

RGB2Point has been accepted to WACV 2025. It takes a single unposed RGB image and generates a 3D point cloud. See the paper for more details.

RGB2Point is tested on Ubuntu 22 and Windows 11. Python 3.9+ and PyTorch 2.0+ are required.

Assuming PyTorch 2.0+ with CUDA is installed, run:

pip install timm

pip install accelerate

pip install wandb

pip install open3d

pip install scikit-learn
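Before training, it can help to confirm that the environment matches the requirements above. The following is a minimal sketch, not part of the repository: the function names and the `REQUIRED_MODULES` list are assumptions made for illustration, and the check only looks for the modules without importing them or verifying their versions.

```python
import importlib.util
import sys

# Import names for the packages installed above. Note that scikit-learn is
# imported as "sklearn". "torch" is listed because PyTorch 2.0+ is required.
REQUIRED_MODULES = ["torch", "timm", "accelerate", "wandb", "open3d", "sklearn"]


def python_is_supported(version_info=sys.version_info):
    """Return True if the interpreter is Python 3.9 or newer."""
    return tuple(version_info[:2]) >= (3, 9)


def missing_modules(names):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def report():
    """Print a short summary of the environment check."""
    print("Python 3.9+:", python_is_supported())
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing modules:", ", ".join(missing) if missing else "none")
```

Calling `report()` prints whether the Python version is new enough and which of the listed modules, if any, still need to be installed.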

python train.py

Please download 1) the point cloud data zip file, 2) the rendered images, and 3) the train/test filenames.

Next, set the paths to the downloaded files 1), 2), and 3) at L#36, L#38, L#14, and L#16.
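A wrong path is easier to catch before training starts than partway through a run. The sketch below is one way to check the three download locations. The helper `find_missing_paths` and the example paths are hypothetical placeholders, not names used by the repository, so substitute the locations you actually chose.

```python
from pathlib import Path


def find_missing_paths(paths):
    """Return {label: path} for every entry that does not exist on disk."""
    return {label: p for label, p in paths.items() if not Path(p).exists()}


# Hypothetical placeholder locations; replace them with your download paths.
example_paths = {
    "point cloud data": "data/point_clouds",
    "rendered images": "data/rendered_images",
    "train/test filenames": "data/splits",
}

# Report each location that could not be found.
for label, p in find_missing_paths(example_paths).items():
    print(f"Not found: {label} -> {p}")
```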

Download the model trained on the Chair, Airplane, and Car categories of ShapeNet:

https://drive.google.com/file/d/1Z5luy_833YV6NGiKjGhfsfEUyaQkgua1/view?usp=sharing

python inference.py

Change image_path and save_path in inference.py accordingly.
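To inspect the result, the generated point cloud can be opened with Open3D, which is already among the dependencies. This is a minimal sketch under one assumption: that the file written to save_path is in a format Open3D can read, such as `.ply`. The helper names are hypothetical and not part of the repository.

```python
from pathlib import Path

# Point cloud file extensions that Open3D's reader supports.
OPEN3D_POINT_CLOUD_EXTENSIONS = {".xyz", ".xyzn", ".xyzrgb", ".pts", ".ply", ".pcd"}


def is_readable_by_open3d(path):
    """Return True if the file extension is a point cloud format Open3D reads."""
    return Path(path).suffix.lower() in OPEN3D_POINT_CLOUD_EXTENSIONS


def view_point_cloud(path):
    """Load a point cloud file and show it in an interactive Open3D window."""
    import open3d as o3d  # imported here so the helper above works without it

    if not is_readable_by_open3d(path):
        raise ValueError(f"Unsupported point cloud format: {path}")
    pcd = o3d.io.read_point_cloud(str(path))
    print(f"Loaded {len(pcd.points)} points from {path}")
    o3d.visualization.draw_geometries([pcd])
```

For example, `view_point_cloud("output.ply")` would open a viewer window, where `output.ply` stands in for whatever save_path you set.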

If you find this paper and code useful in your research, please consider citing:

@article{lee2024rgb2point,
  title={RGB2Point: 3D Point Cloud Generation from Single RGB Images},
  author={Lee, Jae Joong and Benes, Bedrich},
  journal={arXiv preprint arXiv:2407.14979},
  year={2024}
}