This repository runs the audio-visual speaker diarization pipeline proposed in the paper "Spot the conversation: speaker diarisation in the wild" (Interspeech 2020). The pipeline was used to create the VoxConverse dataset.

- First, clone the repository.

git clone https://github.com/JaesungHuh/av-diarization.git

cd av-diarization

- Install packages.

conda create -n avdiarizer python=3.10 -y

conda activate avdiarizer

pip install --upgrade pip # enable PEP 660 support

pip install -e .

The pipeline also requires the command-line tool ffmpeg to be installed on your system. It is available from most package managers:

# on Ubuntu or Debian

sudo apt update && sudo apt install ffmpeg

# on Arch Linux

sudo pacman -S ffmpeg

# on macOS using Homebrew (https://brew.sh/)

brew install ffmpeg

# on Windows using Chocolatey (https://chocolatey.org/)

choco install ffmpeg

# on Windows using Scoop (https://scoop.sh/)

scoop install ffmpeg
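Before running the pipeline, it can help to confirm that ffmpeg is actually discoverable on your PATH. The snippet below is a minimal sketch using only the Python standard library; the helper name `find_tool` is hypothetical and not part of this repository.

```python
import shutil


def find_tool(name: str = "ffmpeg"):
    """Return the absolute path of an executable on PATH, or None if it is missing."""
    return shutil.which(name)


if __name__ == "__main__":
    path = find_tool("ffmpeg")
    if path is None:
        print("ffmpeg was not found on PATH; install it with one of the commands above.")
    else:
        print(f"ffmpeg found at {path}")
```

If the check reports that ffmpeg is missing right after installation, opening a new terminal session usually refreshes PATH.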

git pull

pip install -e .

Run the following command. The diarization results will be saved in [PATH OF OUTPUT DIRECTORY].

python diarize.py -i [PATH OF VIDEOFILE] -o [PATH OF OUTPUT DIRECTORY]

# example : python diarize.py -i sample/sample.mp4 -o output

To visualize the face detection and SyncNet results, add --visualize to the command. The resulting video file will be saved in the output directory.

python diarize.py -i [PATH OF VIDEOFILE] -o [PATH OF OUTPUT DIRECTORY] --visualize

Argparse arguments

-i, --input (default : sample/sample.mp4): The input video file to diarize.

--cache_dir (default : None): The directory to store intermediate results. If None, the cache is stored in a temporary directory created with tempfile and removed after the process finishes. We advise setting this to a path with fast I/O.

--ckpt_dir (default : None): The directory to store the model checkpoints. If None, the checkpoints are downloaded from the internet and stored in ~/.cache/voxconverse.

-o, --out_dir (default : output): The directory to store the output results.

--visualize: If this flag is provided, the face detection and SyncNet results will be visualized and saved in the output directory. Otherwise, no visualization is performed.

--vad (default : pywebrtcvad): Type of voice activity detection model.

--speaker_model (default : resnetse34): Type of speaker recognition model.
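The flags listed above can be sketched as an argparse parser. This is a hypothetical reconstruction based only on the documented names and defaults, not the actual contents of diarize.py; the function names `build_parser` and `run_with_cache` are assumptions made for illustration. The second function shows the documented `--cache_dir` behaviour, where a temporary directory is created and removed when no directory is given.

```python
import argparse
import tempfile


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser that mirrors the documented flags and defaults."""
    parser = argparse.ArgumentParser(description="Audio-visual speaker diarization (sketch)")
    parser.add_argument("-i", "--input", default="sample/sample.mp4",
                        help="input video file to diarize")
    parser.add_argument("--cache_dir", default=None,
                        help="directory for intermediate results")
    parser.add_argument("--ckpt_dir", default=None,
                        help="directory for model checkpoints")
    parser.add_argument("-o", "--out_dir", default="output",
                        help="directory for output results")
    parser.add_argument("--visualize", action="store_true",
                        help="save the face detection / SyncNet visualization")
    parser.add_argument("--vad", default="pywebrtcvad",
                        help="voice activity detection model")
    parser.add_argument("--speaker_model", default="resnetse34",
                        help="speaker recognition model")
    return parser


def run_with_cache(args: argparse.Namespace) -> str:
    """Illustrate the documented --cache_dir behaviour; returns the directory used."""
    if args.cache_dir is not None:
        # A user-supplied directory is left in place after the run.
        return args.cache_dir
    # Without --cache_dir, a temporary directory is created and deleted on exit.
    with tempfile.TemporaryDirectory() as tmp_dir:
        # ... intermediate results would be written under tmp_dir here ...
        return tmp_dir


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(vars(args))
```

Because the temporary directory disappears once the `with` block exits, passing an explicit `--cache_dir` is the way to keep intermediate results for inspection.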

To use the original version that was used to create the VoxConverse dataset in 2020, run the following command.

python diarize.py -i [PATH OF VIDEOFILE] -o [PATH OF OUTPUT DIRECTORY] --vad pywebrtcvad --speaker_model resnetse34

The original version was developed in 2020. Since then, many new models and libraries have been released, so updated voice activity detection and speaker recognition models can now be used. (Note: SyncNet is trained on cropped faces from S3FD, so other face detection or embedding models cannot be used.)

Run the following command to use the new version.

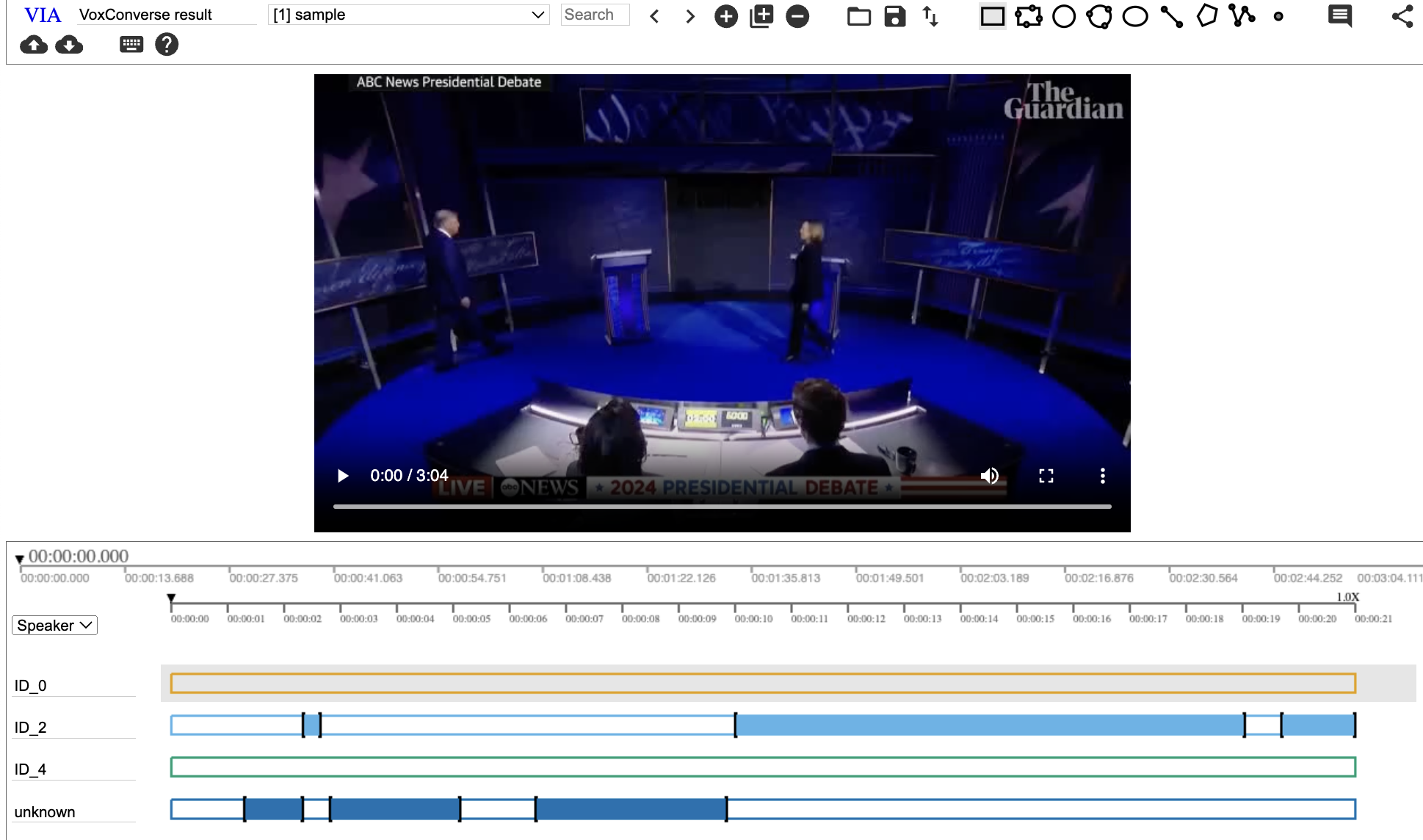

python diarize.py -i [PATH OF VIDEOFILE] -o [PATH OF OUTPUT DIRECTORY] --vad silero --speaker_model ecapa-tdnn

The pipeline outputs an RTTM file and a JSON file. The JSON file contains the diarization results, which can be visualized using VIA Video Annotator.

- Open the VIA Video Annotator.

- Select the JSON file you want to visualize.

- You might see an error message like the one below. Click Choose file and select the original video (or the video with visualization).
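Besides viewing the JSON file in VIA, the RTTM output can be inspected programmatically. The sketch below sums the speaking time per speaker, assuming the pipeline writes standard RTTM `SPEAKER` lines (file ID, channel, onset, duration, then the speaker label in the eighth field). The function name `speaking_time_per_speaker` and the example segments are made up for illustration and are not real pipeline output.

```python
from collections import defaultdict


def speaking_time_per_speaker(rttm_text: str) -> dict:
    """Sum segment durations (in seconds) per speaker label from RTTM text."""
    totals = defaultdict(float)
    for line in rttm_text.splitlines():
        fields = line.split()
        # Assumed layout: SPEAKER <file> <chan> <onset> <dur> <NA> <NA> <speaker> <NA> <NA>
        if len(fields) < 8 or fields[0] != "SPEAKER":
            continue  # skip blank lines and other record types
        duration = float(fields[4])
        speaker = fields[7]
        totals[speaker] += duration
    return dict(totals)


if __name__ == "__main__":
    # Made-up segments, for illustration only.
    example = """\
SPEAKER sample 1 0.50 2.00 <NA> <NA> spk00 <NA> <NA>
SPEAKER sample 1 2.75 1.25 <NA> <NA> spk01 <NA> <NA>
SPEAKER sample 1 4.00 1.50 <NA> <NA> spk00 <NA> <NA>
"""
    for speaker, seconds in sorted(speaking_time_per_speaker(example).items()):
        print(f"{speaker}: {seconds:.2f} s")
```

To apply it to a real result, read the RTTM file from the output directory with `open(path).read()` and pass the text to the function.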

This code builds on the following prior work.

- S3FD : [paper] [code]

- SyncNet : [paper] [code]

- Speechbrain project : [paper] [code]

- VoxCeleb_trainer : [paper] [code]

- Silero-vad : [code]

- Pywebrtcvad : [code]

- ECAPA-TDNN : [paper] [speechbrain-pretrained-model]

This guy developed the initial version of the pipeline.

Please cite the following paper if you make use of this code.

@article{chung2020spot,

title={Spot the conversation: speaker diarisation in the wild},

author={Chung, Joon Son and Huh, Jaesung and Nagrani, Arsha and Afouras, Triantafyllos and Zisserman, Andrew},

booktitle={Interspeech},

year={2020}

}