This directory is forked from mmdetection3d and adapted to load feather files as input for training and evaluating the PointPillars model. Performance is evaluated automatically with the evaluation code from SeMoLi. For training we use the standard PointPillars hyperparameters for the Waymo Open Dataset. We support training on the Waymo Open Dataset and the Argoverse 2 (AV2) dataset. During training and evaluation we only use points and labels within a 100 m × 40 m rectangle around the ego vehicle.
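The region restriction can be illustrated with a small awk filter. This is a sketch, not the repository's implementation. It assumes the rectangle is centred on the ego vehicle, with the 100 m side along the x axis and the 40 m side along the y axis of the ego frame, and that each input line holds one point as `x y z` in metres. The function name `filter_ego_rectangle` is hypothetical.

```shell
# Hypothetical illustration (not code from this repository): keep only points
# inside a 100 m x 40 m rectangle centred on the ego vehicle.
# Assumptions: input lines are "x y z" in metres in the ego frame,
# |x| <= 50 covers the 100 m side and |y| <= 20 covers the 40 m side.
filter_ego_rectangle() {
  awk -v half_x=50 -v half_y=20 '
    function abs(v) { return v < 0 ? -v : v }
    abs($1) <= half_x && abs($2) <= half_y { print }
  '
}
```

For example, `printf '10 5 0\n60 0 0\n' | filter_ego_rectangle` keeps only the first point.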

If you installed the conda environment from SeMoLi, all required libraries are already installed and you can run the code after activating it with `conda activate SeMoLi`. Otherwise, prepare a conda environment by running the following:

conda create -n mmdetection3d python=3.9

conda activate mmdetection3d

bash setup.sh
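Before launching a job it can help to confirm that one of the two environments above is active. A minimal sketch, relying on the `CONDA_DEFAULT_ENV` variable that `conda activate` sets; the function name `require_semoli_env` is hypothetical and not part of this repository.

```shell
# Hypothetical helper: fail early unless the SeMoLi or mmdetection3d conda
# environment is active (conda activate exports CONDA_DEFAULT_ENV).
require_semoli_env() {
  case "${CONDA_DEFAULT_ENV:-}" in
    SeMoLi|mmdetection3d) return 0 ;;
    *)
      echo "error: activate the SeMoLi or mmdetection3d conda environment first" >&2
      return 1
      ;;
  esac
}
```

Calling `require_semoli_env || exit 1` at the top of a job script stops it before any GPU time is spent.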

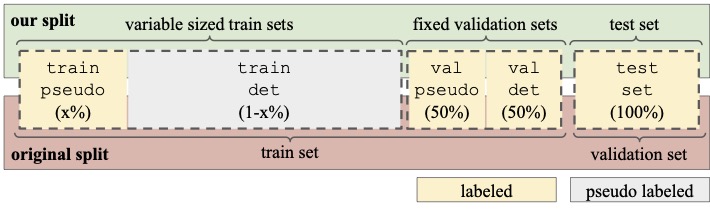

In this repository we follow the data split convention of SeMoLi given by:

For training and evaluation, you can use either pseudo-labels or ground truth labels, each stored in a feather file. Set the train and validation label paths to the corresponding feather files:

export TRAIN_LABELS=<train_label_path>

export VAL_LABELS=<val_label_path>
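A typo in one of these paths usually only surfaces after the distributed job has started. The check below is a minimal sketch that fails fast instead; `check_label_file` is a hypothetical helper, not part of this repository, and it only verifies that the value is non-empty, ends in `.feather`, and names an existing file.

```shell
# Hypothetical helper: verify that a label variable is set and points to an
# existing .feather file before starting a long (distributed) run.
# Usage: check_label_file NAME VALUE
check_label_file() {
  local name="$1" path="$2"
  if [ -z "$path" ]; then
    echo "error: $name is not set" >&2
    return 1
  fi
  case "$path" in
    *.feather) ;;
    *)
      echo "error: $name=$path does not end in .feather" >&2
      return 1
      ;;
  esac
  if [ ! -f "$path" ]; then
    echo "error: $name=$path does not exist" >&2
    return 1
  fi
}
```

For example, `check_label_file TRAIN_LABELS "$TRAIN_LABELS" && check_label_file VAL_LABELS "$VAL_LABELS"` returns non-zero and prints the reason if either path is unusable.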

For validation, set the path to a feather file containing ground truth data. To evaluate on the `val_detector` split from SeMoLi, use the feather file containing the ground truth training data. To evaluate on the real validation set, i.e., the `val_evaluation` split, use the file containing the ground truth validation data.
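This choice can be written as a small function that maps the evaluation split to the ground-truth file to pass as `VAL_LABELS`. It is a sketch: the name `val_labels_for` and its argument order (split, training ground truth, validation ground truth) are hypothetical.

```shell
# Hypothetical helper: print the ground-truth feather file to use as VAL_LABELS.
# val_detector is evaluated against the training ground truth;
# val_evaluation (the real validation set) against the validation ground truth.
# Usage: val_labels_for SPLIT TRAIN_GT VAL_GT
val_labels_for() {
  case "$1" in
    val_detector)   printf '%s\n' "$2" ;;
    val_evaluation) printf '%s\n' "$3" ;;
    *)
      echo "error: unknown split '$1' (expected val_detector or val_evaluation)" >&2
      return 1
      ;;
  esac
}
```

For example, `export VAL_LABELS="$(val_labels_for val_evaluation "$GT_TRAIN" "$GT_VAL")"`, where `GT_TRAIN` and `GT_VAL` are placeholder variables holding the two ground-truth paths.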

For example, if you are using this repository within the SeMoLi repository, the ground truth train and real validation set paths for the Waymo Open Dataset can be set with:

export TRAIN_LABELS=../SeMoLi/data_utils/Waymo_Converted_filtered/train_1_per_frame_remove_non_move_remove_far_filtered_version_city_w0.feather

export VAL_LABELS=../SeMoLi/data_utils/Waymo_Converted_filtered/val_1_per_frame_remove_non_move_remove_far_filtered_version_city_w0.feather

For the AV2 dataset, the paths can be set to:

export TRAIN_LABELS=../SeMoLi/data_utils/AV2_filtered/train_1_per_frame_remove_non_move_remove_far_filtered_version_city_w0.feather

export VAL_LABELS=../SeMoLi/data_utils/AV2_filtered/val_1_per_frame_remove_non_move_remove_far_filtered_version_city_w0.feather
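To switch between the two datasets without retyping the paths, the exports above can be wrapped in a function. A minimal sketch: `set_semoli_labels` and the `SEMOLI_ROOT` override (defaulting to `../SeMoLi`) are hypothetical; the file names are exactly the ones listed above.

```shell
# Hypothetical helper: export the ground-truth label paths listed above for
# either dataset. SEMOLI_ROOT (hypothetical) defaults to ../SeMoLi.
set_semoli_labels() {
  local root="${SEMOLI_ROOT:-../SeMoLi}"
  local suffix="1_per_frame_remove_non_move_remove_far_filtered_version_city_w0.feather"
  local dir
  case "$1" in
    waymo) dir="$root/data_utils/Waymo_Converted_filtered" ;;
    av2)   dir="$root/data_utils/AV2_filtered" ;;
    *)
      echo "usage: set_semoli_labels waymo|av2" >&2
      return 1
      ;;
  esac
  export TRAIN_LABELS="$dir/train_$suffix"
  export VAL_LABELS="$dir/val_$suffix"
}
```

For example, `set_semoli_labels av2` exports the two AV2 paths shown above.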

The base command for training and evaluation on the Waymo Open Dataset is:

./tools/dist_train.sh configs/pointpillars/pointpillars_hv_secfpn_sbn-all_8xb4-2x_waymo-3d-class_agnostic.py <num_gpus> <percentage_train> <percentage_val> $TRAIN_LABELS $VAL_LABELS --eval --val_detection_set=val_evaluation --auto-scale-lr

where:

- `num_gpus` is the number of GPUs used for training.
- `percentage_train` is the percentage of training data to use according to the SeMoLi splits, i.e., it corresponds to x in the figure above. Hence, if x=0.1 and the split is `train_detector`, the fraction of data actually used is 1-0.1=0.9.
- `percentage_val` is 1.0 if you want to use either `val_detector` or the real validation set `val_evaluation`. If you want to use any part of `train_detector` or `train_gnn` for evaluation, set the percentage according to SeMoLi.
- `--eval`: if set, the model is only evaluated, not trained.
- `--val_detection_set` determines the detection split used for evaluation, i.e., `val_detector` or the real validation set `val_evaluation`.
- `--auto-scale-lr` adapts the learning rate to the actual batch size, starting from the base learning rate given in the config.
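The relation between `percentage_train` and the data actually used on `train_detector` is a single subtraction. The sketch below uses awk for the floating-point arithmetic; the name `train_detector_fraction` is hypothetical, and it covers only the `train_detector` case stated above.

```shell
# Hypothetical helper: fraction of the training data used when training on
# train_detector, given percentage_train = x (train_detector covers 1 - x).
train_detector_fraction() {
  awk -v x="$1" 'BEGIN { printf "%g\n", 1 - x }'
}
```

For example, `train_detector_fraction 0.1` prints `0.9`, matching the example in the list above.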

If you want to train on labeled and unlabeled data together, set the train label path to the pseudo-labels and set a second path for the labeled data:

export TRAIN_LABELS=<path_to_pseudo_labels>

export TRAIN_LABELS2=../SeMoLi/data_utils/AV2_filtered/train_1_per_frame_remove_non_move_remove_far_filtered_version_city_w0.feather

Then run the following:

./tools/dist_train.sh configs/pointpillars/pointpillars_hv_secfpn_sbn-all_8xb4-2x_waymo-3d-class_agnostic.py <num_gpus> <percentage_train> <percentage_val> $TRAIN_LABELS $VAL_LABELS --label_path2 $TRAIN_LABELS2 --val_detection_set=val_evaluation --auto-scale-lr

For the AV2 dataset, set the dataset paths as above and change the config file to configs/pointpillars/pointpillars_hv_secfpn_sbn-all_8xb4-2x_av2-3d-class_agnostic.py:

./tools/dist_train.sh configs/pointpillars/pointpillars_hv_secfpn_sbn-all_8xb4-2x_av2-3d-class_agnostic.py <num_gpus> <percentage_train> <percentage_val> $TRAIN_LABELS $VAL_LABELS --val_detection_set=val_evaluation --auto-scale-lr

For training in a class-specific setting with ground truth data, change the config file to pointpillars_hv_secfpn_sbn-all_8xb4-2x_waymo-3d-class_specific.py:

./tools/dist_train.sh configs/pointpillars/pointpillars_hv_secfpn_sbn-all_8xb4-2x_waymo-3d-class_specific.py <num_gpus> <percentage_train> <percentage_val> $TRAIN_LABELS $VAL_LABELS --val_detection_set=val_evaluation --auto-scale-lr
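The training commands in this README differ only in the dataset, the label setting, and the optional second label path, so they can be assembled by one helper. This is a sketch under assumptions: `semoli_train_cmd` is hypothetical, it only prints the command (a dry run) so it can be inspected first, and it accepts only the three config files named in this README.

```shell
# Hypothetical helper: print the dist_train.sh command for the configs listed
# in this README. Nothing is executed; inspect the output, then run it.
# Usage: semoli_train_cmd DATASET SETTING NUM_GPUS PCT_TRAIN PCT_VAL
semoli_train_cmd() {
  local cfg cmd
  case "$1/$2" in
    waymo/class_agnostic|av2/class_agnostic|waymo/class_specific) ;;
    *)
      echo "error: no config listed for $1/$2" >&2
      return 1
      ;;
  esac
  cfg="configs/pointpillars/pointpillars_hv_secfpn_sbn-all_8xb4-2x_$1-3d-$2.py"
  cmd="./tools/dist_train.sh $cfg $3 $4 $5 $TRAIN_LABELS $VAL_LABELS"
  # Mixed labeled/unlabeled training: add the second (labeled) path if set.
  if [ -n "${TRAIN_LABELS2:-}" ]; then
    cmd="$cmd --label_path2 $TRAIN_LABELS2"
  fi
  echo "$cmd --val_detection_set=val_evaluation --auto-scale-lr"
}
```

For example, `semoli_train_cmd av2 class_agnostic 8 0.1 1.0` prints the AV2 command above with those values filled in. The helper omits `--eval`, so append it to the printed command for evaluation-only runs.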