Zongzheng Zhang1,2* · Xinrun Li2* · Sizhe Zou1 · Guoxuan Chi1 · Siqi Li1

Xuchong Qiu2 · Guoliang Wang1 · Guantian Zheng1 · Leichen Wang2 · Hang Zhao3 and Hao Zhao1

1Institute for AI Industry Research (AIR), Tsinghua University · 2Bosch Corporate Research

3Institute for Interdisciplinary Information Sciences (IIIS), Tsinghua University

(* indicates equal contribution)

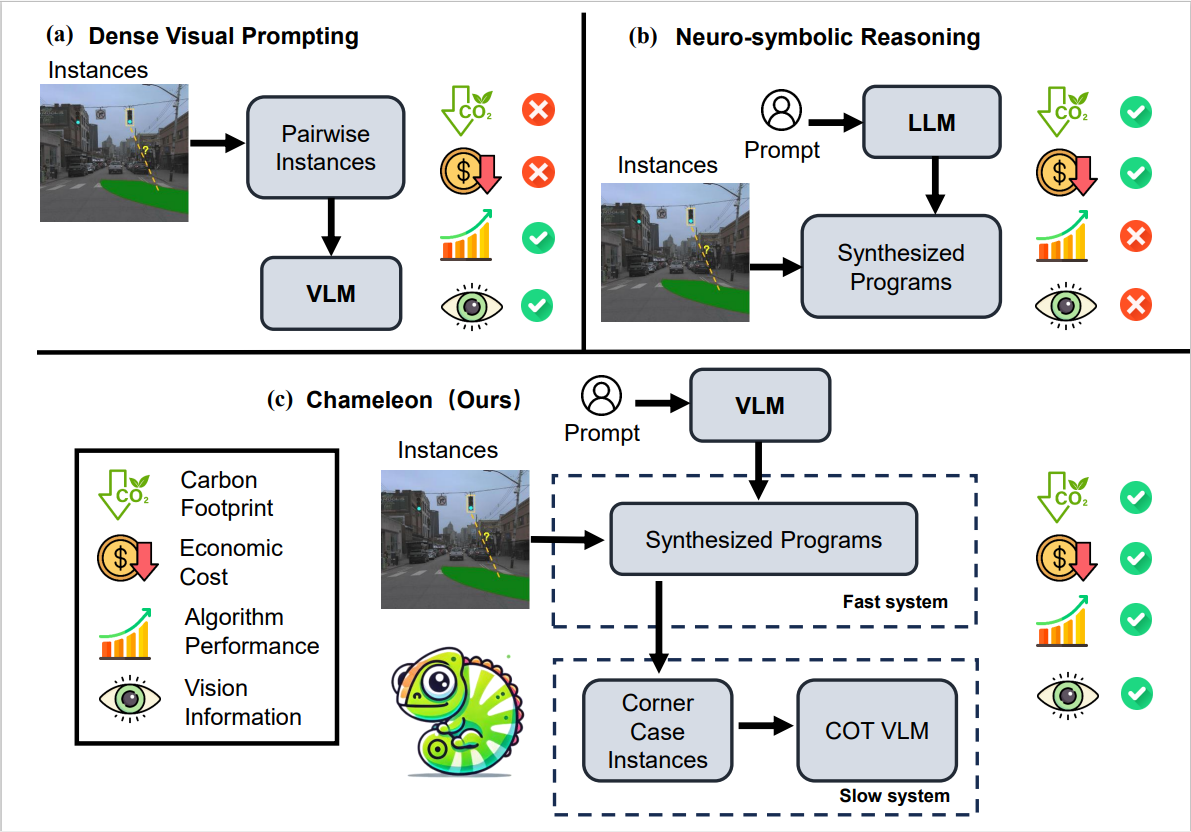

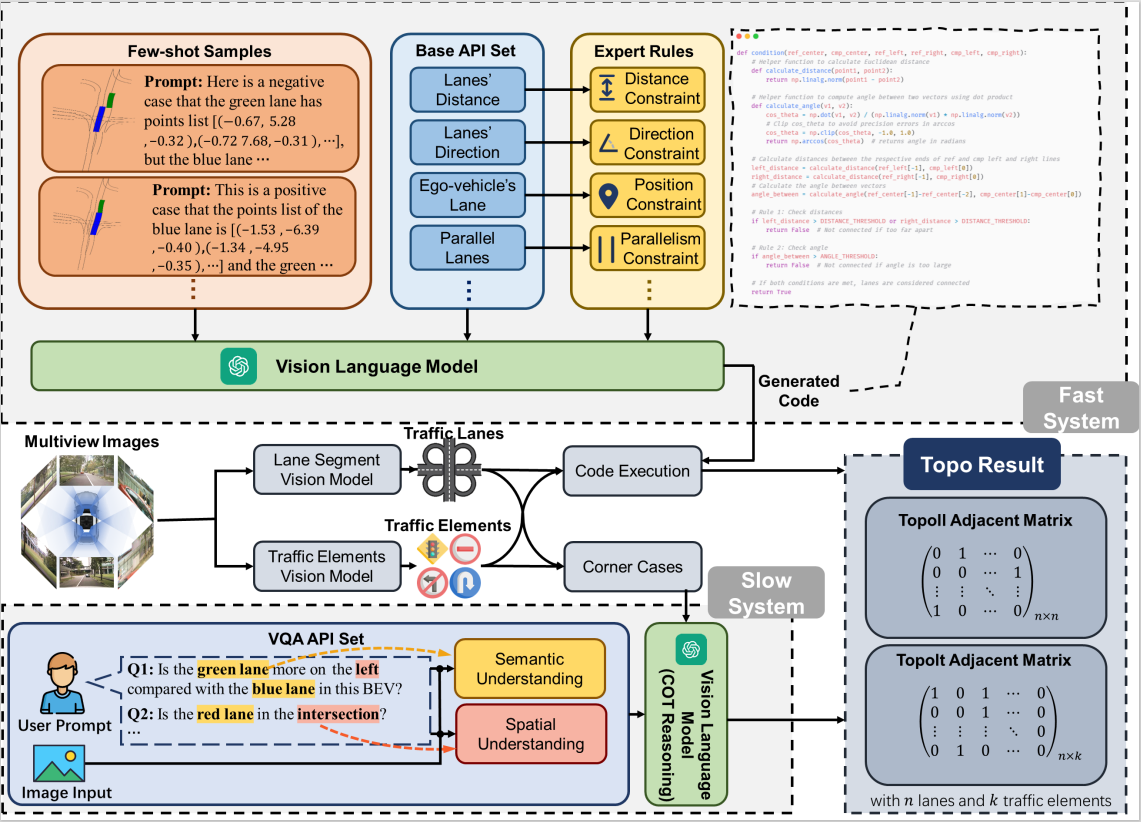

Lane topology extraction involves detecting lanes and traffic elements and determining their relationships, a key perception task for mapless autonomous driving. This task requires complex reasoning, such as determining whether it is possible to turn left into a specific lane. To address this challenge, we introduce neuro-symbolic methods powered by vision-language foundation models (VLMs).

We propose a fast-slow neuro-symbolic lane topology extraction algorithm, named Chameleon, which alternates between a fast system that directly reasons over detected instances using synthesized programs and a slow system that utilizes a VLM with a chain-of-thought design to handle corner cases. Chameleon leverages the strengths of both approaches, providing an affordable solution while maintaining high performance. We evaluate the method on the OpenLane-V2 dataset, showing consistent improvements across various baseline detectors.


Before generating data, you can download the lane segment and traffic element perception results of TopoMLP from Google Drive. Download the pickle file and save it in /dataset.

You can run the following command to convert the pickle file into timestamp-wise JSON files:

# Convert pkl to json

python tools/pkl2json.py --input $PKL_PATH --output $OUTPUT_PATH --verbose

# For example, you can try this

python tools/pkl2json.py --input ./dataset/results_base.pkl --output ./dataset/output_json --verbose

Then you can generate the corresponding visual prompt data for the different VQA sub-tasks. The data generation scripts are in /VQA/sub-task/data. For the intersection task, you should generate BEV images and PV images separately, and then combine them into mosaics. Here, we take the connection VQA task as an example:

# Generate BEV images for the connection VQA task

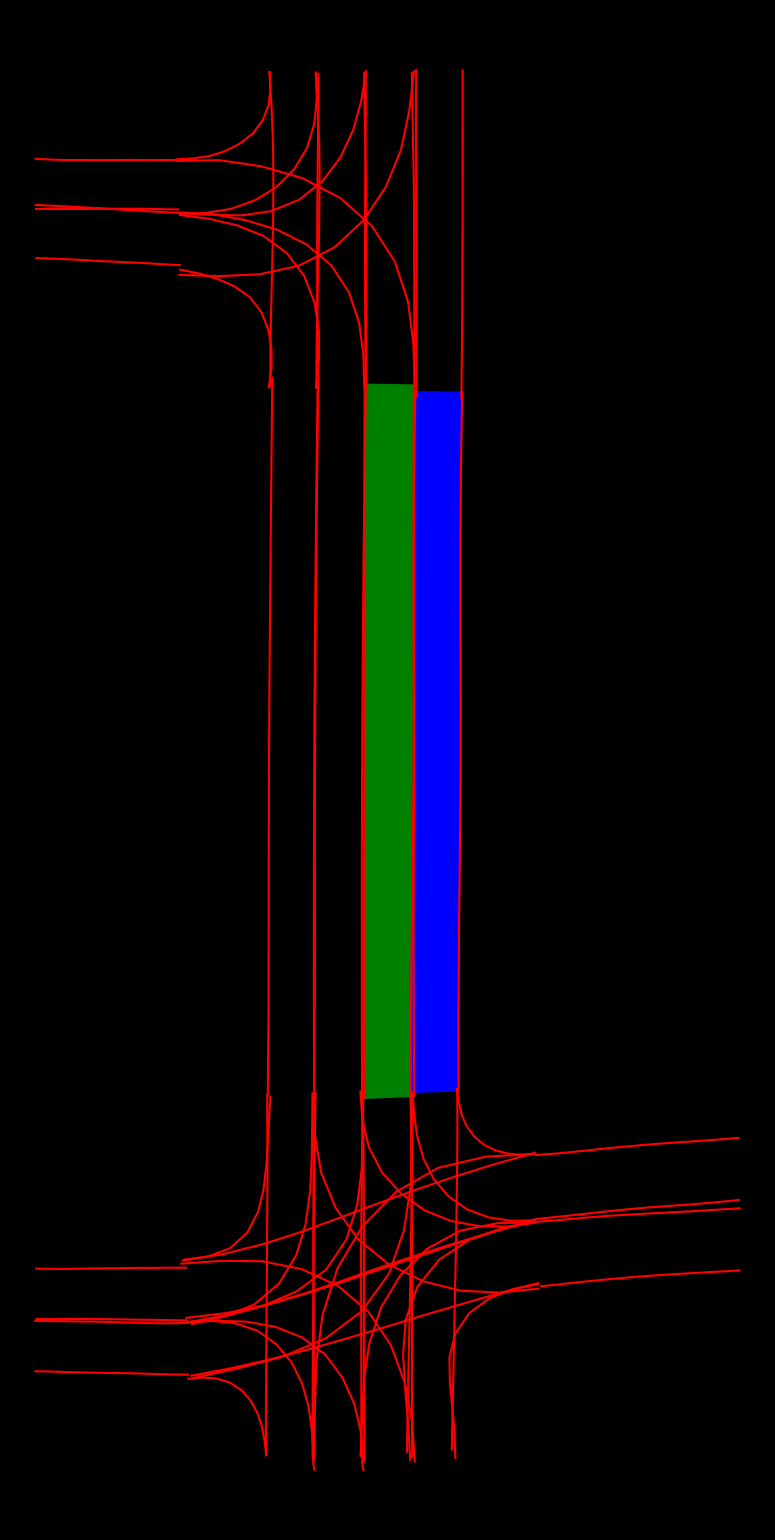

python ./VQA/connection/data/pairwise_conn_BEV.py --output $GENERATION_PATH --verboseThrough the command, you can obtain visual prompts like as follows:

In addition, you should write your text prompts for the VQA task and save them in a txt file. For instance:

system_context = '''

The red lines in the photos are lane boundaries. Two segments in different lanes don't have any connection relationship. Only two segments in the same lane end to end adjacent are considered as directly connected.

'''

prompt = '''

You are an expert in determining adjacent lane segments in the image. Let's determine if the green segment is directly connected with the blue segment. Please reply in a brief sentence starting with "Yes" or "No".

'''

You have now completed all preparations for a VQA task.
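To illustrate how a text prompt file and a visual prompt might be combined, here is a minimal sketch. It assumes the txt file follows exactly the `name = '''...'''` layout shown above and that the model is queried with a chat-style message list carrying an inline base64 image. The helper names `load_text_prompts` and `build_messages` are hypothetical and are not taken from the repository; the actual `conn_VQA.py` may work differently.

```python
import ast
import base64


def load_text_prompts(source: str) -> dict:
    """Parse `name = '''...'''` assignments from the prompt file's text.

    Hypothetical helper: assumes the file is laid out like the example above.
    """
    prompts = {}
    for node in ast.parse(source).body:
        # Keep only simple assignments of a string literal to a single name.
        if (isinstance(node, ast.Assign)
                and len(node.targets) == 1
                and isinstance(node.targets[0], ast.Name)
                and isinstance(node.value, ast.Constant)
                and isinstance(node.value.value, str)):
            prompts[node.targets[0].id] = node.value.value.strip()
    return prompts


def build_messages(prompts: dict, image_bytes: bytes) -> list:
    """Assemble a chat-style message list with one inline PNG image.

    Assumption: the visual prompt is a PNG and the target API accepts
    images as base64 data URLs inside the user message.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {"role": "system", "content": prompts["system_context"]},
        {"role": "user", "content": [
            {"type": "text", "text": prompts["prompt"]},
            {"type": "image_url",
             "image_url": {"url": f"data:image/png;base64,{encoded}"}},
        ]},
    ]
```

In this sketch, the file contents would be read with `open(path).read()` and the image with `open(image_path, "rb").read()` before calling the two helpers.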

You can run the VQA task with the following command. Here we use the connection VQA task as an example.

# Run the connection VQA task

python ./VQA/connection/conn_VQA.py --txt $TEXT_PROMPT_PATH --visual $VISUAL_PROMPT_PATH --output $OUTPUT_RESULT_PATH --key $OPENAI_API_KEY --verbose

For evaluation, you can run the following command:

# Evaluate your prediction

python ./VQA/connection/evaluate_conn.py --path $RESULT_PATH
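
Since the text prompt asks the model to reply with a sentence starting with "Yes" or "No", scoring such replies could look roughly like the sketch below. This is an illustration only: the function names `parse_reply` and `score` are hypothetical, the metrics actually reported by `evaluate_conn.py` are not described here, and counting unparsable replies as wrong is an assumption.

```python
import re


def parse_reply(reply: str):
    """Map a reply starting with "Yes"/"No" to True/False, else None."""
    match = re.match(r"\s*(yes|no)\b", reply, flags=re.IGNORECASE)
    if match is None:
        return None
    return match.group(1).lower() == "yes"


def score(replies, labels):
    """Compute accuracy, precision and recall for connection predictions.

    Assumption: replies that start with neither "Yes" nor "No" count as wrong.
    """
    preds = [parse_reply(r) for r in replies]
    pairs = list(zip(preds, labels))
    tp = sum(p is True and y for p, y in pairs)
    fp = sum(p is True and not y for p, y in pairs)
    fn = sum(p is not True and y for p, y in pairs)
    correct = sum(p is not None and p == y for p, y in pairs)
    return {
        "accuracy": correct / len(pairs) if pairs else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }
```

Here `labels` is a list of booleans giving the ground-truth connection for each queried segment pair, in the same order as `replies`.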